When should Seti jettison the weak?


| Author | Message |

|---|---|

|

PhonAcq Joined: 14 Apr 01 Posts: 1656 Credit: 30,658,217 RAC: 1

|

(Snazzy title selected to catch your attention and to incite your emotions.) * There is some amount of fixed overhead at Berkeley for each host in Seti. I won't speculate how much, but in aggregate over the thousands of hosts it represents some amount (a lot?) of work and resource consumption. * The oldest, least productive computer hosts are contributing an increasingly inconsequential amount of work as time marches on. They are probably valued more for nostalgia and idealism than for their bone-dry value to the project. Whatever, the ancient machines are consuming their overhead's worth of resources and contributing very little (in absolute terms) in return. * There is a limited amount of resources to go around. So I conclude that at some point, it would benefit Seti to stop giving work to the least productive computers. Have we passed that point with the 200K or so hosts? If not, what number of P-II's and P-III's and so forth should the project support? One answer to this is 'natural attrition', where the computers die, clients become apathetic, and the science application morphs into something the oldest machines can't support. That's fine, but I view that as a separate evolution mechanism. |

KW2E Joined: 18 May 99 Posts: 346 Credit: 104,396,190 RAC: 34

|

That's kind-of like the Salvation Army telling people not to put any loose change into the bucket because it costs too much to count the change. Only bills please. I understand what you are saying, and I agree to a certain extent. But I think the evolution of the app will kill off the old machines in due time. Won't be too long before they double the work unit data size again. IMO BTW, I'd love to see a 200k RAC machine. Rob

|

S@NL - Eesger - www.knoop.nl Joined: 7 Oct 01 Posts: 385 Credit: 50,200,038 RAC: 0

|

..BTW, I'd love to see a 200k RAC machine. That way you'd only need to maintain 1.65 machines, you mean? ;) The SETI@Home Gauntlet 2012, April 16 - 30 |

KW2E Joined: 18 May 99 Posts: 346 Credit: 104,396,190 RAC: 34

|

Wouldn't that be nice!

|

James Sotherden Joined: 16 May 99 Posts: 10436 Credit: 110,373,059 RAC: 54

|

Me, I think attrition will get rid of the slow computers. I have an old P4 that takes 6.5 hours to crunch a WU. My Mac will do 2 in 2 hours. That's with stock apps. Sure, I could do the OPT apps, but I don't have the skill to install them yet. So when the IRS says yes to me, I will buy a quad i7 to replace the old P4. Old James |

|

gomeyer Joined: 21 May 99 Posts: 488 Credit: 50,370,425 RAC: 0

|

Well, I don’t see a snowball’s chance of them “jettisoning the weak” and I’m not so sure it’s really a good idea in any event. Where would you draw the line? Who decides? Only my opinion of course. I would be satisfied if they just began refusing new work to 4.xx clients that give low or zero credits/wu. I can't imagine how unhappy I would be if I had a workstation that takes 3 or 4 days to finish an AP WU and got zero credit for a perfectly good result just because my wingman is a cave dweller. Now that’s just plain unfair. |

|

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

|

(Snazzy title selected to catch your attention and to incite your emotions.) My 200 MHz Pentium MMX system has an average turnaround time of 4.54 days. I've seen many much faster systems with longer turnaround than that, so they're causing more resource usage for workunit storage on the download server. Each system does have a host record in the database, but even hosts which are no longer active have that. What other kind of resources did you have in mind? About 14 months ago the estimates and deadlines were adjusted such that a host needs a floating point benchmark of around 40 million ops/sec to get work. Perhaps Moore's Law suggests that should be about doubled every two years. But my opinion is that slow hardware which is still producing valid work is not really more of a burden on the system than new hardware. If anything I'd suggest taking steps to eliminate hosts which don't produce at least 90% valid results, and that low a limit only because server-side problems can cause invalidations. Perhaps some method of dropping hosts and/or users who haven't contributed any results in the last x years would also be a sensible BOINC modification (x being selectable by each project). Joe |
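To put rough numbers on the Moore's Law suggestion in the post above, here is a minimal sketch. It assumes the floor of about 40 million floating point ops/sec is the baseline and that it doubles every two years; the function name and parameters are hypothetical and are not part of BOINC or the SETI@home scheduler.

```python
def min_benchmark_mops(years_since_baseline,
                       baseline_mops=40.0,
                       doubling_period_years=2.0):
    """Hypothetical minimum floating point benchmark (million ops/sec).

    Assumes the floor starts at `baseline_mops` and doubles once every
    `doubling_period_years`, as the post suggests Moore's Law might imply.
    """
    return baseline_mops * 2 ** (years_since_baseline / doubling_period_years)


if __name__ == "__main__":
    # Print the assumed floor for the baseline year and every two years after.
    for years in (0, 2, 4, 6):
        print(years, "years:", min_benchmark_mops(years), "million ops/sec")
```

Under these assumptions a host that just clears the floor today would need twice the benchmark two years later to keep getting work.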

Bill Walker Joined: 4 Sep 99 Posts: 3868 Credit: 2,697,267 RAC: 0

|

snip snip Your lack of concern for nostalgia tells me you are relatively young. Your time will come. Your lack of concern for idealism causes me grave concern for the future of the species. To make you a little happier, how about if SETI and BOINC charge the old machines twice what they charge you? Oh what the heck, three times. But seriously, if Matt tells us they are lacking in total computing power right now, dropping the low productivity machines just means the big crunchers still don't have enough CPU for what SETI wants. Maybe you should offer to pay us little guys to upgrade our CPUs?

|

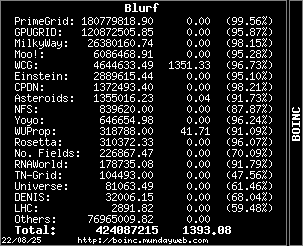

Blurf Joined: 2 Sep 06 Posts: 8962 Credit: 12,678,685 RAC: 0

|

* The oldest, least productive computer hosts are contributing an increasingly inconsequential amount of work as time marches on. They are probably valued more for nostalgia and idealism than for their bone-dry value to the project. Whatever, the ancient machines are consuming their overhead's worth of resources and contributing very little (in absolute terms) in return. I find this kind of insulting towards people with smaller farms or older systems. As long as any computer is contributing anything, I find it extremely difficult to call what they offer "bone-dry value". |

Misfit Joined: 21 Jun 01 Posts: 21804 Credit: 2,815,091 RAC: 0

|

(Snazzy title selected to catch your attention and to incite your emotions.) I thought you had misspelled week. SETI on vacation. me@rescam.org |

zoom3+1=4 Joined: 30 Nov 03 Posts: 66001 Credit: 55,293,173 RAC: 49

|

* The oldest, least productive computer hosts are contributing an increasingly inconsequential amount of work as time marches on. They are probably valued more for nostalgia and idealism than for their bone-dry value to the project. Whatever, the ancient machines are consuming their overhead's worth of resources and contributing very little (in absolute terms) in return. Blurf, you read my mind. The project needs any capable computer it can get. Besides, they do the work, just really slowly. The T1 Trust, PRR T1 Class 4-4-4-4 #5550, 1 of America's First HST's

|

|

HFB1217 Joined: 25 Dec 05 Posts: 102 Credit: 9,424,572 RAC: 0

|

Hell, that is a poor way to treat members who cannot afford to upgrade or do not need to upgrade because what they have meets their needs. It is also a bit elitist; after all, this is a project for all who want to participate. Who knows, one of them just might find ET!! Come and Visit Us at BBR TeamStarFire ****My 9th year of Seti****A Founding Member of the Original Seti Team Starfire at Broadband Reports.com **** |

|

PhonAcq Joined: 14 Apr 01 Posts: 1656 Credit: 30,658,217 RAC: 1

|

I find it curious that no one thus far has based their response on objectively analyzing the argument I tried to outline. Let me ask again a different way. If one-half of the 200K active participants ran P-II class machines and produced a result every week, I wondered if that was helpful. There is overhead involved with keeping up the various databases, with keeping the long residence time wu's active on the servers that limit available storage for additional wu's, with backing up and resurrecting this information during server failures, and so forth. Time and money spent doing this for the weakest hosts may be better spent on other tasks. But if 100K P-II's were tolerable, then would 300K or 1000K be the breaking point? Obviously that depends on available resources. But I hope we can all agree there is an upper bound. So my challenge to readers is to estimate (objectively) what this limit is. I don't have access to the data needed to analyze the problem. For example, just how many 'slow' cpu's are out there and what percentage of the daily production do they represent? Or, what could be saved if the bottom X% of hosts were jettisoned? Surely, this type of data is buried somewhere. I would hope someone reading this thread would be able to post some of it, or their estimates of it. And yes, I have a dual P-II 333MHz that keeps chugging and heating my house. It won't fail (as I had hoped a year or so ago so that I could turn it off for good), so I'm looking down this line of reasoning for another objective reason to turn it off. |
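The question above about what percentage of daily production the bottom X% of hosts represent could be answered from a list of per-host RAC values, if someone had one. The following is only a sketch of that calculation: the function name is hypothetical, and the sample numbers are made up for illustration and are not real SETI@home data.

```python
def bottom_share(racs, bottom_fraction):
    """Fraction of total RAC contributed by the slowest hosts.

    `racs` is a list of per-host recent average credit values and
    `bottom_fraction` is the share of hosts to consider (0.0 to 1.0).
    """
    if not 0.0 <= bottom_fraction <= 1.0:
        raise ValueError("bottom_fraction must be between 0 and 1")
    ordered = sorted(racs)                      # slowest hosts first
    n_bottom = int(len(ordered) * bottom_fraction)
    total = sum(ordered)
    if total == 0:
        return 0.0
    return sum(ordered[:n_bottom]) / total


if __name__ == "__main__":
    # Made-up RAC values for ten imaginary hosts (NOT project data).
    sample = [1, 1, 2, 2, 4, 10, 30, 50, 100, 200]
    print(bottom_share(sample, 0.5))  # share produced by the slowest half
```

With real data from a stats export, the same function would show how little or how much output would be lost for any chosen cutoff; it says nothing about the server-side cost saved, which is the other half of the question.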

|

-ShEm- Joined: 25 Feb 00 Posts: 139 Credit: 4,129,448 RAC: 0 |

...I don't have access to the data needed to analyze the problem. For example, just how many 'slow' cpu's are out there and what percentage of the daily production do they represent? Or, what could be saved if the bottom X% of hosts were jettisoned? Surely, this type of data is buried somewhere. I would hope someone reading this thread would be able to post some of it, or their estimates of it... Maybe boincstats's S@h processor-stats (sorted by average credit) can help? |

Bill Walker Joined: 4 Sep 99 Posts: 3868 Credit: 2,697,267 RAC: 0

|

...I don't have access to the data needed to analyze the problem. For example, just how many 'slow' cpu's are out there and what percentage of the daily production do they represent? Or, what could be saved if the bottom X% of hosts were jettisoned? Surely, this type of data is buried somewhere. I would hope someone reading this thread would be able to post some of it, or their estimates of it... The fact remains that Matt tells us the choke point right now is CPU power, not anything on the server side. This is data accessible to anybody who can read and reason. Jettisoning ANY CPU power will not change this. That is a rational, logical argument that can be added to the previous posts about the original question's lack of humanity. Sorry, I know it is just a troll, but us old guys get worked up easily.

|

elbea64 Joined: 16 Aug 99 Posts: 114 Credit: 6,352,198 RAC: 0

|

Objectively there is no limit, because for every limit one can think of there's a workaround, so there is nothing curious about non-objective answers. Subjectively, meaning from a particular point of view, we need to know the point of view you want to discuss.

|

Bob Mahoney Design Joined: 4 Apr 04 Posts: 178 Credit: 9,205,632 RAC: 0

|

And yes, I have a dual P-II 333MHz that keeps chugging and heating my house. It won't fail (as I had hoped a year or so ago so that I could turn it off for good), so I'm looking down this line of reasoning for another objective reason to turn it off. The "incite" portion of your plan worked! This sounds like the tough decision I had to make a few years ago when I finally decided to limit my online system's support of 110baud and 300baud modems. People still used them, but maintaining compatibility with them was stifling to the overall system progress. In the big SETI@home picture, I think that is happening here, maybe more than a little. Like, the micro-size of the MB WU vs. the mega-size of the AP WU.... well, one is too stuck in the past, and the big one needs a fast, modern computer. This makes the science side of SETI a bit schizy in appearance. It also makes the project less competitive with all the other projects competing for volunteer distributed computer time. That is, users of old computers hate AP, and users of hot-shot new stuff get tangled in a mess of micro-sized MB workunits that clog up the servers, so nobody wins. I'm sorry to harp on saving energy, but doesn't anyone worry about the extremely inefficient use of electricity with the very old systems? I've wondered if progressive thinking at Berkeley is in denial on this topic? Hey, don't get too angry at my opinions..... I still worry about how much of an energy usage pulse the U.S. experiences when a national talk-radio host pounds on his desk during a broadcast - each of those bass pulses causes a lot of speaker movement, and multiply that by 5,000,000 listeners with two speakers each..... I just solved the energy crisis..... cut off the weak computers on SETI, and put pillows under the arms of Rush Limbaugh and Howard Stern! Energy Czar, you say? Aw shucks. Bob Opinion stated as fact? Who, me? |

|

Andy Williams Joined: 11 May 01 Posts: 187 Credit: 112,464,820 RAC: 0

|

doesn't anyone worry about the extremely inefficient use of electricity with the very old systems? That is the best argument for retiring old systems. FLOPS per watt. You can do two things with a newer system. Generate a lot more results for the same amount of electricity ($) or generate the same number of results with a lot less electricity ($). In the latter case, the new system will eventually pay for itself. Personally, I have retired all single core CPUs. -- Classic 82353 WU / 400979 h

|
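The claim above that a newer system "will eventually pay for itself" can be checked with simple arithmetic. This is a minimal sketch under stated assumptions: both machines run around the clock, the wattages are the average power each one draws to produce the same number of results, and the electricity price is constant. The function name and the example figures are hypothetical, not measurements from the thread.

```python
def payback_years(purchase_cost, old_watts, new_watts,
                  price_per_kwh, hours_per_day=24.0):
    """Years until electricity savings cover the cost of the new system.

    Assumes the new system produces the same results as the old one while
    drawing `new_watts` instead of `old_watts` on average.
    """
    saved_kw = (old_watts - new_watts) / 1000.0
    if saved_kw <= 0:
        return float("inf")  # no saving, so it never pays for itself
    yearly_saving = saved_kw * hours_per_day * 365 * price_per_kwh
    return purchase_cost / yearly_saving


if __name__ == "__main__":
    # Illustrative numbers only: a 876 dollar machine that saves 100 W
    # at 0.10 dollars per kWh, running 24 hours a day.
    print(payback_years(876.0, 200.0, 100.0, 0.10))
```

The payback time shrinks quickly with higher electricity prices or a larger gap in power draw, which is why the answer differs so much from one cruncher to the next.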

Bill Walker Joined: 4 Sep 99 Posts: 3868 Credit: 2,697,267 RAC: 0

|

doesn't anyone worry about the extremely inefficient use of electricity with the very old systems? A lot of this makes sense, but surely it is the user who decides this, not SETI admins. If, for example, an energy concerned user spends all their capital making the rest of the household very energy efficient (new light bulbs, insulation, appliances, solar panels, etc.), and then can't afford to upgrade the old PC, his total energy use per person could be much less than somebody running a shiny new quad processor in a big house, with the windows open while the a/c runs, and with all the incandescent lights left on. The old PC could be replaced later, when the cash flow permits. Meanwhile, they continue to search for ET with a clear conscience.

|

elbea64 Joined: 16 Aug 99 Posts: 114 Credit: 6,352,198 RAC: 0

|

doesn't anyone worry about the extremely inefficient use of electricity with the very old systems? BOINC is intended to use otherwise unused CPU cycles, which means that the old systems are running regardless of whether BOINC is running or not. So it's not BOINC that is inefficient in that case, it's something else, and therefore that is no reason not to run BOINC on such systems. So the question could be whether it is inefficient to buy/run systems only for BOINC, which is the same argument for old and new systems, I mean even more so for new systems. *just another point of view* |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.