The Server Issues / Outages Thread - Panic Mode On! (118)

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

> Total pendings are 556, so those two batches are ~8% of the total. If every host has a similar number, then almost a million workunits are stuck until their deadlines hopefully trigger another validation sequence. Put another way, that is 2 million of the tasks in the "Results returned and awaiting validation" entry on the Server status page.

The server problems just before christmas produced a lot of those missed validations. (What's wrong with my spelling of christmas? My browser highlights it as misspelled.)

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

Looks like the noise bomb blc35 files are not being split any more. Either they got completed or they have been removed. I'm micromanaging my client to make it process the remaining noise bombs first.

W-K 666 Joined: 18 May 99 Posts: 19725 Credit: 40,757,560 RAC: 67

> (what's wrong with my spelling of christmas? My browser highlights it as misspelled)

Christmas

TBar Joined: 22 May 99 Posts: 5204 Credit: 840,779,836 RAC: 2,768

I think that problem is caused when one task is completed just before a maintenance and the other is completed just after it. The validator can't handle the outrage.

> Total pendings are 556, so those two batches are ~8% of the total. If every host has a similar number, then almost a million workunits are stuck until their deadlines hopefully trigger another validation sequence. Put another way, that is 2 million of the tasks in the "Results returned and awaiting validation" entry on the Server status page. The server problems just before christmas produced a lot of those missed validations.

Try a capital C.

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

> Christmas

Thanks. Christmas happens every year, so my native language doesn't consider it a proper noun identifying a specific, unique thing.

Grant (SSSF) Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

Yep, that's why it was at the end of my list. I'd prefer to stress-test one problem at a time. We've got... Then re-release some of the BLC35s in small batches... If we're going to stress test it, we might as well really stress test it.

Grant
Darwin NT

Grant (SSSF) Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

> Total pendings are 556, so those two batches are ~8% of the total. If every host has a similar number, then almost a million workunits are stuck until their deadlines hopefully trigger another validation sequence. Put another way, that is 2 million of the tasks in the "Results returned and awaiting validation" entry on the Server status page.

Add to that the fact that the Validators just aren't keeping up, hence their increasing backlog (although that has dropped slightly over the last few hours, it's by less than 2%, i.e. bugger all). Although my Linux system spends much of its day without any GPU work, and occasionally runs out of CPU work as well, its RAC is actually increasing from its post-outage low point, due to the huge backlog of work waiting to be validated slowly being processed.

Grant
Darwin NT

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

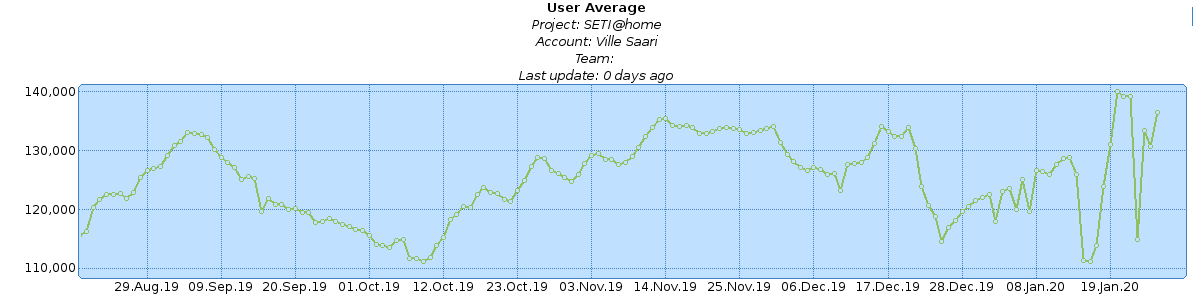

My RAC is now actually higher than it ever was before the Christmas outage, when everything was working normally. CreditScrew has given us quite variable credit per flop, and apparently this variation is now peaking. About a week ago, between the two very long outages, my RAC was even higher, and that was my new all-time high: a bit over 140000, when my long-term average with my current crunching hardware has been about 125000 and the previous all-time high had been about 135000. I have a program that calculates the predicted credit per day of my current work queue using the average credit per processing time I have recently got for each task type (Arecibo/BLC, BLC number, vlar or not, CPU/GPU, and host), and this prediction is now 173000! When things have been stable long enough for RAC to reflect the true credit per day, this prediction and my RAC have been pretty close together, with RAC usually a little lower. The difference reflects the processing power I have used for non-BOINC computer usage.
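The prediction program described above isn't shown, so here is only a minimal Python sketch of the same idea under stated assumptions. The `TaskType` key, the `(credit, runtime)` history records and the per-(host, device) `slots` concurrency count are all hypothetical; averages are plain means over recently validated tasks, and each host/device is assumed to work through its share of the queue at that share's average credit per second.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Optional, Tuple


class TaskType(NamedTuple):
    """Hypothetical task-type key mirroring the categories listed in the post."""
    source: str                # "arecibo" or "blc"
    blc_number: Optional[int]  # e.g. 35 for blc35, None for Arecibo
    vlar: bool
    device: str                # "cpu" or "gpu"
    host: str


def per_type_averages(
    history: Iterable[Tuple[TaskType, float, float]],
) -> Dict[TaskType, Tuple[float, float]]:
    """Mean (credit, runtime in seconds) per task type from recently validated tasks."""
    sums: Dict[TaskType, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0])
    for ttype, credit, runtime_s in history:
        s = sums[ttype]
        s[0] += credit
        s[1] += runtime_s
        s[2] += 1
    return {t: (c / n, r / n) for t, (c, r, n) in sums.items()}


def predicted_credit_per_day(
    queue: Iterable[TaskType],
    averages: Dict[TaskType, Tuple[float, float]],
    slots: Dict[Tuple[str, str], int],
) -> float:
    """Predicted credit/day of a work queue.

    Each (host, device) pair is treated as `slots` parallel workers (default 1,
    an assumption) processing its share of the queue at that share's average
    credit per second. Task types with no recent history are skipped.
    """
    credit: Dict[Tuple[str, str], float] = defaultdict(float)
    runtime: Dict[Tuple[str, str], float] = defaultdict(float)
    for t in queue:
        if t not in averages:
            continue
        avg_credit, avg_runtime = averages[t]
        key = (t.host, t.device)
        credit[key] += avg_credit
        runtime[key] += avg_runtime
    return sum(
        credit[k] * 86400 * slots.get(k, 1) / runtime[k]
        for k in credit
        if runtime[k] > 0
    )


if __name__ == "__main__":
    a = TaskType("blc", 35, False, "gpu", "fast")
    b = TaskType("arecibo", None, True, "gpu", "fast")
    avgs = per_type_averages([(a, 80.0, 600.0), (a, 100.0, 600.0), (b, 40.0, 1200.0)])
    print(predicted_credit_per_day([a, a, b], avgs, {("fast", "gpu"): 1}))  # 7920.0
```

With these made-up numbers, the queue `[a, a, b]` is worth 220 credits over 2400 s of GPU time, which one slot turns into 7920 credits/day. Weighting by the actual queue contents, rather than by recent history alone, is what lets a prediction like this run ahead of RAC when the mix of task types shifts.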

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

Looks like the situation is returning to normal. The noise bomb files are no longer being split, the splitters have now been running continuously for over three hours, and the sum of result counts on ssp has been slowly going down despite this. The queues of my hosts are filling up rapidly.

Wiggo Joined: 24 Jan 00 Posts: 38208 Credit: 261,360,520 RAC: 489

> Looks like the situation is returning to normal. The noise bomb files are no longer being split, the splitters have now been running continuously for over three hours, and the sum of result counts on ssp has been slowly going down despite this. The queues of my hosts are filling up rapidly.

I'm glad that you think that there are no more noise bombs being split, but I can tell you otherwise.

Cheers.

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

> Looks like the situation is returning to normal. The noise bomb files are no longer being split, the splitters have now been running continuously for over three hours, and the sum of result counts on ssp has been slowly going down despite this. The queues of my hosts are filling up rapidly.

Getting some WUs, but not enough to start refilling the cache.

Grant (SSSF) Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

> > Looks like the situation is returning to normal. The noise bomb files are no longer being split, the splitters have now been running continuously for over three hours, and the sum of result counts on ssp has been slowly going down despite this. The queues of my hosts are filling up rapidly.
>
> I'm glad that you think that there are no more noise bombs being split, but I can tell you otherwise.

Yep. Although the percentage of BLC35s that are noise bombs is a lot lower than over the last few weeks, there are still plenty of noise bombs there.

Grant
Darwin NT

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

> > Looks like the situation is returning to normal. The noise bomb files are no longer being split, the splitters have now been running continuously for over three hours, and the sum of result counts on ssp has been slowly going down despite this. The queues of my hosts are filling up rapidly.
>
> I'm glad that you think that there are no more noise bombs being split, but I can tell you otherwise.

I agree. Over 50% of the BLC35 tasks I receive are still noise bombs.

Seti@Home classic workunits: 20,676 CPU time: 74,226 hours
A proud member of the OFA (Old Farts Association)

Grant (SSSF) Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

> I agree. Over 50% of the BLC35 tasks I receive are still noise bombs.

Still way better than 95%+ :-)

Edit: although when you get BLC35 resends, it's back to 95%+ noise bombs.

Grant
Darwin NT

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

The blc35 files from the 27th of July before 8pm (MJD 58691, seconds < 70000) were about 95% noise bombs. I haven't observed any significant number of overflows from any blc35 later than that. The only blc35 tasks from the noise window I have received since those disappeared from the splitter list have been _2 ones (resends), and the constantly running splitters are diluting the stream of those resends. The current queue of my faster host has 0.6% of them. The slower host has 3% because its queue is longer, so the time window over which its contents were downloaded extends further into the past. Both queues are now about two-thirds Arecibo.
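The MJD day number and seconds quoted above can be turned into calendar time with the standard library. This is a sketch assuming the day number and the seconds-since-midnight-UTC value have already been extracted from the blc35 file name (the name format isn't shown here); `in_noise_window` is a hypothetical helper encoding the window exactly as stated in the post. It confirms that MJD 58691 is 27 July 2019 and that 70000 s is 19:26:40 UTC, a little before 8pm.

```python
from datetime import datetime, timedelta, timezone

# Modified Julian Date day 0 starts at midnight UTC on 17 November 1858.
MJD_EPOCH = datetime(1858, 11, 17, tzinfo=timezone.utc)


def mjd_to_utc(mjd_day: int, seconds: float) -> datetime:
    """Convert an MJD day number plus seconds since that day's midnight to UTC."""
    return MJD_EPOCH + timedelta(days=mjd_day, seconds=seconds)


def in_noise_window(mjd_day: int, seconds: float) -> bool:
    """Hypothetical filter: MJD 58691 (27 July 2019) with seconds < 70000."""
    return mjd_day == 58691 and seconds < 70000


if __name__ == "__main__":
    print(mjd_to_utc(58691, 70000))  # 2019-07-27 19:26:40+00:00
```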

Wiggo Joined: 24 Jan 00 Posts: 38208 Credit: 261,360,520 RAC: 489

I see that we've now hit the point again where the replica is falling behind. :-(

Cheers.

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

> I see that we've now hit the point again where the replica is falling behind. :-(

It can fall behind; there are only 36 hours to go until the Tuesday downtime.

Grant (SSSF) Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

21ja20af is providing plenty of noise bombs of its own to offset the reduction in BLC35 noise bombs.

Grant
Darwin NT

TBar Joined: 22 May 99 Posts: 5204 Credit: 840,779,836 RAC: 2,768

Stuck uploads... ALL my machines have many uploads waiting to retry.

Ville Saari Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

The replica db is catching up! It was 2993 s behind four ssp updates ago and has been slightly less behind with each update since. Now it's 2755 s.

©2025 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.