Performance tool for BoincTasks users


Joseph Stateson Joined: 27 May 99 Posts: 309 Credit: 70,759,933 RAC: 3

I put together a program that uses the history files produced by BoincTasks to do some performance analysis. It only works with BoincTasks. There is a description of the program here (Fred created a third-party forum for add-ins), and the sources and executables are on GitHub.

Joseph Stateson Joined: 27 May 99 Posts: 309 Credit: 70,759,933 RAC: 3

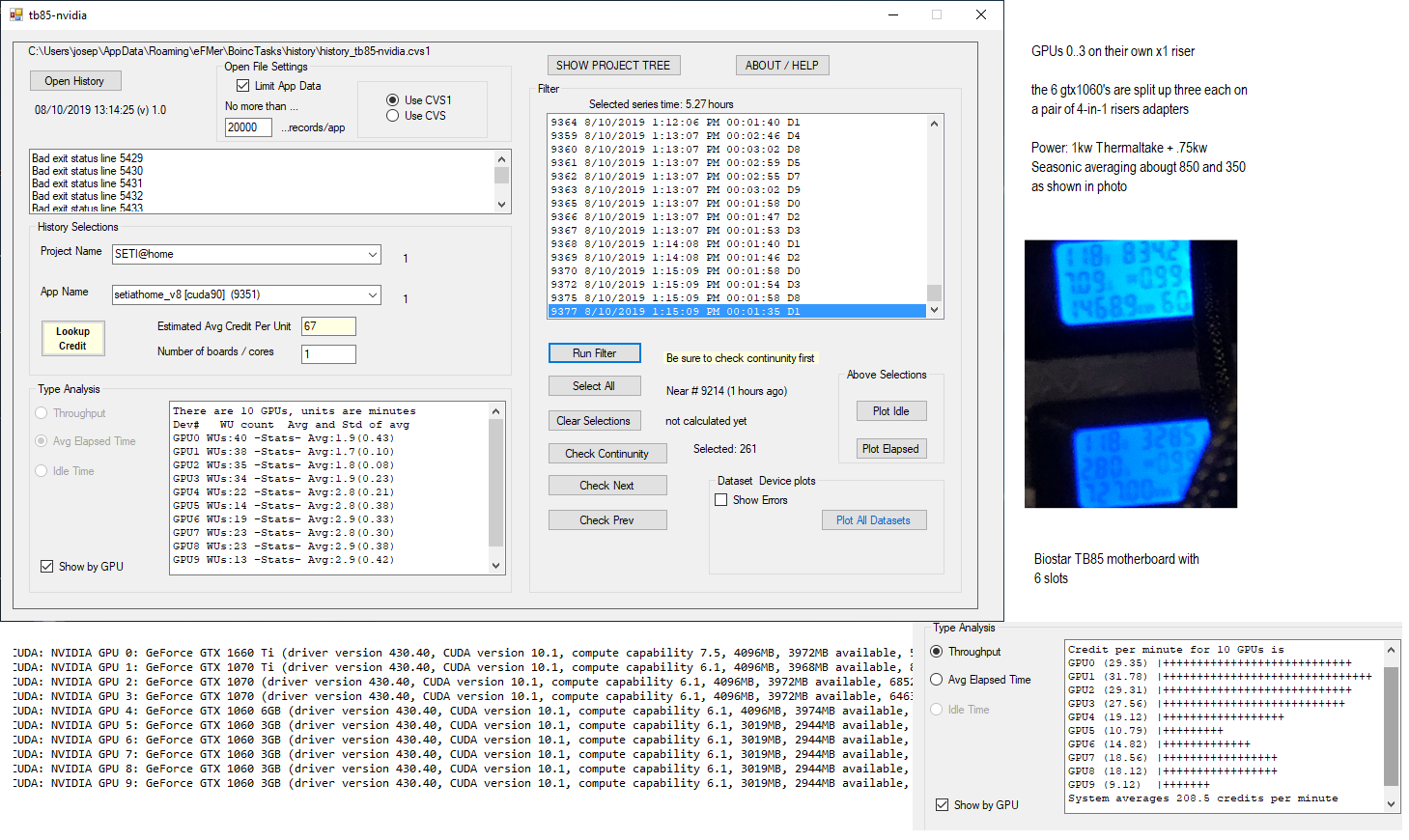

I added a feature to compare GPU boards. It's useful if you have a bunch of them and are using BoincTasks. As shown in the image, there are 10 assorted NVIDIA boards recognized by BOINC. The system TB85-nvidia is running the Linux app "cuda90" and shows 9351 work units completed successfully. The "Type Analysis" shows the GPU option; the last 5.27 hours were analyzed and organized by GPU#. Note that the GTX 1070 Ti had the best performance, with the GTX 1060s slower. The display is elapsed time in minutes. The source to build the app (Windows C#), links to the executables, and instructions for running the program are in the Boinctasks History Analyzer & Project performance post. Feel free to email or PM me with any questions, bugs, suggestions, etc. I assume you can also post there. [EDIT] Not everyone can run this app. I can do an analysis (depending on my free time) for anyone, but they would have to upload the history file, AND any analysis would be mine to use in any future study. PM me if interested. Typically the files are over a megabyte unzipped, and your ISP may have restrictions on binary attachments.
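A minimal sketch of the per-GPU comparison described above, assuming the history has already been parsed into (GPU index, elapsed seconds) records. The BoincTasks history file format is not described in this thread, so the record layout and the name `average_elapsed_minutes` are hypothetical, not the analyzer's actual code.

```python
from collections import defaultdict
from statistics import mean


def average_elapsed_minutes(records):
    """Return {gpu_index: mean elapsed time in minutes}, keyed in GPU order.

    `records` is an iterable of (gpu_index, elapsed_seconds) pairs. This
    layout is an assumption for illustration; it is not the BoincTasks
    history file format.
    """
    by_gpu = defaultdict(list)
    for gpu, elapsed_s in records:
        by_gpu[gpu].append(elapsed_s / 60.0)  # the display uses minutes
    return {gpu: mean(times) for gpu, times in sorted(by_gpu.items())}


if __name__ == "__main__":
    # Made-up sample values, not real results.
    sample = [(0, 300), (0, 330), (1, 420), (1, 450), (2, 360)]
    averages = average_elapsed_minutes(sample)
    # Rank boards fastest-first (lowest mean elapsed time).
    for gpu, minutes in sorted(averages.items(), key=lambda kv: kv[1]):
        print(f"GPU{gpu}: {minutes:.2f} min")
```

Ranking by mean elapsed time only makes sense when every board is fed a similar mix of work units over the analysis window.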

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

Interesting project, producing some great data for analysis. Your http://stateson.net/HostProjectStats/ calculator is great for a quick-and-dirty analysis of cruncher performance on the varied mix of work that might be in your validated task list.

Seti@Home classic workunits: 20,676 CPU time: 74,226 hours
A proud member of the OFA (Old Farts Association)

Joseph Stateson Joined: 27 May 99 Posts: 309 Credit: 70,759,933 RAC: 3

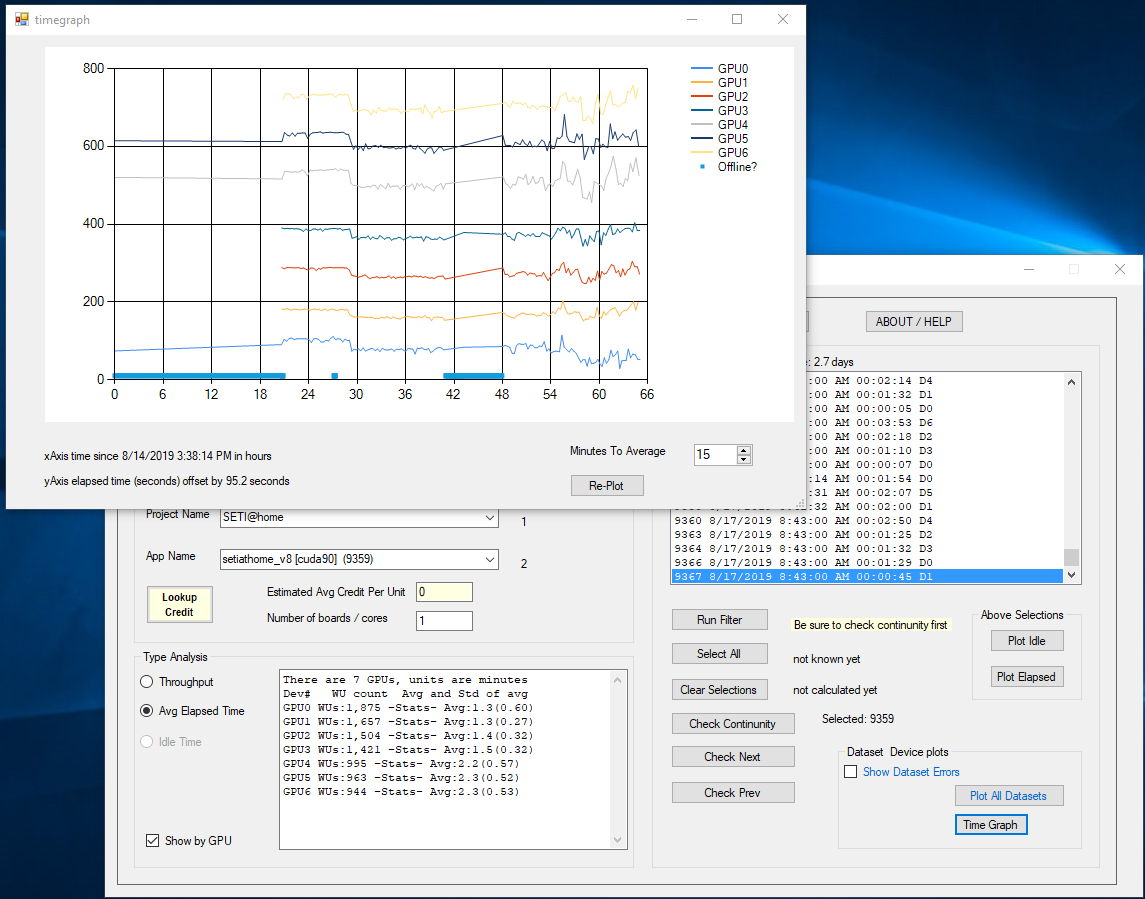

Seeing strange SETI stats: my system? Diverse work units? I added graphs of wall time vs. elapsed time of each SETI work unit for each GPU and saw some strange results. In the graph, I added GPU6 and powered the rig back up near the 20-hour mark. Just before the 30-hour mark, I rebooted after setting the fans to 100%, as I had failed to notice it was running too hot. I also set `-nobs` as a command-line parameter. There is a noticeable, immediate improvement in the elapsed time on each GPU (the drop). The ones that show the biggest drops are the GTX 1060s (GPUs 4, 5 and 6). I assume this decrease in WU elapsed time is due to the GPUs cooling off and the `-nobs` parameter, but it could be a coincidental change in the work units. Starting at 48 hours I entered the WOW event, and from that point on there seem to be a lot of diverse work units with wildly varying completion times. The value of 95.2 is the average completion time (elapsed) for all work units, and it was used to offset each GPU plot to make them visible. I wish it was possible to plot actual credit instead of elapsed time. Maybe if the project could download "expected credit" at the time of the upload, that value could be used (even if wrong) for a rough approximation of credit performance. Right now, if one sees a drop in elapsed time, it could be due to a performance change or simply a lot of work units that are "easy".
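The offsetting trick can be sketched as follows. This assumes each GPU's curve is shifted up by a multiple of the overall mean elapsed time (the 95.2 figure in the post) so the curves stack without overlapping; the post does not say exactly how the offsets were applied, and `offset_series` is a hypothetical name.

```python
from statistics import mean


def offset_series(series_by_gpu):
    """Stack per-GPU (wall_hours, elapsed) series for plotting.

    The i-th GPU in sorted order is shifted up by i * overall_mean, so
    curves do not overlap while each keeps its shape.
    Returns (overall_mean, {gpu: [(wall_hours, shifted_elapsed), ...]}).
    """
    all_elapsed = [e for pts in series_by_gpu.values() for _, e in pts]
    overall = mean(all_elapsed)
    shifted = {}
    for i, gpu in enumerate(sorted(series_by_gpu)):
        shifted[gpu] = [(t, e + i * overall) for t, e in series_by_gpu[gpu]]
    return overall, shifted
```

Only the vertical position of each curve changes, so a drop like the one near the 30-hour mark still shows up with the same size on every shifted curve.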

Grant (SSSF) Joined: 19 Aug 99 Posts: 13918 Credit: 208,696,464 RAC: 304

> I wish it was possible to plot actual credit instead of elapsed time. Maybe if the project could download "expected credit" at the time of the upload, that value could be used (even if wrong) for a rough approximation of credit performance.

Given that the amount of credit varies depending on your OS, your wingman's OS, your application, your wingman's application, the type of WU being processed, the hardware you use to process the WU, and the hardware your wingman used to process the WU, any such use of Credit would be of absolutely no value at all. And even with all of those things being equal, there is still a smoothing out of Credit to allow for the large variation in runtimes of some WUs that have very similar FLOPS estimates. The runtime of a WU is the only valid indicator of performance, but given that different WUs have different runtimes, the comparison can only be made between runs of a given WU.

> if the project could download "expected credit" at the time of the upload

If you're really keen, you can do that yourself using the definition of a Cobblestone. However, due to the way Credit New works, the actual Credit for a WU will be nothing even remotely close to the amount of Credit it should get according to the Cobblestone definition.

Highlights from the BOINC Wiki:

> A BOINC project gives you credit for the computations your computers perform for it. BOINC's unit of credit, the Cobblestone (named after Jeff Cobb of SETI@home), is 1/200 day of CPU time on a reference computer that does 1,000 MFLOPS based on the Whetstone benchmark.

All you need are the Estimated FLOPS for a given WU to determine what Credit it should receive. But since you are running anonymous platform, the Estimated FLOPS for your WUs isn't the true value, as it's been modified by the server when allocating you the WU in order for the estimated run time to be correct.

Grant
Darwin NT
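As a worked sketch of the Cobblestone definition quoted above: one credit is 1/200 day at 1,000 MFLOPS, i.e. 432 s × 10^9 FLOP/s = 4.32 × 10^11 floating-point operations, so a WU's credit under the definition is its operation count times 200 / 86,400e9. The function name is hypothetical, and, as Grant points out, Credit New does not actually grant this amount.

```python
# Cobblestone definition: 200 credits per 86,400 s of work at 1e9 FLOP/s,
# i.e. 200/86400e9 credits per floating-point operation.
COBBLESTONE_SCALE = 200 / 86400e9


def cobblestone_credit(wu_flops):
    """Credit a WU would earn under the plain Cobblestone definition.

    `wu_flops` is the WU's total floating-point operation count. Runtime
    does not enter the formula; this is not what Credit New grants.
    """
    return wu_flops * COBBLESTONE_SCALE


if __name__ == "__main__":
    reference_day = 86400 * 1e9  # operations the 1 GFLOPS reference host does in a day
    print(cobblestone_credit(reference_day))
    print(cobblestone_credit(500e9))  # a hypothetical 500 GFLOP WU
```

Note that the result depends only on the operation count, which is why an anonymous-platform host's server-adjusted FLOPS estimate would throw this calculation off.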

Joseph Stateson Joined: 27 May 99 Posts: 309 Credit: 70,759,933 RAC: 3

Thanks, I appreciate the time and effort for this reply. I think "Credit New" came out in 2011. I recall there were complaints about "double credit Tuesdays" and double-credit fundraisers, and maybe that was supposed to level the playing field. I think Bitcoin Utopia followed the Cobblestone formula exactly, and one could get 12 million credits in 65 seconds with the right equipment. That was in 2016, about 5 years into the "Credit New" method. I had the belief that each credit had an intrinsic value, but clearly when solving a science problem (for example) the algorithm might converge faster on certain computers, or never converge at all (timeout), due to a certain set of initial random numbers in a Monte Carlo method.

Let me read this again....

> All you need are the Estimated FLOPS for a given WU to determine what Credit it should receive, but since you are running anonymous platform, the Estimated FLOPS for your WUs isn't the true value as it's been modified by the server when allocating you the WU in order for the estimated run time to be correct

This sounds like the scheme back in 2010, where one had a "requested credit" and, after the project thought it over and compared it to the wingman's, one received a "granted" credit. If I understand what you wrote, then if I calculate the FLOPS correctly I should get "X". If my wingman does the same and gets "Y", then the lower of X and Y is the value that is granted. It seems to me that one could game this by spoofing the platform. However, that would only work if the wingman was also spoofing; otherwise the lower of the two credit values would be granted. I would still like to plot credit vs. wall time for each GPU using actual granted (or even requested) credit. I am curious whether the plot would be smooth. That would indicate an accurate assignment of credit to the work unit, would it not?

Last week I was looking at TBar's plot here, and that gave me the idea of graphing my own rig's performance, but I did not know how to get actual credits the way TBar, it seems, did.
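The granting rule described above (each host claims credit, and the lower of the two claims is granted) and why one-sided spoofing wouldn't pay off can be sketched like this. This models only that reading of the older claimed/granted scheme, not Credit New, and `granted_credit` is a hypothetical name.

```python
def granted_credit(claim_a, claim_b):
    """Two-host quorum under the described rule: grant the lower claim."""
    return min(claim_a, claim_b)


if __name__ == "__main__":
    honest, inflated = 100.0, 250.0  # made-up claims for illustration
    # Only one host spoofs: the honest wingman's lower claim wins.
    print(granted_credit(inflated, honest))
    # Both hosts spoof: the inflated value gets through.
    print(granted_credit(inflated, inflated))
```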

Ian&Steve C. Joined: 28 Sep 99 Posts: 4267 Credit: 1,282,604,591 RAC: 6,640

I don't think TBar made that plot. From the posts, it sounds like he just fixed the [img] BBCode that Spartana didn't get right. I think Spartana made that plot. But Spartana was analyzing completed, validated WUs, not in-progress WUs, so the runtime and credit awarded were already known. Just look at your own or any other public host's valid tasks on the website; you'll see everything about them. I'm assuming Spartana wrote some kind of script to scrub through the validated tasks list on the website. Credit divided by runtime gives a credit/second metric.

Seti@Home classic workunits: 29,492 CPU time: 134,419 hours
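The credit/second metric can be aggregated per device once validated-task rows have been scraped. The row layout (device, run time in seconds, granted credit) and the function name are assumptions for illustration, not the website's format.

```python
from collections import defaultdict


def credit_per_second(tasks):
    """Aggregate credit/runtime per device.

    `tasks` is an iterable of (device, run_time_s, credit) tuples.
    Uses total credit / total runtime, which weights long tasks more
    heavily than averaging each task's own ratio would.
    """
    totals = defaultdict(lambda: [0.0, 0.0])  # device -> [credit, seconds]
    for device, run_time_s, credit in tasks:
        if run_time_s <= 0:
            continue  # skip rows with a missing or zero runtime
        totals[device][0] += credit
        totals[device][1] += run_time_s
    return {dev: c / s for dev, (c, s) in totals.items()}
```

Because validated tasks already carry their granted credit, this avoids the "expected credit" problem raised earlier; it still mixes easy and hard WUs unless the sample is large.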

Grant (SSSF) Joined: 19 Aug 99 Posts: 13918 Credit: 208,696,464 RAC: 304

> I think Bitcoin Utopia followed the Cobblestone formula exactly, and one could get 12 million credits in 65 seconds with the right equipment. That was in 2016, about 5 years into the "Credit New" method.

If they did actually adhere to the Cobblestone definition, then that would have been a valid amount of Credit. (I suspect that they didn't; an inflated estimate of FLOPS, with a good application/hardware processing the actual number of FLOPS required, would result in that amount of Credit for such a short period of processing.)

> I had the belief that each credit had an intrinsic value, but clearly when solving a science problem (for example) the algorithm might converge faster on certain computers, or never converge at all (timeout), due to a certain set of initial random numbers in a Monte Carlo method.

According to the definition of the Cobblestone, it does. However, due to the design of Credit New, that is no longer true. The thing to keep in mind is how a Cobblestone was defined. A 1 GigaFLOPS machine, running full time, produces 200 units of credit in 1 day (hence cobblestone_scale being 200/86400e9, i.e. 200 credits over a 60*60*24 second period). That theoretical 1 GFLOPS processor wouldn't have any MMX, SSSE or AVX instructions. The application it runs wouldn't have any algorithms to reduce the number of computations required. If a WU required 500 GFLOPs to process, then the reference processor would have to do all 500 GFLOPs of them. With better hardware (i.e. MMX, SSSE, AVX instructions) or better software (algorithms that mean not every single FLOP needs to be processed), as long as the final result is correct (i.e. the same final result as processing every individual FLOP), it doesn't matter how long it takes to process.

If the reference system takes 5 days to process the WU, and the super cruncher can do it in 5 seconds, or your mobile phone takes a week, the Credit granted should be the same: they have all processed the same WU and produced a valid result, so according to the definition of a Cobblestone the Credit should be the same. Unfortunately, the reality is a long, long way from that.

> Let me read this again....

Part of Credit New's design was to combat that sort of cheating, the fudging of the FLOPS estimates. In this case, due to the design of the system, Seti needs to fudge the estimated FLOPS in order for WU processing times (and also cache settings, etc.) to match reality. What matters is the real estimated FLOPS value for a given WU (and I think that is the value actually used here at Seti when granting Credit for anonymous platform hosts; the real value is kept on the server, as it is the one that hosts running stock get sent with their WUs).

> I would still like to plot credit vs. wall time for each GPU using actual granted (or even requested) credit. I am curious whether the plot would be smooth. That would indicate an accurate assignment of credit to the work unit, would it not?

It would be interesting to see the result of that, particularly the comparison between the Credit that is granted and what should be granted according to the actual definition of a Cobblestone for that particular WU. There is some scaling (as I mentioned in my earlier post) to account for certain WUs that have very different runtimes although their actual FLOPS numbers are very close, to smooth out the Credit given over the range of WUs from the fastest to the slowest to process (I think this dates back to the very original Credit system). Here are the gory details of Credit New. If you're a drinking person, you might want to have one (or ten) with you as you go through it, as it will give you a headache.

Basically, the original Credit system was extremely flawed; granted credit was all over the place (even more than it is now). And that was just for CPU-processed WUs:

`C = H.whetstone * J.cpu_time`

where `H.whetstone` is the host's Whetstone benchmark value and `J.cpu_time` is the CPU processing time for the WU.

The second system (considered the best by most) still had issues, but nothing like Credit New. Credit was granted according to the number of FLOPS actually processed, so the application, host, hardware, OS, etc. that processed the WU had no impact on the amount of Credit given (so it was more in accordance with the original definition of a Cobblestone).

Then there was

Credit is no longer granted according to the definition of the Cobblestone, but is varied depending on the perceived efficiency of the application/hardware being used, and other factors.

Grant
Darwin NT
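To make the contrast between the first two systems concrete, here is a sketch assuming Grant's summary formula C = H.whetstone * J.cpu_time, with the Whetstone result in FLOP/s and the product converted to credits with the cobblestone_scale of 200/86400e9 mentioned above. Real BOINC code may have differed in details, and both function names are hypothetical.

```python
# cobblestone_scale from the thread: 200 credits per 86,400 s at 1e9 FLOP/s.
COBBLESTONE_SCALE = 200 / 86400e9


def benchmark_credit(whetstone_flops_per_s, cpu_time_s):
    """First system, per the summary C = H.whetstone * J.cpu_time.

    Benchmark speed times CPU time, scaled to credits; a faster
    application on the same host therefore claims *less* credit.
    """
    return whetstone_flops_per_s * cpu_time_s * COBBLESTONE_SCALE


def flop_count_credit(wu_flops):
    """Second system: credit follows the FLOPs counted for the WU itself."""
    return wu_flops * COBBLESTONE_SCALE


if __name__ == "__main__":
    wu_flops = 2e13        # made-up operation count for one WU
    whetstone = 2e9        # made-up benchmark result, FLOP/s
    stock_s, optimized_s = 10_000, 5_000  # optimized app takes half the time
    print(benchmark_credit(whetstone, stock_s), benchmark_credit(whetstone, optimized_s))
    print(flop_count_credit(wu_flops), flop_count_credit(wu_flops))
```

Under the benchmark formula, halving the runtime with a better application halves the claimed credit; under FLOP counting both runs earn the same, which matches the Cobblestone intent Grant describes.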

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

> Then there was

I don't know why everybody complains about Credit Screw. It always gives you less credit for the same work. That forces you to upgrade your host to a more powerful cruncher to achieve the same credit. So in the end you process a lot more data to receive the same credit, so more science is done. It sure is a marvelous idea. LOL

[Edit] Surely some old crunchers remember when a single AP paid 700-900 credits or an MB 150-200 credits. Look now. We need to crunch 2-3x more to receive the same credit. Marvelous, no?

Wiggo Joined: 24 Jan 00 Posts: 37868 Credit: 261,360,520 RAC: 489

> [Edit] Surely some old crunchers remember when a single AP paid 700-900 credits or an MB 150-200 credits. Look now. We need to crunch 2-3x more to receive the same credit. Marvelous, no?

I remember back when an AP was worth 1344 credits, and no matter what the MBs were worth back then, you still received basically the same amount of credit over the time it took to do an AP. ;-) Cheers.

Spartana Joined: 24 Apr 16 Posts: 99 Credit: 41,712,387 RAC: 25

> I don't think TBar made that plot. From the posts, it sounds like he just fixed the [img] BBCode that Spartana didn't get right. I think Spartana made that plot.

I just scraped the HTML and then sorted it manually for each device... hence only 100 samples. Very crude. I'm working on a Python script to automate the process and allow its use across all (or hopefully at least most) of the apps and platforms. The SETI@home site doesn't make this easy with the way they (don't) organize the data.

Ian&Steve C. Joined: 28 Sep 99 Posts: 4267 Credit: 1,282,604,591 RAC: 6,640

Yeah, I figured that's what you did. I've done similar things, just playing around testing out Python scripts to pull data off the site for stats and whatnot, but haven't put much time or effort into it. But the HTML is a mess on this site, lol. The stats page is a single table cell with an entire table in it, LMAO. It plays havoc with BeautifulSoup.

Seti@Home classic workunits: 29,492 CPU time: 134,419 hours
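One way to cope with a whole table nested inside a single table cell, without BeautifulSoup, is to track `<table>` depth with the standard library's `html.parser` and keep only rows from the most deeply nested level. The sample markup and both names are invented for illustration (not the site's actual HTML), and the sketch assumes well-formed markup with explicit closing tags.

```python
from html.parser import HTMLParser


class NestedTableRows(HTMLParser):
    """Collect (depth, [cell texts]) for every <tr>, tracking <table> nesting."""

    def __init__(self):
        super().__init__()
        self.depth = 0      # current <table> nesting depth
        self.rows = []      # finished rows as (depth, [cell texts])
        self._rows = {}     # depth -> cells of the row open at that depth
        self._cells = {}    # depth -> text fragments of the cell open at that depth

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.depth += 1
        elif tag == "tr":
            self._rows[self.depth] = []
        elif tag in ("td", "th") and self.depth in self._rows:
            self._cells[self.depth] = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            cell = self._cells.pop(self.depth, None)
            if cell is not None and self.depth in self._rows:
                self._rows[self.depth].append("".join(cell).strip())
        elif tag == "tr":
            row = self._rows.pop(self.depth, None)
            if row is not None:
                self.rows.append((self.depth, row))
        elif tag == "table":
            # Drop anything left open at this level, then step out one level.
            self._rows.pop(self.depth, None)
            self._cells.pop(self.depth, None)
            self.depth = max(0, self.depth - 1)

    def handle_data(self, data):
        # Text goes only to the cell open at the current (innermost) depth.
        cell = self._cells.get(self.depth)
        if cell is not None:
            cell.append(data)


def deepest_table_rows(html):
    """Return cell texts for the rows of the most deeply nested table level."""
    parser = NestedTableRows()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    deepest = max(depth for depth, _ in parser.rows)
    return [cells for depth, cells in parser.rows if depth == deepest]


SAMPLE = """
<table><tr><td>
  <table>
    <tr><th>Task</th><th>Run time</th><th>Credit</th></tr>
    <tr><td>1234</td><td>300.5</td><td>80.12</td></tr>
  </table>
</td></tr></table>
"""

if __name__ == "__main__":
    for cells in deepest_table_rows(SAMPLE):
        print(cells)
```

If a page has several separate tables at the deepest level, their rows are merged; filtering on the header row would be the next step.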

Joseph Stateson Joined: 27 May 99 Posts: 309 Credit: 70,759,933 RAC: 3

Connected a 1 kW XFX power supply to 220 V. Power usage dropped from averaging 930 watts to just over 800. I did not expect that large a drop. The main reason I did the conversion was to balance the A/C load. This was a 7-GPU rig. When on 110 V it was 920 +/- about 20 watts. The 240 V wattmeter uses a magnetic coil pickup, unlike the 110 V one, and varies wildly around the 800 mark. I wired up my own cable. [edit] It was not Thermaltake.
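A quick check of the numbers in the post, assuming the DC load from the GPUs stayed the same and both meter readings are accurate (the 240 V meter is noted as fluctuating, so this is rough). The relative drop is

$$\frac{930 - 800}{930} \approx 0.14$$

and since wall power is DC power divided by PSU efficiency, $P_{\text{wall}} = P_{\text{DC}} / \eta$, an unchanged DC load would imply

$$\frac{\eta_{220}}{\eta_{110}} = \frac{P_{\text{wall},110}}{P_{\text{wall},220}} \approx \frac{930}{800} \approx 1.16$$

Because the reading was "just over 800", both figures are slight overestimates.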

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

Yes, you get efficiencies in power conversion when upping the voltage, which produces a drop in current. An amp clamp is pretty accurate as long as the shunt is reasonably calibrated and within the normal temperature range.

Seti@Home classic workunits: 20,676 CPU time: 74,226 hours
A proud member of the OFA (Old Farts Association)
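For the same power, input current scales as $I = P/V$. Taking the nominal 110 V and 220 V figures and the wattages from the earlier post (a rough sketch, since the two meters differ):

$$I_{110} = \frac{930\,\text{W}}{110\,\text{V}} \approx 8.5\,\text{A}, \qquad I_{220} = \frac{800\,\text{W}}{220\,\text{V}} \approx 3.6\,\text{A}$$

Resistive (conduction) losses scale as $I^2 R$, so for a fixed resistance, halving the current cuts those losses to about a quarter.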

©2025 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.