I have set No New Tasks...

| Author | Message |

|---|---|

Pappa Joined: 9 Jan 00 Posts: 2562 Credit: 12,301,681 RAC: 0

|

It is time to put things in proper perspective and gain a bit more understanding of what is happening.

I have set No New Tasks. That follows from listening to the Seti Staff: there will be periods where there is no work (outages etc.). I looked at other projects for their merit ages ago. Due to involvement in things like Boinc Alpha, I have run multiple CPU and GPU projects for ages while testing the Boinc Core Clients that you rely on (you are welcome). Many Seti Users here help test those same Boinc Clients in Boinc Alpha.

I am a realist, and I get somewhat frowned at when I say that Seti Users are creating the additional stress on the servers, which many do not want to believe. That does not make it any less true. The evidence can still be seen: during the extended outage, "uploads" equaled 3.5 megabits/sec to both the Scheduler and the Upload server. Those were only "connection" packets, which are shorter, not data packets. A "connection" packet is about 120 bits long. You can do the math.

Now, during that period, if you can believe any Statistics site, there were 240,816 active hosts (CPU, MultiCore, CPU/GPU, Multicore/GPU). The Boinc core will back off on uploads and downloads. There are conditions where, even though uploads/downloads are waiting, a Scheduler Request will happen for additional work as soon as a Workunit is completed and the upload goes into backoff. If you look at the Router Interface right now, you can see that the requests are a bit higher (about 5 megabits/sec).

A couple of years ago there were from 125000 to 180000 users with roughly the same 230000-260000 computers. Most of those were single-core CPUs. Users go up and down according to the time of year, the economy and loss of interest. Users upgrade computers to newer, faster machines. Seti increased the Science performed, which basically doubled the time to do all the science. Things held at the same balance.

nVidia states they have a GPU that is as powerful as or more powerful than many existing CPUs, and ports/writes the code for Seti to prove it. Because the Boinc Core was not designed to handle Multiple Resources (CPU/GPU), DCF goes wacky and the server cannot provide enough work to keep the machine fully occupied. The users in response increase the cache size to account for the shortfall. Because of outages induced by resource exhaustion, users once again increase the cache size to account for outages. Boinc starts to adjust the server to account for Multiple Resources, and in the process creates more stress on the servers (including Ghosts)... Is this starting to sound like a death spiral?

Mork had 24 cores to handle placing information into the Database and other silly things like the forums, stats, looking at pending credits, active workunits, results and more. Jocelyn has only 8 cores. Mork was not enough to handle all of these functions, and some were handed off to the Backup Server (Jocelyn). Think about it.

The answer: the "uploads" to the Scheduler and Upload Server were equal to roughly 30000 requests per second. This "sorta" includes queries to the Boinc Database that were Forum requests, or a User looking at what is happening with their pendings, results etc. So the next time you push that Update Button, ask yourself if you are going to be the straw that broke the camel's back.

You are welcome to comment with what "facts" you can find to disprove what I have stated. You are welcome to ask questions about how we got here. Other things that are not on topic are not welcome.

If you want a starting reference that does not cost you money: What is a packet? If you have money that you want to spend, then at a good bookstore: O'Reilly's TCP/IP on network protocols, or TCP/IP Bind, same author (unless you are a techy, both will probably put the average user to sleep, so if you have a sleeping problem that might help).

Regards Please consider a Donation to the Seti Project. |
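For anyone who wants to "do the math" from the post above, here is a minimal sketch. It takes the post's own figures at face value (3.5 megabits/sec of traffic, about 120 bits per "connection" packet, 240,816 active hosts); the function and variable names are made up for illustration, and the per-host interval assumes the load is spread evenly across hosts, which real traffic would not be.

```python
def packets_per_second(bits_per_second: float, bits_per_packet: float) -> float:
    """Packet rate implied by a bit rate and a fixed packet size."""
    return bits_per_second / bits_per_packet


# Figures quoted in the post (not independently verified).
LINK_RATE_BPS = 3.5e6          # 3.5 megabits/sec during the outage
CONNECTION_PACKET_BITS = 120   # claimed size of a "connection" packet
ACTIVE_HOSTS = 240_816         # active hosts per the statistics sites

rate = packets_per_second(LINK_RATE_BPS, CONNECTION_PACKET_BITS)

# Assumption: every host contributes equally to the request rate.
seconds_between_requests_per_host = ACTIVE_HOSTS / rate

print(f"Implied packet rate: {rate:,.0f} packets/sec")
print(f"Average interval per host: {seconds_between_requests_per_host:.1f} sec")
```

Under those assumptions the result lands close to the "roughly 30000 requests per second" quoted in the post, and it depends entirely on the 120-bit packet size being right.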

S@NL - Eesger - www.knoop.nl Joined: 7 Oct 01 Posts: 385 Credit: 50,200,038 RAC: 0

|

Pappa, you are making a good point. Are the requests to the 'userw' URL also having an impact on stability at Berkeley at this point? If so, I could shut down (or severely slow down) our "zero-day-stats". Your thoughts, please. The SETI@Home Gauntlet 2012 april 16 - 30| info / chat | STATS |

soft^spirit Joined: 18 May 99 Posts: 6497 Credit: 34,134,168 RAC: 0

|

It is quite obvious things will be "lean" for quite a while here, until the server situation is resolved. Short term, No New Tasks, or just not expecting many, will be the norm. Combine this with people lashing out at each other. The physics of the situation (other than the actual server "deaths" occurring) is that computers got faster and faster. Servers met dual cores with dual cores, quad cores with quad cores/multi-CPU. As the GPUs came in with massive multi-cores, the servers have not moved functionality over to the same. Can they? I do not know. The next step is CPUs with massive numbers of cores. When they have 80+ cores per chip, we should equalize again. But new servers will still need to be called in. I think this is going to require a lot more than 2 new ones to re-balance things. The load on the bandwidth needs to be reduced. There are two ways that can be approached: reduce the number of channels that can access it at once, or get a bigger pipe. Computer hardware will always become obsolete. Nothing is forever. And we have growing pains. We have a lot of unfilled caches coming. So get comfy. Janice |

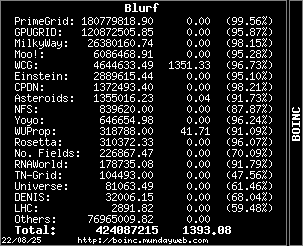

Blurf Joined: 2 Sep 06 Posts: 8964 Credit: 12,678,685 RAC: 0

|

Then, as I suggested in the past, why not totally shut down uploads/downloads until the new servers arrive, and officially state that the project is down until the new hardware arrives? I think people are more frustrated by the "We may be or may not be up" situation, which is really giving false hope that they will successfully upload/download. Not a criticism, just the way I'm hearing it and reading it in PMs. |

soft^spirit Joined: 18 May 99 Posts: 6497 Credit: 34,134,168 RAC: 0

|

My suggestion remains: shut down most if not all splitters. This might be a good time to catch up, work on re-issues, and generally clean the pipes. We are short on work anyway, so why not do something constructive while we are at it? Janice |

Pappa Joined: 9 Jan 00 Posts: 2562 Credit: 12,301,681 RAC: 0

|

Pappa, Any or all stats are a Query against the Master Science Database. Normally stats are dumped (http://setiathome.berkeley.edu/stats/) for use by the Statistics sites, and then it becomes an FTP session. Using the userw query is a direct hit to the Master DB. Using it to poll for 5 users or 15 users, if they are spread out enough, would not normally kill the DB. Yes, I know that you have worked with the Seti Staff to ensure you have minimal impact. That said, I would say to watch the process closely; if it appears that the queries are taking longer, suspend them. Regards Please consider a Donation to the Seti Project. |

|

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

|

... If you looked at the Packet rate on the Router Interface for the same time, it was showing around 1000 packets per second. IOW, the average packet size was ~5000 bits or 625 bytes. The upload server was enabled, so IMO that reflects slower hosts completing tasks and uploading result files. You can now see what happened when Jeff disabled the upload server around 6 am Berkeley time. ... The packet rate has peaked at slightly over 12000 during the last month, but only when downloading was enabled. That's serious enough, but indicates considerably less than 30000 requests/sec. I agree that it is sensible for users to avoid stressing the servers now. Wednesday I allowed work fetch attempts in the requests which were reporting finished tasks, that's the most efficient situation because the host, user, and team lookups have to be done anyway to handle the reported work. When the reports were accepted but the reply indicated the Feeder slots were empty I set No New Tasks rather than allow additional requests only for work fetch. When next the server status indicates plenty of "Results ready to send" and the project is configured to send work but the download rate is not saturated I'll probably allow some requests. Joe |
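Joe's cross-check can be sketched the same way. This assumes the bit rate he is comparing against is the "about 5 megabits/sec" router figure from Pappa's post (the quoted part is elided above, so that pairing is an assumption), and the names are illustrative only.

```python
def average_packet_bits(bits_per_second: float, packets_per_second: float) -> float:
    """Average packet size implied by a bit rate and a packet rate."""
    return bits_per_second / packets_per_second


# Figures from the thread (assumed to refer to the same time window).
ROUTER_RATE_BPS = 5e6        # about 5 megabits/sec on the router interface
ROUTER_PACKET_RATE = 1000    # around 1000 packets per second

avg_bits = average_packet_bits(ROUTER_RATE_BPS, ROUTER_PACKET_RATE)
avg_bytes = avg_bits / 8

# Peak packet rate seen in the last month versus the claimed request rate.
PEAK_PACKET_RATE = 12_000
CLAIMED_REQUEST_RATE = 30_000
peak_fraction_of_claim = PEAK_PACKET_RATE / CLAIMED_REQUEST_RATE

print(f"Average packet: {avg_bits:.0f} bits ({avg_bytes:.0f} bytes)")
print(f"Peak packet rate is {peak_fraction_of_claim:.0%} of the claimed request rate")
```

The two estimates differ mainly because one starts from an assumed packet size and the other from a measured packet rate.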

S@NL - Eesger - www.knoop.nl Joined: 7 Oct 01 Posts: 385 Credit: 50,200,038 RAC: 0

|

"Using it to poll for 5 users or 15 users, if they are spread out enough, would not normally kill the DB. Yes, I know that you have worked with the Seti Staff to ensure you have minimal impact." I have kept an eye on it; if anything, the results seem to come faster (if at all; otherwise the script aborts and tries again later on). But good point, I'll keep keeping an eye on it.. ;) The SETI@Home Gauntlet 2012 april 16 - 30| info / chat | STATS |

kittyman Joined: 9 Jul 00 Posts: 51478 Credit: 1,018,363,574 RAC: 1,004

|

I don't wanna talk to Pappa right now....... "Time is simply the mechanism that keeps everything from happening all at once."

|

Fred J. Verster Joined: 21 Apr 04 Posts: 3252 Credit: 31,903,643 RAC: 0

|

|

Gundolf Jahn Joined: 19 Sep 00 Posts: 3184 Credit: 446,358 RAC: 0

|

Tasks will still be reported when NNT is set, after 24 hours at the latest, but the upload server can't accept reporting requests. That's what the scheduler is needed for. The upload server does only that: accept uploads. Regards, Gundolf Computers aren't everything in life. (Just kidding) SETI@home classic workunits 3,758 SETI@home classic CPU time 66,520 hours |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.