Running (Jul 01 2008)



Matt Lebofsky (Joined: 1 Mar 99, Posts: 1444, Credit: 957,058, RAC: 0)


Today's Tuesday, which means we went through the usual database cleanup/backup outage. That went smoothly. As I may have already noted before, the replica MySQL server has been regularly failing when actually writing the dump to disk. Our suspicion was that this server was having difficulty reaching the NAS via NFS, and MySQL has been ultra-sensitive to any NFS issues. The master server doesn't have this problem, but maybe that's because it's attached to the NAS via a single switch (as opposed to the replica, which goes through at least three switches). Anyway, we dumped the replica database locally and it worked fine. Our theory was strengthened, though not 100% confirmed.

While the project was down we plucked an old (and pretty much unused) serial console server out of the closet. That saves us an IP address (we get charged per IP address per month as part of university overhead, which is another reason I try to keep our server pool lean and trim). I also cleaned up our current Hurricane Electric network IP address inventory, and in the process found and removed some old, dead entries in the DNS maps. I'm not sure whether these have been causing the lingering scheduler-connection problems. We shall see.

As noted in the previous tech news thread, the science status page has been continually showing Alfa (the receiver from which we currently collect data) as "not running" for a while now. This was lost in the noise because Alfa actually hasn't been running much recently, but it still should have been shown as "running" every so often as data trickles in here and there. Looking back at the logs, there has been a problem for some time now. We get the telescope-specific data (pointing information, which receivers are on, etc.) every few seconds, as it is broadcast to all the projects around the observatory. Perhaps the timing/format of these broadcasts has changed? In any case, I'm finding that our script that reads these broadcasts is occasionally missing information, so I made it more insistent. We'll see if that helps.

- Matt

-- BOINC/SETI@home network/web/science/development person -- "Any idiot can have a good idea. What is hard is to do it." - Jeanne-Claude
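The post doesn't say how the broadcast-reading script was made "more insistent." One plausible reading, sketched here purely as an illustration, is to stop trusting a single packet: keep reading broadcasts, merge whatever fields each one carries, and only give up after several attempts. Everything specific below is an assumption, not the observatory's actual protocol: the UDP transport, the `key=value;key=value` payload format, the field names `ra`, `dec` and `receiver`, and the helper names `parse_broadcast`, `collect_status` and `udp_receiver`.

```python
import socket
from typing import Callable, Dict, Iterable, Optional, Set, Tuple

# Hypothetical field names; the real broadcast contents are not described in the post.
REQUIRED_FIELDS = {"ra", "dec", "receiver"}


def parse_broadcast(payload: bytes) -> Dict[str, str]:
    """Parse an assumed 'key=value;key=value' payload, skipping malformed pairs."""
    fields: Dict[str, str] = {}
    for pair in payload.decode("ascii", errors="replace").split(";"):
        key, sep, value = pair.partition("=")
        if sep and key.strip():
            fields[key.strip()] = value.strip()
    return fields


def collect_status(
    recv: Callable[[], Optional[bytes]],
    required: Iterable[str] = REQUIRED_FIELDS,
    max_attempts: int = 10,
) -> Tuple[Dict[str, str], Set[str]]:
    """Keep reading broadcasts until every required field has been seen.

    `recv` returns one payload, or None on timeout. Fields are merged across
    packets, and a timeout or incomplete packet triggers another read instead
    of an immediate failure. Returns (fields, missing_fields).
    """
    wanted = set(required)
    merged: Dict[str, str] = {}
    for _ in range(max_attempts):
        payload = recv()
        if payload is None:  # timed out: try again rather than give up
            continue
        merged.update(parse_broadcast(payload))
        if wanted.issubset(merged):
            break
    return merged, wanted.difference(merged)


def udp_receiver(port: int, timeout: float = 5.0) -> Callable[[], Optional[bytes]]:
    """Build a recv() callable for UDP broadcasts on a (hypothetical) port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.settimeout(timeout)

    def recv() -> Optional[bytes]:
        try:
            return sock.recv(65535)
        except socket.timeout:
            return None

    return recv
```

Keeping the packet source as a plain callable means the retry logic can be exercised with canned payloads; a live version would pass `udp_receiver(port)` instead, with the port and payload format taken from whatever the observatory actually broadcasts.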

Dr. C.E.T.I. (Joined: 29 Feb 00, Posts: 16019, Credit: 794,685, RAC: 0)


Good work there, Berkeley. Thanks for the posting, Matt.

BOINC Wiki . . . Science Status Page . . .

joenova (Joined: 19 Feb 04, Posts: 3, Credit: 40,754, RAC: 0)


Hi, I'd just like to drop a line and mention that I thoroughly enjoy reading these technical reports. I'm an IT junkie and I love the frequent glimpse into the SETI back-end systems. I used to run SETI classic, but somehow got out of the habit of installing it when doing a fresh OS install. Glad to be back on the project. Thanks for the great tech info, Matt!

"Education: that which reveals to the wise, and conceals from the stupid, the vast limits of their knowledge." -- Mark Twain

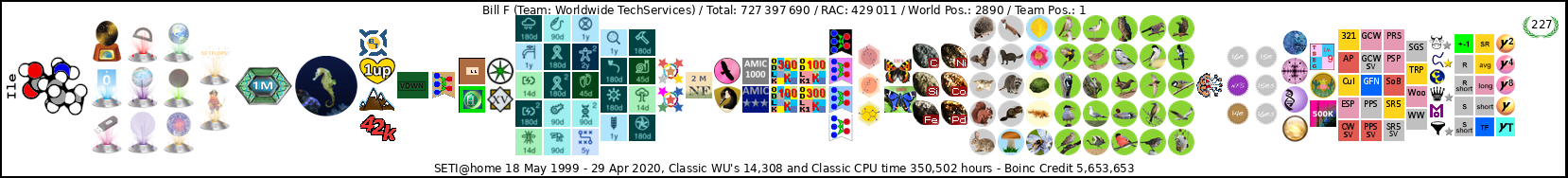

Bill F (Joined: 18 May 99, Posts: 42, Credit: 5,653,653, RAC: 2)


Matt, on the science page, could you post a "since" timestamp for the current Alfa status? For example, "Offline since (date/time)" or "Online since (date/time)". That way we would know that it is cycling. Thanks, Bill Freauff
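Bill's suggestion boils down to remembering when the status last changed and showing that time next to the status. A minimal sketch, assuming a hypothetical `StatusTracker` helper that the status-page generator would call with each new observation; none of these names come from the SETI@home code, and the timestamps are assumed to be UTC.

```python
from datetime import datetime, timezone
from typing import Optional


class StatusTracker:
    """Hypothetical helper: remember when a receiver's status last changed."""

    def __init__(self) -> None:
        self.running: Optional[bool] = None  # None until the first report arrives
        self.since: Optional[datetime] = None

    def update(self, running: bool, now: Optional[datetime] = None) -> None:
        """Record one status observation; only a change of state resets `since`."""
        if now is None:
            now = datetime.now(timezone.utc)
        if running != self.running:
            self.running = running
            self.since = now

    def label(self) -> str:
        """Render e.g. 'Not running since 2008-07-01 10:00 UTC' (assumes UTC times)."""
        if self.running is None or self.since is None:
            return "Unknown"
        state = "Running" if self.running else "Not running"
        return f"{state} since {self.since:%Y-%m-%d %H:%M} UTC"
```

Because repeated identical reports don't reset the timestamp, a "Not running since" label with an old date would make a stuck status obvious, which is what Bill is after.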


Mr. Majestic (Joined: 26 Nov 07, Posts: 4752, Credit: 258,845, RAC: 0)


©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.