Ups and Downs (Sep 04 2007)


| Author | Message |

|---|---|

Matt Lebofsky Joined: 1 Mar 99 Posts: 1444 Credit: 957,058 RAC: 0

|

There were periods of feast or famine over the long holiday weekend. In short, we pretty much proved the main bottleneck in our work creation/distribution system is our workunit file server. This hasn't always been the case, but our system is so much different than, say, six months ago: more Linux machines than Solaris (which mount the NAS file server differently?), faster splitters clogging the pipes (as opposed to the old splitters running on Solaris, which weren't so "bursty"?), different kinds of workunits (more overflows?), less redundancy (leading to more random access and therefore less cache efficiency?)... the list goes on. There is talk about moving the workunits onto direct attached storage sometime in the near future, and what it would take to make this happen (we have the hardware - it's a matter of time/effort/outage management).

Pretty much for several days in a row the download server was choked as splitters were struggling to create extra work to fill the results-to-send queue. Once the queue was full, they'd simmer down for an hour or two. With less restricted access to the file server, the download server throughput would temporarily double. Adding to the wacky shape of the traffic graph, we had another "lost mount" problem on the splitter machine so new work was being created throughout the evening last night. We had the splitters off a bit this morning as Jeff cleaned that up.

We did the usual BOINC database outage today, during which we took the time to also reboot thumper (to check that new volumes survived a reboot) and switch over some of our media converters (which carry packets to/from our Hurricane Electric ISP) - you may have noticed the web site disappearing completely for a minute or two.

- Matt

-- BOINC/SETI@home network/web/science/development person -- "Any idiot can have a good idea. What is hard is to do it." - Jeanne-Claude |

Neil Blaikie Joined: 17 May 99 Posts: 143 Credit: 6,652,341 RAC: 0

|

Good job guys. You guys working there should get more recognition for the impressive amount of work you do to keep "us" all happy and things running smoothly. Keep up the good work and hopefully that "signal" will arrive soon

|

Dr. C.E.T.I. Joined: 29 Feb 00 Posts: 16019 Credit: 794,685 RAC: 0

|

Thanks to All of You @ Berkeley for a job well done . . . and Thanks for the Post Matt |

Sir Ulli Joined: 21 Oct 99 Posts: 2246 Credit: 6,136,250 RAC: 0

|

|

Dr. C.E.T.I. Joined: 29 Feb 00 Posts: 16019 Credit: 794,685 RAC: 0

|

from Berkeley: 07:45 PM East Coast Time

- Results ready to send: 10 (1m)
- Current result creation rate: 8.97/sec (21m)
- Results in progress: 1,405,216 (1m)
- Workunits waiting for validation: 6 (1m)
- Workunits waiting for assimilation: 16 (1m)
- Workunit files waiting for deletion: 3,855 (1m)
- Result files waiting for deletion: 1,893 (1m)
- Workunits waiting for db purging: 360,144 (1m)
- Results waiting for db purging: 803,185 (1m)
- Transitioner backlog (hours): 0 (11m)

. . . starting to climb

Sir Ulli |

sunmines Joined: 12 Feb 02 Posts: 3 Credit: 605,435 RAC: 1

|

Great work. It is a thrill to be able to do work you really love. Keep up the great work. |

Kenn Benoît-Hutchins Joined: 24 Aug 99 Posts: 46 Credit: 18,091,320 RAC: 31

|

I have been getting work units all along (lucky, I guess), but I have noticed that when downloaded they have overly long 'to completion' times. It has varied from 35 hours up to 65 hours, although in most cases the work is done in less than ten percent of the listed 'to completion' time. One can see the time to completion tick off much more quickly than the CPU running time for that particular work unit (a ratio of a minute to three seconds of CPU time). This obviously reduces the number of work units downloaded when a cache of work is requested in preferences to 'maintain enough work for an additional' time.

So my question is fourfold:

1. Is this done intentionally to reduce the stress on the servers?
2. Is this an error created by the server, thus fooling it into not producing as many work units?
3. Is this something that has slipped by the staff and can be corrected, thus making the servers more efficient and stopping the unwarranted blathering of some few volunteer computer owners?
4. Is this unique to my computer or my type of computer?

I am using the latest application, and am in possession of an iMac Core 2 Duo.

Kenn

Kenn What is left unsaid is neither heard, nor heeded. Ce qui est laissé inexprimé ni n'est entendu, ni est observé. |
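To make the cache effect described above concrete, here is a minimal sketch of the arithmetic. It is not BOINC's actual work-fetch logic; the function name, the 3-day cache, the two cores, and the 50-hour and 5-hour estimates are all illustrative assumptions (the last two loosely based on the 35 to 65 hour range and the "less than ten percent" figure in the post).

```python
import math


def tasks_to_fill_cache(cache_days: float, est_hours_per_task: float, cores: int = 1) -> int:
    """Rough number of tasks needed to cover `cache_days` of work on `cores` CPUs.

    Simplified, hypothetical model: the client is assumed to divide the
    amount of work it wants to hold by its per-task time estimate.
    """
    cache_hours = cache_days * 24 * cores
    return math.ceil(cache_hours / est_hours_per_task)


# Inflated estimate (50 h per task) versus a realistic one (5 h per task).
inflated = tasks_to_fill_cache(cache_days=3, est_hours_per_task=50, cores=2)
realistic = tasks_to_fill_cache(cache_days=3, est_hours_per_task=5, cores=2)
print(f"inflated estimate: {inflated} tasks, realistic estimate: {realistic} tasks")
```

Under these assumptions, a tenfold overestimate means roughly a tenth as many tasks are held locally for the same cache setting.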

|

Dave Stegner Joined: 20 Oct 04 Posts: 540 Credit: 65,583,328 RAC: 27

|

Not a complaint, but rather a statement that you are not the only one seeing this. I have been seeing it for the last few days. Dave |

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

> I have been getting work units all along (lucky, I guess), but I have noticed that when downloaded they have overly long 'to completion' times. It has varied from 35 hours up to 65 hours, although in most cases the work is done in less than ten percent of the listed 'to completion' time.

There is a variable called "duration correction factor" that keeps track of the predicted vs. actual processing time on work units. Sounds like the value has gone a little wacky. You can fix it manually, or you can just let it be and it'll correct by itself. |
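As a rough illustration of the "predicted vs. actual" idea, the sketch below treats the duration correction factor as a simple multiplier on a raw estimate. The function names and numbers are hypothetical and are not taken from the BOINC client; they only show why a factor that has drifted too high inflates the displayed 'to completion' time.

```python
def displayed_estimate(raw_estimate_hours: float, dcf: float) -> float:
    """Estimate shown to the user: the raw estimate scaled by the correction factor."""
    return raw_estimate_hours * dcf


def observed_ratio(actual_hours: float, raw_estimate_hours: float) -> float:
    """How far off the raw estimate was for one finished task (1.0 means spot on)."""
    return actual_hours / raw_estimate_hours


# Hypothetical numbers: a 5-hour task while the factor has been thrown to 10.
shown = displayed_estimate(raw_estimate_hours=5.0, dcf=10.0)
ratio = observed_ratio(actual_hours=5.0, raw_estimate_hours=5.0)
print(f"shown: {shown:.0f} h, observed ratio: {ratio:.1f}")
```

In this toy case the task is shown as 50 hours even though finished tasks keep reporting a ratio near 1.0, which is the signal that lets the factor drift back over time.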

Richard Haselgrove Joined: 4 Jul 99 Posts: 14690 Credit: 200,643,578 RAC: 874

|

> There is a variable called "duration correction factor" that keeps track of the predicted vs. actual processing time on work units.

Exactly so - it'll have been thrown by one of the faulty WUs a week ago - result 601249419. As Ned says, it'll slowly correct itself over time. So in answer to Kenn's questions:

1. No
2. No
3. Yes, partly - it happened three weeks ago and was corrected in 48 hours, but the effects are still with us.
4. No |

KWSN THE Holy Hand Grenade! Joined: 20 Dec 05 Posts: 3187 Credit: 57,163,290 RAC: 0

|

> There is a variable called "duration correction factor" that keeps track of the predicted vs. actual processing time on work units.

You also see this effect if you switch from the stock app to an optimized app - for the first two days or so you'll be downloading as though the stock app were still going, even though your optimized app is running 1.5-2x faster. The effect gradually wears away after that... (takes about two weeks, total - in my experience.) You also get this effect if you switch back to a stock app from an optimized app, only in reverse!

Hello, from Albany, CA!... |

Kenn Benoît-Hutchins Joined: 24 Aug 99 Posts: 46 Credit: 18,091,320 RAC: 31

|

A thank you for all those who responded. Kenn aka The Reinman There is a variable called "duration correction factor" that keeps track of the predicted vs. actual processing time on work units. Kenn What is left unsaid is neither heard, nor heeded. Ce qui est laissé inexprimé ni n'est entendu, ni est observé. |

|

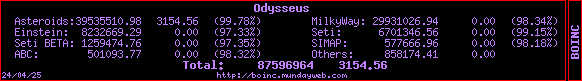

Odysseus Joined: 26 Jul 99 Posts: 1808 Credit: 6,701,347 RAC: 6

|

> You also get this effect if you switch back to a stock app from an optimized app, only in reverse!

And sooner: RDCF rises faster (when tasks take longer than expected) than it falls (when they go quicker). |
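The asymmetry described above (the factor rises quickly but falls slowly) can be sketched with a toy update rule. This is an assumption-laden illustration, not the BOINC client's actual code: the function `update_dcf`, the jump-to-the-observed-ratio behaviour on the way up, and the 10% `fall_weight` on the way down are all made up for the example.

```python
def update_dcf(dcf: float, ratio: float, fall_weight: float = 0.1) -> float:
    """Toy asymmetric update of a duration correction factor.

    `ratio` is actual time / raw estimated time for one finished task.
    Assumed rule: jump straight up when a task ran longer than the current
    factor predicts, otherwise blend slowly toward the observed ratio.
    """
    if ratio > dcf:
        return ratio
    return (1.0 - fall_weight) * dcf + fall_weight * ratio


# One faulty task that took 10x its estimate throws the factor immediately.
dcf = update_dcf(1.0, 10.0)
after_one_bad_task = dcf

# Count how many well-behaved tasks (ratio 1.0) it takes to get back under 1.5.
normal_tasks = 0
while dcf > 1.5:
    dcf = update_dcf(dcf, 1.0)
    normal_tasks += 1

print(f"after one bad task: {after_one_bad_task}, normal tasks to recover: {normal_tasks}")
```

With a rule shaped like this, a single bad result is enough to inflate every estimate, while recovery takes many normal results, which matches the slow self-correction reported in the thread.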
