No work from project....


| Author | Message |

|---|---|

kittyman Joined: 9 Jul 00 Posts: 51542 Credit: 1,018,363,574 RAC: 1,004

|

The kitties are getting hungry again. The splitters appear to be having trouble keeping up since the server crash. Scotty!! (Matt, Eric).....fire up another warp drive, we need more power! "Time is simply the mechanism that keeps everything from happening all at once."

|

|

n7rfa Joined: 13 Apr 04 Posts: 370 Credit: 9,058,599 RAC: 0

|

> The kitties are getting hungry again.

In my opinion, one of the problems is that they lowered the Results Ready to Send to a maximum of 200k. With 160k+ active users, it doesn't take any time at all to run through the queue for a normal outage.

|

kittyman Joined: 9 Jul 00 Posts: 51542 Credit: 1,018,363,574 RAC: 1,004

|

> The kitties are getting hungry again.

That may be true, but with enough splitter power online (More dilithium crystals, Scotty!!!!), that cache of ready to send would be adequate. This may be a temporary problem, as indicated by part of Matt's technical news post from yesterday:

"However, things are still operating at a crawl (to put it mildly). This may be due to missing indexes (that weren't on the replica so they didn't get recreated on the master). Expect some turbulence over the next 24 hours as we recover from this minor mishap."

Hopefully they will get all the warp drives online today.

"Time is simply the mechanism that keeps everything from happening all at once."

|

|

rassm Joined: 23 Jul 00 Posts: 14 Credit: 2,244,969 RAC: 0

|

> The kitties are getting hungry again.

I have not been getting a significant number of units since the crash. I have reloaded and rebooted my system. I can't get any more units than maybe 1 or 2 every couple of hours. |

|

rassm Joined: 23 Jul 00 Posts: 14 Credit: 2,244,969 RAC: 0

|

> The kitties are getting hungry again.

I neglected to mention that the message I get is always the same:

Wed Aug 8 22:10:22 2007|SETI@home|Reason: no work from project |

W-K 666 Joined: 18 May 99 Posts: 19724 Credit: 40,757,560 RAC: 67

|

I just removed app_info, so that I would not be using the optimised app, and immediately downloaded the 5.27 app and new units. Andy |

Gary Charpentier Joined: 25 Dec 00 Posts: 31368 Credit: 53,134,872 RAC: 32

|

> The kitties are getting hungry again.

Results ready to send: ZERO.

I think you need to clean the pipes. Of course, taking some debugging stats before you do might be a wise idea.

|

|

n7rfa Joined: 13 Apr 04 Posts: 370 Credit: 9,058,599 RAC: 0

|

> The kitties are getting hungry again.

Keep in mind that (unless the information is mislabeled) the actual work available has dropped faster than the "Results Ready to Send" would indicate.

Initially, 4 WUs were created for each Result and the RRtS was 500k. This provided a queue of 2,000,000 WUs. (~12.5 WUs/Active User)

Then the initial WUs were dropped to 3 and the queue dropped to 1,500,000. (~9.3 WUs/AU)

Next the RRtS was dropped from a maximum of 500,000 to 200,000. Now the queue is 600,000. (3.7 WUs/AU - This is where we started seeing more "No work from project" issues.)

Since the last database problem, the number of initial WUs created for each Result has been dropped to 2. Now the maximum number of WUs in the queue has dropped to 400,000. (~2.5 WUs/AU)

This is an overall drop of 80% in the size of the WU queue. (Again, this assumes that "Results Ready to Send" is a count of Results and not Work Units.)
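As a rough check on the arithmetic in this post, here is a minimal Python sketch. It follows the post's own assumption that the queue size is the "Results Ready to Send" cap multiplied by the initial replication, and it takes the "160k+ active users" figure as exactly 160,000. The names `STAGES`, `queue_size` and `per_user` are made up for illustration.

```python
# Assumption: exactly 160,000 active users (the thread says "160k+").
ACTIVE_USERS = 160_000

# (label, Results Ready to Send cap, initial replication) for each stage
# described in the post. The labels are illustrative only.
STAGES = [
    ("original settings", 500_000, 4),
    ("replication 4 -> 3", 500_000, 3),
    ("RRtS cap 500k -> 200k", 200_000, 3),
    ("replication 3 -> 2", 200_000, 2),
]


def queue_size(rrts_cap, replication):
    """Queue size under the post's assumption: cap times replication."""
    return rrts_cap * replication


def per_user(queue, users=ACTIVE_USERS):
    """Average number of queued units per active user."""
    return queue / users


baseline = queue_size(STAGES[0][1], STAGES[0][2])
for label, cap, replication in STAGES:
    q = queue_size(cap, replication)
    drop = 1 - q / baseline
    print(f"{label:24s} queue={q:>9,d}  per user={per_user(q):5.2f}  drop={drop:4.0%}")
```

Under those assumptions the last stage comes out at 400,000 units, 2.5 per user, and an 80% drop from the starting point, matching the post.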

|

Richard Haselgrove Joined: 4 Jul 99 Posts: 14690 Credit: 200,643,578 RAC: 874

|

> Keep in mind that (unless the information is mislabeled) the actual work available has dropped faster than the "Results Ready to Send" would indicate.

Actually, I think it's the other way round. If the labelling is accurate, then the number of results ready to send is - well, the number of results ready to send. It would only be if the figure we see on the server status page was actually the number of WUs available that the figures would nose-dive as you suggest.

Remember that in project-speak the WU is the (single) datafile split from the 'tape': the results are the (multiple set of) crunching instructions derived from that single WU. It's only us sloppy crunchers that refer to our particular result as a WU (shorter to type!).

On the other hand, I would imagine that the time-consuming part of the splitter's work is reading and re-writing the datafile, done once per WU. Generating the results from that WU will be much quicker. So dropping the initial replication from 4 to 3, and now to 2, will have halved the theoretical maximum rate of result production per splitter. I suspect that's why the 'results ready to send' figure has remained stubbornly at or near zero overnight. |

kittyman Joined: 9 Jul 00 Posts: 51542 Credit: 1,018,363,574 RAC: 1,004

|

We need more splitting power, Scotty!!!! "Time is simply the mechanism that keeps everything from happening all at once."

|

[B^S] madmac Joined: 9 Feb 04 Posts: 1175 Credit: 4,754,897 RAC: 0

|

|

kittyman Joined: 9 Jul 00 Posts: 51542 Credit: 1,018,363,574 RAC: 1,004

|

This may have something to do with the initial replication apparently having been dropped to 2 per WU. Not a bad thing, but probably requires that more splitting capacity be put online. I'm sure Matt and Eric are monitoring the situation, and I know they are working on the new MB splitter. I hope it ramps up before the kitties run out of work. They are starting to pace about a bit. "Time is simply the mechanism that keeps everything from happening all at once."

|

kittyman Joined: 9 Jul 00 Posts: 51542 Credit: 1,018,363,574 RAC: 1,004

|

Hmmmm....Current splitter rate down to 5.88/sec. Going down instead of up. This is not good. And the kitties are getting hungrier! MEOW! "Time is simply the mechanism that keeps everything from happening all at once."

|

Anthony Q. Bachler Joined: 3 Jul 07 Posts: 29 Credit: 608,463 RAC: 0

|

Processing the last 2 WUs on my system as we speak. 40 minutes until my babies run out of work. (Luckily I had a week's worth of WUs downloaded.) Guess I'll be switching projects until they get the splitters back up and running. Gonna put off deploying BOINC on the cluster until they fix this issue. |

Ghery S. Pettit Joined: 7 Nov 99 Posts: 325 Credit: 28,109,066 RAC: 82

|

My prime machine has been without work since sometime last night. My slow machines are still working through their queues, but they (combined) only provide 1/3 of my output. Need...... More..... Fuel.....

|

|

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

|

A little rough math....

Four splitters were able to produce something like 9 results per second when each WU gave 3 results. That was enough to keep up with or get ahead of demand when the work being split was of the longer-running variety.

The 5.27 application completes work in roughly 3/4 of the time the 5.15 app needed. There would need to be 12 (4/3 x 9) results per second to keep up with the new demand level.

The change from 3 to 2 Initial Replication means four splitters are able to produce about 6 results per second. Joe |
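The rough math above can be written out as a small Python sketch. It only restates the figures given in the post (9 results per second at 3 results per WU, a 3/4 run-time ratio, and a drop to 2 results per WU); the variable names are made up for illustration, and `Fraction` is used simply to keep the 4/3 factor exact.

```python
from fractions import Fraction

# Figures taken from the post; everything else is derived from them.
old_supply = Fraction(9)             # results/s from four splitters
old_replication = 3                  # results generated per WU, before
new_replication = 2                  # results generated per WU, after
runtime_ratio = Fraction(3, 4)       # 5.27 run time relative to 5.15

# The splitters' underlying work is per WU, so hold the WU rate fixed.
wu_rate = old_supply / old_replication        # WUs split per second

# Faster crunching means hosts ask for proportionally more results.
demand = old_supply / runtime_ratio           # results/s needed now

# Same WU rate, fewer results generated from each WU.
new_supply = wu_rate * new_replication        # results/s available now

shortfall = demand - new_supply

print(f"WU rate:    {float(wu_rate):.1f} WU/s")
print(f"demand:     {float(demand):.1f} results/s")
print(f"new supply: {float(new_supply):.1f} results/s")
print(f"shortfall:  {float(shortfall):.1f} results/s")
```

On these numbers the four splitters would cover about half of the estimated demand.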

Richard Haselgrove Joined: 4 Jul 99 Posts: 14690 Credit: 200,643,578 RAC: 874

|

> A little rough math....

But wasn't one of the problems that they had only developed 'line-feed' splitters on the Sun/Solaris platform? As they ran out of working Sun hardware, they had to press every available (old, slow) Sun workstation into service to split WUs.

But, IIRC, the new multi-beam splitters have been ported across to Linux, so they should run on newer, faster, and potentially more plentiful kit. I don't think they'll have too much difficulty getting up to 12 results/second. |

Fuzzy Hollynoodles Joined: 3 Apr 99 Posts: 9659 Credit: 251,998 RAC: 0 |

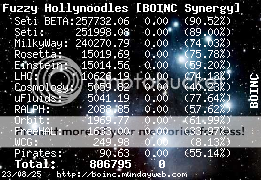

> Hmmmm....Current splitter rate down to 5.88/sec. Going down instead of up. This is not good.

Take away the chicken broth from the kitties' diet and you'll soon be able to serve real MB tuna for them.

Just downloaded: Click the pic

"I'm trying to maintain a shred of dignity in this world." - Me

|

|

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

|

> A little rough math....

True, and even calling on some Solaris systems which aren't usually in the system, they only got up to six splitters a couple of weeks ago. At this time they're down to three listed as running; Kang seems to be down. But the rate is 7.15 results per second.

> But, IIRC, the new multi-beam splitters have been ported across to Linux, so they should run on newer, faster, and potentially more plentiful kit. I don't think they'll have too much difficulty getting up to 12 results/second.

Yes, though I'm not sure how much quicker the MB splitter code actually runs. If I had a system similar to theirs I'd do some timing running 32K forward FFTs followed by 8K inverse FFTs on 256 MB of data. I think indications are the splitter processes are more compute bound than I/O bound.

In addition, MB data is inherently quicker to crunch due to the smaller beam width. I estimate that about 16 results per second will be needed once all work is MB, and considerably more if the data being split is Very High Angle Range (the "quick WUs"). But perhaps I'm getting into the zone of "The project never promised there would always be enough work available".

None of this should be taken as criticism of the project. Their prime motivation is to get the work done as efficiently as possible, and I'm in full agreement. I do worry that some of these transitions may drive some participants away, but have no way of judging the amount of that effect. Certainly the group who contribute to these forums is a small fraction of the total user base. Lurkers may or may not expand that fraction much. Joe |

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

> True, and even calling on some Solaris systems which aren't usually in the system they only got up to six splitters a couple of weeks ago. At this time they're down to three listed as running, Kang seems to be down. But the rate is 7.15 results per second.

It's over 7 results/second because at least one multibeam splitter is running, even though it's not yet listed on the status page.

> Yes, though I'm not sure how much quicker the MB splitter code actually runs. If I had a system similar to theirs I'd do some timing running 32K forward FFTs followed by 8K inverse FFTs on 256 MB of data. I think indications are the splitter processes are more compute bound than I/O bound.

According to the status page, 1 "block" is 1.7 seconds, and 48 "blocks" (81.6 s) give 256 WUs. Meaning, 256 WU / 81.6 s = 3.137 WU/s, or 6.27 results/second, is the supply of the "single-beam" splitters. Any more "single-beam" is a "bonus".

Multi-beam supply, on the other hand, is 14x, meaning 43.92 WU/second or 87.84 results/second.

If each WU is 350 KB, this means the splitters will write 15.7 MB/s to disk, or 126 Mbit/s. Outgoing bandwidth is 252 Mbit/s.

If I'm not misremembering, it's been mentioned that the download array is maxed out at around 60 Mbit/s...

"I make so many mistakes. But then just think of all the mistakes I don't make, although I might." |
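The throughput estimate above can be reproduced with a short Python sketch. The inputs are the figures quoted in the post; the interpretation of "350 KB" as 350,000 bytes, the assumption that each of the 2 results downloads its own copy of the WU file, and all names (`splitter_supply`, `WU_BYTES`, and so on) are assumptions made for illustration.

```python
# Figures quoted in the post.
BLOCK_SECONDS = 1.7       # duration of one "block"
BLOCKS_PER_BATCH = 48     # blocks needed for one batch of WUs
WUS_PER_BATCH = 256       # WUs produced per batch
RESULTS_PER_WU = 2        # current initial replication
MULTIBEAM_FACTOR = 14     # multi-beam supply relative to single-beam

# Assumption: "350 KB" means 350,000 bytes (decimal units).
WU_BYTES = 350_000


def splitter_supply(multiplier=1):
    """Return supply rates and implied bandwidth for a given multiplier."""
    batch_seconds = BLOCK_SECONDS * BLOCKS_PER_BATCH
    wu_rate = multiplier * WUS_PER_BATCH / batch_seconds
    result_rate = wu_rate * RESULTS_PER_WU
    # One file written per WU; one download per result (assumption).
    write_mbit = wu_rate * WU_BYTES * 8 / 1e6
    download_mbit = result_rate * WU_BYTES * 8 / 1e6
    return {
        "wu_per_s": wu_rate,
        "results_per_s": result_rate,
        "write_mbit_per_s": write_mbit,
        "download_mbit_per_s": download_mbit,
    }


for name, factor in (("single-beam", 1), ("multi-beam", MULTIBEAM_FACTOR)):
    rates = splitter_supply(factor)
    print(name, {key: round(value, 2) for key, value in rates.items()})
```

With these assumptions the WU and result rates match the post, while the bandwidth figures come out slightly lower (roughly 123 and 246 Mbit/s rather than 126 and 252), which suggests the post used a somewhat larger effective WU size or different rounding.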
