1080 underclocking


| Author | Message |

|---|---|

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

Can you stick the hybrid's pump and radiator fan on another power source?

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

Ha, just encountered the issue with EVGA's Precision OC not being happy with my 980 in place. It was OK until I swapped the cards around. Dr. Grey, you have to exit BOINC and stop crunching. Open Nvidia Inspector and click "show overclocking"; you will get a warning, click OK. You will see all GPUs listed on the left side of the panel, and on the right you will see the settings. From the pull-down, select P2 and change the values there to match what they are in P0 (the default in the pull-down). After you do that, you must go to the lower right corner and click "apply clock & voltage". This will keep the changes until a reboot. Then go to the left side, select a different GPU and repeat. Do this for all GPUs. Once you are finished, restart BOINC and see if the values took.

|
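
One hedged way to check whether the values "took" after restarting BOINC is to read the performance state and clocks back from the driver. The sketch below assumes `nvidia-smi` is installed and on the PATH (it is not mentioned in the thread), and the helper names `parse_gpu_rows` and `query_gpus` are made up for illustration. It only reads values; it does not change any clocks.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi: GPU index, name, current performance
# state (P0, P2, ...), and current SM and memory clocks in MHz.
QUERY_FIELDS = ["index", "name", "pstate", "clocks.sm", "clocks.mem"]


def parse_gpu_rows(csv_text):
    """Turn nvidia-smi CSV output (no header, no units) into a list of dicts."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(record) != len(QUERY_FIELDS):
            continue  # skip blank or malformed lines
        rows.append(dict(zip(QUERY_FIELDS, (value.strip() for value in record))))
    return rows


def query_gpus():
    """Run nvidia-smi once and return one dict per GPU (assumes it is on PATH)."""
    command = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)
```

Calling `query_gpus()` while tasks are crunching should show each card's state and clocks, which can be compared against what GPU-Z or Nvidia Inspector reports.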

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

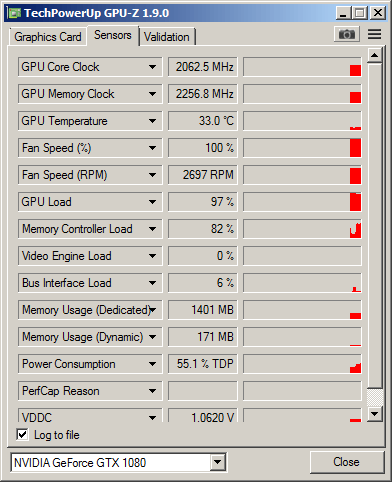

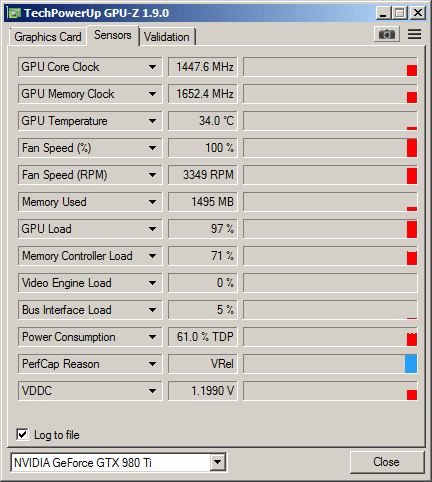

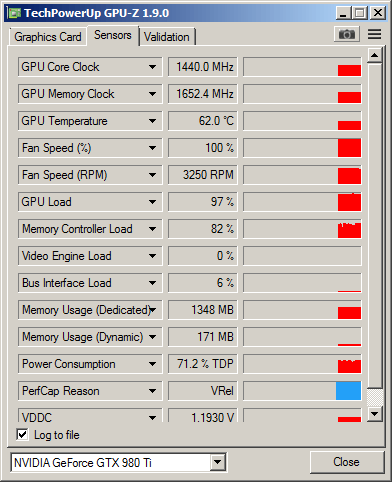

Now this is weird, on this screen, it says the GPU core clock is running at 2062.5, and the memory clock at 2256, still with a temp around 30 at 100% fan. Well, I am running 4 tasks concurrently, running one core per task; what parameters do you suggest I set the card for? And are you running Precision X OC or 16, and are you mixing series in your system as well? I couldn't find a way to get the new version to be happy with the 9 series cards, and EVGA was useless. Suggestions are appreciated. 980Ti that I Hybrid'ized, and one that is still running on air: Do these appear that they are working up to their full potential? I don't want to take anything to the bleeding edge, but do want them all to be working and not loafing.. ;-) *edit* Also replaced the screenshot of the 1080 with another one that is sized smaller, and watched it for a little bit. It bounces between the high 30s and the mid 50s in TDP.

|

petri33 Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

> Now this is weird, on this screen, it says the GPU core clock is running at 2062.5, and the memory clock at 2256, still with a temp around 30 at 100% fan.

These pictures and the needed command line options are from the Windows world. The last two pictures seem OK. 60-70% TDP shows that they are running quite well. I'll leave it up to Mike or another Windows guru to help you.

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones |

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

> Do these appear that they are working up to their full potential? I don't want to take anything to the bleeding edge, but do want them all to be working and not loafing.. ;-)

~70-75% of TDP for me usually results in satisfactory throughput versus heat/balls-to-the-wall. 'Full potential' is a shifting target right now.

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

> Can you stick the hybrid's pump and radiator fan on another power source ?

I removed the radiator fan, before Jayz said he did it (lol), and put a Mag lift 120 from Corsair on there and connected it to the mobo. So the card only had the stock fan and pump motor on the fan pins of the GPU, and it still underclocked. Can I remove the pump power pin from the card and put it on the motherboard? Maybe, if there was a fan pin close by, but usually those are near the GPU PCI slot. Probably better to go for a Molex with a 3 pin (I haven't found a Molex to 4 pin). That might work, but I'm thinking it might not be worth it.

I think the takeaway message is that 8 pin 10x0s shouldn't be modified for hybrids, as they lack sufficient power to support those (which surprises me, since EVGA now sells a 1080/1070 hybrid kit). EVGA on their own decided to make their "official" hybrids from the FTW GPU, which has 8+8 pin connectors. Why??? Why not their founders or gamer's edition like they have for the 900s? Again, I think they realized it was a power issue (I'm guessing there, but it makes sense).

Ok, I'll get off my soap box.

Zalster

|

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

Al, are you running stock, petri or SoG? That will help in figuring out how many to run. |

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

Looks like SoG on his 1080

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

> Looks like SoG on his 1080

Ah, yes I see it now... 16 minutes per work unit, or effectively 4 minutes each with 4 running at a time. That's pretty good considering he's not using any command lines. He might be able to squeeze out more with a better command line, but that would require testing. |
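
The arithmetic behind that estimate can be sketched as below. This assumes the 16 minutes is the elapsed time of each task while 4 tasks share the card, which is how the post reads; the function names are made up for illustration.

```python
def effective_minutes_per_task(elapsed_minutes, concurrent_tasks):
    """Average wall-clock minutes per finished task when several run at once."""
    if concurrent_tasks < 1:
        raise ValueError("concurrent_tasks must be at least 1")
    return elapsed_minutes / concurrent_tasks


def tasks_per_hour(elapsed_minutes, concurrent_tasks):
    """Throughput of one card: tasks finished per hour at that concurrency."""
    return 60.0 * concurrent_tasks / elapsed_minutes


# Figures from the post: 16 minutes elapsed per work unit, 4 at a time.
print(effective_minutes_per_task(16, 4))  # 4.0 minutes per task
print(tasks_per_hour(16, 4))              # 15.0 tasks per hour
```

Comparing `tasks_per_hour` across different concurrency settings, rather than elapsed time per task, is what tells you whether running more at once actually helps.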

Dr Grey Joined: 27 May 99 Posts: 154 Credit: 104,147,344 RAC: 21

|

> Ha, just encountered the issue with EVGA's Precision OC not being happy with my 980 in place. It was OK until I swapped the cards around.

Thanks Zalster. I tried it but it had no effect on the value shown in GPU-Z. So I opened up PrecisionX16 and dialled up the memory from there and it seems to have taken. |

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

SoG, yep.

|

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

lmao, that's the exact opposite of traditional behaviour, where PrecisionX affects the P0 state and the P2 state needs deeper digging. Anyone got a guess or explanation for that? Maybe on the 10 series they put applications back in the P0 state? Maybe PrecisionX now affects the P2 state?

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

> Looks like SoG on his 1080

I would be happy to run command lines, but I would need a bit of hand holding to do so: What to put in, where to put it, etc. More than happy to try it, just not sure the specifics.

|

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

Al, is that 16 minutes per task or 16 minutes for 4 tasks? How did you come up with that number? |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

> Looks like SoG on his 1080

Al, it looks like you are running SoG on the 1080 but CUDA on the 1060, so you need a slightly different tuning line for each type of app. Richard convinced me it is simpler to add the command line parameters to the app_config file instead of the app-specific text file. That way your settings are preserved across app or Lunatics updates.

For example, this is my app_config.xml AP command line:

<cmdline>-unroll 18 -oclFFT_plan 256 16 256 -ffa_block 16384 -ffa_block_fetch 8192 -tune 1 64 8 1 -tune 2 64 8 1</cmdline>

And this is my app_config.xml MB SoG command line:

<cmdline>-sbs 512 -instance_per_device 2 -period_iterations_num 5 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw</cmdline>

I am not doing CUDA anymore, so I don't have the parameters for that now. You would have to adjust -instance_per_device in the MB command line if you wanted to run more than two tasks per card, and you would have to play around with -period_iterations_num to reduce your desktop lag. The sbs and tune settings would work well for your 1080, as they do for my 1070.

To figure out what is appropriate, go to the /Docs folder in the Seti main folder where Lunatics installed it and read through the AP and MB text files to see the preferred tuning parameters for the card types and generations. The listed settings are more conservative, for the general public, which is expected to just install Lunatics and run it stock. My settings are more aggressive and more appropriate for dedicated crunchers.

Seti@Home classic workunits:20,676 CPU time:74,226 hours A proud member of the OFA (Old Farts Association) |
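
For anyone wanting to see where a `<cmdline>` element sits in a complete file, here is a minimal sketch that builds an app_config.xml around the MB SoG command line from the post above. The surrounding `<app_config>` / `<app_version>` layout follows BOINC's documented format, but the app name `setiathome_v8`, the plan class `opencl_nvidia_SoG`, and the CPU/GPU fractions are assumptions that must be checked against your own installation; `build_app_config` is a made-up helper name.

```python
import xml.etree.ElementTree as ET

# Command line copied verbatim from the post above, including the trailing
# -oclfft_tune_cw, which has no value in the post.
MB_SOG_CMDLINE = (
    "-sbs 512 -instance_per_device 2 -period_iterations_num 5 "
    "-spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 "
    "-oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 "
    "-oclfft_tune_bn 64 -oclfft_tune_cw"
)


def build_app_config(app_name, plan_class, cmdline, tasks_per_gpu, avg_ncpus=1.0):
    """Return app_config.xml text with a single <app_version> entry."""
    root = ET.Element("app_config")
    version = ET.SubElement(root, "app_version")
    fields = (
        ("app_name", app_name),            # assumption: check your client_state.xml
        ("plan_class", plan_class),        # assumption: check your client_state.xml
        ("avg_ncpus", avg_ncpus),          # CPU cores reserved per task
        ("ngpus", 1.0 / tasks_per_gpu),    # 0.5 means two tasks share one GPU
        ("cmdline", cmdline),
    )
    for tag, value in fields:
        ET.SubElement(version, tag).text = str(value)
    if hasattr(ET, "indent"):              # pretty-print on Python 3.9+
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


xml_text = build_app_config("setiathome_v8", "opencl_nvidia_SoG",
                            MB_SOG_CMDLINE, tasks_per_gpu=2)
print(xml_text)
```

The generated text would be saved as app_config.xml in the project's setiathome.berkeley.edu folder. Here `tasks_per_gpu=2` is chosen only to match the `-instance_per_device 2` in the command line.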

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

Hi, just stumbled onto this news story: "PCIe 4.0 will make auxiliary power cables for GPUs obsolete". I didn't believe the story title. This new PCIe 4.0 spec is going to allow at least 300 Watts through the slot connector and maybe as much as 500 Watts. WOW! That will take some engineering, I think. The slot pins under the current PCIe 3.0 spec are in no way capable of passing the 30-40 amps per card that the latest GPUs require. That would mean a heckuva lot of paralleled pins, certainly more than the 5 currently passing power to the GPUs.

Seti@Home classic workunits:20,676 CPU time:74,226 hours A proud member of the OFA (Old Farts Association) |
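
A rough worked version of that current estimate, taking the article's 300 W and 500 W figures at face value: it assumes all of the power is delivered on the 12 V rail, and it assumes about 1.1 A per slot pin, which is what the roughly 5.5 A of 12 V slot current spread over the 5 pins mentioned above works out to. Both numbers are assumptions for illustration, not figures from the thread.

```python
import math

RAIL_VOLTS = 12.0      # assumption: all slot power is drawn from the 12 V rail
AMPS_PER_PIN = 1.1     # assumption: about 5.5 A shared by 5 power pins


def amps(watts, volts=RAIL_VOLTS):
    """Current needed to deliver the given power at the given voltage (I = P / V)."""
    return watts / volts


def pins_needed(watts, amps_per_pin=AMPS_PER_PIN, volts=RAIL_VOLTS):
    """Number of paralleled pins needed if each carries at most amps_per_pin."""
    return math.ceil(amps(watts, volts) / amps_per_pin)


for watts in (300, 500):
    print(f"{watts} W -> {amps(watts):.1f} A, about {pins_needed(watts)} pins")
```

Under these assumptions, 300 W needs 25 A and 500 W needs a little under 42 A, which is in line with the 30-40 amp range in the post and far more pins than the 5 it mentions.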

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> Looks like SoG on his 1080

I found with my GTX 750Ti & GTX 1070, running the current SoG version in the Lunatics Beta v4 installer, that running 1 at a time using the sample "Super clocked x50TI / x60TI" command line gave a huge boost to my output. Running 2 or 3 at a time gave a very slight boost in output, so slight I decided it wasn't worth it, especially after getting a "Finish file present too long" error for the first time while running 3 at a time. Running with the "High end cards x8x x80TI Titan / Titan Z" command line would hopefully give even better output from my GTX 1070, but with the GTX 750Ti in the system with it, that's not possible at this stage.

Grant
Darwin NT |

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> I would be happy to run command lines, but I would need a bit of hand holding to do so: What to put in, where to put it, etc. More than happy to try it, just not sure the specifics.

As mentioned, sample command lines can be found in the ReadMe_MultiBeam_OpenCL_NV_SoG.txt file in the C:\ProgramData\BOINC\projects\setiathome.berkeley.edu\docs folder.

I'm using this one with my GTX 750Ti/GTX 1070:

Super clocked x50TI / x60TI

-sbs 256 -spike_fft_thresh 2048 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 32 -oclfft_tune_cw 32 (*requires testing)

I would like to use this one, but I doubt the GTX 750Ti would appreciate it:

High end cards x8x x80TI Titan / Titan Z

-sbs 384 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64 (*requires testing)

I just copied the command line into the mb_cmdline_win_x86_SSE3_OpenCL_NV_SoG.txt file in the setiathome.berkeley.edu folder, and the next time the application starts, it uses those values. That said, I like the idea Keith is using of settings that don't change when you re-run the Lunatics installer.

Grant
Darwin NT |

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

> I would be happy to run command lines, but I would need a bit of hand holding to do so: What to put in, where to put it, etc. More than happy to try it, just not sure the specifics.

Grant, if you look at the 2 command lines, the only major differences look like the -sbs and the -oclfft_tune_cw values. Why not try increasing the -sbs to 384 in your 750Ti and see how it does. Like they say, 1 step at a time.

Z

|
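
To compare the two sample command lines flag by flag, a small sketch like the following can help. The two strings are copied from Grant's post above; `parse_cmdline` and `diff_cmdlines` are made-up helper names, and the parser simply assumes that a token starting with `-` followed by a letter begins a new option.

```python
def parse_cmdline(cmdline):
    """Map each option (e.g. '-sbs') to the list of values that follow it."""
    options = {}
    current = None
    for token in cmdline.split():
        if token.startswith("-") and token[1:2].isalpha():
            current = token
            options[current] = []
        elif current is not None:
            options[current].append(token)
    return options


def diff_cmdlines(first, second):
    """Return {option: (values_in_first, values_in_second)} where they differ."""
    a, b = parse_cmdline(first), parse_cmdline(second)
    return {
        flag: (a.get(flag), b.get(flag))
        for flag in sorted(set(a) | set(b))
        if a.get(flag) != b.get(flag)
    }


MID_RANGE = ("-sbs 256 -spike_fft_thresh 2048 -tune 1 64 1 4 "
             "-oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 "
             "-oclfft_tune_ls 512 -oclfft_tune_bn 32 -oclfft_tune_cw 32")
HIGH_END = ("-sbs 384 -spike_fft_thresh 4096 -tune 1 64 1 4 "
            "-oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 "
            "-oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64")

for flag, (mid, high) in diff_cmdlines(MID_RANGE, HIGH_END).items():
    print(flag, " ".join(mid), "->", " ".join(high))
```

Besides -sbs and -oclfft_tune_cw, this also reports -spike_fft_thresh and -oclfft_tune_bn as differing, so there are four values that could each be changed one step at a time.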

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> Why not try increasing the -sbs to 384 in your 750Ti and see how it does.

Might give it a go at some stage when I'm 100% again. Not sure what it is, but I'm definitely not firing on all cylinders for the last day or 2. And I have been enjoying just seeing how fast the cards go through the Arecibo work: roughly 4min 30s for the GTX 1070 & 13min 30s for the GTX 750Ti on an average WU. Would be good to be able to make full use of the extra 10 Compute Units the 1070 has over the 750Ti.

Grant
Darwin NT |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.