SETI Farm deployment


| Author | Message |

|---|---|

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

> If I have 100 hosts, of which none are returning work ... why do I need to have more? This is back to the "bad" hosts and ill behaved people consuming resources ... if my 100 hosts are all returning work, well tomorrow, let me add another 100 ... if they all return work ... and so on.

Ah, but no one said anything about 'how many hosts you can register in a period'. Yes, I can see some sense in capping the number of hosts registered per day, perhaps. Yet it would also be pretty simple for Berkeley to automatically purge all hosts that were registered, say, two weeks earlier and have not returned a single result.

|
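The purge rule suggested above can be sketched as follows. This is only an illustration under stated assumptions: the `Host` record, its field names, and the sample dates are hypothetical and are not taken from the actual BOINC database schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Host:
    # Hypothetical record; the real host table has different columns.
    host_id: int
    registered: datetime
    results_returned: int


def hosts_to_purge(hosts, now, grace=timedelta(days=14)):
    """Return hosts registered at least `grace` ago that never returned a result."""
    cutoff = now - grace
    return [h for h in hosts if h.registered <= cutoff and h.results_returned == 0]


# Made-up sample data for illustration only.
now = datetime(2005, 1, 15)
hosts = [
    Host(1, datetime(2004, 12, 1), 0),   # old and never returned work: purge
    Host(2, datetime(2004, 12, 1), 12),  # old but productive: keep
    Host(3, datetime(2005, 1, 10), 0),   # new, still inside the grace period: keep
]
purged_ids = [h.host_id for h in hosts_to_purge(hosts, now)]
print(purged_ids)
```

The grace period is a parameter, so a project could tie it to something other than two weeks without changing the rule itself.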

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

The point is that if they consume the number space, we have to do odd things to "recycle" the numbers. So, if you have hosts that are gobbling up the IDs, soon you have to scan for IDs that were never used with a result so you can make a list of those that can be reused. But that opens you up to the possibility that you may make a mistake and essentially "taint" your data because you don't have perfect traceability. So, it is best to limit the number you can generate by accident. |

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

> The point is that if they consume the number space we have to do odd things to "recycle" the numbers. So, if you have hosts that are gobbling up the IDs, soon you have to scan for IDs that were never used with a result so you can make a list of those that can be reused. But that opens you up to the possibilities that you may make a mistake and essentially "taint" your data because you don't have perfect traceability.

Oh, please don't tell me that there is an artificially imposed number space limit? For c**** sake, even a simple datatype like a long integer would provide for 2,147,483,648 hosts, hundreds of times what classic ever had.

|

Prognatus Joined: 6 Jul 99 Posts: 1600 Credit: 391,546 RAC: 0

|

IMO, the best and most logical way is to verify that the new host is a genuine and unique machine. If this isn't possible, I support Falconfly's idea about deleting non-productive hosts. I also support Paul D. Buck's idea about a max number of hosts per account, provided that big companies/organizations may apply for a larger quota. Cheaters should also be hunted down more aggressively, IMO, and the punishment for cheating should be more severe. No compromise and no second chance. |

|

John McLeod VII Joined: 15 Jul 99 Posts: 24806 Credit: 790,712 RAC: 0

|

> Oh please don't tell me that there is an artificially imposed number space limit?

True, but some users are having difficulties and are burning through host IDs at the daily WU quota rate for each host that they have. If maintained, this could exhaust 2^32 (a 32-bit unsigned long holds 4,294,967,296 distinct values). Of course, if the size of the number were increased to 2^128, we would never run out.

BOINC WIKI |

|
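To put the number-space concern into rough figures, the arithmetic can be sketched as below. The rate of 100,000 new host IDs per day is purely a hypothetical assumption chosen for illustration; the thread does not state an actual rate.

```python
def years_to_exhaust(id_bits, ids_per_day, already_used=0):
    """Years until an ID space of 2**id_bits is used up at a constant daily rate."""
    remaining = 2 ** id_bits - already_used
    return remaining / ids_per_day / 365.25


# Hypothetical runaway rate; not a measured figure.
ASSUMED_IDS_PER_DAY = 100_000

for bits in (31, 32):
    years = years_to_exhaust(bits, ASSUMED_IDS_PER_DAY)
    print(f"2^{bits}: about {years:.1f} years at {ASSUMED_IDS_PER_DAY} IDs/day")
```

Even at that assumed rate, a 32-bit space would last for decades, which suggests the practical cost of runaway host creation lies elsewhere than in the ID space itself.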

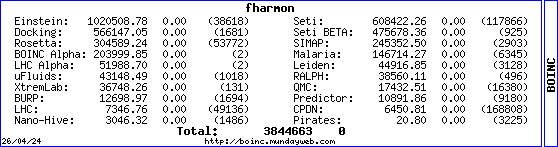

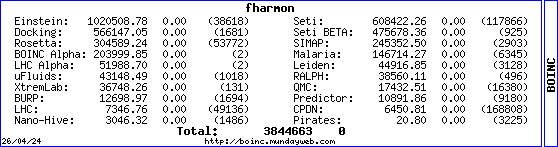

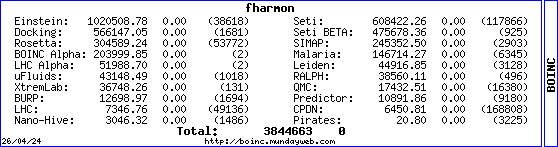

SURVEYOR Joined: 19 Oct 02 Posts: 375 Credit: 608,422 RAC: 0

|

How about a limit of, say, 100 +/- hosts per account?

Fred

BOINC Alpha, BOINC Beta, LHC Alpha, Einstein Alpha

|

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

> How about a limit of say 100 +/- host per account?

Not enough, IMO. The OP already indicated he had 350+ hosts. I've already cited two classic accounts with ~1000 hosts each.

|

|

Berserker Joined: 2 Jun 99 Posts: 105 Credit: 5,440,087 RAC: 0

|

The current highest host number reported in the XML stats (as of yesterday) is 1,132,249, so we're some considerable way off consuming the number space (assuming it's 2^31 or 2^32). However, the more hosts you have in a database, the bigger the tables get, so it's a database problem rather than a number space problem.

Hard-limiting an account to a fixed number of hosts (especially if that number is as low as 100) is a bad idea. While this would not affect the vast majority of users, it would certainly alienate some of the bigger school/company farms that may want to participate in these projects now or in the future.

Limiting the number of new hosts per day is more reasonable, as that would put a control on the runaway creation of new hosts (either accidental or deliberate) without preventing the bigger 'farms'. The key there is balancing the limit to stop runaway creation while at the same time limiting the impact on big farms adding lots of hosts (e.g. using one of the remote deployment scripts above, or technologies such as SMS for deployment). This could be combined with purging of hosts that had not returned a single result within a reasonable time (e.g. double the workunit deadline).

Also, just FYI, Ministry of Serendipity have been accused of cheating numerous times, and as a result were checked out thoroughly (and given a clean bill of health) by Berkeley, at a time when several less reputable 'participants' were getting their accounts reset. Whether we will ever see the likes of them moving to BOINC is questionable, but for the benefit of the science (and a little boost to the stats too), I certainly hope so.

Stats site - http://www.teamocuk.co.uk - still alive and (just about) kicking. |

|
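A per-account daily cap on new hosts, as proposed above, might look roughly like this. It is a minimal in-memory sketch: the class name `DailyHostCap`, the account identifier, and the limit of 3 are all hypothetical, and a real project would keep these counts in its database.

```python
from collections import defaultdict
from datetime import date


class DailyHostCap:
    """Allow at most `max_new_hosts_per_day` new host records per account per day."""

    def __init__(self, max_new_hosts_per_day):
        self.max_new = max_new_hosts_per_day
        self._counts = defaultdict(int)  # (account_id, day) -> hosts created that day

    def try_register(self, account_id, day):
        key = (account_id, day)
        if self._counts[key] >= self.max_new:
            return False  # cap reached: refuse to create another host record today
        self._counts[key] += 1
        return True


# Made-up account and dates for illustration only.
cap = DailyHostCap(max_new_hosts_per_day=3)
today = date(2005, 1, 15)
results = [cap.try_register("farm-42", today) for _ in range(5)]
next_day = cap.try_register("farm-42", date(2005, 1, 16))
print(results, next_day)
```

Because the counter is keyed by day, a large farm is only slowed down rather than blocked: it can keep adding hosts on the following days, while a runaway client is stopped after the first few registrations.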

SURVEYOR Joined: 19 Oct 02 Posts: 375 Credit: 608,422 RAC: 0

|

Maybe a limit on how many hosts can be added in a day.

Fred

BOINC Alpha, BOINC Beta, LHC Alpha, Einstein Alpha

|

|

bobb2 Joined: 5 Feb 00 Posts: 53 Credit: 380,595 RAC: 0

|

Thanks for all the discussion. It has been very edifying. Here are some current stats:

The max ID for a host is at ~1.2M. The number of hosts that have returned any workunits is around 315K. I removed over 300K 'inactive' hosts from 2 users, and the total number of registered hosts for seti_boinc was reduced from >800K to ~590K. There are a bunch of users with >200 inactive hosts, 4 with >1000 and 2 with >10000.

The issue is that in the future, when we have around 1M hosts being scanned by various queries, it would be better not to scan 40% nuisance host entries (it was around this value prior to the deletions), since that would impact valid users. I know what I would like the db response time for queries to be, and I would hate to have any degradation due to wasteful entries. (That is why I said that in the future it could impact us.) I feel that tuning the db to handle waste as a general rule is not what we are about, and so we may want to deal with this in a more automatic way than we did with cheaters or potential DoS in classic. That is why I asked for input.

Thanks, Bob B |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

I guess one of my questions is this: are you preserving the host data as you move data off the BOINC database to the science database? If not, then a more aggressive stand on whacking out inactive hosts can be tolerated. But I see no reason against a daily cap, and to be honest, I would also suggest that for MOST situations a number like 10 is far more realistic ... For those situations where we do have a large group coming over from classic, well, the first question is whether they are going to move over or not. If not, not a problem; if so, then I am sure that they could be accommodated. |

|

SURVEYOR Joined: 19 Oct 02 Posts: 375 Credit: 608,422 RAC: 0

|

bobb2, thanks for the return info.

Fred

BOINC Alpha, BOINC Beta, LHC Alpha, Einstein Alpha

|

|

Berserker Joined: 2 Jun 99 Posts: 105 Credit: 5,440,087 RAC: 0

|

> I guess one of my questions is this, are you preserving the host data as you move data off the BOINC Database to the science database?

I don't see any reason for doing that personally. SETI Classic certainly didn't. You'd want to know who returned a result in case it's the 'wow!' signal and you want to give them credit, but which PC they used to return it isn't very useful (by the time you get around to realising it's the 'wow!' signal, that PC has probably gone anyway).

> If not, then a more aggressive stand on whacking out inactive hosts can be tolerated. But, I see no reason that a daily cap, and to be honest, I would also suggest that for MOST situations a number like 10 is far more realistic ...

Whacking out old hosts happens anyway. On a typical day, about 250 hosts currently disappear due to merging. That's not a big number, but since it happens anyway, whacking out a few more isn't going to do any harm.

I agree with you that 10 hosts/day is fine for the average user, but a surprising number of users do not qualify as average. Of the users who don't hide their hosts (and quite a few of the bigger farms are likely to hide theirs due to security concerns, valid or not), 581 users have 10 or more active hosts (active = received credit in the last 14 days; other definitions may vary). 17 users have 50 or more active hosts, and 5 have 100 or more.

> For those situations where we do have a large group coming over from classic, well, first question are they going to move over or not. If not, not a problem, if so, then, I am sure that they could be accommodated.

True, but given the numbers above (which are likely to grow significantly when Classic finally shuts down), the 'accommodation' needs to be reasonably unobtrusive, and if that happens, what's to stop someone abusing that system?

Stats site - http://www.teamocuk.co.uk - still alive and (just about) kicking. |

|
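The "active host" counts quoted above (active meaning credit received in the last 14 days) could be derived along these lines. This is a sketch under assumptions: the `(user_id, last_credit_time)` input shape and the sample data are hypothetical and do not reflect the real XML stats format.

```python
from collections import Counter
from datetime import datetime, timedelta


def active_host_counts(hosts, now, window=timedelta(days=14)):
    """Count, per user, the hosts that received credit within `window` of `now`.

    `hosts` is an iterable of (user_id, last_credit_time) pairs.
    """
    cutoff = now - window
    return Counter(user for user, last_credit in hosts if last_credit >= cutoff)


def users_at_or_above(counts, threshold):
    """Number of users with at least `threshold` active hosts."""
    return sum(1 for n in counts.values() if n >= threshold)


# Made-up sample data for illustration only.
now = datetime(2005, 1, 15)
hosts = [
    ("alice", datetime(2005, 1, 14)),
    ("alice", datetime(2005, 1, 10)),
    ("alice", datetime(2005, 1, 5)),
    ("alice", datetime(2004, 11, 1)),  # stale host: outside the 14-day window
    ("bob", datetime(2005, 1, 12)),
]
counts = active_host_counts(hosts, now)
print(counts["alice"], counts["bob"], users_at_or_above(counts, 2))
```

Changing `window` shows how sensitive the "users with N or more active hosts" figures are to the definition of active, which is the caveat raised in the post.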

ric Joined: 16 Jun 03 Posts: 482 Credit: 666,047 RAC: 0

|

BOINC is only running on my private farm, on M$-based OS hosts. When I don't want to support project x, whether for reason xyz or simply because project y is offering work, I detach the whole farm (not a single host) from the project. Only recent clients offer a "no more work" option. Later, depending on my mood, I may reattach the "farm" to project x. It's not forbidden to detach from and reattach to a project; it's part of the design of BOINC.

A limit on newly attached hosts (per day)? Why not, if it is reasonable. But if I have to wait "several days" until the last host of the farm can be attached, I will think it's too complicated and too user-unfriendly, so it would be better not to support a perhaps "paranoid" project.

The other thing is that when a major service pack comes out, half the farm is updated/patched with this OS "product upgrade". Usually a major service pack also "creates" new host entries. Will I be punished by the project only because I follow the recommended OS updates?

Everything can be done. A wise person would think about what would happen when this or that is done/implemented. A solution that restricts the majority of "normal" users in order to curb abuse by a really small number of "outside normal" users is, IMHO, a bad solution. I hope that sanity is included in whatever solution is chosen. |

|

John McLeod VII Joined: 15 Jul 99 Posts: 24806 Credit: 790,712 RAC: 0

|

One possibility would be to base a client record on the host CPID. You don't get a new record unless the host CPID reported is different. This might cut down on the large number of new hosts in the database. Note that I am not referring to the user CPID.

BOINC WIKI |

|
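The idea of keying host records on the host CPID can be sketched as a simple get-or-create lookup. The `HostTable` class and the CPID strings below are hypothetical; this only illustrates the rule "no new record unless the reported CPID is different" and says nothing about how the server actually stores hosts.

```python
class HostTable:
    """Hand out one host ID per distinct host CPID."""

    def __init__(self):
        self._by_cpid = {}   # host CPID -> host ID
        self._next_id = 1

    def get_or_create(self, host_cpid):
        # A known CPID reuses its existing record instead of creating a new one.
        if host_cpid in self._by_cpid:
            return self._by_cpid[host_cpid]
        host_id = self._next_id
        self._next_id += 1
        self._by_cpid[host_cpid] = host_id
        return host_id

    def __len__(self):
        return len(self._by_cpid)


# Made-up CPIDs for illustration only.
table = HostTable()
first = table.get_or_create("cpid-aaa")
reattached = table.get_or_create("cpid-aaa")  # same machine detaches and reattaches
other = table.get_or_create("cpid-bbb")
print(first, reattached, other, len(table))
```

One consequence of this design is that any machines reporting the same CPID would collapse into a single record, so it depends on the CPID being unique per physical host.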

Berserker Joined: 2 Jun 99 Posts: 105 Credit: 5,440,087 RAC: 0

|

I'm not sure on what basis the host CPID is produced, but on the surface that would seem to be a reasonable approach. The only issue I can see is how the host CPID is generated and stored. For example, if I took a 'standard' pre-configured BOINC installation and deployed it using one of the remote deployment scripts, I wouldn't want all the hosts to end up with the same CPID.

Also, while that would significantly reduce the chances of accidentally generating large numbers of hosts, it would do little to prevent someone determined to attempt a DoS-like attack on a project.

Stats site - http://www.teamocuk.co.uk - still alive and (just about) kicking. |

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

Is there such a thing as a host CPID? I can't see one. Where is it?

|

|

Berserker Joined: 2 Jun 99 Posts: 105 Credit: 5,440,087 RAC: 0

|

Yes, there is, although it's not well advertised. Most projects should support it by now, so provided you have a 4.4x client, it'll be in your client_state.xml.

Stats site - http://www.teamocuk.co.uk - still alive and (just about) kicking. |
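For anyone wanting to look the value up, something like the following could pull it out of the file. Treat this as a sketch under assumptions: the element name `host_cpid`, its nesting, and the sample document are assumptions made for illustration, and a real client_state.xml from a 4.4x client may be laid out differently or may not parse as strict XML.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed stand-in for a client_state.xml file.
SAMPLE_CLIENT_STATE = """<client_state>
  <host_info>
    <domain_name>example-host</domain_name>
    <host_cpid>0123456789abcdef0123456789abcdef</host_cpid>
  </host_info>
</client_state>"""


def read_host_cpid(xml_text):
    """Return the text of the first <host_cpid> element, or None if absent."""
    root = ET.fromstring(xml_text)
    node = root.find(".//host_cpid")  # assumed element name; searched at any depth
    if node is None or node.text is None:
        return None
    return node.text.strip()


host_cpid = read_host_cpid(SAMPLE_CLIENT_STATE)
missing = read_host_cpid("<client_state></client_state>")
print(host_cpid, missing)
```

Searching at any depth rather than at a fixed path keeps the sketch tolerant of differences in where the element sits inside the file.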

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.