2nd: Credit for Clients (Credit errors)


| Author | Message |

|---|---|

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

This follows on from Credit for Optimised Clients and looks to be very much in discussion still across various threads!

In summary: The present benchmark run by boinc can be badly inaccurate for some systems. Or rather, it may well accurately run the benchmark, but the results are not accurate for awarding credit for real project WUs. So the same WU will get very different claimed credit depending on what machine is used. Users complain about this.

And yes, boinc should be all for the science. However, the credits are an incentive to many users and there has to be a sense of 'fair play'! A sense of 'competition' will always sway people to 'push the game' a little. For example: Here's how to Get More Credit.

From another thread, there was very strong sentiment that SCIENCE done rather than CPU WORK done should be credited. Hence, optimised clients should get the same credit for a WU as do unoptimised clients that take twice as long to run the same WU.

Possible fixes (as discussed elsewhere and my comments)

1: Make the benchmark code more accurate. I believe this is difficult and not practical. I'll explain later.

2: Use an 'averaging factor' that adjusts claimed credit as estimated by the benchmark to match the awarded credit. Possible and simple but this would be a kludge fix. Also, different types of WU would cause the 'averaging factor' to hunt between a number of values.

3: Add accounting code into the clients to directly add up the 'SCIENCE done'. Messy, expensive and error prone!

4: Run a short reference WU to characterise the host system to accurately benchmark (and validate) that system. This is the more thorough way to assess how much credit to grant for the science done. However, there would need to be a shortened reference WU for each project, and care taken that the WU still exercised the host system in a representative way. For s@h, I believe this could be easily done. (Use a noisy WU that exceeds the output limits after just one full set of FFTs and a gaussian check.)

Why is the present benchmark so unrepresentative for s@h? Well, my guess is that it exercises only a very small part of a host system in one specific type of work only. Processing s@h WUs is dependent on aspects of the CPU architecture and host system that the benchmark does not measure. So a real WU is then processed and the performance of the FPU, pipelining, CPU cache(s), memory, client compilation options, and others all come into play in ways that the benchmark never measured.

My vote goes with option '4' because it makes a direct measurement and the reference WU can also be used as an immediate system test to check against host system corruptions. Option '2' is an indirect but simple fix that patches over the symptoms rather than fixing the cause. It might give a reliable fix, or it might just cause more confusion...

The real question is what is being discussed amongst the developers. Or is this all forgotten on the back burner?? Any developers out there to comment? (Or has this already been fixed? :) )

Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

W-K 666 Joined: 18 May 99 Posts: 19730 Credit: 40,757,560 RAC: 67

|

There was, recently, an answer to the short WU option, and the answer was that it is not possible to have shorter seti units, and that a shorter unit would not test the computer system anyway, as the benchmark needs to take into account the L2 cache size and use of main memory if needed with a full size unit. I.e. on a computer with a smallish L2 cache the shortened WU may fit into the L2 cache but a full size one wouldn't, and the full unit would take longer than indicated by this benchmark. But a variation on 2: why can't they just record how long it takes each computer to complete all the units it processes and calculate the average for that computer? Then compare that average with the cobblestone computer to do any further calculations. Andy |

|

John McLeod VII Joined: 15 Jul 99 Posts: 24806 Credit: 790,712 RAC: 0

|

But a variation on 2: why can't they just record how long it takes each computer to complete all the units it processes and calculate the average for that computer? Then compare that average with the cobblestone computer to do any further calculations.

Because WUs come in different sizes even for the same project and application. LHC has three different size WUs that are completely indistinguishable, but they are a factor of 10 different in crunch time each step from smallest to middle, and from middle to largest, for a factor of 100 total between the smallest and largest. Add to this that any of those could end early, and you have a factor of about 1000 between shortest and largest. S@H WUs already take different processing times, from a 5 minute noise WU through to full crunch time on high and low angle WUs. S@H is going to add AstroPulse and Parkes observations, both of which will take different times. One of the Shh projects has hundreds of different processing times and deadlines that they know about in advance. And while that one is an aberration, there are at least two others that have very different processing times that they don't know how to identify in advance. Averages across WUs for the same project and application can be quite meaningless.   BOINC WIKI |
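The point about averages can be illustrated with a minimal sketch. The run times and WU mixes below are invented for illustration; only the factor-of-10 steps between WU classes come from the post above. The same host looks several times "slower" purely because it happened to draw a different mix of work.

```python
from statistics import mean

# Hypothetical crunch times in hours for ONE host. The three classes differ
# by a factor of 10 per step, as described for LHC; the values are invented.
SMALL, MIDDLE, LARGE = 0.1, 1.0, 10.0


def average_runtime(batch):
    """Mean run time for a batch given as {runtime_hours: number_of_WUs}."""
    times = [hours for hours, count in batch.items() for _ in range(count)]
    return mean(times)


# Same host, same application, two different (hypothetical) mixes of WUs.
mostly_small = {SMALL: 8, MIDDLE: 1, LARGE: 1}
mostly_large = {SMALL: 1, MIDDLE: 1, LARGE: 8}

avg_small = average_runtime(mostly_small)
avg_large = average_runtime(mostly_large)
print(f"mostly small WUs: {avg_small:.2f} h average")
print(f"mostly large WUs: {avg_large:.2f} h average")
print(f"apparent change in speed: {avg_large / avg_small:.1f}x")
```

Nothing about the host changed between the two batches, so a per-host average of raw run times would only be usable if the WU class were known in advance.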

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

There was, recently, an answer to the short WU option, and the answer was that it is not possible to have shorter seti units, and again would not test the computer system as the benchmark needs to take into account the L2 cache size and use of main memory if needed with a full size unit... Sorry, I was too brief in my description. By 'short WU' I mean a completely normal full size s@h WU, but one specially chosen so that enough spikes, pulses and gaussians are output so that the WU completes in a short time. This deliberately chosen noisy WU is then used as the reference WU. Boinc runs the s@h client to process the reference WU as normal. Hence, the system is characterised by the real work example. So systems with small CPU caches and slow memory get a correctly lower rating than they do at present. Conversely, optimised systems and optimised clients get a respective higher rating. Taking an average credit claim as in option '2' indirectly is loosely doing what the reference WU directly measures. (And option '2' only works properly if you can assume the WUs to be of the same type.) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

There was, recently, an answer to the short WU option, and the answer was that it is not possible to have shorter seti units, and again would not test the computer system as the benchmark needs to take into account the L2 cache size and use of main memory if needed with a full size unit...

The problem I have with that is that unless it is a reasonably long WU it won't test the true processing ability of a system. No way am I going to allocate 1 to 2 hours of CPU every week or two weeks as a benchmark, for no credit, or for credit but for work that I know has been run tens of thousands of times already. Now, if I have a problem with 1 or 2 hours, what the heck will guys doing 12-24 hr WUs on PIIIs have to say? It probably won't be very polite... The maximum benchmark I guess that most users would accept every week or two would be 10 minutes, on a PIII 350MHz that is. Ah, wait a minute.... that translates to the ~1min I have to run now.

|

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

By 'short WU' I mean a completely normal full size s@h WU, but one specially chosen so that enough spikes, pulses and gaussians are output so that the WU completes in a short time... Note that we are only interested in accurately characterising how a user's system processes a WU. All that is needed is to run a representative sample of the processing. For s@h, we have the same data repeatedly checked at different chirps. Running a noisy WU that completes after just one chirp run should exercise enough of the processing to give a reliable measure. Just the one chirp run should complete quickly enough. And a rerun should only be necessary when something is noticed to have changed. And so, the reference WU calibration and check run should not be too onerous. It may well even pay for itself in quickly alerting users whether or not their systems give accurate good results. (Could this be a new competitor to the prime95 torture test? :) ) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Why not. If: 1. you get credit for doing the work, and 2. it properly calibrates your system, and 3. proves that the system is working correctly would you have an objection? Running the Reference Work Unit every two weeks or once a month is a small price to pay. Heck, it is still a small price if it is run every week. But, if it is run once a month, the other times comparison with the other returned results would allow minor adjustments or detection of a change in the system. I mean, if you should have completed a work unit in 2 hours, but it took 4, then it should be obvious that something bad happened. |
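The "should have taken 2 hours, but it took 4" check can be sketched in a few lines. This is only an illustration: the function name and the 25% tolerance are assumptions, not anything BOINC defines.

```python
def runtime_check(expected_seconds, actual_seconds, tolerance=0.25):
    """Compare an actual run time with the expected one.

    Returns (ratio, flagged). `flagged` is True when the run time is more
    than `tolerance` away from the expectation. The 25% default is an
    arbitrary illustrative threshold.
    """
    ratio = actual_seconds / expected_seconds
    flagged = abs(ratio - 1.0) > tolerance
    return ratio, flagged


# The example from the post: expected 2 hours, took 4 hours.
ratio, flagged = runtime_check(expected_seconds=2 * 3600, actual_seconds=4 * 3600)
print(ratio, flagged)
```

A flagged result would be the cue to rerun the reference WU rather than waiting for the next scheduled calibration.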

W-K 666 Joined: 18 May 99 Posts: 19730 Credit: 40,757,560 RAC: 67

|

Sorry it's been a long time until this reply, but would this noisy normal length unit still test the system? Surely in processors with a smallish L2 cache, during the processing of a 'normal' unit there have to be pauses whilst the CPU accesses main memory, or HDD, for more of the data. This could happen many times on a Celeron and the noisy unit would not test for this. Andy |

|

Babyface uk Joined: 28 May 03 Posts: 86 Credit: 1,972,184 RAC: 0

|

Feel free to blow this idea out of the water, but why not just base credit on the amount of time it took to do the WU? So faster computers get less credit but more often, and slower computers get more credit but less often. It sorts out the hyperthreading issues with credit: you just get 2 x however much time it took to do the 2 WUs. I can understand the idea of benching computers to work out their power, but as I said above, more powerful machines will crunch quicker anyway, so less time means less credit. Also, lose the middle-of-3-results credit and just give credit to each computer as it returns the WUs, once you get the 3 matching results. As I said, feel free to pick holes in anything you read above. babyface uk |

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

Paul, EXCELLENT idea! Even though we are calibrating and validating the user's system, indeed why not award credit for however the benchmarking is done? This also makes sense to avoid biasing against, or for, other projects that will have different reference WU run times. I think very few people would begrudge proper benchmarking if: 1: It validates a user's system and so gives greater confidence of good results; 2: It makes for more accurate scheduling (see here); 3: It makes for a fairer credits calculation. Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

Sorry, I was too brief in my description. Yes exactly regarding the CPU pauses whilst main memory is accessed. All that is needed of the reference WU is that it runs through at least once for all the client's major processing routines. For s@h, this happens at least once per chirp rate. Hence, we can get an accurate characterisation for a normal (noisy) WU that completes very quickly. Aside: The L2 cache killer is the large FFTs that are run by the s@h client. We just choose a WU whereby the cache gets hit in proportion to that for a normal non-noisy WU. (There's lots to choose from. A database query or two should list good reference candidates.) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

... why not just base credit on the amount of time it took to do the WU, so faster computers get less credit but more often and slower computers get more credit but less often, ...

Sorry but that oversimplifies things enough to become unfair. If all WUs required the same compute resource, and if all hosts were the same architecture, then that might work. However, we have WUs that vary in size and vary in the CPU effort required to process them. We have users that have a mix of poor performance crippled CPU cache systems through to highly optimised super-systems. Also in the mix, more recently we now have optimised s@h clients that process the SCIENCE more efficiently. Either we have accurate credits (and accurate scheduling), or we abandon the credits idea. I think getting the credits right will keep most people happy and provide the greatest incentives. Aside: The old s@h classic system of awarding fixed credit regardless of whether you got an 'easy' or 'hard' WU led to some very creative 'strategies' to boost a user's scores. So much so that it could have distorted the science if left unchecked. (And hey, it's always good to ask :) ) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

ML1 Joined: 25 Nov 01 Posts: 21773 Credit: 7,508,002 RAC: 20

|

... However, we have WUs that vary in size and vary in the CPU effort required to process them. We have users that have a mix of poor performance crippled CPU cache systems through to highly optimised super-systems. Also in the mix, more recently we now have optimised s@h clients that process the SCIENCE more efficiently. Upon further thoughts and reflection, I think that running a reference WU specific to each project to characterise the host performance for that project for the SCIENCE contribution is the way to go. 'Benchmarking' in this way by characterising the host system, you are directly measuring the performance of the user's hardware running the client software for real WU data for returning a known amount of science results. This will give a far more accurate measure than the existing much more limited artificial FLOPS/IOPS benchmarking. Directly measuring the performance for the particular project also measures any improvements gained by using optimised clients or other system optimisations not measured by the existing benchmark. Accurate benchmarking or characterisation can more fairly calculate what (science) credit should be awarded. Also, it will help the scheduler to more accurately schedule work and deadlines. Are there any arguments or comments AGAINST (or for) the characterisation (reference WU) idea over the existing benchmarking? (What have I missed??) Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |
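One way to read the reference WU idea is as a per-host credit rate measured on real work. The sketch below is a hedged illustration, not a description of any BOINC code: the function names, the fixed credit value of the reference WU, and the run times are all hypothetical. It also assumes that an optimised client speeds up real WUs in the same proportion as the reference WU.

```python
import math


def credit_rate(reference_credit, reference_seconds):
    """Credit per CPU second, measured by timing the reference WU on this host."""
    return reference_credit / reference_seconds


def claimed_credit(rate, wu_seconds):
    """Credit claimed for a real WU that took `wu_seconds` on the same host."""
    return rate * wu_seconds


REFERENCE_CREDIT = 2.0  # hypothetical fixed value assigned to the reference WU

# Stock client: reference WU in 600 s, a real WU in 7200 s (invented numbers).
stock = claimed_credit(credit_rate(REFERENCE_CREDIT, 600), 7200)

# Optimised client on the same hardware: both run times halve.
optimised = claimed_credit(credit_rate(REFERENCE_CREDIT, 300), 3600)

print(stock, optimised, math.isclose(stock, optimised))
```

Under those assumptions both clients claim the same credit for the same WU, which is the "credit for SCIENCE done" outcome argued for above.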

MikeSW17 Joined: 3 Apr 99 Posts: 1603 Credit: 2,700,523 RAC: 0

|

Paul, I think I would still object. I build my own desktop systems and extensively test them as part of the build. Any subsequent behavior changes of my systems would become easily apparent. I don't overclock my systems, not because I have anything against OC'ing, but because I quickly realised (as a novice OC'er) that I was losing more time squeezing out a few percent than I was likely to gain through OC'ing. Add to that the potential future losses due to OC induced failure, and it really wasn't worth it. Also, I feel that my point earlier about what I would accept as benchmark-time per week is more significant than I thought when I wrote it. While I might accept 10 minutes a week of benchmark time on a Pentium M 2.0GHz, what is that going to equate to on a PIII 400MHz? An hour or more a week. I don't see that being acceptable to participants with lower-end systems. Besides, I still feel that Berkeley (and the other projects) get back from each host: the number of results, the CPU time taken, and the platform. Surely with this data, the projects could come up with a better platform based 'average' WU credit calculation than any individual host can ever do.

|

W-K 666 Joined: 18 May 99 Posts: 19730 Credit: 40,757,560 RAC: 67

|

.... Besides, I still feel that Berkeley (and the other projects) get back from each host; the number of results, the CPU time taken, and the platform. Surely with this data, the projects could come-up with a better platform based 'average' WU credit calculation than any individual host can ever do.

The idea of averages is appealing but I don't know that you could base it on platforms. I assume by platform you mean processor; there are just too many variations in other components to even contemplate whole systems. Paul showed in a post several weeks ago the differences he is getting with 2 supposedly identical computers. On inspection he found the computers had different motherboards. Andy |

|

TPR_Mojo Joined: 18 Apr 00 Posts: 323 Credit: 7,001,052 RAC: 0

|

And they all calculate RAC based on recent performance. If this routine in the server code was changed to come up with a per-project per-node "calibration factor" surely this would be fairer/more equitable/more accurate than now (whilst still imperfect)? I believe this routine already calculates factors for each CPU e.g. average turnaround time, quota */-..... So basically under my scheme benchmarks would be run once per machine, when BOINC was installed. That gives the machine a yardstick. Successful results then modify this yardstick by comparing to actual performance, giving sensible benchmark performance, claimed and granted credit etc. The appeal of this method is that, as Paul and others rightly point out, the best benchmark is the science performance of the machine. No compiler issues, platform issues, nothing. Just a rolling measure of how much work the machine is doing, translated to a credit system which is then by definition pan-project and fair/equitable.

|

|

TPR_Mojo Joined: 18 Apr 00 Posts: 323 Credit: 7,001,052 RAC: 0

|

The more I think about it, the more this makes sense. At the moment we have float and integer performance on each host record to compare it to a "cobble computer". As far as I know that's the only reason they are there. And again, as I understand it, these figures are then used by the client to generate a "claimed credit" figure, which is a calculation of benchmarks x seconds spent processing x project fudge factor (constant). So if I take an XP1600+ and an XP3200+, we'll say for simplicity the 3200 is exactly twice as performant as the 1600. Install boinc, run benchmarks. Calculate how many "cobble computers" each is worth; again for simplicity we'll say the 1600 is 1 and the 3200 is 2. Both return a unit. Compare time spent against estimated "cobble computer" time: the XP3200 is now worth 1.8, the 1600 0.9. Claimed credit would therefore be calculated as machine factor x theoretical processing time x actual time x project fudge factor. So the claimed credit is based on the calculated efficiency of each machine against the same yardstick, multiplied by project constants and seconds spent processing. In other words, if we assume the 3200 takes exactly half the time to process the unit, they would claim exactly the same credit. Calculate and store this factor during the RAC calculation, and it will continue getting more accurate, and using the same statistical base as RAC should smooth out any wild variations. It doesn't matter now what I do. I can upgrade the CPUs, have large/small project workunits, change operating system or science client to a more optimised version, and I'll still be comparing apples with apples. Not apples with aardvarks, which is what the current system does. If I then attach both to Predictor (or Einstein, Burp.....) we repeat the process, and store the factors by project. C'mon, somebody shoot a hole in it :)

|
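The XP1600+/XP3200+ example can be worked through in a short sketch. Several things here are assumptions made for illustration: the function names, the 36000 s "cobble computer" time, the host run times, and the smoothing weight `alpha`. The claimed-credit formula uses the simpler reading of the post (machine factor x seconds spent x project constant) and leaves out the "theoretical processing time" term.

```python
import math


def observed_factor(cobble_seconds, actual_seconds):
    """How many 'cobble computers' this host was worth on one returned WU."""
    return cobble_seconds / actual_seconds


def update_factor(current, observed, alpha=0.1):
    """Rolling update of the stored per-project factor.

    `alpha` is a hypothetical smoothing weight; the post only says the
    factor should be smoothed on the same statistical base as RAC.
    """
    return (1 - alpha) * current + alpha * observed


def claimed_credit(factor, actual_seconds, project_constant=1.0):
    """Simplified reading: machine factor x seconds spent x project constant."""
    return factor * actual_seconds * project_constant


COBBLE_SECONDS = 36000  # invented time for the WU on the reference machine

# Invented run times chosen to reproduce the 0.9 and 1.8 from the post.
xp1600 = observed_factor(COBBLE_SECONDS, 40000)
xp3200 = observed_factor(COBBLE_SECONDS, 20000)

print(xp1600, xp3200)
print(claimed_credit(xp1600, 40000), claimed_credit(xp3200, 20000))

# The benchmark yardstick of 2.0 drifts towards the observed 1.8 over time.
print(update_factor(2.0, xp3200))
```

Both hosts end up claiming the same credit for the same unit, and the stored factor moves gradually from the one-off benchmark value towards what the machine actually delivers.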

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Just some notes for several posts. I knew that the computers had different motherboards as I built the systems. What I was surprised with is the HUGE difference in processing times. That was one of the reasons I characterized it and captured the data. The down side was that I could not buy a second MB for several months. With the use of the Actual work being used to characterize the computer, the hardware and software combination would be characterized and would more correctly specify the system against the theoretical Cobble-Computer. So, those people using optimized client programs would, in fact, be correctly rewarded for that use. RAC is hugely dependent on the time of the return of the result and the award of credit. It is not your regular average. As far as running the benchmarks for characterization as often as we do, I am not sure that it is that necessary. With additional work the expectation of the processing time can be derived from the other returned results. In other words, you would return your processing time like now, and when the validator was processing the results it would compare each of the results' processing times and calculate if any were "off". If your computer was returning results with processing times outside of the "window" a correction figure could be sent to change your Cobble-Score. The reason that this is reasonable is that it is unlikely that all four computers returning results would all change at the same time. So, just more thoughts ... The whole point of my original proposal is that the current system is worse than broken ... |
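The validator-side comparison described above could look roughly like the following. This is a sketch under stated assumptions, not BOINC validator code: the function name, the 20% window, the use of the median as the "typical" value, and the four host records are all hypothetical. A Cobble-Score here means "how many cobble computers the host is worth", so run time multiplied by score should come out about the same for every host that crunched the same WU.

```python
from statistics import median


def score_corrections(results, window=0.2):
    """Find hosts whose Cobble-Score looks wrong for this WU.

    `results` maps host name -> (cpu_seconds, cobble_score).
    Returns {host: multiplier} for hosts whose implied work (seconds x score)
    is more than `window` away from the median of the quorum. Multiplying the
    host's score by the multiplier brings it back in line.
    """
    work = {host: secs * score for host, (secs, score) in results.items()}
    typical = median(work.values())
    return {
        host: typical / w
        for host, w in work.items()
        if abs(w / typical - 1.0) > window
    }


# Four hypothetical hosts returning the same WU. Host "d" claims a score of
# 2.0 but took as long as a 1.0 machine, so its score is overstated.
quorum = {
    "a": (7200, 1.0),
    "b": (3600, 2.0),
    "c": (4800, 1.5),
    "d": (7200, 2.0),
}
corrections = score_corrections(quorum)
print(corrections)
```

The median is used because, as the post notes, it is unlikely that all hosts in a quorum change at the same time, so one bad host should not drag the reference value with it.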

|

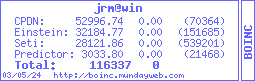

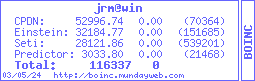

megaman@win Joined: 25 Jan 03 Posts: 55 Credit: 28,122 RAC: 0

|

I agree with Martin (ML1). Option 4 is the most sound for me, but not the short WU; I say make them turn in a full-sized benchmark WU. In this way, SETI/BOINC would also make sure that optimized clients will be returning valid and acceptable results. If you compile your own client, you're going to have to do this anyway, so why not do it and get your client benchmarked? I say full WU.. it's only one, c'mon... perhaps even a normal WU would do: since it will be processed by 3 other clients, you could be benchmarked against the other 3 (given that they have already been benchmarked). By this I mean: I have my brand new compiled client, but since I compiled my own, benchmarks are even less appropriate to measure my whole system (so that fair is fair regarding credit). I then tell BOINC, or BOINC automagically finds out a benchmark is in order, so when downloading a WU for S@H, it tells the server to get it a 'special' WU. This 'special' WU must be sent to 3 other clients, but CLIENTS THAT HAVE ALREADY BEEN BENCHMARKED, nothing that special. After everyone hands in their result, the benchmark for my compiled system can be obtained, and my client could also be checked as to whether it is returning valid results. Say the WU represents 8460 seconds of work for the 'base' system. (I'll explain this 'base system' in just a second). Say Computer 1 takes about 1.1 times as long as the base system; this means it should finish this WU in about 9,306 seconds. Computer 2 takes about 1.2 times as long as the base system; this gives 10,152. Computer 3 takes about 1.5 times as long as the base system; this gives 12,690. Of course Computer1, Computer2 and Computer3 won't finish in exactly that time, but let's say Computer1 takes about 9,350 secs, Computer2 takes about 10,200 secs, and Computer 3 takes about 12,500 secs. C1time/C1factor = 9350/1.1 = 8500, C2time/C2factor = 10200/1.2 = 8500, C3time/C3factor = 12500/1.5 = 8333.33. Averaging, this particular WU time equals 8444.44... Obviously, 8444 isn't 8460, but it is pretty close. [I'll return in 4 hours and will finish this, I promise.]

|
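The arithmetic in the post above can be reproduced directly. The first function uses only the numbers given in the post. The second step, deriving a factor for the newly compiled client, is an assumption about where the unfinished post was heading, and the 7600 s run time is invented.

```python
def estimate_base_seconds(results):
    """Estimate the WU's time on the 'base' system.

    `results` is a list of (seconds, factor) pairs from hosts that have
    already been benchmarked, where factor = host time / base time.
    """
    return sum(seconds / factor for seconds, factor in results) / len(results)


def new_host_factor(new_seconds, base_seconds):
    """Factor for an unbenchmarked host (assumed continuation of the post)."""
    return new_seconds / base_seconds


# Run times and factors exactly as given in the post.
benchmarked = [(9350, 1.1), (10200, 1.2), (12500, 1.5)]
base = estimate_base_seconds(benchmarked)
print(f"estimated base time: {base:.2f} s")

# Hypothetical: the new client finished the same WU in 7600 s.
factor = new_host_factor(7600, base)
print(f"new host factor: {factor:.2f}")
```

A factor below 1 would mean the new client is faster than the base system on this WU, and the same returned result doubles as a validity check against the three benchmarked hosts.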

|

TPR_Mojo Joined: 18 Apr 00 Posts: 323 Credit: 7,001,052 RAC: 0

|

Agreed, but if this calculation were done each time a WU was returned or trickled I think the approach is still valid As far as running the benchmarks for characterization as often as we do I am not sure that it is that necessary. With additional work the expectation of the processing time can be derived from the other returned results. In other words, you would return your processing time like now, and when the validator was processing the results it would compare each of the results processing times and calculate if any were "off". If your computer was returning results with processing times outside of the "window" a correction figure could be sent to change your Cobble-Score. The reason that this is reasonable is that it is unlikely that all four computers returning results would all change at the same time. Nice idea :) Cuts some of the overhead out

Amen to that.

|
