Credit for Optimised Clients


| Author | Message |

|---|---|

ML1 Joined: 25 Nov 01 Posts: 21731 Credit: 7,508,002 RAC: 20

|

<blockquote>In reply to this thread Angus is correct here. Credit theoretically equals FLOPs (times some scalar). Thus two people, one using an unoptimized client and one an optimized, should get the same credit for the same WU. However, the person with the optimized client will be able to do each WU in less time, and so his credit should increase at a faster rate. ... Something is wrong though if the optimized client is claiming LESS credit for a single WU than the [un]optimized one. ... Puffy</blockquote> The Cobblestones are a measure of compute resources donated by a user. With optimised clients, you have two optimisations: 1: More efficient compilation that makes better use of the CPU/FPU and so in effect gives a higher Cobblestones/second rating; 2: More efficient algorithms for getting the job done so that fewer Cobblestones are required per WU (via Benher's & others' programming optimisations). To confuse things further, the benchmarking code cannot be optimised and so does not take into account what client optimisations you're running! The first question, I think, is whether to award people for their compute resources donated or for "WUs done". My first thought is that we should keep it simple and award for donated resource. It's up to Berkeley to follow the work by Benher, Ned, Metod, & TMR and officially release the plethora of better clients. Meanwhile, we can take advantage and kudos for gaining more science per Cobblestone for our efforts. (Even though this means in effect that we get awarded fewer Cobblestones per WU. However, the median scoring system means that we get yanked up in the awards by the slower clients confirming our WUs (:-O)) Good work! Regards, Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

FloridaBear Joined: 28 Mar 02 Posts: 117 Credit: 6,480,773 RAC: 0

|

Just my two cents here. As more people begin using the optimized clients (why wouldn't they), more work units will have the lower requested credit numbers. The big problem that I see is that those still running the standard client are going to start to be awarded less credit, as soon as 2 of the 3 or 4 WU's were crunched with optimized clients. This is going to make some of those users a bit upset. By contrast, since there aren't many running the optimized clients, I can request 12 credits for a WU completed with an optimized client and sometimes receive 30, since I'm almost always the lowest requested credit and therefore am not included in the calculation. As for the "correct" amount of credit, well, I'm not actually doing any more work than I was before; I'm just doing it more efficiently. If the goal was to award credit based on floating point operations, I should get exactly the same credit as I did before, since I'm not doing any more floating point operations--it just takes fewer FLOPS to finish a WU with the new client. In my opinion, the toughest part of this will be the inequity in credit awarded during the transition from the standard to the optimized client.
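The effect described above can be sketched in a few lines. This is only an illustration: the rule used here (drop the lowest and highest claim, then average what is left, which reduces to the median for three results) is an assumption based on how the posts in this thread describe the scoring, not a statement of what the validator actually does. The function name `granted_credit` and the claim values are hypothetical.

```python
def granted_credit(claims):
    """Assumed rule: discard the lowest and highest claim, average the rest.

    With exactly three claims this is simply the median.
    """
    if not claims:
        raise ValueError("need at least one claim")
    ordered = sorted(claims)
    if len(ordered) >= 3:
        ordered = ordered[1:-1]  # drop one lowest and one highest claim
    return sum(ordered) / len(ordered)


STANDARD_CLAIM = 30.0   # hypothetical claim from a standard client
OPTIMIZED_CLAIM = 12.0  # hypothetical claim from an optimized client

# One optimized client among standard ones: the low claim is discarded.
few_optimized = granted_credit([STANDARD_CLAIM, STANDARD_CLAIM, OPTIMIZED_CLAIM])

# Two optimized clients out of three: now the standard claim is discarded.
many_optimized = granted_credit([STANDARD_CLAIM, OPTIMIZED_CLAIM, OPTIMIZED_CLAIM])

print(few_optimized, many_optimized)
```

Under that assumed rule, everyone in the first quorum is granted the standard claim, and everyone in the second is granted the optimized one, which is the transition problem the post is worried about.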

|

AndyK Joined: 3 Apr 99 Posts: 280 Credit: 305,079 RAC: 0

|

I took a look at my last workunits from the original client and some of the WUs processed by the optimized one.

AMD XP 2000
original: ~13,600 s/wu with 33.1 claimed credits => ~6.35 wu/day => ~210.28 claimed credits/day (cc/day)
optimized: ~10,300 s/wu with 28.58 claimed credits => ~8.39 wu/day => ~239.74 cc/day

This does not agree with your opinion, FloridaBear. Both should give nearly equal cc/day, but they don't. In fact I get more cc/day with the optimized client and the optimized BOINC core. If I kept the original BOINC core and only changed the client, I would get a lot less cc/day, as the benchmark results are better with the optimized BOINC core, too.

Andy

PS: Here are the values for my AMD 64 3000+
original: ~9,900 s/wu with 28.75 claimed credits => ~8.73 wu/day => ~250.91 cc/day
optimized: ~6,300 s/wu with 20.6 claimed credits => ~13.71 wu/day => ~282.51 cc/day

Want to know your pending credit? The biggest bug is sitting 10 inch in front of the screen. |
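For anyone who wants to repeat this comparison on their own hosts, the arithmetic behind the cc/day figures can be sketched as below. The helper name `claimed_per_day` is made up for this example, and the first AMD XP figure is read as 13,600 s, which is what the quoted ~6.35 wu/day implies.

```python
SECONDS_PER_DAY = 86_400


def claimed_per_day(seconds_per_wu, claimed_per_wu):
    """Return (work units per day, claimed credits per day) for one host."""
    wu_per_day = SECONDS_PER_DAY / seconds_per_wu
    return wu_per_day, wu_per_day * claimed_per_wu


# (seconds per WU, claimed credit per WU) as quoted in the post above
hosts = {
    "AMD XP 2000, original": (13_600, 33.1),
    "AMD XP 2000, optimized": (10_300, 28.58),
    "AMD 64 3000+, original": (9_900, 28.75),
    "AMD 64 3000+, optimized": (6_300, 20.6),
}

for name, (seconds, claim) in hosts.items():
    wu_per_day, cc_per_day = claimed_per_day(seconds, claim)
    print(f"{name}: {wu_per_day:.2f} wu/day, {cc_per_day:.2f} cc/day")
```

Comparing the two cc/day values for the same machine shows whether the drop in claimed credit per WU is outweighed by the extra WUs completed per day.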

FloridaBear Joined: 28 Mar 02 Posts: 117 Credit: 6,480,773 RAC: 0

|

<blockquote>I took a look at my last workunits from the original client and some of the wus processed by the optimized one. </blockquote> Well, the issue is of far less concern on AMD processors--my requests are lower, but not much. On Pentiums, however, it's a much bigger issue. The benchmark is about 20% faster, but the WUs complete 40-50% faster. My requests are typically 11-14 credits now, vs 18-24 before.

|

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

<blockquote>Just my two cents here. As more people begin using the optimized clients (why wouldn't they), more work units will have the lower requested credit numbers. The big problem that I see is that those still running the standard client are going to start to be awarded less credit, as soon as 2 of the 3 or 4 WU's were crunched with optimized clients. This is going to make some of those users a bit upset.</blockquote> This is why you do not want an optimized BOINC client. The benchmarks need to be the same on every machine -- or at least as close as we can get them. An "optimized" benchmark is one that completes in fewer machine cycles, and that is not an accurate representation of the machine performance. On the other hand, an optimized SETI client (or Einstein client, or whatever project) does more work for a given number of machine cycles, and should be rewarded. |

Benher Joined: 25 Jul 99 Posts: 517 Credit: 465,152 RAC: 0

|

Had a long email conversation with Ingleside about these issues over on developer's mailing list ;) Wrote a solution for myself back when I optimized seti and was getting different "claims" on different host platforms...P3, P4, P2 Celeron, Duron, etc. |

JigPu Joined: 16 Feb 00 Posts: 99 Credit: 2,513,738 RAC: 0 |

<blockquote>This is why you do not want an optimized BOINC client. The benchmarks need to be the same on every machine -- or at least as close as we can get them. An "optimized" benchmark is one that completes in fewer machine cycles, and that is not an accurate representation of the machine performance. On the other hand, an optimized SETI client (or Einstein client, or whatever project) does more work for a given number of machine cycles, and should be rewarded.</blockquote> I was actually thinking about this a little while ago as well. While an optimized client sounds good at first (and actually makes sense from a limited point of view), the end result is that credit claims will just get mucked up. Then again, with unoptimized clients, credit is still technically "mucked up" depending on your point of view. If the BOINC client isn't optimized but the SETI application is, then claimed credit will (or rather, should) drop. Remember the equation for claimed credit: claimed credit = ([whetstone]+[dhrystone]) * wu_cpu_time / 1728000 If Whetstone and Dhrystone (which come from the benchmarks) don't change, but your WU time decreases from an optimization, your client will request less credit. Because of the validator, this isn't a huge concern until a reasonable number of people begin to use the optimized client. Once this happens, less credit is claimed by multiple people for a WU, and so less credit is granted. The math is technically doing the right thing as far as what it measures is concerned (less work is being done because of optimized algorithms, so less credit should be awarded), though it's not doing the right thing as far as what it ideally should be measuring (the same amount of science is being done, so the same amount of credit should be awarded). Therein lies the "mucking up" from running an unoptimized client. 
One WU run on two machines should always claim the same credit since they're both of the same value to Berkeley -- an unoptimized client claims less credit, which isn't in line with the ideal. Optimizing the client doesn't help things either, though, as you pointed out, Ned. Optimizing the client will (should) bring the claimed credit back to what other clients are claiming. The same WU on two machines (even if one is running an optimized client) will receive the same credit, which is precisely what the guys at Berkeley wanted with this whole benchmarking system. It seems perfect on the surface, until the multi-project nature of BOINC is brought to light. So, now we have a benchmark that is 2x as high to counteract SETI's 1/2 CPU time. But we ALSO have a benchmark that is twice as high for a LHC/Einstein/Predictor/CPDN application's SAME CPU time! The result? The same problem seen with an unoptimized client, only instead of affecting SETI's claimed credit, it affects the claimed credit of all your OTHER projects. I haven't seen the effect yet so I can't be certain, though I'm going to be monitoring the amount of credit I receive on LHC (the other project I'm signed up for) to see if I do end up claiming far less than what is normal because of the benchmark scores. Ideally, per-project benchmarks would be used, where whenever an application change/upgrade is detected, a reference WU is downloaded (or, a reference WU is always kept on hand when a user first attaches to a project). This would isolate optimizations to JUST your application. If you download an optimized client, your benchmarks for that project will increase, and the benchmarks for your other projects would remain unaffected. I can't wait for Berkeley to implement a system like this (are they even working on such a thing?) so that the problems of optimized clients become a thing of the past... Puffy |
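To make the equation in this post concrete, here is a small sketch that plugs numbers into it. Only the formula itself comes from the post; the benchmark scores and CPU times are invented for illustration, and the scores are assumed to be in millions of operations per second, which is consistent with the 1,728,000 divisor.

```python
def claimed_credit(whetstone, dhrystone, wu_cpu_time):
    """claimed credit = (whetstone + dhrystone) * wu_cpu_time / 1728000

    whetstone, dhrystone: benchmark scores (assumed: millions of ops/sec)
    wu_cpu_time: CPU time spent on the work unit, in seconds
    """
    return (whetstone + dhrystone) * wu_cpu_time / 1_728_000


# Hypothetical host: both benchmarks at 1000, 10,000 s per SETI WU.
baseline = claimed_credit(1000, 1000, 10_000)

# Optimized science application only: half the CPU time, same benchmarks.
optimized_app_only = claimed_credit(1000, 1000, 5_000)

# Optimized application AND optimized BOINC client (benchmarks doubled).
optimized_both = claimed_credit(2000, 2000, 5_000)

# Another project on the same host: unchanged CPU time, doubled benchmarks.
other_project = claimed_credit(2000, 2000, 10_000)

print(baseline, optimized_app_only, optimized_both, other_project)
```

With these made-up inputs, optimizing only the application halves the claim, optimizing both restores it, and the doubled benchmark then changes the claim on every other project attached to the host, since one benchmark result is shared by all of them.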

jimmyhua Joined: 16 Apr 05 Posts: 97 Credit: 369,588 RAC: 0

|

<blockquote> Ideally, per-project benchmarks would be used, where whenever an application change/upgrade is detected, a reference WU is downloaded (or, a reference WU is always kept on hand when a user first attaches to a project). This would isolate optimizations to JUST your application. If you download an optimized client, your benchmarks for that project will increase, and the benchmarks for your other projects would remain unaffected. I can't wait for Berkeley to implement a system like this (are they even working on such a thing?) so that the problems of optimized clients become a thing of the past... Puffy</blockquote> Another side benefit of a "reference WU" is that, since the results are known and it takes several hours to crunch, it is also a good way of validating that your machine is A-OK, without having to wait days and days for a "quorum." It'll definitely help out the overclocker crowd at least. Jimmy

|

|

Metod, S56RKO Joined: 27 Sep 02 Posts: 309 Credit: 113,221,277 RAC: 9

|

I think we should step back and remember why on earth we are actually running benchmarks. The reason is that not all WUs require the same amount of work (even on the very same hardware) and we really want to grant credits on the amount of work done rather than on the number of WUs crunched. The amount of work done is the same for any given WU regardless of level of app optimization and/or hardware platform. Example 1: we all are running official clients. However, one person is running Windows/Intel, another one Linux/Intel and yet another one OS-X/Mac. For sure the clients are differently optimized for different OS/HW combinations, depending on the quality of the compilers used. And all persons should get the same credit for the same WU crunched. They will all claim different credits, but will be granted the same. Example 2: I'm running whatever client. I get different WUs which take different CPU cycles to finish. I should claim different credits depending on the amount of resources consumed. Example 3a: I'm running the official client on a given machine. Now I upgrade the machine to a higher speed. My benchmarks improve and CPU time drops. I'm claiming the same credit for WUs done as previously. This is correct. Example 3b: I'm running the official client on a given machine. Now I start to use an optimized client and BOINC CC (hopefully the optimization levels match). My benchmarks improve and CPU time drops. I'm claiming the same credit as before. Correct again. Example 3c: I'm running the official client on a given machine. Now I start to use an optimized client but not an optimized BOINC CC (say because no benchmark performance boost is possible on my particular HW). My benchmarks remain the same, CPU time drops. I claim less credit for the same (at least science-wise) work. Not correct IMHO. Example 4: I'm running official software. Due to the OS that I run, all benchmarks are waay different than on the other OS on the same hardware. The science app runs with only slightly different speed. 
I'm claiming waay different credits for the same work done. Not correct IMHO. Most examples are more or less covered by the benchmarking and validation process. Indeed, the way benchmarks are done currently sucks big time. However, this is the only way to at least try to grant the same credit for the science-wise same work done. Note that not all projects rely on benchmarks for crediting purposes. For example, all CPDN WUs are worth 6805.26 Cobblestones and that's it. So the benchmarking stuff is not an issue there. Metod ...

|

ML1 Joined: 25 Nov 01 Posts: 21731 Credit: 7,508,002 RAC: 20

|

<blockquote>I think we should step back and remember why on earth are we actually running benchmarks. The reason is that not all WUs require the same amount of work (even on the very same hardware) [...] Indeed the way benchmarks are done currently suck big time. However, this is the only way to at least try to grant the same credit for the science-wise same work done. Note that not all projects rely on benchmarks for crediting purpose. For example, all CPDN WUs are worth 6805.26 cobble stones and that's it. So the benchmarking stuff is not an issue there.</blockquote> Remember the days of s@h classic and the differential between low-angle-range and high-angle-range WUs and the tricks encouraged by this so that fanatics could boost their WU counts?... Across the boinc projects, the time taken to work through a 'WU' is very different. Even within the same project, WUs can require different times to complete. So, do we try to give credit for: 1: WUs done? (And encourage cheating to select 'easy' WUs?) 2: Science done? (How can we add up the 'science value' of a WU?!) 3: Or effort (CPU resource) put into working through whatever is given? I strongly feel that "3" is indeed the right way to go to give maximum freedom to supply whatever WUs and let people claim due credit for how much CPU effort was required to work through that WU. Rewarding 'effort' rather than WUs also eliminates one of the cheater's temptations. Optimising the clients is certainly good for the science. That you also work through WUs faster (and more easily) rightly does claim less credit for the CPU effort required per WU. Remember: Do we reward (directly) effort or instead some arbitrary 'statistic'? We have a strange situation at the moment with a few of us that have taken the trouble to jump ahead onto optimised clients. We already get some extra rewards in that our credits are pulled up by others' slower clients. 
Hopefully, Berkeley will release optimised clients to ALL so that everyone once more gets roughly equal credits per similar WU and equally fast science done. Aside 1: The benchmarking should NOT be optimised! It should accurately measure the Cobblestones measure. Aside 2: Testing 'Aside 1' on a test WU for speed and accuracy is a Very Good Idea. Regards, Martin |

ML1 Joined: 25 Nov 01 Posts: 21731 Credit: 7,508,002 RAC: 20

|

<blockquote>Optimising the clients is certainly good for the science. That you also work through WUs faster (and more easily) rightly does claim less credit for the CPU effort required per WU. Remember: Do we reward (directly) effort, or instead some arbitrary [indirect for the value of the science done] 'statistic'?</blockquote> Rereading and rethinking: I think this point about awarding Cobblestones for 'effort done' rather than a 'WU count' is always going to cause confusion! Happy crunchin', Martin |

|

Metod, S56RKO Joined: 27 Sep 02 Posts: 309 Credit: 113,221,277 RAC: 9

|

<blockquote> So, do we try to give credit for: 1: WUs done? (And encourage cheating to select 'easy' WUs?) 2: Science done? (How can we add up the 'science value' of a WU?!) 3: Or effort (CPU resource) put into working through whatever is given? </blockquote> IMHO, the way to go is "2". I think we all agree that "1" is not an option. If you (over)simplify "3", you'll be giving credit per CPU time. If I put up a slower machine than you, should I get the same credit as you do? Indeed we would give more credits to all victims of non-optimized clients, but then again we would punish all owners of fast computers. At the end of the day, it's all about science. For the project, the only thing that matters is that the WU is done. No matter how long it takes on a particular computer, it has to be done. Some projects have WUs that require (almost) constant CPU work to be done (e.g. CPDN). They are more than justified in granting the same credit to any cruncher. The rest of the projects need to have some way to measure (approximate) the work needed per WU. One (maybe the only one?) way is via benchmarks. The best way would be to measure client/CPU speed through real-life reference WUs (have several of them to avoid the temptation of looking for them and sending back pre-computed results). The next-best way is to create some artificial benchmarks which ideally show the real value of a given CPU. <blockquote> Hopefully, Berkeley will release optimised clients to ALL so that everyone once more gets roughly equal credits per similar WU and equally fast science done. </blockquote> With the current system, all crunchers crunching the same WU will get exactly the same credit, no matter what client they run. You are right, however, when saying that another bunch of crunchers will get different credit if given the same WU. But that's just the problem with inaccurate benchmarks. However, this is a general problem, not a problem of the optimized BOINC CC/SETI client. 
<blockquote> Aside 1: The benchmarking should NOT be optimised! It should accurately measure the Cobblestones measure. </blockquote> Cobblestone measurement is based on a hypothetical computer. You are supposed to run a hypothetical client coupled with hypothetical BOINC benchmarks. We do not have hypothetical computers. We have real computers with real compilers. Is it my fault that a real Linux compiler (gcc) makes code which is less liked by my real (Intel P4 Willamette) computer than the code made by a real Windows (MS VC) compiler, especially when it comes to the real SETI science app (and not so much when it comes to benchmarks)? My suggestion is that until we have some really relevant benchmarking (made inside the science app, done by the very same code that does the real work) we just leave things as they are (e.g. running optimized CC with optimized science app). Metod ...

|

|

Metod, S56RKO Joined: 27 Sep 02 Posts: 309 Credit: 113,221,277 RAC: 9

|

Another consideration is cross-project comparison of credits earned. This is a real problem and I don't have any good idea about how to solve it. It's an example of how differently different projects use up the resources as well as how well some projects optimize their science applications. I think I'm a good example of how things are not going smoothly. If you compare the RAC of the 3 projects I run, you would guess that I'm running them with resource shares of say 55:20:25 (CPDN:SETI:Einstein). This is not true, my settings are 40:20:40 (except for some slooow machines). If I was only into credits, I'd just run CPDN (or SETI) and would clearly avoid running Einstein. Metod ...

|

|

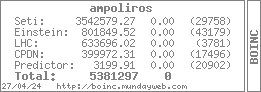

ampoliros Joined: 24 Sep 99 Posts: 152 Credit: 3,542,579 RAC: 5

|

A lot has been said about a reference WU to determine real-world computing power. But if that gets implemented, whose WU should be referenced? Should it be a SETI unit, an LHC, an Einstein, CPDN (God forbid)? As Metod just pointed out, they all use different processes; different processors and setups may favor one over the others. Maybe each project issues its own reference WU? Nope, because BOINC can currently only keep one set of benchmarks... Suddenly we're back to only one project per computer, defeating the whole point of BOINC. The BOINC CC is supposed to be independent of the projects run on it, so do we use a "BOINC Reference Unit"? Well, isn't that the same thing we have now in the benchmarks? There may not be a "fair" and "cheater-proof" answer to this. Every suggestion has problems (some minor, some major) and I think we may have to resign ourselves to the fact that it may never be perfect. (That doesn't mean we shouldn't try, though.) Berkeley has gotten a good system off the ground, let's not forget that. Whatever problems it has are only secondary to the science it provides. /i got nothin' 7,049 S@H Classic Credits |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

<blockquote>A lot has been said about a reference WU to determine real-world computing power. But if that gets implemented, whose WU should be referenced? Should it be a SETI unit, an LHC, an Einstein, CPDN (God forbid)? As Metod just pointed out, they all use different processes; different processors and setups may favor one over the others. Maybe each project issues its own reference WU? Nope, because BOINC can currently only keep one set of benchmarks... Suddenly we're back to only one project per computer, defeating the whole point of BOINC. </blockquote> Yes, but I would now argue that we are using two benchmarks in roughly the same way. We have a benchmark system which also matches no project well, but is being used as the standard. <blockquote> The BOINC CC is supposed to be independent of the projects run on it, so do we use a "BOINC Reference Unit"? Well, isn't that the same thing we have now in the benchmarks? </blockquote> No. The benchmarks are "synthetic" benchmarks and therefore match nothing except themselves. And they are notorious for being imprecise predictors of performance. Almost all synthetic benchmarks are just a vague nod in the direction of a predictor of performance. Specifically, the Whetstone is a double precision benchmark and SETI@Home, for example, uses little to no double precision calculations. We created an imaginary machine, used a pair of benchmarks, said that they model the machine, and then began to base everything on that. Yet, it is all built on clouds. <blockquote> There may not be a "fair" and "cheater-proof" answer to this. Every suggestion has problems (some minor, some major) and I think we may have to resign ourselves to the fact that it may never be perfect. (That doesn't mean we shouldn't try, though.) </blockquote> Yes. <blockquote> Berkeley has gotten a good system off the ground, let's not forget that. Whatever problems it has are only secondary to the science it provides. 
</blockquote> Agreed, so far, everything proposed has been worse, including some things I suggested in the past. However, I may have something now ... |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Drat! Ok, you guys started it ... :) I have been thinking about the whole benchmark issue (again) in the last month because of several things that have come up in various places, including Dr. Anderson's challenge to find a new benchmark. So, here are my thoughts, for what they are worth.

Intents/goals of the BOINC Benchmark:
1) Determine system speed
2) Project independent
3) Be the basis for determining effort

Bad points:
1) Results unstable, successive runs return wide variation for no apparent reason
2) Results do not adequately test critical performance parameters
3) Multi-CPU systems seem to be penalized by artificially low scores
4) Scores vary depending on the OS to a degree higher than warranted by actual performance
5) Theoretical system is incomplete in definition, i.e.: cache size, memory bandwidth, etc.
6) It is a blend of two "synthetic" benchmarks, neither of which is famous for predicting performance well
7) Positive changes in the tested system should result in higher scores and lead to greater awards of credit
8) Changes to the benchmarks change the scores

As an example, I looked at one work unit, and saw this:

| Result ID | Computer ID | Sent | Reported | Server state | Outcome | Client state | CPU time (s) | Claimed credit | Granted credit |
|---|---|---|---|---|---|---|---|---|---|
| 61078954 | 629798 | 9 May 2005 12:28:01 UTC | 10 May 2005 14:48:24 UTC | Over | Success | Done | 18,993.14 | 45.68 | 15.90 |
| 61078955 | 835923 | 9 May 2005 12:28:00 UTC | 9 May 2005 16:05:44 UTC | Over | Success | Done | 11,616.50 | 15.22 | 15.90 |
| 61078956 | 715569 | 9 May 2005 12:27:55 UTC | 10 May 2005 1:12:41 UTC | Over | Success | Done | 8,227.00 | 15.90 | 15.90 |
| 61078957 | 666988 | 9 May 2005 12:28:00 UTC | 9 May 2005 22:03:07 UTC | Over | Success | Done | 8,081.81 | 25.25 | 15.90 |

Here the spread between the claims runs from a high of 45.68 to a low of 15.22, with the award being 15.90, most likely due to the creation of a Quorum of Results early. Since the Cobblestone is based on a theoretical computer there really is little to stop us from a re-definition of how this performance is derived. 
For example, our measurement of Mixed MIPS is based on the algebraic addition of the FP-Ops and Int-Ops, which effectively indicates that they take identical times to perform. But the real flaw is that we are using the FP-Ops definition of the Whetstone Benchmark and saying that this is the definition of the Cobble-Computer, and the same for the Int-Ops. Yet, for measurements of the time of processing, the time is a straight-line extrapolation of the FP-Ops times a constant indicating the average number of operations assumed for the Work Unit as shown here:

Einstein@Home: 40,000,000,000,000.000000
LHC@Home: 6,000,000,000,000.000000
Predictor@Home: 10,000,000,000,000.000000
SETI@Home: 27,924,800,000,000.000000

Ok, now, the point raised earlier is that the change of executable screws up the system. Though the results are returned faster, the credit score also shrinks. But this is contrary to expectations. The reason for this is, of course, because we have incorrectly defined performance. Our performance metric is based on an incomplete definition of a hardware system. Yet, our performance should be based on the system as a whole, including the performance of the software executed. If you complete more work per unit time, you would expect that your credit score should rise, regardless of how that increase in performance is obtained.

So, if we accept the premise that the "best" benchmark is the applications themselves, why have we not used that instead? The traditional argument is that each and every project would need to make an instrumented application. I would now argue that this may not be completely necessary. We are already using "SWAGs" (Scientific Wild ...) to make our measurements. What is one more? What we want to do is to establish a baseline of performance and from that extrapolate into an actual measurement. 
If we took the SETI@Home Testing Work Unit, and actually measured the number of loops by altering the code to include loop counters, we can make this measurement. This Work Unit then becomes the way that we identify what we say a Cobble-Computer can do in unit time. From that we can derive, by testing, a "Calibration" factor that establishes the ratio of performance of a particular machine to the Cobble-Computer. For example, say that the number of iterations above is accurate, from that:

1,000 Million FP-Ops (MIPS)
1,000 Million Int-Ops (MIPS)
=====
2,000 Million Mixed-Ops

2,000 * 86400 seconds in a day = 172,800,000 Million Mixed-Ops per day
864 Seconds of time on Cobble-Computer = 1 Cobblestone (1/100 of a day)
1,728,000 Million Mixed-Ops = 1 Cobblestone
27,924,800,000,000.000000 Mixed-Ops per Average SETI@Home WU ÷ 1,728,000,000,000.0 = 16.16 Cobblestones

Which should take 13,950.144 Seconds on the Cobble-Computer (16.16% of a day). Now, if my computer takes 27,900.288 Seconds, I have half of a Cobble-Computer system. If, on the other hand, I do that same work unit in 6975.072 Seconds, I have a machine that is twice as fast as the Cobble-Computer. In either case, I should earn 16.16 Cobblestones for the work. If I add a faster processor, the credit should still be 16.16 Cobblestones, I will just complete that work faster. So, what I have derived is a Calibration measure for "Correcting" the speed of my system in terms of the Cobble-Computer.

Now, unfortunately, we come to some of the hard points. With this:
1) Each project will need its own calibration factor, though a "Standard" value can be used, because of potential optimization of the software by a project - such as what we see here at SETI@Home.
2) Some, if not all, computers will have to run the calibration work unit. I would argue that we may only need to run the calibration on some sub-set of the population of computers, propagating the factor to be used via the Hosts table. 
3) System changes would be detected via an imbalance between credit claims. Since the claim and the run times should be easily determined, a change indicates a change in the processing system.

Obviously, I may have missed something. Feel free to chastise me appropriately ... I have this feeling that it should be possible to do some of this with uglier math that is just beyond my capability to figure out (simultaneous solution of multiple equations). Also, the more instrumented Work Units we can use to measure (from different projects of course) the more accurate our definition might be. We can also just start with the assumption of the current system as far as measurement and use that to derive the initial calibration factors on the standard executables.

In the example data above, my computer, ID 715569, does this work unit in 9.5% of a day; and if this were the standard work unit, I have a computer that is faster than the Cobble-Computer. This would give me a correction number greater than 1 (1.69?), but, still only 16.16 Cobblestones. |
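The calibration idea in this post can be sketched roughly as follows. The constants are the ones quoted in the post (a Cobble-Computer doing 1,000 million FP-Ops plus 1,000 million Int-Ops per second, 864 seconds per Cobblestone, and the assumed operation count for an average SETI@Home WU); the function names are made up for this example, and nothing here is existing BOINC code.

```python
COBBLE_OPS_PER_SECOND = 2_000_000_000  # 1,000 M FP-Ops + 1,000 M Int-Ops
SECONDS_PER_COBBLESTONE = 864          # 1/100 of a day on the Cobble-Computer
OPS_PER_COBBLESTONE = COBBLE_OPS_PER_SECOND * SECONDS_PER_COBBLESTONE

SETI_OPS_PER_WU = 27_924_800_000_000   # average figure quoted in the post


def cobblestones_for(ops_per_wu):
    """Credit for a work unit, independent of which machine ran it."""
    return ops_per_wu / OPS_PER_COBBLESTONE


def reference_seconds(ops_per_wu):
    """Time the hypothetical Cobble-Computer would need for the work unit."""
    return ops_per_wu / COBBLE_OPS_PER_SECOND


def calibration_factor(ops_per_wu, measured_seconds):
    """Speed of a real host relative to the Cobble-Computer (>1 is faster)."""
    return reference_seconds(ops_per_wu) / measured_seconds


credit = cobblestones_for(SETI_OPS_PER_WU)
factor = calibration_factor(SETI_OPS_PER_WU, 8_227.00)  # host 715569's CPU time

print(f"credit per WU: {credit:.2f} Cobblestones")
print(f"Cobble-Computer time: {reference_seconds(SETI_OPS_PER_WU):.1f} s")
print(f"calibration factor: {factor:.2f}")
```

Evaluated this way, the reference time comes out a little above the 13,950.144 seconds quoted in the post, but the structure of the argument is unchanged: the credit depends only on the work unit, and the measured time only moves the calibration factor.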

JavaPersona Joined: 4 Jun 99 Posts: 112 Credit: 471,529 RAC: 0

|

A lot of good points mentioned here. I would only add a few points. Many of us are interested in completing as much science as possible and to that end have installed the optimized clients. Most BOINC users will not because they are not interested in making the effort. Also, I doubt that BOINC developers want to confuse people with several options for installation because casual users will be easily turned off by less than "install-and-forget" type of installations. Further requiring optimized versions for each project, based on hardware, would also chase away the less sophisticated crunchers. The objectives of creating the best method for awarding credit should not get in the way of the overall goal of encouraging as many people as possible to participate in BOINC. I agree with Metod that credit be granted for "science done" and that somehow this be applied for all current and future projects. Is this possible? If SETI applied this system would we even need a quorum system? WU's could be sent a few times, just to check that results returned were accurate, but not as a basis for determining credit.

|

JigPu Joined: 16 Feb 00 Posts: 99 Credit: 2,513,738 RAC: 0 |

<blockquote>A lot has been said about a reference WU to determine real-world computing power. But if that gets implemented, whose WU should be referenced? Should it be a SETI unit, an LHC, an Einstein, CPDN (God forbid)? As Metod just pointed out, they all use different processes; different processors and setups may favor one over the others. Maybe each project issues its own reference WU? Nope, because BOINC can currently only keep one set of benchmarks... Suddenly we're back to only one project per computer, defeating the whole point of BOINC.</blockquote> Just to clarify what I meant by reference WUs -- A single reference WU for the entirety of BOINC indeed makes as much sense (or less) as the single synthetic benchmark being run currently. If the reference WU was SETI, we'd have an excellent indicator of how long a SETI unit should take, but probably a very poor indication for the other projects. What I was advocating was per-project reference WUs. SETI would have one (presumably the reference WU that they ship with the source code), LHC would have one (probably a 100k turn one so it doesn't take ages on old machines), CPDN would have one (probably only a single reference trickle given their huge completion times), etc. No, this cannot be implemented in BOINC as is, since it does indeed use a single benchmark number. However, BOINC is constantly growing and evolving, so there is a possibility of this being changed in the future should Berkeley like the idea. As to how it would work, if your client completes the reference SETI WU in 3 hours, then your machine would claim 30 credits (or however many credits the reference WU is worth) for every 3 hours of CPU time on SETI. If a new version of the application appears, the change will be detected and the benchmark re-run. If the new version completes the reference WU in 2:30, then the BOINC client will set 2:30 as the time to claim that amount of credit.
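The per-project scheme described above can be sketched roughly as follows. This is only an illustration of the arithmetic, not anything BOINC actually implements; `ReferenceTiming`, `claimed_credit`, `needs_rebenchmark` and the version labels `"v1"`/`"v2"` are hypothetical names, and the 30-credit value is the example figure from the post.

```python
from dataclasses import dataclass

HOUR = 3600.0  # seconds


@dataclass
class ReferenceTiming:
    """Result of running one project's reference WU on this host."""
    app_version: str    # application version that was benchmarked
    credit: float       # credits the reference WU is worth
    cpu_seconds: float  # CPU time this host needed for the reference WU


def claimed_credit(ref: ReferenceTiming, wu_cpu_seconds: float) -> float:
    """Claim credit in proportion to CPU time relative to the reference WU."""
    return ref.credit * wu_cpu_seconds / ref.cpu_seconds


def needs_rebenchmark(ref: ReferenceTiming, installed_version: str) -> bool:
    """Re-run the reference WU only when this project's application changed."""
    return ref.app_version != installed_version


# One entry per project; only SETI is filled in for the example.
references = {"SETI": ReferenceTiming("v1", credit=30.0, cpu_seconds=3 * HOUR)}

print(claimed_credit(references["SETI"], 3 * HOUR))  # 30.0
print(claimed_credit(references["SETI"], 6 * HOUR))  # 60.0, a "longer" unit

# A new application version appears and finishes the reference WU in 2:30.
if needs_rebenchmark(references["SETI"], "v2"):
    references["SETI"] = ReferenceTiming("v2", credit=30.0, cpu_seconds=2.5 * HOUR)

print(claimed_credit(references["SETI"], 2.5 * HOUR))  # 30.0
```

Keeping one `ReferenceTiming` per project is what lets a faster application keep the same credit per unit of science while the CPU time per credit shrinks.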
You would also have a reference unit for LHC, Einstein, CPDN, etc., which would be run if an application change is detected (not all projects' reference units would be re-run, only the unit for the project with the changed application). Each application would have a set CPU time (equal to that application's reference unit CPU time) that would be worth X credits. If a "longer" unit is run, more credit is claimed, and if a "shorter" unit is run, less is claimed. There are, of course, problems even with this kind of system. For example, what happens if you replace the reference WU with a different one (if the reference units are downloaded once)? Should the application "know" the WU (say, through an MD5 hash or digital signature) and be able to detect a non-reference stand-in? What about the increased size of the download? Will it significantly increase the time to attach to a project now that a reference unit has to be downloaded? How does one determine how much credit the reference unit is worth? Should the reference unit be run through a "traditional" credit claiming algorithm, where it depends on CPU time and synthetic benchmarks, or should the developers spend the time to actually count the number of FLOPs in that reference unit? Puffy |
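As a rough sketch of the "application knows the WU" idea raised above, a client could compare a hash of the downloaded reference unit against a value shipped with the application. Everything here is hypothetical: the payload bytes, the function names, and the idea that the expected digest is embedded in the application. MD5 is used only because the post mentions it; it is not collision resistant, so a real design would more likely use SHA-256 or a digital signature.

```python
import hashlib
import hmac


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of the data as a hex string."""
    return hashlib.md5(data).hexdigest()


def is_reference_unit(data: bytes, expected_hex: str) -> bool:
    """Check whether the data matches the digest shipped with the application."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(md5_hex(data), expected_hex)


# Hypothetical reference unit and the digest the application would ship with.
reference_payload = b"hypothetical reference work unit payload"
shipped_digest = md5_hex(reference_payload)

# A stand-in unit that someone swapped in to finish the benchmark faster.
stand_in_payload = b"a shorter stand-in unit"

print(is_reference_unit(reference_payload, shipped_digest))  # True
print(is_reference_unit(stand_in_payload, shipped_digest))   # False
```

A check like this only detects a swapped file; it does nothing about a modified client that skips the check, which is the harder part of the problem.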

W-K 666 Joined: 18 May 99 Posts: 19696 Credit: 40,757,560 RAC: 67

|

Although the idea of a reference unit has its attractions, the system would have its flaws if the reference unit could be recognised. You only need to look at the games market and the 'polishing of the benchmarks' there by the graphics card manufacturers. Plus, a reference unit would take time to process, unlike the present system. I think a few minutes to benchmark is acceptable; don't forget that some participants take over 20 hours to process a SETI unit. And for a reference unit the project would have to award credit for no science done. Andy |

Paul D. Buck Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

<blockquote> A single reference WU for the entirety of BOINC indeed makes as much sense (or less) as the single synthetic benchmark being run currently. If the reference WU was SETI, we'd have an excellent indicator of how long a SETI unit should take, but probably a very poor indication for the other projects. </blockquote> Which is my point. The current system is a poor model for all projects. Use of the "reference" units, even if it was only the SETI@Home one, would be no worse than what we have. At least, if nothing else, we would have an accurate metric for one project. I did ask on the developers' mailing list (with no reply as yet) whether the other projects have a "test"/"reference" work unit that could be used. Anyway, I just thought I would make this suggestion. |

