Tips for more effective use of computers to crunch data - is a CPU per GPU needed?


| Author | Message |

|---|---|

Darrell Wilcox Joined: 11 Nov 99 Posts: 303 Credit: 180,954,940 RAC: 118

|

A bit of history for the newbies

In a place not so long ago and not so far away, a project called the Search for ExtraTerrestrial Intelligence (SETI) was started. It processed data from the Arecibo radio telescope using CPUs only. The SETI application was slow, taking 196 hours on a 133 MHz PC. As CPUs got faster, SETI increased the amount of crunching each WU had to do. Later, the Berkeley Open Infrastructure for Network Computing (BOINC) was developed for SETI and made available to the world so other projects could also use networked computers easily in their crunching.

GPUs were used for graphical processing and not data crunching in general. Then some very smart people developed the means to use GPUs to assist in the processing of data, and GPU applications were developed to make better use of those computers that had GPUs installed. This greatly sped up the processing, allowing more work per WU to be done in less time, but a CPU was needed full-time to supply the GPU with data and receive the results. Volunteers got together to optimize the application code and algorithms, thus further increasing the processing speed.

Today, SETI has both CPU-only and GPU applications to process their data, and the applications are very optimized and tunable to some degree. This allows new users to immediately start crunching data with very little effort or knowledge. Other projects may also have both types of applications (CPU and GPU), or only a single type.

For those people reading this, I suspect you are not the typical “install and run” type of person, and you want to get more from your computer(s). If this is not you, stop reading here. Also, if you do not have a reasonably recent GPU or choose not to use it for crunching SETI, there is no need to read further.

Only for the advanced user (and wannabes) with graphics card(s)

The following is written from a Windows user perspective and BOINC Manager 7.6.33, but other systems have equivalent capabilities.
I am going to assume here that you have not installed the application using an optimized package, you do not run GPU-intensive games (or if you do, you know how to use BOINC Manager -> Options -> Exclusive applications), and your CPU is mid-range or faster (e.g., Intel i5). To further increase the effectiveness of your computer(s), you are going to have to open the lid and make a few changes.

By the way, effectiveness is doing something very well, which may or may not be efficient. This posting is primarily about effectiveness. A hydrogen bomb may be used to kill a house fly, but it is not efficient. The common fly swatter, while less effective, is much more efficient.

The first thing to do is look in the folder where BOINC stores the project information. The usual location is “drive letter:\ProgramData\BOINC”. If a file named “cc_config.xml” is not present, create it using Notepad or similar as:

<cc_config> <log_flags> <unparsed_xml>1</unparsed_xml> </log_flags> <options> <process_priority_special>4</process_priority_special> </options> </cc_config>

BE CAREFUL THAT THE FILETYPE IS “XML” EVEN THOUGH IT IS A TEXT FILE. This will help you debug any errors you might make later, and increases the priority of the SETI GPU application to “High priority” (more on priority later).

If your GPU has at least 2 GB of RAM, you can run two WUs per GPU. The following will cause this to happen, with the SETI GPU application still running at “High” priority as set above. In “drive letter:\ProgramData\BOINC\projects\setiathome.berkeley.edu”, create a new file named “app_config.xml”, or modify the existing one, with the following entries:

<app_config> <app> <name>setiathome_v8</name> <gpu_versions> <gpu_usage>0.5</gpu_usage> <cpu_usage>0.125</cpu_usage> </gpu_versions> </app> </app_config>

BE CAREFUL THAT THE FILETYPE IS “XML” EVEN THOUGH IT IS A TEXT FILE. This will cause two WUs per GPU to be run, and won’t tell the BOINC Manager to restrict CPU applications until 8 WUs are running on the GPU (more on CPU time later).
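Editorial aside: a typo in either file is easy to make, so here is a minimal Python sketch that checks both snippets for well-formedness before you save them, and works out the arithmetic behind the two usage values. The XML text is copied from the post above; the helper names (`parse_usage`, `tasks_per_gpu`, `gpu_tasks_per_cpu_core`) are illustrative only and are not part of BOINC. It only parses strings and does not touch the BOINC data folder.

```python
import math
import xml.etree.ElementTree as ET

# File contents copied from the post, laid out one element per line.
CC_CONFIG = """<cc_config>
  <log_flags>
    <unparsed_xml>1</unparsed_xml>
  </log_flags>
  <options>
    <process_priority_special>4</process_priority_special>
  </options>
</cc_config>
"""

APP_CONFIG = """<app_config>
  <app>
    <name>setiathome_v8</name>
    <gpu_versions>
      <gpu_usage>0.5</gpu_usage>
      <cpu_usage>0.125</cpu_usage>
    </gpu_versions>
  </app>
</app_config>
"""


def parse_usage(app_config_xml):
    """Return (gpu_usage, cpu_usage) from the first <app> entry.

    ET.fromstring raises ParseError if the XML is malformed,
    which doubles as the well-formedness check.
    """
    root = ET.fromstring(app_config_xml)
    gpu_usage = float(root.findtext("app/gpu_versions/gpu_usage"))
    cpu_usage = float(root.findtext("app/gpu_versions/cpu_usage"))
    return gpu_usage, cpu_usage


def tasks_per_gpu(gpu_usage):
    """How many WUs fit on one GPU when each claims gpu_usage of it."""
    return math.floor(1.0 / gpu_usage + 1e-9)


def gpu_tasks_per_cpu_core(cpu_usage):
    """How many GPU WUs it takes before one whole CPU is budgeted to them."""
    return math.floor(1.0 / cpu_usage + 1e-9)


if __name__ == "__main__":
    ET.fromstring(CC_CONFIG)  # raises if cc_config.xml text is malformed
    gpu_usage, cpu_usage = parse_usage(APP_CONFIG)
    print("WUs per GPU:", tasks_per_gpu(gpu_usage))
    print("GPU WUs per budgeted CPU:", gpu_tasks_per_cpu_core(cpu_usage))
```

With the values from the post, 1 / 0.5 gives two WUs per GPU, and 8 × 0.125 = 1, which is why CPU applications are not restricted until 8 GPU WUs are running.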
For more on these parameters, see the BOINC wiki Client Configuration.

In that same folder, there is a file named “mb_cmdline-8.22_windows_intel__opencl_nvidia_SoG.txt”. Edit this file using Notepad or similar, and enter data appropriate for YOUR GPU. You may need to search the forums to find one like you have, but here is the set I use:

-use_sleep_ex 60 -sleep_quantum 2 -high_prec_timer -high_perf -tune 1 64 1 4 -tune 2 64 1 4 -period_iterations_num 5 -sbs 384 -spike_fft_thresh 4096 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64

NOTE WELL: this is all on one line, and is appropriate for the GTX750Ti GPU. Use BOINC Manager -> Options -> read config files to get them loaded. Check BOINC Manager -> tools -> event log for any errors, correct if needed, and repeat.

If you experience lag or missed characters when typing, increase period_iterations_num from 5, perhaps to 10. The faster your GPU, the smaller this number can be, and vice versa.

These parameters cause the SETI GPU application to release the CPU after a service is performed for the GPU. The default behavior without these parameters is to loop in the CPU (AKA “spin-loop”), testing for the GPU to complete the processing of a small amount of data. This looping amounts to about 4 or more times the actual amount of time needed to support the GPU, and requires a CPU core to itself. Thus a single mid-range CPU core WITH these parameters can support 4 WUs or more.

Does this actually work? Yes. Look at the screen captures of one of my systems:

BOINC Manager view: This shows 8 SETI V8 WUs on the 4 GPUs while running 7 compute-intensive Rosetta WUs. This computer’s CPU is 4 core with hyper-threading, clocked at 4.0 GHz and 4.4 GHz turbo.

But are they really compute-intensive?

Task Manager view: Task Manager is showing 7 CPU compute-intensive tasks, 8 SETI V8 tasks, and still has 3% idle CPU.

But … how about the GPUs? Aren’t they starved for service?
Process Hacker view: Apparently not! The average of the 4 GTX750Ti cards is 99%+ and the CPU is 97%+. For the “I run SETI only” person, those 7 available CPUs could be adding to your RAC! Or processing another project as mine do. Since the GPUs are already at 99% busy, I don’t know how I can get them any faster AND have 7 CPUs available for other work.

What about the additional priority information you mentioned above?

The way the Windows scheduler works is to give a slice of time from any available (not busy) CPU to the highest priority task that is ready to use it. If the task becomes not-ready before the end of its slice, it loses the rest of the slice, and the next highest priority ready task is given a slice. At the end of the slice, a slice is given to a ready task at a higher priority, or if none, the next ready task at the same priority, or if none, then a ready task at a lower priority. If no task is ready, the CPU is idled. This means that non-compute-intensive tasks running at priorities higher than compute-intensive tasks will still process quite nicely. Further, the more CPUs in the computer, the more frequently a time slice will end for one of them (hyper-threading is good)!

Finally, the end

I have read many posts here proselytizing the “leave a core free per GPU” advice, but I think a little time and work will yield benefits to anyone who tries these suggestions. Leaving a CPU core free is not bad, per se, but it is not as effective a use of the computer as using the spin-loop cycles for productive work.

I have 5 computers with GPUs, but I only run SETI on 4 of them. Einstein gets 1 for its GPU application, and is my “backup” project on all the computers. I also run Rosetta and LHC, which do not have GPU applications, on all of them.

I am very open to constructive suggestions for improvements in the effective use of my computers. I read these forums often, or a private email can be used.
I wish to thank the many authors from whose posts I have taken snips and bits to make these tuning files. I especially wish to thank the volunteer programmers and testers, host providers, forum moderators, and anyone else involved in the development and promulgation of these incredible applications. And finally, to Carl Sagan, may he rest in peace. |

Jim_S Joined: 23 Feb 00 Posts: 4705 Credit: 64,560,357 RAC: 31

|

How much of a heat increase with that “app_config.xml”? TIA :)

I Desire Peace and Justice, Jim Scott (Mod-Ret.) |

Darrell Wilcox Joined: 11 Nov 99 Posts: 303 Credit: 180,954,940 RAC: 118

|

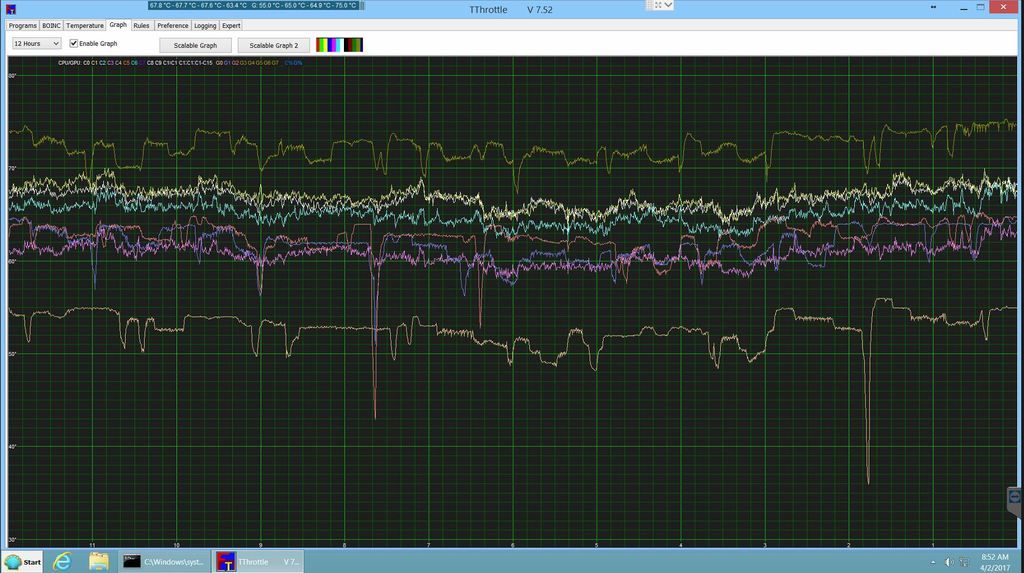

@ Jim_S: "How much in Heat Increase with that “app_config.xml”? TIA :)"

I don't really know how much the heat increased since I didn't measure it before I started. Here is a snapshot of what it is here this morning (near Ho Chi Minh, Vietnam). The air temp is around 30 °C, and I have one stuck fan on a two-fan GPU, so it is running hot. I guess I should go fix that ... |

Jim_S Joined: 23 Feb 00 Posts: 4705 Credit: 64,560,357 RAC: 31

|

Thanks Darrell, I may have to give that one a try. Jim_S |

|

wykinger Joined: 6 Jan 17 Posts: 67 Credit: 8,068,825 RAC: 0

|

The "normal" BOINC is 7.6.33. Can I edit this the same way, or must I download a modified client (and from where)? |

Darrell Wilcox Joined: 11 Nov 99 Posts: 303 Credit: 180,954,940 RAC: 118

|

@ wykinger: I am running the stock BOINC Manager (version 7.6.33) and SETI application for GPU (version V8 8.22). I am not modifying the software, only "tuning" the mix and priorities a bit since I use the CPU time for two projects that do not have a GPU version of their applications. |

|

wykinger Joined: 6 Jan 17 Posts: 67 Credit: 8,068,825 RAC: 0

|

OK, I modified the first 2 files without putting anything into mb_cmdline-8.22_windows_intel__opencl_nvidia_SoG.txt. All running fine: 4 GPU tasks at 0.5 GPU each are running on systems with 2 graphics cards.

To run 2 GPUs, my cc_config.xml looks like this (it also works with more than 2 cards; I also tested it on a CPU with integrated Intel HD graphics + 2x GeForce 710 (PCI-E x16) and 1x Quadro NVS 290 (PCI-E x1) in one PC):

<cc_config> <log_flags> <unparsed_xml>1</unparsed_xml> </log_flags> <options> <process_priority_special>4</process_priority_special> <use_all_gpus>1</use_all_gpus> </options> </cc_config> |
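Editorial aside: if you already have a cc_config.xml with other settings in it, the one-line difference here is the `<use_all_gpus>` option. A minimal Python sketch of adding it without disturbing the rest of the file follows; `enable_all_gpus` is a hypothetical helper written for this thread, not a BOINC tool, and it works on the XML text only, so reading and writing the actual file is left to you.

```python
import xml.etree.ElementTree as ET


def enable_all_gpus(cc_config_xml):
    """Return cc_config XML text with <use_all_gpus>1</use_all_gpus> set.

    Existing options are kept; the flag is added once if missing,
    or overwritten with 1 if already present.
    """
    root = ET.fromstring(cc_config_xml)
    options = root.find("options")
    if options is None:
        # No <options> section yet: create one under <cc_config>.
        options = ET.SubElement(root, "options")
    flag = options.find("use_all_gpus")
    if flag is None:
        flag = ET.SubElement(options, "use_all_gpus")
    flag.text = "1"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Starting point: the cc_config.xml from the first post in this thread.
    original = (
        "<cc_config><log_flags><unparsed_xml>1</unparsed_xml></log_flags>"
        "<options><process_priority_special>4</process_priority_special>"
        "</options></cc_config>"
    )
    print(enable_all_gpus(original))
```

After saving the result, BOINC Manager -> Options -> read config files should pick it up, as described in the first post.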

|

Andrew Scharbarth Joined: 29 May 07 Posts: 40 Credit: 5,984,436 RAC: 0

|

Command line recommendations for a couple 1080's? |

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 14010 Credit: 208,696,464 RAC: 304

|

"Command line recommendations for a couple 1080's?"

-tt 1500 -hp -period_iterations_num 1 -high_perf -sbs 2048 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64

I'd suggest just running 1 WU at a time and reserving 1 CPU core per WU, then seeing how things go for a while.

Grant
Darwin NT |

|

Andrew Scharbarth Joined: 29 May 07 Posts: 40 Credit: 5,984,436 RAC: 0

|

The cards were running at about 80% load on 1:1 with your switches so I upped it to 1.5 and that seems to have done the trick. This seems to have also reduced the completion time from 4-8 minutes per unit to 2-4. |

Darrell Joined: 14 Mar 03 Posts: 267 Credit: 1,418,681 RAC: 0

|

My experiences with multiple projects on the GPU with my new RX480. Currently attached to seven GPU projects: Collatz, Einstein, Milkyway, MooWrapper, Seti, Seti_beta, and PrimeGrid. The 480 is being fed by a dual-core Athlon II (a Phenom II six-core should be here next week). Due to the way the GPU scheduler in BOINC is programmed, it will not give you two tasks from one project at a time; it gives you one task from one project and a task from another project (note: my cache size is set to zero to see how BOINC responds to changes in settings).

The Collatz app does not share a core well with other projects, so it gets the full GPU and one core, but the lowest resource share setting (135%). PrimeGrid shares the core fairly well, but because some of its subprojects have long runtimes and you never know which subproject you are going to get, it gets the full GPU and one core, and because I like it, its resource share setting is (175%). MooWrapper shares a core well with other projects, but because it stresses the GPU, it gets the full GPU and a core with a resource share of (140%). Einstein, Milkyway, Seti, and Seti_beta share a core well, so they are set at half a GPU and half a core. The resource shares for Einstein and Milkyway are set at (150%), and those for Seti and Seti_beta at (160%). The scheduler uses Milkyway, with its short runtimes, as a filler between the other projects. The other core of the Athlon is running the non-GPU projects, all with their resource shares set at (100%). |

©2026 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.