Observation of CreditNew Impact


| Author | Message |

|---|---|

Eric Korpela Joined: 3 Apr 99 Posts: 1382 Credit: 54,506,847 RAC: 60

|

I suppose I should make a couple points. First, "CreditNew" has been implemented at SETI@home for a year now, so this reduction in credit is not due to implementing "CreditNew" since it was implemented ages ago. Second, how credits are normalized hasn't changed between v6 and v7 under CreditNew. Since our results are mostly stock Windows and CUDA under Windows, those results set the normalization. Based upon the archives, the S@H 6 windows_intel app was generating an average of 0.00556 credits per elapsed second. The current S@H 7 windows_intel app is generating 0.00514 credits per elapsed second, or about 7% less. Astropulse 6 is currently generating 0.00491 credits per elapsed second or about 12% less than S@H v6. I'm hoping the Astropulse issue is resolving itself. (It appears to be slowly coming back to normal.) There aren't a whole lot of knobs I can turn to get that 7% back. I've bumped the estimated GPU efficiency by 20% in hopes that that would help, but thus far I haven't seen a change. I'm going to try increasing the workunit work estimates slowly, but I think that change will get normalized out. Yes, there are projects that offer more credit. A number of projects have chosen to detach their credits from any measure of actual processing, usually by pretending they are getting 100% efficiency out of GPUs. I fought that battle for years, and lost. Those projects have entirely devalued the BOINC credit system. @SETIEric@qoto.org (Mastodon)

|

|
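As a quick check of the percentages quoted in the post above, the relative drops can be recomputed from the three credits-per-second figures. This is only a sketch of the arithmetic; the helper name `percent_below` is made up for illustration and is not part of any BOINC tool.

```python
# Credits per elapsed second, as quoted in Eric's post.
rates = {
    "S@H v6": 0.00556,
    "S@H v7": 0.00514,
    "Astropulse 6": 0.00491,
}


def percent_below(baseline: float, value: float) -> float:
    """Return how far `value` sits below `baseline`, as a percentage of baseline."""
    return (baseline - value) / baseline * 100.0


baseline = rates["S@H v6"]
for name in ("S@H v7", "Astropulse 6"):
    drop = percent_below(baseline, rates[name])
    print(f"{name}: {drop:.1f}% below S@H v6")
```

The results come out a little under 8% and a little under 12%, consistent with the "about 7%" and "about 12%" in the post.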

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

Awwwwww...... Eric, you should know better than to try to cloud these credit 'discussions' with logic and rationality. That takes all the sport and comic relief out of them when they erupt! :-D |

kittyman Joined: 9 Jul 00 Posts: 51470 Credit: 1,018,363,574 RAC: 1,004

|

Thanks for the insights, Eric. My RAC appears to be trying some sort of soft recovery, but it would have to do an awful lot of climbing to get even close to where it was before. No matter... wherever it shakes out is OK for the kitties. "Time is simply the mechanism that keeps everything from happening all at once."

|

tullio Joined: 9 Apr 04 Posts: 8797 Credit: 2,930,782 RAC: 1

|

Much ado about nothing. This is my opinion. Tullio |

|

ExchangeMan Joined: 9 Jan 00 Posts: 115 Credit: 157,719,104 RAC: 0

|

According to the statistics tab in the Boinc Manager, the RAC for my big cruncher bottomed out 7 days ago and is in a slow, but steady climb. Where it will top out, I don't know. But I would be very skeptical that it would come within 7% of my peak RAC ever achieved for this host, but you never know. I would be very pleased if that happened, however. I believe that one poster mentioned in some thread about the number of work units achieving over 100 credits. Back with MB V6, getting over 100 credits was very common. Now it's perhaps 1 work unit in 20. With V6 it was about 50%. The credits are all relative since we're all crunching work units from the same pool, but it does make for interesting crunching and a way to compare hosts and configurations over time. |

|
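Several posts above describe RAC bottoming out and then moving only slowly. BOINC's recent average credit is an exponentially decaying average with a one-week half-life, so it lags any change in the daily credit rate. The sketch below is a simplified daily-step model, not BOINC's actual update code: it assumes credit is granted once per day at a constant rate, and the function name and example numbers are hypothetical.

```python
HALF_LIFE_DAYS = 7.0  # RAC halves every week when no new credit arrives


def simulate_rac(start_rac: float, daily_credit: float, days: int) -> list[float]:
    """Simplified model: step RAC one day at a time toward `daily_credit`.

    Assumes one credit grant per day at a constant rate; the real BOINC
    update works on arbitrary time intervals.
    """
    decay = 0.5 ** (1.0 / HALF_LIFE_DAYS)  # per-day decay factor
    rac = start_rac
    history = []
    for _ in range(days):
        rac = rac * decay + daily_credit * (1.0 - decay)
        history.append(rac)
    return history


# Hypothetical host: steady at 1000 credits/day, then granted only 600/day.
history = simulate_rac(start_rac=1000.0, daily_credit=600.0, days=28)
for day in (7, 14, 28):
    print(f"day {day}: RAC is roughly {history[day - 1]:.0f}")
```

In this model the gap to the new level halves every week, which is why a host's RAC can keep drifting for several weeks after the credit rate itself has stopped changing.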

bill Joined: 16 Jun 99 Posts: 861 Credit: 29,352,955 RAC: 0

|

Thank You! Thank You! Thank You! It's nice to see that actions are being taken by somebody to put some balm on those terribly wounded RACs. |

|

Sleepy Joined: 21 May 99 Posts: 219 Credit: 98,947,784 RAC: 28,360

|

Dear Eric, thank you very much for devoting some precious time to this (minor) issue. Just a question: when you talk about S@H V6 and V7, are you talking about stock applications? Because here almost everybody was on optimised applications on V6, which had about double the throughput of stock applications. If S@H V6 (stock) and V7 (stock, but abundantly optimised) now get about the same credit/s, it means that for the same amount of work time we end up with about half the credits. Which is exactly what we are experiencing. Of course it is good for the project that everybody is now running what used to be optimised plus some more scientific goodies, but nevertheless this would confirm that we are now getting about half the credit we did before. I hope I am not too wrong and not too unclear. I had to read this several times myself and change some wording because it was obscure to me as well... Happy to explain better if necessary (and of course if not totally wrong!)! And in any case thank you very much for the insight and for explaining to us how things are developing. And for your dedication to the project, proven on countless occasions! All the very best, Sleepy |

|
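Sleepy's argument above can be put into numbers. The sketch below takes the stock credits-per-second figures from Eric's post and assumes, as Sleepy estimates, that an optimised V6 app had about twice the throughput of the stock V6 app and that V7 stock runs about as fast as that optimised app. Both are assumptions from the thread rather than measured values, and the constant names are invented for illustration.

```python
STOCK_V6_RATE = 0.00556   # credits per elapsed second (from Eric's post)
STOCK_V7_RATE = 0.00514   # credits per elapsed second (from Eric's post)
OPTIMISED_SPEEDUP = 2.0   # Sleepy's estimate for optimised V6 vs stock V6

# Under V6, credit per work unit was set by the stock app, so a host finishing
# work units twice as fast earned roughly twice the stock rate per second.
optimised_v6_rate = STOCK_V6_RATE * OPTIMISED_SPEEDUP

# Under V7 the same host runs the (now well optimised) stock app and earns
# the stock V7 rate.
drop_percent = (1.0 - STOCK_V7_RATE / optimised_v6_rate) * 100.0

print(f"optimised V6 host: {optimised_v6_rate:.5f} credits/s")
print(f"same host on V7:   {STOCK_V7_RATE:.5f} credits/s")
print(f"drop: {drop_percent:.1f}%")
```

Under these assumptions the drop for a formerly optimised host comes out at a bit over half, which matches the "about half the credit" Sleepy describes, while a host that ran stock V6 would only see the roughly 7% change.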

Grant (SSSF) Joined: 19 Aug 99 Posts: 13797 Credit: 208,696,464 RAC: 304

|

Thanks for the insights, Eric. No sign of recovery here, although it does appear to be falling at a slower rate than it has been. Grant Darwin NT |

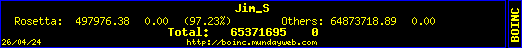

Jim_S Joined: 23 Feb 00 Posts: 4705 Credit: 64,560,357 RAC: 31

|

I'm Going Down With The Ship! And She's Still taking on water as We All slip deeper into the Abyss. ;-b....  I Desire Peace and Justice, Jim Scott (Mod-Ret.) |

ML1 Joined: 25 Nov 01 Posts: 20637 Credit: 7,508,002 RAC: 20

|

"I'm Going Down...! ...We All slip deeper into the Abyss. ;-b...." Fear not. It is all naught but a splash of statistics. The ripples will all settle as the "plop!" from the pebble of the new optimised app rolls by. The numbers will settle once more just as the disturbed mud at the bottom of the pond will once more settle. We should then find that the old machines will be pushed a little deeper into the mud as the newer machines bob and float upon the new level. Such is how "CreditNew" has been designed to work. We are all (vaguely) measured against the median average of all machines. Happy ever-faster crunchin'! Martin See new freedom: Mageia Linux Take a look for yourself: Linux Format The Future is what We all make IT (GPLv3) |

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

"Just a question: when you talk about S@H V6 and V7, are you talking about stock applications?" Well, he did mention "Since our results are mostly stock Windows and CUDA under Windows, those results set the normalization.", before mentioning the results for the "windows_intel"-application, so you can take for granted it's the stock applications what's giving the credits. "Because here almost everybody was on optimised applications on V6, which had about double throughput of stock applications." Yes, as expected the RAC-impact for v7 is similar to when FLOPS-counting was introduced, with roughly 10% real decrease and roughly 90% decrease due to optimized applications no longer being 2x faster than stock applications. "I make so many mistakes. But then just think of all the mistakes I don't make, although I might." |

shizaru Joined: 14 Jun 04 Posts: 1130 Credit: 1,967,904 RAC: 0

|

"[snip]...so you can take for granted it's the stock applications what's giving the credits."

Part 1 - Reply to Ingleside

At the risk of sounding like a broken record, the 7% drop likely shows that anonymous apps are actually factored in. As can be seen from anyone's stock CPU APR, V7 average credit should be showing a 10%-15% increase instead of a 7% drop. Factoring in the bulk of S@H users who weren't running opti-apps (i.e. the majority), I guess a 7% drop in throughput across the board sounds about right. If true then no, it cannot be taken 'for granted it's the stock applications what's giving the credits'. In fact, if opti-apps are thrown in the pile with stock apps to create an average then there's a (slim) chance opti-app performance is also used as a benchmark to hand out credit across all applications.

Part 2 - CreditNew is measuring app efficiency

What I don't know (and what everybody here is unable to confirm since it involves a semi-obscure CreditNew mechanism) is whether CreditNew recognizes and 'awards' a project's app efficiency. IOW, 'normalization' is a step further on down the credit granting path and therefore not necessarily tied in with what happens to a new type of WU and its first day at school. I'm almost 100% sure that CreditNew does measure app efficiency (awards the app a proportional amount of credit in regards to theoretical 100% app efficiency) and uses that (most efficient) app as a benchmark to hand out credit to other apps. In fact, it appears to be:

a) the whole point of using CreditNew instead of Eric's smoothly running (for the Seti project) "Actual-FLOPs-based" approach.

b) If the 'master' app* is running at 100% efficiency and awards 100 credits for a WU, then if replaced with a (less efficient) master-app with 70% efficiency then that same workunit would be awarded 70 credits instead of 100. If your first reaction to this sub-paragraph is "that doesn't make sense" then chances are it's because you are thinking along the lines of the "Actual-FLOPs-based" approach instead of how CreditNew actually operates.

c) This CreditNew feature is calculated by the formula PFC(J) = T * peak_flops(J) on the CreditNew page of Boinc's wiki.

Is there a guru in the house that can confirm or deny Part 2, with emphasis on the 70 credit example under section b)?

*master app = the 'master app' is the one app to rule them all. It is responsible for letting all other applications get their credit (in an ideal world all apps would receive their credit independently). For Seti the 'master app' is the stock CPU app. (Or is it? See Part 3)

Part 3 - Why I'm Flogging a Dead Horse or: How I Learned to Stop Worrying and Love Voodoo Economics

While I'm confident in my understanding of CreditNew as to everything mentioned in Part 2 (though I'd still like to have it confirmed by a guru), here's what I have no way of knowing even if I have understood it correctly: The 'master app' would normally be the stock CPU app. This is implied under Cross-version normalization:

• "Version normalization addresses the common situation where an app's GPU version is much less efficient than the CPU version (i.e. the ratio of actual FLOPs to peak FLOPs is much less). To a certain extent, this mechanism shifts the system towards the "Actual FLOPs" philosophy, since credit is granted based on the most efficient app version. It's not exactly "Actual FLOPs", since the most efficient version may not be 100% efficient."

It appears to be replaceable, though, with an anonymous app (to use as the new 'master app') should someone choose to do so. So even though CreditNew claims:

"For each app, we periodically compute cpu_pfc (the weighted average of app_version.pfc over CPU app versions) and similarly gpu_pfc"

...there is an asterisk under Anonymous platform that says:

• "In the current design, anonymous platform jobs don't contribute to app.min_avg_pfc, but it may be used to determine their credit. This may cause problems: e.g., suppose a project offers an inefficient version and volunteers make a much more efficient version and run it anonymous platform. They'd get an unfairly low amount of credit. This could be fixed by creating app_version records representing all anonymous platform apps of a given platform and resource type."

So the million dollar question is: Were app_version records created for anonymous V6 apps? ...which then begs the question: Were anonymous V6 CPU apps used as a 'master app'? Anyone? Anyone? Bueller? Anyone? |

|
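The formula quoted in the post above, PFC(J) = T * peak_flops(J), and the wiki's notion of efficiency as the ratio of actual FLOPs to peak FLOPs can be sketched in a few lines. This only illustrates those two quantities; it does not reproduce how CreditNew turns them into granted credit, and every number and name below is hypothetical.

```python
def peak_flop_count(elapsed_seconds: float, peak_flops: float) -> float:
    """PFC(J) = T * peak_flops(J), as quoted from the CreditNew wiki page."""
    return elapsed_seconds * peak_flops


def efficiency(actual_flops: float, pfc: float) -> float:
    """Ratio of actual FLOPs to peak FLOPs for one job."""
    return actual_flops / pfc


# Hypothetical work unit that really needs 2.8e13 floating point operations.
ACTUAL_FLOPS = 2.8e13

# Hypothetical CPU version: 10,000 s on a device with 4 GFLOPS peak.
cpu_pfc = peak_flop_count(elapsed_seconds=10_000, peak_flops=4.0e9)

# Hypothetical GPU version: 1,000 s on a device with 400 GFLOPS peak.
gpu_pfc = peak_flop_count(elapsed_seconds=1_000, peak_flops=4.0e11)

print(f"CPU version: PFC = {cpu_pfc:.2e}, efficiency = {efficiency(ACTUAL_FLOPS, cpu_pfc):.2f}")
print(f"GPU version: PFC = {gpu_pfc:.2e}, efficiency = {efficiency(ACTUAL_FLOPS, gpu_pfc):.2f}")
```

With these made-up figures the GPU version finishes ten times sooner but has a peak FLOP count ten times larger, which is the situation the quoted cross-version normalization passage is describing when it calls the GPU version "much less efficient".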

Sleepy Joined: 21 May 99 Posts: 219 Credit: 98,947,784 RAC: 28,360

|

It is as if you are climbing a mountain at a certain pace, and all of a sudden something cuts your speed in half. You are going to get to the top not in the afternoon, but in the dead of night. The top might be anything: a fellow cruncher you want to overtake or a credit level. While your speed has been normalised, the height of the top has not. Everest is still 8848 m high. Not normalised to 4424 m. THIS MAKES A DIFFERENCE. I do not know why many people here continue to say that everything is alright. It may still be alright (our lives and SETI itself have many more important issues. We should not be discussing SETI as if it were our lives, when obviously it isn't.), and it mainly is. I am still here crunching, indeed. But it is not the same. And normalising (or the fact that we are all in the same boat) does not change this. It may be fine for the future, but not for what has been done so far globally. Also, SETI's share of total BOINC credit (and thus, at least nominally, of computation) will decrease. Does that mean SETI is working worse? We know it is not (actually we are even doing better work). But for the "press" it may look that way. This may be of some concern for project managers. Sleepy |

Raistmer Joined: 16 Jun 01 Posts: 6325 Credit: 106,370,077 RAC: 121

|

It's definitely not alright. In my understanding (just as Alex Storey pointed out), the better the stock app is, the less credit the project will generate per second of work. This optimization punishment is the worst part of the CreditNew system because it really works against us. SETI apps news We're not gonna fight them. We're gonna transcend them. |

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13797 Credit: 208,696,464 RAC: 304

|

"It's definitely not alright." Yeah, it doesn't make much sense. Ideally you'd get more credit for doing more work in a shorter period of time, i.e. with the optimised applications. So by employing them in the stock application you're making all the processing more efficient. Yet you get less credit for having the more efficient processing. Grant Darwin NT |

|

Ingleside Joined: 4 Feb 03 Posts: 1546 Credit: 15,832,022 RAC: 13

|

"b) If the 'master' app* is running at 100% efficiency and awards 100 credits for a WU, then if replaced with a (less efficient) master-app with 70% efficiency then that same workunit would be awarded 70 credits instead of 100. If your first reaction to this sub-paragraph is "that doesn't make sense" then chances are it's because you are thinking along the lines of the "Actual-FLOPs-based" approach instead of how CreditNew actually operates." Trying to make much sense of CreditNew is very difficult, but at least one of the four design goals is: "different projects should grant about the same amount of credit per host-hour, averaged over hosts". So, if CreditNew follows this requirement, and also remembering that the Wiki mentions separate statistics for "app_version" multiple times, meaning the "100% efficiency" and "70% efficiency" must be different applications and should be treated as different statistics, there are two possibilities for the credit granted to your 100-point WU(*): 1: Less efficient means longer run-time, so you'll now get 143 points because of "same amount of credit per host-hour". 2: Due to "averaged over hosts", you'll get 100 points regardless of app_version. By looking at CreditNew, I can't really say which of these two will be the result, nor whether CreditNew follows the 4 design goals. (*): Since it's the "same wu" you'll get the exact same credit regardless of application version, so I have changed it to "similar wu". "I make so many mistakes. But then just think of all the mistakes I don't make, although I might." |
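The competing readings in the last two posts give three different answers for the same 100-credit work unit when the app version is only 70% as efficient. The sketch below simply lays the three side by side. It assumes that "70% efficiency" means the job takes 1/0.7 times as long, and it does not claim which reading (if any) CreditNew actually implements; the function names are made up.

```python
BASE_CREDIT = 100.0   # credit for the work unit with the 100%-efficient version
EFFICIENCY = 0.7      # the less efficient version from the example


def credit_scaled_by_efficiency(base: float, eff: float) -> float:
    """shizaru's reading: credit shrinks with the benchmark app's efficiency."""
    return base * eff


def credit_per_host_hour(base: float, eff: float) -> float:
    """Ingleside's option 1: same credit per host-hour, so a longer run earns more."""
    return base / eff


def credit_averaged_over_hosts(base: float, eff: float) -> float:
    """Ingleside's option 2: same credit regardless of app version."""
    return base


for label, fn in (
    ("scaled by efficiency", credit_scaled_by_efficiency),
    ("same credit per host-hour", credit_per_host_hour),
    ("averaged over hosts", credit_averaged_over_hosts),
):
    print(f"{label}: about {fn(BASE_CREDIT, EFFICIENCY):.0f} credits")
```

The middle case is where the 143 in the post comes from: 100 divided by 0.7 is roughly 142.9.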

KWSN Ekky Ekky Ekky Joined: 25 May 99 Posts: 944 Credit: 52,956,491 RAC: 67

|

All I know is that my credit is now running around 22% lower than it was 2 weeks ago :-(

|

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

"All I know is that my credit is now running around 22% lower than it was 2 weeks ago :-(" You are one of the lucky ones who dropped only 22%; mine fell from 450k to less than 200k (>50%)

|

tullio Joined: 9 Apr 04 Posts: 8797 Credit: 2,930,782 RAC: 1

|

I have 5 units waiting for validation, one Astropulse running and no SETI@home new tasks. No wonder my RAC is plummeting. Tullio |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.