Going from GTX 470 to 500/600 series what model is best?


| Author | Message |

|---|---|

|

Team kizb Send message Joined: 8 Mar 01 Posts: 219 Credit: 3,709,162 RAC: 0

|

Great information tbret, thanks for the data on the GTX 470. Based on your data, it looks like running my 3 470's @ 0.33 would, from a RAC/cost standpoint, still put up some strong numbers compared to selling them and buying 600 series cards. My Computers: █ Blue Offline █ Green Offline █ Red Offline |

W-K 666  Send message Joined: 18 May 99 Posts: 19725 Credit: 40,757,560 RAC: 67

|

Great information tbret, thanks for the data on the GTX 470. Looks like based on your data running my 3 470's @ 0.33 from a RAC/cost standpoint they would still put up some strong numbers compared to selling them and buying 600 series cards. But you need to factor in the power consumption: the GTX 470 uses about 100 W more than the GTX 670 at full load. No idea what your energy costs are per kWh, but at 20 cents (US) per kWh that would be approaching $200/year/card running 24/7. |
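For anyone who wants to plug in their own numbers, the estimate above can be reproduced with a short Python sketch. It assumes the 100 W difference is drawn continuously and that the 20 cents is a price per kWh; the function name `annual_power_cost` is made up for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours when crunching 24/7


def annual_power_cost(extra_watts: float, rate_per_kwh: float) -> float:
    """Yearly cost in dollars of drawing `extra_watts` around the clock."""
    kwh_per_year = extra_watts / 1000 * HOURS_PER_YEAR
    return kwh_per_year * rate_per_kwh


# Figures from the post: about 100 W extra per card, 20 cents (US) per kWh.
cost = annual_power_cost(100, 0.20)
print(f"${cost:.2f} per card per year")
```

At those figures the result lands a little under the $200 mentioned; a higher rate or a card that draws more than 100 W extra pushes it up in proportion.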

|

tbret Send message Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

There's all kinds of things you need to factor in when you are choosing a card or cards. For instance, I don't play games. These are strictly crunchers. If I were worried about playing games, I would probably not have bought the 470s.

In my case I bought the 470s as refurb units from EVGA and paid $119 for them. The 660Tis were (at the time) $350 each. I can run the 470s 24/7 for a long time before I chew up the $460 difference in cost. But if I had needed a new $200 power supply to run the 470s, and could have run the 660Tis with the one I already owned, I probably wouldn't have bought the 470s (they wouldn't have been as cheap to put to work). The 560Ti-448 was $149.00 after rebate. I could buy two for less than the price of one 660Ti.

My point being: everyone needs to figure in their own set of circumstances and decide for themselves what is best for them to do. But to be able to do that they have to have at least a ballpark idea of what the crunching power of one card compared to another card really is. To calculate "pay-back" or "break-even" or "total cost of ownership" you have to know all kinds of things that a test of crunching power can't tell you.

1) Will my existing hardware support it?
2) Can I cool it?
3) What is the purchase price?
4) What is my cost of electricity?
5) How long do I plan to own it?
6) Do I play games and, if so, what games?
7) Will I overclock the card? (changes power consumption assumptions)
...etc.

We can't even start the calculation if we don't know the crunching speed of the cards.
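A rough way to put the "break-even" idea into numbers is sketched below in Python. The per-card prices ($119 and $350) come from the post; the 100 W extra draw is borrowed from the 470-versus-670 figure earlier in the thread and the 20 cents per kWh rate is the example rate used there, so both are assumptions for a 470-versus-660Ti comparison. The function name is hypothetical, and the sketch deliberately ignores differences in crunching speed.

```python
def break_even_hours(price_gap: float, extra_watts: float, rate_per_kwh: float) -> float:
    """Hours of running before the extra electricity eats the purchase savings."""
    extra_cost_per_hour = extra_watts / 1000 * rate_per_kwh
    return price_gap / extra_cost_per_hour


price_gap = 350 - 119  # one 660Ti versus one refurb 470, in dollars
hours = break_even_hours(price_gap, extra_watts=100, rate_per_kwh=0.20)  # assumed draw and rate
years = hours / (24 * 365)
print(f"{hours:.0f} hours, or about {years:.1f} years running 24/7")
```

Swapping in your own electricity rate and measured wattage changes the answer quickly, which is the point being made above: the calculation only means something with your own circumstances in it.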

|

|

Speedy Send message Joined: 26 Jun 04 Posts: 1647 Credit: 12,921,799 RAC: 89

|

Please find my answers to the questions above and to the quoted text. I ran Fred's optimisation application, version 1.7, and it said the optimal number of tasks for my 470 was three units at a time. At present I'm going to run my card at one unit at a time because I will not be here throughout the day to monitor the temperature. I ran the card with three units for about three minutes or so; the card temperature was around 85° and the fan, set to automatic, was over 3000 rpm. The fan sounded like a jet engine; I could hear it over the top of the CPU fan. Please see the results from Fred's optimised application below.

Starting automatic test: (x41g)

17 December 2012 - 09:10:08 Start, devices: 1, device count: 1 (1.00) --------------------------------------------------------------------------- Results: Device: 0, device count: 1, average time / count: 167, average time on device: 167 Seconds (2 Minutes, 47 Seconds) Next :---------------------------------------------------------------------------

17 December 2012 - 09:12:57 Start, devices: 1, device count: 2 (0.50) --------------------------------------------------------------------------- Results: Device: 0, device count: 2, average time / count: 278, average time on device: 139 Seconds (2 Minutes, 19 Seconds) Next :---------------------------------------------------------------------------

17 December 2012 - 09:17:37 Start, devices: 1, device count: 3 (0.33) --------------------------------------------------------------------------- Results: Device: 0, device count: 3, average time / count: 407, average time on device: 135 Seconds (2 Minutes, 15 Seconds) Next :---------------------------------------------------------------------------

17 December 2012 - 09:24:29 Start, devices: 1, device count: 4 (0.25) --------------------------------------------------------------------------- Results: Device: 0, device count: 4, average time / count: 543, average time on device: 135 Seconds (2 Minutes, 15 Seconds)

>> The best average time found: 135 Seconds (2 Minutes, 15 Seconds), with count: 0.33 (3)
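The way the tool arrives at "3 at a time" can be checked by hand. The Python sketch below assumes that "average time / count" is the elapsed time per task when that many tasks share the card, and that "average time on device" is that figure divided by the count (which matches the logged numbers once truncated). The variable and function names are made up for illustration and are not taken from Fred's tool.

```python
# (tasks run at once, average elapsed seconds per task), copied from the x41g log above
runs = [(1, 167), (2, 278), (3, 407), (4, 543)]


def seconds_per_task(count: int, elapsed: float) -> float:
    """Effective card time per task when `count` tasks run side by side."""
    return elapsed / count


# The lowest effective time per task is the best setting.
best_count, best_elapsed = min(runs, key=lambda r: seconds_per_task(*r))

for count, elapsed in runs:
    print(f"{count} at once: {seconds_per_task(count, elapsed):.1f} s per task")
print(f"best: {best_count} at once")
```

Going from 3 to 4 tasks gains nothing on this card, so the only thing the fourth task would add is heat.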

|

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

I don't know where you got the idea that a GTX 660 is anywhere close to the speed of your GTX 570, but that's not even close to being right. I pulled the number from memory; apparently I remember wrong. Nonetheless, the chart in my next post addresses the $-per-crunch questions. ------------------------------------------------------------------------ OK thanks for details. I guess the question should be asked: What card gives the most RAC for the least power output? Rig runs round 12 hours a day I have removed this processor from my machine and replaced it with a quad core. It was an E8500 connected to a ZALMAN 9500led. Yup, a 3.16GHz chip on air (roughly 67 degrees F ambient) running at 4.2GHz. At 1.4v with the Zalman it would run around 60C. The chip and cooler are for sale. Unfortunately the quad won't go above 3.5GHz regardless of what I do to it. A little disappointing.

|

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

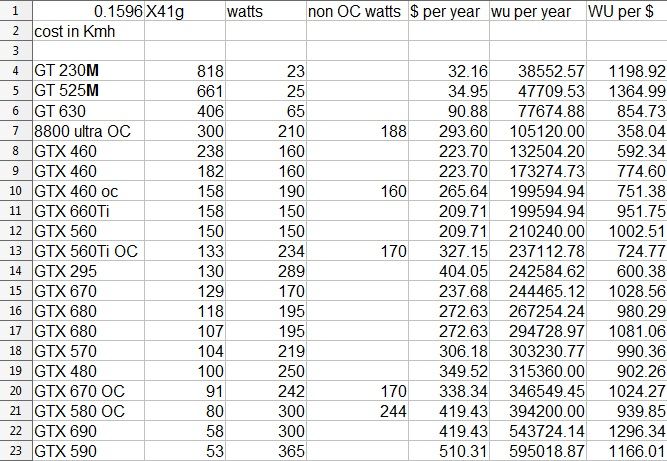

My OC GTX570classy pulled 95 seconds on this test. It could easily be pushed another 150MHz with some volts, but I left the voltage stock. My OC is light. I run 3 units at once: 64% fan, 67 degrees F ambient, card @ 59C, 99% load. I think this chart really gets down to the nitty-gritty on this subject. 990 vs 1028: that's not even a 4% increase in efficiency. Unfortunately I didn't have the GTX 660 when I made this chart. Hmm, I wonder if the standard 660 would be north or south of that 1000 WU per watt number? It is interesting how close most of these cards are. |
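The "not even 4%" figure, and the kind of efficiency number the chart appears to plot, can be sketched in Python as below. The 990 and 1028 values and the 95 second time come from the post, and the $0.1596 per kWh rate is the one quoted for the chart later in the thread. The 250 W draw is purely hypothetical (no wattage is given for this card here), and both function names are made up for illustration.

```python
def percent_gain(old: float, new: float) -> float:
    """Relative increase from `old` to `new`, in percent."""
    return (new - old) / old * 100


def wu_per_dollar(seconds_per_wu: float, watts: float, rate_per_kwh: float) -> float:
    """Work units completed per dollar of electricity."""
    wu_per_hour = 3600 / seconds_per_wu
    dollars_per_hour = watts / 1000 * rate_per_kwh
    return wu_per_hour / dollars_per_hour


gain = percent_gain(990, 1028)
print(f"efficiency gain: {gain:.1f}%")

# 95 s per WU is from the post; 250 W is an assumed draw, not a measurement.
example = wu_per_dollar(95, watts=250, rate_per_kwh=0.1596)
print(f"about {example:.0f} WU per dollar at the assumed wattage")
```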

|

tbret Send message Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

Now you've put into a chart what I've been unhappy with NVIDIA about since I got my first 670. The numbering of the models is deceptive *because* we "tend" to compare a 670 with a 570 believing the "70"-part number is telling us something. With all of the pressure these companies face to make their products work on air, it is no wonder they try to make it look as though their new products are so much more efficient than their old. And frankly, for game-playing, I think some of the new technology and features may be working to reduce power-consumption. For us crunchers? Not-so-much.

You should stick the "normal" (read: reference) 560Ti numbers in there from this thread. The only number I doubt (I don't doubt your calculations, I doubt the comparison is valid) is the greater efficiency you're showing with the over-clocked GTX 670. If you pulled those from Fred's chart, I think Jason was using a CUDA version that was different than the one that tested the non-over-clocked 670. I suspect, strongly, that the efficiency gain we see there is due to the applications. I can't think of another example of anything, CPU or GPU, that gets more efficient as it is over-clocked and more energy goes to waste-heat.

|

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

The OC 670 drops 4 wu per $ of power... That is to say it's not as efficient according to the chart. No change needed. But to the driver question look at the 2 680's......major increase in performance from 2 similar cards.

|

|

tbret Send message Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

The OC 670 drops 4 wu per $ of power... That is to say it's not as efficient according to the chart. No change needed. You're right, I read it backwards for some weird reason. EDIT: In fact, I'll go so far as to say, "Hey! That was stupid of me! I apologize!"

|

W-K 666  Send message Joined: 18 May 99 Posts: 19725 Credit: 40,757,560 RAC: 67

|

I think some of the new technology and features may be working to reduce power-consumption. That is exactly what all the new technology is about. Whether it be GPUs or CPUs, the speeds have reached limits and cannot go any faster unless the heat (read: power) is reduced. Stock CPU frequencies have not risen since the days of the P4, so to do more work they have reduced power and then increased the number of cores. And without a major, major redesign of the motherboard and case, the stock cooling of GPUs restricts the max performance of the GPU, so again they are going for heat reduction. This includes removing parts in the GPU that don't affect the games players, i.e. removing some of the physics processing that Seti likes. I am happy with my GTX 670s, fitted to three different computers, two on my account and one on my youngest son's. They perform as expected and didn't require any other changes, like bigger power supplies. |

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

No biggie. I wonder if the results of these tests bear out in the real world. The 590 and 690 own! Too bad they cost so much.

|

|

Speedy Send message Joined: 26 Jun 04 Posts: 1647 Credit: 12,921,799 RAC: 89

|

Snowmain, thank you for the chart very interesting indeed. For your 600 series cards were they running them in a PCIE 3 or 2 slot?

|

|

Grant (SSSF) Send message Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

|

For your 600 series cards were they running them in a PCIE 3 or 2 slot? It wouldn't make any difference. Grant Darwin NT |

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

And what rate is electricity calculated at? Says it right there on the chart: $.1596 per kilowatt hour. Which is my entire power bill divided by the kWh used.

|

Snowmain Send message Joined: 17 Nov 05 Posts: 75 Credit: 30,681,449 RAC: 83

|

It's probably higher than that... what's your total bill divided by your total kWh? If 5 cents is your cost... WOW, I wish we had your power company... I would buy another GTX570.

|

W-K 666  Send message Joined: 18 May 99 Posts: 19725 Credit: 40,757,560 RAC: 67

|

It's probably higher than that...whats your total bill divided by your total KWH? If 5 cents is your cost...WOW I wish we had your power company...I would buy another GTX570. Vic, you have extremely low rates, these are the figures from my last bill, and they have risen 6% since then. First nnn kWh x 23.623p Next xxx kWh x 10.501p and with the exchange rate at £1 = $1.62 Then UK rates are First nnn kWh x 23.623p * 1.62 = $0.38 Next xxx kWh x 10.501p * 1.62 = $0.17 |

|

Grant (SSSF) Send message Joined: 19 Aug 99 Posts: 13960 Credit: 208,696,464 RAC: 304

|

Vic, you have extremely low rates, these are the figures from my last bill, and they have risen 6% since then. Come Jan 1 the power here is going up 30%, Water 40% & Sewerage 25%. I think my pay's gone up by about 4% over the last 5 years. >:-/ Grant Darwin NT |

|

tbret Send message Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

I know everyone doesn't have the Kepler cards, but I edited Fred's Performance Tool and ran the latest x41zc on the 310.70 drivers. The numbers are dramatically different on this computer (this is the dual 660Ti and 670). I have not and may never run x41zc and 310.70 on my Fermis (or I may, eventually). The point is that I do not know if I would have equivalent "bumps" in the numbers like this from the Fermi cards. What I do know is that this combination of application and driver made a rather large difference (see way above):

Starting test: (x41zc)

21 December 2012 - 03:40:31 Start, devices: 3, device count: 3 (0.33) --------------------------------------------------------------------------- Results:
Device: 0, device count: 3, average time / count: 358, average time on device: 119 Seconds (1 Minutes, 59 Seconds)
Device: 1, device count: 3, average time / count: 357, average time on device: 119 Seconds (1 Minutes, 59 Seconds)
Device: 2, device count: 3, average time / count: 306, average time on device: 102 Seconds (1 Minutes, 42 Seconds)

EDIT: Please resist the urge to make a new comparison between these new numbers and the old numbers on a different card / computer. These can only be legitimately compared to the same computer. The comparison to draw is between the x41g version of the applications and the x41zc version.

Double-EDIT: To make the comparison easier, below:
Device 0 = NVIDIA reference GTX 660Ti
Device 1 = NVIDIA reference GTX 660Ti
Device 2 = 02G-P4-2670-KR EVGA GeForce GTX 670

Starting test: (x41g)

16 December 2012 - 03:26:36 Start, devices: 3, device count: 3 (0.33) --------------------------------------------------------------------------- Results:
Device: 0, device count: 3, average time / count: 475, average time on device: 158 Seconds (2 Minutes, 38 Seconds)
Device: 1, device count: 3, average time / count: 477, average time on device: 159 Seconds (2 Minutes, 39 Seconds)
Device: 2, device count: 3, average time / count: 401, average time on device: 133 Seconds (2 Minutes, 13 Seconds)
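To put a number on "a rather large difference", the two runs above can be compared device by device with a short Python sketch. It uses only the "average time on device" figures from the two logs; the dictionary and function names are made up for illustration, and, as the post says, the comparison is only meaningful within this one computer.

```python
# "average time on device" in seconds, 3 WU at a time, same computer
x41g = {0: 158, 1: 159, 2: 133}   # 16 December 2012 run
x41zc = {0: 119, 1: 119, 2: 102}  # 21 December 2012 run, driver 310.70
labels = {0: "GTX 660Ti", 1: "GTX 660Ti", 2: "EVGA GTX 670"}


def percent_reduction(old: float, new: float) -> float:
    """How much shorter `new` is than `old`, in percent."""
    return (old - new) / old * 100


reductions = {dev: percent_reduction(x41g[dev], x41zc[dev]) for dev in x41g}

for dev, pct in reductions.items():
    print(f"Device {dev} ({labels[dev]}): {x41g[dev]} s -> {x41zc[dev]} s, {pct:.1f}% less time")
```

All three cards come out at roughly a quarter less time per work unit, which says nothing about how much of that is the application and how much is the driver.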

|

|

tbret Send message Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

The EVGA Classified Ultra (factory overclock) 560Ti-448 that reported 1:57 on x41g reported 1:45 on x41zc with driver 310.70. That makes the stock-clocked 670 three or so seconds faster than the 560Ti-448 with the new apps and driver running 3 WU at a time.

|

|

Speedy Send message Joined: 26 Jun 04 Posts: 1647 Credit: 12,921,799 RAC: 89

|

Very nice times. I'd be very interested to see times from a 680. If anyone can post times with the x41zc application I'd appreciate it.

|
