boinc resource share


| Author | Message |

|---|---|

|

John McLeod VII Joined: 15 Jul 99 Posts: 24806 Credit: 790,712 RAC: 0

|

"Thanks for all the feedback. I think I will see if I have the patience to leave boinc alone and let it work through the existing queues. I'm puzzled why all the other computers seem to be operating 'normally' with the same settings as the quad, but it must have something to do with the large queue the quad acquired when I went to 10/10." There are two settings that will change what is happening: Connect every X days and extra work. Make them very small, wait for the queues to empty and watch what happens. Increase extra work slowly and watch what happens. Please keep Connect every X set to approximate reality. The one thing we could do would be to remove Connect Every X and calculate it automatically. However, the code to detect when the network is available has not been implemented on all platforms, at least until very recently.   BOINC WIKI |

|

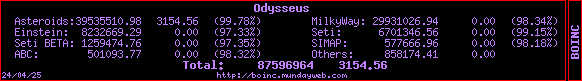

Odysseus Joined: 26 Jul 99 Posts: 1808 Credit: 6,701,347 RAC: 6

|

I think part of the problem is that users have become used to managing their queues with the CI setting, which is something of an illogical kluge to start with. Now that it actually means what it says (to sufficiently recent BOINC clients, anyway), people have to get used to the idea …  |

|

Blu Dude Joined: 28 Dec 07 Posts: 83 Credit: 34,940 RAC: 0

|

Two things from my crunchers, all with kind-of extreme resource sharing: (1) BOINC looks like it chooses which projects to download from based on credit, so that it matches the share percentage (host individual credit, more specifically, but I could be wrong). (2) It generally takes 1-3 weeks per core to balance out a resource share. Just leave BOINC alone, it's a big kid now and can take care of itself. I'm a Prefectionist ;) |

|

John McLeod VII Joined: 15 Jul 99 Posts: 24806 Credit: 790,712 RAC: 0

|

"Two things from my crunchers, all with kind-of extreme resource sharing" Download is based on Long Term Debt. Highest LTD gets first crack at downloads.   BOINC WIKI |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14686 Credit: 200,643,578 RAC: 874

|

"Two things from my crunchers, all with kind-of extreme resource sharing" Not if it's my octo on SETI Beta. Still working on that one. |

|

archae86 Joined: 31 Aug 99 Posts: 909 Credit: 1,582,816 RAC: 0

|

In your Case 1 here, are you sure that you aren't running into the -TSI gate for the low share project?

So long as I'm in tamper mode, I'm going to twiddle client_state.xml to put the SETI long-term debt less negative than the SETI short-term debt, by 2000 on the Q6600, and 1000 on the E6600. It appears that the name of the game is to assure that the (off-center) oscillation center in STD actually puts both the SETI and the Einstein LTD in the "fetch allowed" range during at least part of the task-switch cycle. That was definitely not happening on either of these hosts for the past month, and did not appear to be reconciling itself.

The -TSI gate, as Alinator terms it (the requirement that the number of seconds of Long-Term Debt for a project be no more negative than the current "Switch between applications" parameter), seems clearly at the heart of my case 1, and seems clearly to be a likely issue for people running multi-CPU hosts with a project on low resource share.

I have four hosts: two uniprocessor, one Duo, and one Quad. All are currently running Einstein at 96% share and SETI at 4% share. The operation of the task scheduler (responding, of course, to Short-Term Debt) cycles the SETI STD between approximately 0 and approximately -3600 on my uniprocessor hosts. While this is off-center, it means that if LTD=STD, fetch is allowed for both projects through most of the cycle. However, on the Duo, the approximate STD cycle range is from -1500 to -5000. So if LTD=STD, fetch for SETI is only allowed for about half the cycle. On the Quad, the approximate STD cycle range is from -5000 to -8600. Thus if LTD=STD, no SETI fetch occurs at any point in the normal cycle. When SETI goes to zero available tasks, then STD shrinks to the point where fetch is allowed. However, crucially, the equilibrium does NOT drive the LTD to STD relation toward the point at which stable prefetch is likely.

The behavior I've seen for the past month, in which the Quad perpetually goes through the sequence of prefetching a full queue, then working it off to zero, then zero SETI for a few hours, then a full-queue prefetch with multiple task startup, appears to be the stable expected outcome. When project downtime and other effects are factored in, I actually appear to have seen gradual creep to SETI LTD being somewhat more negative than SETI STD on all four hosts--in other words, biased in the direction of shutting down SETI task fetching.

The bias for uniprocessors, which pushes the centerpoint of the STD cycle for the low resource project negative to about half the "switch between applications" time, is perhaps innocuous, and likely the unintended consequence of some other desired relation. But the fact that it is far more negative for multi-CPU hosts has the observed destructive effect on prefetch stability, in the absence of a corresponding bias for the allowed fetching range and the LTD to STD relation toward which the whole scheme trends.

With my 96/4 resource share, I've decided to do (yet another) LTD tampering, and deliberately set my uniprocessor hosts to a SETI LTD 1800 more positive than STD, the Duo 3600, and the Quad 7200. While probably not ideally centered in the fetch range, I believe that all of these will allow prefetch during the full normal cycle, and have some remaining margin for oddities.

I recognize that this is a side-issue to the original poster's topic, though somewhat related. I'm grateful to Alinator for pointing out that his -TSI gate was crucial to the behavior I reported. |
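The arithmetic behind the -TSI gate argument in the post above can be checked with a short sketch. This is a toy model of the behaviour as described in the thread, not BOINC's scheduler code. It assumes a "switch between applications" time of 3600 seconds (the thread does not state the value), an STD that sweeps evenly between the quoted bounds, and an LTD equal to STD plus a fixed offset. The name `fetch_allowed_fraction` is made up for illustration.

```python
# Toy model of the "-TSI gate" as described in this thread (not BOINC source).
# Assumptions: TSI = 3600 s, STD sweeps evenly between the quoted bounds,
# and LTD = STD + a fixed offset.

TSI = 3600.0  # "Switch between applications" in seconds (assumed value)

# Approximate SETI STD swing (high, low) in seconds, as quoted in the post.
STD_SWING = {
    "uniprocessor": (0.0, -3600.0),
    "duo": (-1500.0, -5000.0),
    "quad": (-5000.0, -8600.0),
}

# LTD offsets (seconds more positive than STD) chosen by the poster.
OFFSETS = {"uniprocessor": 1800.0, "duo": 3600.0, "quad": 7200.0}


def fetch_allowed_fraction(std_high, std_low, ltd_offset=0.0, tsi=TSI):
    """Fraction of the STD swing during which LTD is no more negative than -TSI."""
    ltd_high = std_high + ltd_offset
    ltd_low = std_low + ltd_offset
    gate = -tsi
    if ltd_low >= gate:      # whole swing is inside the "fetch allowed" range
        return 1.0
    if ltd_high <= gate:     # whole swing is below the gate
        return 0.0
    return (ltd_high - gate) / (ltd_high - ltd_low)


if __name__ == "__main__":
    for host, (high, low) in STD_SWING.items():
        plain = fetch_allowed_fraction(high, low)
        shifted = fetch_allowed_fraction(high, low, OFFSETS[host])
        print(f"{host:13s} LTD=STD: {plain:.2f}   with offset: {shifted:.2f}")
```

Under these assumptions the model gives 1.0, 0.6 and 0.0 for the uniprocessor, Duo and Quad when LTD = STD, and 1.0 for all three once the 1800/3600/7200 offsets are applied, which is in line with the "most of the cycle", "about half" and "no fetch" observations in the post.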

|

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

Hmmmm... It's going to take me a little bit to digest this fully. ;-) My turn to ask for clarification though. When you say 'stable prefetch', I take it you are referring to a state where the CC will pull and maintain a fresh task in reserve? IOW, it will always have an 'on-deck' batter, so to speak, at the first possible opportunity consistent with your preference settings. Alinator |

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

Hmmmm... The larger the connect interval is, the less likely this is to happen. I'll leave the details to JM7, but when there is deadline pressure, if BOINC fetches work, there is a risk that the new work will also have a short deadline, increasing the deadline pressure. |

|

archae86 Joined: 31 Aug 99 Posts: 909 Credit: 1,582,816 RAC: 0

|

By "stable prefetch" I mean behavior similar to that I nearly always see on my high-resource project (Einstein). I see a queue maintained close to the size I've requested (about 4 days). When the queue drops moderately below that, a small amount of new work is requested (usually a single task). When an outage or a change in estimated crunching speed puts the queue low by a few hours, several tasks are prefetched together, but about the right amount to restore the queue to its intended size. When a change in estimated crunching speed makes the queue seem overfull, fetching ceases for a few hours, then resumes, usually with just a single new task.

Your description would fit if my requested "Computer is connected to the Internet about every" plus "Maintain enough work for an additional" were small compared to an estimated task execution time as de-rated for resource share, but since I have my connection interval set to .005 days and my additional work to a little over four days, the usual queue for SETI would be half a dozen or more work units on the Quad.

All of which is categorically different from the boom and bust sequence I've been having for SETI fetching on the same Quad host: fetch about 10 to 30 tasks adding up to four days of estimated allocated crunching time, work them down to zero with no additional fetches for four days, run with zero SETI queue for a few hours, then another big bang fetch. |

|

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

OK.... Here is my current working hypothesis on this: AFAICT, unless the published documentation is just plain wrong, there is no direct LTD/STD relationship in the work fetch policy. This doesn't mean that the causal relationships between STD and LTD you are observing aren't valid or don't exist.

However, there is one other factor we haven't been taking into account, and that is the Project Shortfall value when the Work Fetcher makes its run to decide which project to ask for more work when the cache needs refilling. This is what I currently think is causing the 'drift' in the STD/LTD work fetch trigger point in your case, and the divergence from the optimum efficiency work mix in the experiment Richard is running. This value comes from what I'm going to assume is the latest run of the Round Robin Simulator and, at least for me, is the hardest to track and account for when I'm evaluating expected behaviour.

The problem I'm having right now is that I'm not seeing a way to 'isolate' it as the variable of interest without changing the basic 'rules of the game'. IOW, you can't just go in and set an 'arbitrary' value for it (like you can with LTD or STD, for example) and then let BOINC run on its own to see what happens. It seems to me the best you can do is to make the adjustments you can and then monitor the output value for Project Shortfall in the logs. Needless to say, this makes it somewhat time consuming and more difficult to collect enough data to rule out spurious effects and build confidence in the results when you have to isolate a variable via the 'backdoor' like that. Alinator |

|

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

Agreed, but a given in the experiments here is that CI/CO has been chosen so that deadline pressure shouldn't be an issue even if the next fetch would have a high tightness factor. Alinator |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14686 Credit: 200,643,578 RAC: 874

|

OK.... I'll buy that. STD is completely irrelevant to work fetch: LTD acts as an inhibitor (but not an absolute prohibition) when below -TSI: and if more than one project makes it through Round Robin when there's a global shortfall, the contactable project with the highest LTD gets the call.

I was just sitting here pondering your post, when there was a flicker of activity on the other monitor: two of the 13fe08ac errored out, thus presumably suddenly reducing the known "work on hand" below threshold and triggering a fetch. So then this happened:

13/03/2008 20:00:19|SETI@home Beta Test|Sending scheduler request: To fetch work. Requesting 785 seconds of work, reporting 1 completed tasks
13/03/2008 20:00:34|SETI@home Beta Test|Scheduler request succeeded: got 1 new tasks
13/03/2008 20:01:15|SETI@home|Sending scheduler request: To fetch work. Requesting 3468 seconds of work, reporting 2 completed tasks
13/03/2008 20:01:20|SETI@home|Scheduler request succeeded: got 2 new tasks
13/03/2008 20:01:35|SETI@home|Sending scheduler request: To fetch work. Requesting 3514 seconds of work, reporting 0 completed tasks
13/03/2008 20:01:40|SETI@home|Scheduler request succeeded: got 2 new tasks
13/03/2008 20:01:56|SETI@home|Sending scheduler request: To fetch work. Requesting 3389 seconds of work, reporting 0 completed tasks
13/03/2008 20:02:01|SETI@home|Scheduler request succeeded: got 1 new tasks
13/03/2008 20:02:16|SETI@home|Sending scheduler request: To fetch work. Requesting 362 seconds of work, reporting 0 completed tasks
13/03/2008 20:02:21|SETI@home|Scheduler request succeeded: got 1 new tasks

All downloads completed between the scheduler requests (I've edited them out to save space), so there were no comms glitches to get in the way. So why, oh why, is the work fetcher disobeying Rom's heartfelt pleas to do all the work fetch/reporting in blocked batches, to save database stress?

[Edit - each of the allocations was enough to meet the number of seconds requested, by a comfortable margin. Beta got 4:54 (h:mm), SETI got 2 x 0:54, then 2 x 0:54, then 1 x 3:08, finally 1 x 3:08] |
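To put numbers on the "why so many separate requests" question, the log excerpt above can be tallied per project. The following is a minimal sketch that parses only the two message shapes visible in the excerpt. The regular expressions and the `summarize` helper are illustrative assumptions and would need adjusting for other BOINC log formats.

```python
import re
from collections import defaultdict

# The scheduler log lines quoted in the post above.
LOG = """\
13/03/2008 20:00:19|SETI@home Beta Test|Sending scheduler request: To fetch work. Requesting 785 seconds of work, reporting 1 completed tasks
13/03/2008 20:00:34|SETI@home Beta Test|Scheduler request succeeded: got 1 new tasks
13/03/2008 20:01:15|SETI@home|Sending scheduler request: To fetch work. Requesting 3468 seconds of work, reporting 2 completed tasks
13/03/2008 20:01:20|SETI@home|Scheduler request succeeded: got 2 new tasks
13/03/2008 20:01:35|SETI@home|Sending scheduler request: To fetch work. Requesting 3514 seconds of work, reporting 0 completed tasks
13/03/2008 20:01:40|SETI@home|Scheduler request succeeded: got 2 new tasks
13/03/2008 20:01:56|SETI@home|Sending scheduler request: To fetch work. Requesting 3389 seconds of work, reporting 0 completed tasks
13/03/2008 20:02:01|SETI@home|Scheduler request succeeded: got 1 new tasks
13/03/2008 20:02:16|SETI@home|Sending scheduler request: To fetch work. Requesting 362 seconds of work, reporting 0 completed tasks
13/03/2008 20:02:21|SETI@home|Scheduler request succeeded: got 1 new tasks
"""

REQUEST = re.compile(r"Requesting (\d+) seconds of work")
GRANT = re.compile(r"got (\d+) new tasks")


def summarize(log_text):
    """Return {project: {"requests", "seconds", "tasks"}} totals for a log excerpt."""
    totals = defaultdict(lambda: {"requests": 0, "seconds": 0, "tasks": 0})
    for line in log_text.splitlines():
        parts = line.split("|", 2)  # timestamp | project | message
        if len(parts) != 3:
            continue
        _, project, message = parts
        requested = REQUEST.search(message)
        if requested:
            totals[project]["requests"] += 1
            totals[project]["seconds"] += int(requested.group(1))
        granted = GRANT.search(message)
        if granted:
            totals[project]["tasks"] += int(granted.group(1))
    return dict(totals)


if __name__ == "__main__":
    for project, t in summarize(LOG).items():
        print(f"{project}: {t['requests']} requests, "
              f"{t['seconds']} s requested, {t['tasks']} tasks received")
```

For the excerpt as quoted, this counts four separate SETI@home requests totalling 10733 seconds and six tasks, against one SETI@home Beta Test request for 785 seconds and one task.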

|

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

Here's the key (I think): It's not just the highest LTD, it's the highest LTD + Project Shortfall which gets the first shot at fetching. "I was just sitting here pondering your post, when there was a flicker of activity on the other monitor: two of the 13fe08ac errored out, thus presumably suddenly reducing the known 'work on hand' below threshold and triggering a fetch." Again, this apparent break from a stated goal might be explained by my last observation when the next RRS/WF runs after the DL. Alinator |
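The selection rule being debated in the last few posts can be written down as a small ranking function. This is only a sketch of the participants' hypothesis (LTD below -TSI inhibits but does not prohibit, and the highest LTD + Project Shortfall gets the first shot), not BOINC's actual work-fetch code. The `Project` class, the `rank_for_fetch` name, the 3600-second TSI default and the example numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    ltd: float                 # long-term debt in seconds
    shortfall: float           # project shortfall in seconds (assumed input)
    contactable: bool = True   # False if the project cannot be reached


def rank_for_fetch(projects, tsi=3600.0):
    """Order candidate projects for work fetch under the thread's hypothesis.

    Only contactable projects with a positive shortfall are considered.
    Projects whose LTD is at or above -TSI come first; inhibited projects
    (LTD below -TSI) are kept as a fallback rather than excluded.
    Within each group the highest LTD + shortfall wins.
    """
    candidates = [p for p in projects if p.contactable and p.shortfall > 0]
    return sorted(
        candidates,
        key=lambda p: (p.ltd < -tsi, -(p.ltd + p.shortfall)),
    )


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to exercise each branch.
    demo = [
        Project("A", ltd=-500.0, shortfall=1000.0),
        Project("B", ltd=-10000.0, shortfall=50000.0),  # inhibited, big shortfall
        Project("C", ltd=200.0, shortfall=0.0),         # nothing to fetch
        Project("D", ltd=100.0, shortfall=500.0, contactable=False),
        Project("E", ltd=-3000.0, shortfall=4000.0),
    ]
    print([p.name for p in rank_for_fetch(demo)])
```

Treating the gate as a sort key rather than a filter is one way to model "inhibiting, but not prohibiting": an inhibited project still gets the call when no uninhibited project has a shortfall.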

|

archae86 Joined: 31 Aug 99 Posts: 909 Credit: 1,582,816 RAC: 0

|

"STD is completely irrelevant to work fetch: LTD acts as an inhibitor (but not an absolute prohibition) when below -TSI: and if more than one project makes it through Round Robin when there's a global shortfall, the contactable project with the highest LTD gets the call."

In saying that LTD below -TSI is an inhibition, but not a prohibition to work fetch, do you mean that if work is available from the preferred project, the disfavored project will get none? If so, then my observations are consistent with that. Einstein is pretty generally available. So this "inhibition but not prohibition" was enough to preclude my Quad host from getting any normal (i.e. not zero queue) SETI fetch for a month.

Calling STD irrelevant to work fetch, as three of you have done with such energy, seems to me to ignore the minor detail that STD and LTD move in lock-step in normal operations, and that the -TSI gate driven by LTD is, in my observation, a severe influence on disfavored project work fetch for a quad. The STD to LTD offset governs whether the STD swing in normal task switching actually includes any time in which the LTD permits fetching. Is it that I've not managed to describe that clearly, or that you actually think some part of that is not true?

As to the shortfall discussion: the only forum reference to shortfall I could find that gave someplace to look in my system files was many months back, and appears to have required a debug mode to be turned on, so I'll not be following up. In any case, invoking that seems not needed to model my observations. The offset bias of the STD swings as governed by task switching is not something that builds up gradually--it shifts promptly with a shift in resource share. I believe it would shift promptly with the number of allowed available CPUs as well. |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14686 Credit: 200,643,578 RAC: 874

|

"STD is completely irrelevant to work fetch: LTD acts as an inhibitor (but not an absolute prohibition) when below -TSI: and if more than one project makes it through Round Robin when there's a global shortfall, the contactable project with the highest LTD gets the call."

I don't think that what we're saying about STD in any way contradicts your experience. Under circumstances where the two debts march in lockstep, an external observer would be hard put to determine which debt caused the fetch inhibition, and which merely coincided with it. But as you said yourself, applying some offsets, so LTD remains above -TSI while the STD swings about equilibrium, restores equitable caching: which to my mind confirms the dominant role of LTD in causing fetch inhibition.

In my case, with 8 cores and some dodgy projects, I see wilder extremes of behaviour. Just at the moment, something (neither STD nor LTD) is inhibiting SETI Beta fetch for days on end. STD is limit-stopped, LTD isn't, so that breaks the lock-step without any intervention on my part. And that's what led me to assert the dominant role of LTD.

@ Alinator: And because I'm getting days of +ve debt, I'm not sure that "LTD + Project Shortfall" is sufficient to explain the effect. I'll need to sleep on that one. |

|

Alinator Joined: 19 Apr 05 Posts: 4178 Credit: 4,647,982 RAC: 0

|

LOL... OK, just wanted to let you guys know I have been reading along off and on since I posted last. However, I'm starting to suffer from 'noodle meltdown' from input overload. So I'm going to go rectify that with some R & R, including a good buffer cleaning with some neutral spirits solvents. :-) So back tomorrow.... Same Bat time, Same Bat Channel! Alinator |

|

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

"STD is completely irrelevant to work fetch: LTD acts as an inhibitor (but not an absolute prohibition) when below -TSI: and if more than one project makes it through Round Robin when there's a global shortfall, the contactable project with the highest LTD gets the call." Yes, and if you think about it, fetching work is a commitment to complete the work on time. BOINC may have to exceed your resource share short-term to do that -- and that will make LTD more negative. Inhibiting work fetch is the only way to restore your resource share.

They may track, but they're measuring different things, and they're used in different ways. Short Term Debt applies to which work unit will be done next if there is no schedule-pressure (and tends to rebalance later if schedule pressure gives another project priority). |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14686 Credit: 200,643,578 RAC: 874

|

Now this is how I like to see BOINC running. Both my quaddies have work from four projects at the moment, and with equal resource shares they've both found a nice little equilibrium point where one task from each project is running continuously - no task switching, no change in debt values. Almost certainly the most efficient way to run.

The interesting one:
CPDN - 1 task running, 1 waiting to run, NNT.
LHC - 1 task running, about 12 hours cache remaining, no work from project.
SETI - 1 task running, 38 hours cache, requesting top-ups regularly.
Einstein - 1 task running.

It's Einstein that's interesting. It got to within about an hour of running dry, then requested 65,807 seconds of work (near enough 1 day, the cache I run). Yet it has the worst LTD on the system, at -299,715 seconds. See what I mean about LTD "inhibiting, but not prohibiting"? [SETI is also negative, at -72,777, but it's the 'least worst' with available work]. Now why won't the octo do that?

PS - I think I'm going to have a go at writing myself a little debt-logger over the weekend - unless someone has one to hand? |

|

archae86 Joined: 31 Aug 99 Posts: 909 Credit: 1,582,816 RAC: 0

|

"PS - I think I'm going to have a go at writing myself a little debt-logger over the weekend - unless someone has one to hand?" Not unless I rent out my wrists. I've been populating a spreadsheet by hand, but to show the cycles I'm interested in it needs to be updated more often than once an hour at times. I'd call your case of Einstein dropping to within an hour, then asking for 65807 seconds "boom and bust". |
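A debt logger of the kind discussed here could be quite small. The sketch below is not the tool Richard wrote. It assumes that `client_state.xml` (which the thread says holds the editable debt values) stores each project as a `<project>` element with `<project_name>`, `<short_term_debt>` and `<long_term_debt>` children. Those tag names are an assumption and should be checked against the file your client version writes. The helper names are hypothetical.

```python
import csv
import io
import time
import xml.etree.ElementTree as ET


def read_debts(client_state_xml):
    """Return [(project_name, short_term_debt, long_term_debt), ...].

    Assumes <project> elements with <project_name>, <short_term_debt> and
    <long_term_debt> children; missing values are reported as NaN.
    """
    root = ET.fromstring(client_state_xml)
    rows = []
    for project in root.iter("project"):
        name = project.findtext("project_name", default="?")
        std = float(project.findtext("short_term_debt", default="nan"))
        ltd = float(project.findtext("long_term_debt", default="nan"))
        rows.append((name, std, ltd))
    return rows


def append_snapshot(out, client_state_xml, timestamp=None):
    """Write one CSV row per project: time, name, STD, LTD (whole seconds)."""
    stamp = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(timestamp))
    writer = csv.writer(out)
    for name, std, ltd in read_debts(client_state_xml):
        writer.writerow([stamp, name, f"{std:.0f}", f"{ltd:.0f}"])


if __name__ == "__main__":
    # Stand-in for the real file. The LTD values are the ones quoted in the
    # thread; the STD values are placeholders.
    sample = """<client_state>
      <project><project_name>Einstein@Home</project_name>
        <short_term_debt>0.0</short_term_debt>
        <long_term_debt>-299715.0</long_term_debt></project>
      <project><project_name>SETI@home</project_name>
        <short_term_debt>0.0</short_term_debt>
        <long_term_debt>-72777.0</long_term_debt></project>
    </client_state>"""
    buffer = io.StringIO()
    append_snapshot(buffer, sample)
    print(buffer.getvalue(), end="")
```

In real use, one would read the file from the BOINC data directory every few minutes and append to a CSV on disk. Because the client rewrites the file while running, a snapshot that fails to parse is best skipped and retried.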

Richard Haselgrove Joined: 4 Jul 99 Posts: 14686 Credit: 200,643,578 RAC: 874

|

Have you ever been really, really annoyed with BOINC? So there I was, writing this neat little debt logger, and what was it doing behind my back? Finding bloody equilibrium, that's what! Still, it gives me the ammunition to reopen Trac ticket #136, so not an entirely wasted afternoon. I'll have to kick it off equilibrium first, though, so I can check and verify my theory about disabling network activity. |
