Interesting Post @ Einstein on upload failures

Message boards :

Number crunching :

Interesting Post @ Einstein on upload failures

Message board moderation

| Author | Message |

|---|---|

|

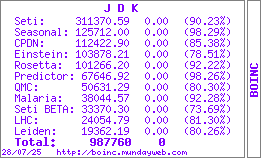

J D K Joined: 26 May 04 Posts: 1295 Credit: 311,371 RAC: 0

http://einstein.phys.uwm.edu/forum_thread.php?id=3560#25510

And the beat goes on, Sonny and Cher
BOINC Wiki

Astro Joined: 16 Apr 02 Posts: 8026 Credit: 600,015 RAC: 0

And then there is also this email from Tigher to the Devs:

This is a forward of a message I sent to xxxxx (who's been trying to track down the 500 error problem for well over a month now) this morning. He'd picked up that I'd submitted a mod to fix the problem after analysing packet traces from a user who initially reported the problem on the CPDN spinup project but is experiencing it on all projects except WCG. Unfortunately I've not been around for 3 weeks, and the mod I suggested hasn't been checked in. It came about after an offline discussion initiated by an email from xxxxxxx, with David and Carl copied into the conversation.

There have been changes to net_xfer_curl.C since I submitted the change on 6th December, but based on the latest feedback from the CPDN user I was working with, the problem was still present in BOINC 5.3.6.

The problem happens when the core client receives an HTTP 100-Continue response. There's no code in net_xfer_curl.C to handle this. Processing falls through to where the socket is closed and (in BOINC stable versions earlier than 5.2.15) nxf->http_op_retval is left unchanged from HTTP_STATUS_INTERNAL_SERVER_ERROR (500). The socket closure causes the 200-OK message to disappear into the bit-bucket.

Responsibility for applying the fix seems to have been lost somewhere, so here's the last message with the fix modified to account for the lines added to net_xfer_curl.C between CVS versions 1.17 and 1.17.12.1:

************************************************************************

I think I've tied down this problem. The user reverted to BOINC 4.45 and everything works as expected. The difference is that the request in 5.2.* includes an "Expect: 100-continue" line in the header.

Based on my reading of sections 8.2.3 and 10.1.1 of RFC 2616 ( http://www.faqs.org/rfcs/rfc2616.html ), this line is used when the request headers are sent before the body to test whether the origin server is willing to accept the request, with the 100 Continue response indicating that it is. Suppressing the "Expect: 100-continue" line might fix the problem, but silently ignoring the "100-continue" response should definitely do it. Here are the required changes:

```
http_curl.h:
40a41
> #define HTTP_STATUS_CONTINUE 100

net_xfer_curl.C:
323a324,325
> } else if ((nxf->response/100)*100 == HTTP_STATUS_CONTINUE) {
>     return;
```

I doubt the client should ever get a "101 Switching Protocols" response, and the change assumes this can be silently ignored too. Additional clean-up work may be needed in NET_XFER_SET::got_select() before returning, but the important thing is that the libcurl socket has to be kept open.

Edited to remove certain names
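The core idea of the proposed fix, treating any 1xx reply as interim and waiting for the real one instead of falling through, can be illustrated with a minimal sketch. It assumes the client has already parsed each status line into an integer; the enum and the functions `classify_response` and `final_status` are hypothetical and are not BOINC's actual net_xfer_curl.C code.

```c
/* Status codes named in the post; values from RFC 2616. */
#define HTTP_STATUS_CONTINUE              100
#define HTTP_STATUS_INTERNAL_SERVER_ERROR 500

/* Hypothetical actions for a transfer loop. */
typedef enum {
    XFER_KEEP_WAITING, /* interim 1xx reply: keep the socket open */
    XFER_DONE_OK,      /* final 2xx reply */
    XFER_DONE_ERROR    /* any other final reply */
} xfer_action;

/* Classify one parsed status code. Any 1xx code is informational
   (RFC 2616 section 10.1), so it must not end the transfer. */
xfer_action classify_response(int status) {
    if ((status / 100) * 100 == HTTP_STATUS_CONTINUE) {
        return XFER_KEEP_WAITING;
    }
    if (status >= 200 && status < 300) {
        return XFER_DONE_OK;
    }
    return XFER_DONE_ERROR;
}

/* Given the status lines seen on one connection, in order, return the
   status the transfer should report. retval starts at 500, as described
   in the post, and is overwritten by the first final (non-1xx) reply. */
int final_status(const int *statuses, int n) {
    int retval = HTTP_STATUS_INTERNAL_SERVER_ERROR;
    for (int i = 0; i < n; i++) {
        if (classify_response(statuses[i]) == XFER_KEEP_WAITING) {
            continue; /* skip the interim reply and wait for the real one */
        }
        retval = statuses[i];
        break;
    }
    return retval;
}
```

With the sequence {100, 200} the sketch reports 200. If the connection is closed after only the 100 reply, the 500 default is what gets reported, which matches the symptom described in the email.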

web03 Joined: 13 Feb 01 Posts: 355 Credit: 719,156 RAC: 0

Tony, thanks for posting this. I personally haven't been having this problem, but I have seen it referenced on other boards (CPDN and Einstein). Hopefully the devs can get this fixed for all the crunchers out there who are having the problem. Then it's on to the next thing, right?

Wendy

BOINC FAQ Service

Astro Joined: 16 Apr 02 Posts: 8026 Credit: 600,015 RAC: 0

When classic closed I was manning the help desk and about 1% of new users were having a similar issue, so hopefully this will fix the issues for those few.

Tigher Joined: 18 Mar 04 Posts: 1547 Credit: 760,577 RAC: 0

Just to confirm: Thyme Lawn has submitted a fix for the error 500 problem. It is yet to be checked in, but it went to the _dev list again today. I have asked if they could "hurry" it along, as lots of folks are suffering. I think it may well only be a problem in versions that use libcurl, which as far as I can recall means versions later than 4.45, i.e. 5.x.x. I think that's correct!?! I'm not sure which version it will be released in; so far it's not in 5.2.15, we know that for sure as we tested it. It was Thyme Lawn's email mentioned below, by the way; there are 2 Ians there! I have been chasing it for months, but so has he... and he found the fix. Well done him!

Jack Gulley Joined: 4 Mar 03 Posts: 423 Credit: 526,566 RAC: 0

> I have asked if they could "hurry" it along as lots of folks suffering.

Maybe you could go back and tell them to really hurry, and even release a single-fix version of 5.2.13 if necessary, to get it out to users as quickly as possible to test and see if it fixes the problems. It is having a bigger impact than you or anyone else suspects!

I have been trying to track down some of the upload and download problems I have been seeing on my cable-attached systems during recovery periods, along with looking into why so many people have been having the same problems. There were references on the different message boards to "fixes" being submitted, but no details as to what was happening, so there was no way to tell whether they were the same problems or not. I have tried different hardware and software configurations to reproduce some of the failures, without any success. So I have been concentrating on the one problem I could see occurring. But it is only really noticeable to me during the heavy traffic of a recovery period on SETI.

This is the case where an upload appears to start, something is sent, and then it just sits there showing some number like 107.69% in the Progress column for a period of time, often four minutes or more. Eventually it times out and backs off into "Retry later". I have seen the same problem when downloading. If two such "hangs" occur, other uploads and downloads are blocked from starting; they remain in the Uploading or Downloading state. I have seen several of my systems get stuck in this condition for over half an hour, and the only way out was to exit BOINC and reboot the system!

During yesterday's outage and recovery I was finally able to capture a usable Ethereal trace of upload attempts during the recovery period, in which many attempts were made, several of the "failure" hangs occurred, and finally an upload succeeded. I had noticed the HTTP 100-Continue response in the failure/hang cases, but thought nothing of it at the time, and I failed to save that trace before starting the next one. The problem I was looking for looked like an upload server problem to me, but other things kept me from looking deeper into that trace until today. Then I saw a link to this thread and your details of the problem. BINGO. This is what I saw in that one good trace.

This means that it is not just some people who are having this problem. It is happening to everyone during the recovery periods, and they have been writing it off as just due to the overload and dropped connections.

And there may be more to it than just the failures addressed by the fix. There may be a problem on the SETI upload/download server side that "looks" the same to a user but is a server problem. In one set of traces, what I was sometimes seeing was the first two small file requests being transferred to the server (after the HTTP 100-Continue), but then, in response to the host's ACK, the server responds with WIN=0 at the point where it should continue with the upload of the actual result data. At this point the BOINC host keeps sending keep-alive packets, expecting the session to continue, but the server never comes back telling it to continue. Finally the BOINC host times out the session.

I have trouble understanding why this would happen. The server should have space allocated for the upload file by this time and be ready to continue the session, even when being pounded on. It should at least come back, continue the upload, and finish it within a reasonable amount of time. If it is so overloaded that it cannot allocate space for the result file or complete a transfer sequence, then it should not have started the transfer in the first place; it should have had the host back off to start with, instead of wasting network and processor bandwidth on a partial transfer. At this point, the SETI Cogent link had been up for some time and the traffic load had already started backing down.

If the server considers it "valid" to stop the transfer at this point and drop the session, and that is a valid way of doing it, then the BOINC host should have the code to recognize this as a valid termination and not sit there for four minutes or more waiting for it to continue. If the SETI server is dropping the session at this point due to a bug, then this information on what is happening at the user end should help isolate where in the process the session is being dropped. A new version of the BOINC host with a carefully reviewed and fixed handler for the upload and download processes would help separate out the different failures currently being seen, so that responsibility for the rest of the related problems can be determined, not swept under someone else's machine.
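Jack's point that the client should recognize a dead session rather than sit for four minutes or more can be sketched as a simple no-progress watchdog. This is a hypothetical helper, not BOINC code; the struct and function names are made up for illustration. libcurl itself offers the `CURLOPT_LOW_SPEED_LIMIT` and `CURLOPT_LOW_SPEED_TIME` options, which abort a transfer whose speed stays below a threshold for a given number of seconds, and a client could rely on those instead.

```c
#include <stdbool.h>
#include <time.h>

/* Hypothetical per-transfer progress record. */
typedef struct {
    long   bytes_done;    /* highest byte count seen so far */
    time_t last_progress; /* when bytes_done last increased */
} xfer_progress;

/* Record the current byte count. The timestamp moves only when the
   count actually grows, so probes that carry no new data do not count. */
void note_progress(xfer_progress *p, long bytes_now, time_t now) {
    if (bytes_now > p->bytes_done) {
        p->bytes_done = bytes_now;
        p->last_progress = now;
    }
}

/* True once no new bytes have moved for at least stall_limit seconds,
   e.g. while the peer keeps advertising a zero receive window. */
bool is_stalled(const xfer_progress *p, time_t now, double stall_limit) {
    return difftime(now, p->last_progress) >= stall_limit;
}
```

A client loop would call `note_progress` whenever it polls the transfer and give up (and back off) once `is_stalled` returns true, rather than waiting for a much longer connection timeout.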

Tigher Joined: 18 Mar 04 Posts: 1547 Credit: 760,577 RAC: 0

> I have asked if they could "hurry" it along as lots of folks suffering.

I am sure Dr A will do his best here. Please remember that this may not be the end of all problems. Sadly, 500 is used as an error code by the FastCGI server, by the Apache web server (which often translates any FastCGI error codes to... guess what... yes, 500, so you cannot even know what went wrong), and by the BOINC core client. So tracking where it came from has been very difficult. As far as I can see this will be the third fix for error 500; actually, it will be the third fix for errors that masqueraded behind the 500 error code. There may be others yet, so please have patience. All will come good, I am sure, but tracking these things down can be a nightmare.

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

I also strongly suspect that we're working (and may always be working) more than one problem. Error reporting in the latest alpha clients is better.

Jack Gulley Joined: 4 Mar 03 Posts: 423 Credit: 526,566 RAC: 0

> Please remember that this may not be the end of all problems.

How well I know. Like any set of problems, you have to peel the onion one layer at a time, but you can't assume that one bad layer is the cause of all the problems. That is why I have posted this information now that I have something solid, not just several theories. This is one I have been trying to track down myself because I can see it fail sometimes; it's just that the only time I see it often enough to try to get a trace of it is during recovery.

There may be other problems, and if you don't assume that and look for them, you will tend to brush them off as just part of the last problem that is in the process of being fixed, and, after the fix is out, assume people have not updated correctly. I have been trying to track down some of the problems different people have had with uploading and downloading. I have tried a number of different hardware and software combinations myself and cannot reproduce them. But now I have seen both failures occur. There's not much we can do about the one you found until BOINC is fixed; the other one is something Berkeley staff will have to look into. But at least I now know what to look for in the traces and how to tell the different failures apart.

Leo Joined: 28 Mar 03 Posts: 6 Credit: 2,808,238 RAC: 1

Hi! I also think that it's more than one problem. Regarding the "really hurry": I have the problem that I cannot upload packages (the upload stalls after a few bytes; it really looks like the error you describe) AND I seem to get no scheduler. Trying everything to fix it, I also erased the project (including the completed results...). When I tried to install the project again, part of the procedure is to fetch new data, which of course resulted in Error 500, and the project got erased. If I were somebody who wanted to try out BOINC and failed after trying for some hours, I would give up. If 1% of users are having this issue, it does not only mean that 1% fewer work units are processed; it could also mean that we lose 1% or more of potential new members.

Regards Leo

Jack Gulley Joined: 4 Mar 03 Posts: 423 Credit: 526,566 RAC: 0

> edit: added the suspicion that it is the client's (BOINC SW) fault

That is along the lines of what I have seen in some traces also, just not one from the initial connection. One of the problems is that most of the users having problems don't even know what a ping would look like, much less how to do a TCP/IP trace, so it is hard to get much useful feedback about what they are really seeing. Some have been able to connect through proxies (to get around some de-peering problems between ISPs). Others have used proxies to get around unexplained connection problems like the ones you are having. You are able to connect to the scheduler, so it is not the basic Internet path problem that some people are having.

There were some problems recently identified in the BOINC code in this area where it drops the connection. Those suggested fixes got dropped for several weeks for some reason, but they have now been put into the next build and have seen some limited testing. There were also a number of changes that correct the problem of the "generic ERROR 500" message being shown for all errors and timeouts; a much larger and maybe more useful set of errors will be displayed in the new code. But the "fix" did not seem to fix all of the problems it was expected to fix, so I assume they are still working on it. See one thread on one of the "new" error messages that showed up from a user who is having the same type of problem; maybe you can make more sense of it given what has been found so far.

I assume you have done a ping and tracert from your location to both the scheduler and the Upload/Download servers (they currently come in through different ISPs). There are suspected problems with MTU settings, Linksys routers with back-level firmware, known problems with the BOINC code, and who knows what else is going on. It gets complicated sorting it all out, as there are several different paths into the Berkeley campus network, different routes within it, and recently a few router problems there.

Scheduler:
```
ping setiathome.ssl.berkeley.edu
ping 128.32.18.151
ping 128.32.18.152
```

Upload/Download server:
```
ping 66.28.250.125
```

Check for Black Hole router problems:
```
ping -f -l 1472 128.32.18.151
ping -f -l 1472 128.32.18.152
ping -f -l 1472 66.28.250.125
```

Then try tracert to see if there is a problem.

I have been looking at one of the minor problems I can see, and have a theory of what might be happening somewhere, but have not had time to verify what I think I saw happening. But we really need useful, detailed information about the failure, like you gave. It might help someone who has looked at the code realize what is going on.
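The 1472 in the black-hole check is not arbitrary. With Windows ping, `-l` sets the ICMP payload size and `-f` sets the Don't Fragment flag, and 1472 bytes of payload plus an 8-byte ICMP header and a 20-byte IPv4 header (no options) fills exactly the common 1500-byte Ethernet MTU. A small sketch of that arithmetic; the macro and function names are hypothetical:

```c
/* Header sizes wrapped around a ping payload (IPv4 without options). */
#define IPV4_HEADER_BYTES 20
#define ICMP_HEADER_BYTES 8

/* Largest payload for "ping -f -l <size>" that fits in one unfragmented
   packet on a path whose MTU is mtu bytes. */
int max_ping_payload(int mtu) {
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES;
}
```

`max_ping_payload(1500)` gives 1472; on a PPPoE link with a 1492-byte MTU it gives 1464. If the 1472-byte ping with `-f` fails while smaller sizes get through, something on the path has an MTU below 1500.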

Jack Gulley Joined: 4 Mar 03 Posts: 423 Credit: 526,566 RAC: 0

> Also I'm not quite sure if the content length is correct (I count about 2046 instead of 2098 characters from the request).

That might be an important observation if true! Some of the partial reports from some users suggest that not all of the information expected in a response is being seen by the scheduler, as if part of the file sent back was cut off. That also fits one idea I am looking at. But there is just no way right now to explain why some people are having a major problem and most of us are not. There does not seem to be any pattern to it. Some people have two systems on their local network that work just fine and one that does not.

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

The X-Cache: line suggests that the proxy is a caching proxy. BOINC won't benefit from a cache because the response from the BOINC servers will always be unique, so the client would never expect a response that doesn't come from Apache and the scheduler CGI. So, yes, BOINC client flaw, but also a cache/proxy that is less transparent than it ought to be. Can the cache be bypassed??

Leo Joined: 28 Mar 03 Posts: 6 Credit: 2,808,238 RAC: 1

Hello Ned/Jack! The cache is on the company proxy. I have no influence on it and also not much information about it. I have some knowledge about establishing TCP and IP connections, but I have almost no clue about proxies, servers, their protocols, and all those useful little programs like net, netstat, and tracert that one would need to track down network issues. If you can specify some network commands (running on native XP) to find out more about the proxy and the connection, just post them or mail me (mailto:ewwatt@gmx.at) and I'll try them out. I can also make additional traces any time, if needed.

Regards Stephan

Leo Joined: 28 Mar 03 Posts: 6 Credit: 2,808,238 RAC: 1

> Scheduler:
> ping setiathome.ssl.berkeley.edu
> ping 128.32.18.151
> ping 128.32.18.152

```
Ping statistics for 128.32.18.151:
    Packets: Sent = 4, Received = 0, Lost = 4 (100% loss)
Ping statistics for 128.32.18.152:
    Packets: Sent = 4, Received = 0, Lost = 4 (100% loss)
```

So all ping requests to outside the company are blocked by the firewall (interesting, that's new to me). Ping inside the company works. So I probably have no way to trace the route outside the company network (as far as I remember, tracert uses ping commands...). An indication:

```
Tracing route to klaatu.SSL.Berkeley.EDU [128.32.18 over a maximum of 30 hops:

  1     1 ms    <1 ms    <1 ms  xxx.yyy.178.254
  2    <1 ms    <1 ms    <1 ms  10.2.180.37
  3    <1 ms    <1 ms    <1 ms  10.2.180.2
  4    <1 ms    <1 ms    <1 ms  xxx.yyy.158.195
  5    <1 ms    <1 ms    <1 ms  xxx.yyy.158.193
  6     *        *        *     Request timed out.
  7     *        *        *     Request timed out.
  8     *        *        *     Request timed out.
  9     *        *        *     Request timed out.
 10     *        *        *     Request timed out.
 11     *        *        *     Request timed out.
 12     *        *        *     Request timed out.
 13     *        *        *     Request timed out.
 14     *        *        *     Request timed out.
 15     *        *        *     Request timed out.
 16     *        *        *     Request timed out.
 17     *        *        *     Request timed out.
 18     *        *        *     Request timed out.
 19     *        *        *     Request timed out.
 20     *        *        *     Request timed out.
 21     *        *        *     Request timed out.
 22     *        *        *     Request timed out.
 23     *        *        *     Request timed out.
 24     *        *        *     Request timed out.
 25     *        *        *     Request timed out.
 26     *        *        *     Request timed out.
 27     *        *        *     Request timed out.
 28     *        *        *     Request timed out.
 29     *        *        *     Request timed out.
 30     *        *        *     Request timed out.

Trace complete.
```

I guess ping and tracert are out. How could we get some info about the proxy, if useful?

Regards Leo

Steve Cressman Joined: 6 Jun 02 Posts: 583 Credit: 65,644 RAC: 0

If that is a hardware firewall (router), then you can access the router using your browser, and it should give you the ability to ping outside the network. You will need to know the address of the router to access it from the browser, and you probably need to know the password and login name too. :)

98SE XP2500+ @ 2.1 GHz Boinc v5.8.8
And God said "Let there be light." But then the program crashed because he was trying to access the 'light' property of a NULL universe pointer.

Leo Joined: 28 Mar 03 Posts: 6 Credit: 2,808,238 RAC: 1

@Steve:

> and probably you need to know the password and login name too.

This was a good one. I'm not in a SOHO where I'm my own admin; I'm in a company with several thousand people.

```
HTTP/1.0 200 OK
Content-Type: text/html
Date: Wed, 18 Jan 2006 09:27:19 GMT
Server: Apache/1.3.34 (Unix) mod_fastcgi/2.4.0 PHP/4.4.1
Via: 1.1 webwasher (Webwasher 5.3.0.1953)
X-Powered-By: PHP/4.4.1
X-Cache: MISS from xxx.yyy.zzz.at
Proxy-Connection: close

<scheduler>http://setiboinc.ssl.berkeley.edu/sah_cgi/cgi</scheduler>
```

This is the SETI server reply that tells the client where to find the scheduler. It happens right before the BOINC SW's scheduler request, the trace that I already posted. You can see that Webwasher follows the rules and says that it is forwarding the stream. I think most of the other parts, like the firewall, are mainly in software, but not all of them add the Via information.

@Ned: I also noticed that the BOINC client always sends "Pragma: no-cache" in its request, and the Berkeley server does not give an expiry date in its answer. All in all, I would rather be surprised not to see a cache miss.

Regards Leo
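The Via and X-Cache lines in Leo's trace are how an intermediary announces itself: RFC 2616 (section 14.45) requires proxies and gateways to add a Via header, while X-Cache is a non-standard header that some caches add. As a sketch only, a client could look for such headers in a response and log a hint that a proxy sits in the path. The function names below are hypothetical, and the parser assumes CRLF line endings and does not handle folded header lines.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

/* Case-insensitive check that a header line starts with "name:".
   HTTP header field names are case-insensitive. */
static bool header_name_is(const char *line, size_t len, const char *name) {
    size_t n = 0;
    while (name[n] != '\0') {
        if (n >= len ||
            tolower((unsigned char)line[n]) != tolower((unsigned char)name[n]))
            return false;
        n++;
    }
    return n < len && line[n] == ':';
}

/* True if the header block (CRLF-separated lines, ending at the first
   empty line) contains a header with the given field name. The body
   after the empty line is never examined. */
bool has_header(const char *headers, const char *name) {
    const char *p = headers;
    while (*p != '\0') {
        const char *end = p;
        while (*end != '\0' && !(end[0] == '\r' && end[1] == '\n')) end++;
        size_t len = (size_t)(end - p);
        if (len == 0) break;              /* empty line: end of headers */
        if (header_name_is(p, len, name)) return true;
        if (*end == '\0') break;          /* last line had no CRLF */
        p = end + 2;                      /* skip the CRLF */
    }
    return false;
}
```

On the reply above, `has_header(reply, "via")` and `has_header(reply, "X-Cache")` are true, while `has_header(reply, "Expires")` is false, consistent with Leo's remark that the server sends no expiry date.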

1mp0£173 Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

The cache miss isn't the point; it's a hint. The point is that your company's caching proxy is modifying the communications between the BOINC client and the BOINC server. The BOINC client does not expect the 100 Continue because the SETI servers never ever send that. You'll likely have to talk to your network admins, but be advised that they may take a dim view of employees installing random downloaded software on anything on their network.

Leo Joined: 28 Mar 03 Posts: 6 Credit: 2,808,238 RAC: 1

> The point is that your company Caching Proxy is modifying the communications between the BOINC client and BOINC server.

Why does the BOINC client SW send an 'Expect: 100-continue' in its scheduler request if it gets confused by getting an 'HTTP/1.0 100 Continue'?

You're right, there's no way that I'll talk to the admins about that. Let's just forget about 'cache miss' and 'continue' for a while. After the BOINC client sends the scheduler request, who is expected to react next?

*) Should the BOINC client somehow send more data without waiting for a reply from the SETI server? (very unlikely)
*) Should the SETI server answer with a work package from the scheduler?

Instead of the continue message without any content, I would expect either a work package or further instructions from the SETI scheduler. But after the continue message there's nothing. Maybe the BOINC SW does not know how to handle the continue message. But I think that the real problem is one step before it gets this message (in fact, as you said, it should probably never get this message).

Did you do a word search for '100 continue' over the SETI server SW source code (this is the step I would expect before a 'never ever' statement ;-) )? Do you know how the content length is calculated (e.g. are the line feeds between the 'Expect: 100-continue' and the first content counted, etc.)? Is the content length considered anywhere in the SETI server SW (there is no need to do so)? Could the proxy be interested in the content length (although it probably should not be)?

Regards Leo
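On Leo's content-length question: under RFC 2616 (section 14.13), Content-Length gives the size of the message body in octets, i.e. everything after the empty line (CRLF CRLF) that ends the headers. Header lines such as "Expect: 100-continue" are not included, but every byte of the body is, including the CR and LF of each line ending inside it, so a count of visible characters can come out lower than the header value. A minimal sketch that measures the body of a raw message; the function name is hypothetical:

```c
#include <stddef.h>

/* Hypothetical helper: number of body bytes in a raw HTTP message, i.e.
   everything after the first empty line (CRLF CRLF) that ends the headers.
   Returns -1 if no header terminator is found. Header lines, including
   "Expect: 100-continue", are not part of this count; CR and LF bytes
   inside the body are. */
long body_length(const char *msg, size_t msg_len) {
    for (size_t i = 0; i + 3 < msg_len; i++) {
        if (msg[i] == '\r' && msg[i + 1] == '\n' &&
            msg[i + 2] == '\r' && msg[i + 3] == '\n') {
            return (long)(msg_len - (i + 4));
        }
    }
    return -1;
}
```

Comparing this count with the Content-Length value in a captured request is one way to check the 2046-versus-2098 observation, provided the capture preserves the CR bytes.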

MJKelleher Joined: 1 Jul 99 Posts: 2048 Credit: 1,575,401 RAC: 0

> Why does the BOINC client SW send an 'Expect: 100-continue' in its scheduler request if it gets confused by getting an 'HTTP/1.0 100 Continue'?

Still missing the point: it's not the BOINC client that's sending the message, it's the proxy server.

Then you likely don't want to know about this, either, from Rules and Policies:

> Run SETI@home only on authorized computers
>
> Run SETI@home only on computers that you own, or for which you have obtained the owner's permission. Some companies and schools have policies that prohibit using their computers for projects such as SETI@home.

MJ

©2025 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.