Benchmarks


| Author | Message |

|---|---|

RPMurphy RPMurphy Send message Joined: 2 Jun 00 Posts: 131 Credit: 622,641 RAC: 0

|

Is it possible to 'force' a CPU benchmark? BTW, I am using Fedora Core 3 (Linux) on an AMD Sempron 2600+, with the latest stable GUI version of BOINC. It is a sad sad day when someone takes your spoon away from you... |

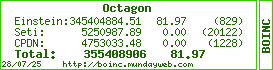

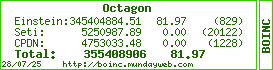

Octagon Octagon Send message Joined: 13 Jun 05 Posts: 1418 Credit: 5,250,988 RAC: 109

|

Is it possible to 'force' a cpu benchmark? In version 4.45 of the BOINC client, go to the File menu and pick Run Benchmarks. No animals were harmed in the making of the above post... much.

|

RPMurphy RPMurphy Send message Joined: 2 Jun 00 Posts: 131 Credit: 622,641 RAC: 0

|

Feel like an idiot, looked everywhere but under 'file'....thanks. It is a sad sad day when someone takes your spoon away from you... |

|

JimT Send message Joined: 18 May 99 Posts: 4 Credit: 3,974,804 RAC: 443

|

Is it usual for the 'to completion' time for a new WU to be about twice the time it actually takes to complete a WU? I assume this is based on the benchmark. |

|

j2satx Send message Joined: 2 Oct 02 Posts: 404 Credit: 196,758 RAC: 0

|

Is it usual for the 'to completion' time for a new WU to be about twice the time it actually takes to complete a WU? I assume this is based on the benchmark. I don't think it is benchmark or WU related. Mine always seems to be five days on all my computers. |

W-K 666  Send message Joined: 18 May 99 Posts: 19730 Credit: 40,757,560 RAC: 67

|

Is it usual for the 'to completion' time for a new WU to be about twice the time it actually takes to complete a WU? I assume this is based on the benchmark. For information on nearly all (99.99%) of your BOINC or project queries, see the BOINC Wiki; the link for your particular question on benchmarks is Benchmarks. Hope this helps. Andy |

|

j2satx Send message Joined: 2 Oct 02 Posts: 404 Credit: 196,758 RAC: 0

|

[quote]For information on nearly all (99.99%) of your BOINC or project queries, see the BOINC Wiki; the link for your particular question on benchmarks is Benchmarks. Hope this helps. Andy[/quote] Did you think the information that JimT asked for is in the link you gave? |

Paul D. Buck Paul D. Buck Send message Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

Is it usual for the 'to completion' time for a new WU to be about twice the time it actually takes to complete a WU? I assume this is based on the benchmark. Yes, the information that answers this question is in the Wiki, but like a lot of things, it is not always easy to find. Basically, SETI@Home, like most science applications, does "iterative" processing. Iterative is just a way of saying do the same thing over, and over, and over, etc. But we don't know beforehand how many iterations it will take to process any specific work unit until we have done the processing. So, we guess. This guess, along with the benchmarks, is used to calculate a probable time to completion. As processing continues, this end time is updated. But, in general, the SETI@Home estimate is over by some amount. In 4.72 and later versions we have a "correction" factor that will adjust this estimate to something that is more "real". But, with the benchmark's instability, this may or may not converge on a "perfect" value. Current experience is that the number *IS* more realistic with the correction factor. Einstein@Home for me is usually close or under, SETI@Home is usually over by a factor of 2, LHC@Home is a special case altogether ... |
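As a rough sketch of the estimate described in this post (not BOINC's actual code; the function name, parameters, and numbers are all hypothetical), the guessed amount of work is divided by the benchmarked speed and then scaled by the correction factor:

```python
def estimate_seconds(estimated_ops, benchmark_ops_per_sec, correction_factor=1.0):
    """Rough time-to-completion guess: guessed work / benchmarked speed, scaled by a correction."""
    if benchmark_ops_per_sec <= 0:
        raise ValueError("benchmark must be positive")
    return estimated_ops / benchmark_ops_per_sec * correction_factor


# Hypothetical numbers: a work unit guessed at 2.8e13 operations
# on a host benchmarked at 1.0e9 operations per second.
raw = estimate_seconds(2.8e13, 1.0e9)             # uncorrected guess, in seconds
corrected = estimate_seconds(2.8e13, 1.0e9, 0.5)  # if past work finished in half the predicted time
```

With a correction factor of 0.5, an estimate that was "over by 2 times" lands near the observed run time. It remains a guess, because the iteration count is unknown until the work unit has been processed.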

|

Mike Gelvin Send message Joined: 23 May 00 Posts: 92 Credit: 9,298,464 RAC: 0

|

In 4.72 and later versions we have a "correction" factor that will adjust this estimate to something that is more "real". But, with the benchmark's instability this may or may not converge on a "perfect" value. But, current experience is that the number *IS* more realistic with the correction factor. So why isn't this correction factor used in credit claim?  |

|

1mp0£173 Send message Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

In 4.72 and later versions we have a "correction" factor that will adjust this estimate to something that is more "real". But, with the benchmark's instability this may or may not converge on a "perfect" value. But, current experience is that the number *IS* more realistic with the correction factor. It is used -- in newer clients. But even with the correction factor, work units may take longer than predicted, or less time than predicted. (edit: either way, the averaging across the returned WUs evens this out) |

Paul D. Buck Paul D. Buck Send message Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

So why isn't this correction factor used in credit claim? Now we get into the whole benchmark problem ... And there is so much on that topic that I need you to go and read about benchmarks in the Wiki, and the lesson topic on my old site about performance testing. Once that is done, then we can talk about the issues. Quite simply put, it just is not an easy thing to do. But, if you do not understand benchmarks and their pros and cons, there is not much point in duplicating the Wiki content here ... Sorry, I know getting a homework assignment is not what you expected. But, this is not an easy area. You can also search on benchmark and read some of the old threads ... THEN, we can talk and communicate ... Till then, trust me ... :) |

|

Mike Gelvin Send message Joined: 23 May 00 Posts: 92 Credit: 9,298,464 RAC: 0

|

So why isn't this correction factor used in credit claim? I think the question is much simpler than what you believe. I AM an engineer and understand such things. My question is directed to this: the benchmarks were used to 1) determine the estimated time to complete a WU, and 2) give some figure of merit for work performed after the WU is finished. If an algorithm exists that allows a "self tuning" of the time required to complete a work unit, and this self tuning is indeed related to work done vs. time spent to do it, then that same correction should be usable to "correct" the amount of science done (which is only related to time for a given machine) and hence credit. In essence, fine tune the benchmark.  |
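The proposal in this post can be sketched as follows. This is an illustration only, not how any BOINC client computes credit: the function name, the `credit_per_op` scale constant, and the numbers are hypothetical. The idea is that the same correction factor that tunes the time estimate also scales the benchmark used in the credit claim.

```python
def claimed_credit(cpu_seconds, benchmark_ops_per_sec,
                   correction_factor=1.0, credit_per_op=1e-11):
    """Credit proportional to CPU time multiplied by the (corrected) benchmark speed."""
    effective_rate = benchmark_ops_per_sec * correction_factor  # the "fine tuned" benchmark
    return cpu_seconds * effective_rate * credit_per_op


# Hypothetical host: 10,000 CPU seconds at a benchmarked 1.0e9 operations per second.
uncorrected = claimed_credit(10_000, 1.0e9)
# Same run, if the self-tuning concluded the host is effectively twice as fast.
tuned = claimed_credit(10_000, 1.0e9, correction_factor=2.0)
```

Under this sketch, the claim grows in direct proportion to the correction factor, which is the "fine tune the benchmark" effect being asked for.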

Darrell Wilcox  Send message Joined: 11 Nov 99 Posts: 303 Credit: 180,954,940 RAC: 118

|

I think the question is much simpler than what you believe. I AM an engineer and understand such things. My question is directed to this: the benchmarks were used to 1) determine the estimated time to complete a WU, and 2) give some figure of merit for work performed after the WU is finished. If an algorithm exists that allows a "self tuning" of the time required to complete a work unit, and this self tuning is indeed related to work done vs. time spent to do it, then that same correction should be usable to "correct" the amount of science done (which is only related to time for a given machine) and hence credit. In essence, fine tune the benchmark. Also as an Engineer (Class of '71), I understand that having the PERFECT number to apply to an iteration of computation is not going to predict the time it takes to compute an unknown (and unknowable) number of iterations, because the computing depends on the data being processed. So the predicted time is just a rough estimate, and always will be, even with a perfect benchmark. The airlines know EXACTLY how many seats are on each of their airplanes, but overbooking occurs BECAUSE they don't know how many passengers will ACTUALLY show up to take the flight. Same concept here, in that SETI doesn't know how many iterations a particular WU will take to complete. |

W-K 666  Send message Joined: 18 May 99 Posts: 19730 Credit: 40,757,560 RAC: 67

|

I agree with what Darrell and Mike Gelvin have said, but the problem with Seti and the benchmarks used is that they are so inaccurate as to be almost meaningless. The benchmarks used test integer operations and double-precision floating-point operations, but most of the work done in Seti is single-precision floating point. The errors are further compounded by the fact that they don't test the whole computer system; they basically test the core of the CPU, i.e. they don't even test the L2 cache memory which is built into all modern CPUs. This means that two CPUs of the same family with different amounts of L2 cache will have approximately the same benchmarks, but the time to crunch Seti units will differ, assuming they are running the same OS/BOINC/Seti. Andy |

|

Mike Gelvin Send message Joined: 23 May 00 Posts: 92 Credit: 9,298,464 RAC: 0

|

Also as an Engineer (Class of '71), I understand that having the PERFECT number to apply to an iteration of computation is not going to predict the time it takes to compute an unknown (and unknowable) number of iterations, because the computing depends on the data being processed. So the predicted time is just a rough estimate, and always will be, even with a perfect benchmark. Class of '71 as well.... Just like the airlines predicting average numbers of passengers, an iterative process can indeed get real close to the average computational time for workunits. Since I perceive this as being nothing more than an adjustment on the benchmark number, it could (and should?) be applied to the credit.  |

Octagon Octagon Send message Joined: 13 Jun 05 Posts: 1418 Credit: 5,250,988 RAC: 109

|

I agree with what Darrell and Mike Gelvin have said, but the problem with Seti and the benchmarks used is that they are so inaccurate as to be almost meaningless. I have seen a "reference work unit" mentioned on these boards. Would it be possible to craft a "mini" reference work unit and use that as a benchmarking tool? Something worth about 2 credits should test the whole system. Each project would have to supply such a mini reference work unit, but it may be worth the effort to have "real" benchmarks to measure this vast distributed computing system. No animals were harmed in the making of the above post... much.

|

Paul D. Buck Paul D. Buck Send message Joined: 19 Jul 00 Posts: 3898 Credit: 1,158,042 RAC: 0

|

I have seen a "reference work unit" mentioned on these boards. Would it be possible to craft a "mini" reference work unit and use that as a benchmarking tool? Something worth about 2 credits should test the whole system. Sounds good. But, why not just use the current one for SETI@Home? On most modern computers the processing time is not THAT long. For slower machines, you calibrate against the faster machines so they don't spend the whole day learning. The point is that, since you know the answer, you can get the exact number of iterations (with an instrumented application), you can validate the accuracy of the tested system against the known answer, and you test the system over the normal work load processing time. The best benchmark is the actual work load run over the actual work load's processing time. Even if we only use the SETI@Home reference work unit, the calibration over projects can occur. Our "project independent" testing system is equally flawed across all projects, so I don't see how the results can get worse. But, as in many things, this is an opinion ... |
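The calibration idea in this post can be sketched as: run a workload whose operation count is known in advance, time it, and take the ratio as the host's effective speed. The loop below is only a stand-in for a real reference work unit, and all names and the operation count are hypothetical.

```python
import time


def effective_rate(known_operations, elapsed_seconds):
    """Operations per second measured on a workload whose operation count is known."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return known_operations / elapsed_seconds


def time_workload(workload):
    """Return the wall-clock seconds taken by one call of workload()."""
    start = time.perf_counter()
    workload()
    return time.perf_counter() - start


REFERENCE_ITERATIONS = 200_000  # assumed known from an instrumented run


def stand_in_reference_unit():
    """Placeholder workload; a real reference work unit would run the science application."""
    total = 0.0
    for i in range(REFERENCE_ITERATIONS):
        total += i * 0.5
    return total


elapsed = time_workload(stand_in_reference_unit)
rate = effective_rate(REFERENCE_ITERATIONS, elapsed)
```

Because the workload is the real kind of work rather than a synthetic integer or floating-point loop, the measured rate reflects cache and memory effects as well as the CPU core.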

|

1mp0£173 Send message Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

There are two problems with this: 1) We move from one benchmark, to many. 2) It's going to take a whole lot longer to run the "benchmarks." ... and it is still an estimate. Remember too that each project may have more than one kind of work unit, and more than one science application. There is a good solution in the 5.1.3 BOINC client. |

|

1mp0£173 Send message Joined: 3 Apr 99 Posts: 8423 Credit: 356,897 RAC: 0

|

I have seen a "reference work unit" mentioned on these boards. Would it be possible to craft a "mini" reference work unit and use that as a benchmarking tool? Something worth about 2 credits should test the whole system. Paul, have you looked at the correction factor in the 5.1.x clients? It basically looks at the difference between the predicted time and the actual time to find a correction factor, and multiplies the benchmark by the correction factor for future work. -- Ned |
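A minimal sketch of a correction factor of this kind follows. It is not the 5.1.x client's actual algorithm: the exponential smoothing, the `alpha` weight, and all names are assumptions made for illustration. Each finished work unit nudges the factor toward the ratio of predicted to actual time, and the factor then scales the benchmark for future estimates.

```python
def update_correction(current_factor, predicted_seconds, actual_seconds, alpha=0.1):
    """Move the factor a fraction alpha toward the latest predicted/actual ratio."""
    if predicted_seconds <= 0 or actual_seconds <= 0:
        raise ValueError("times must be positive")
    observed = predicted_seconds / actual_seconds  # > 1 means the host beat the prediction
    return (1 - alpha) * current_factor + alpha * observed


def corrected_benchmark(benchmark_ops_per_sec, factor):
    """Benchmark multiplied by the correction factor, for use in future estimates."""
    return benchmark_ops_per_sec * factor


# Hypothetical history: every work unit was predicted at 10 hours and took 5.
factor = 1.0
for _ in range(100):
    factor = update_correction(factor, predicted_seconds=36_000, actual_seconds=18_000)
```

Smoothing means a single unusually short or long work unit moves the factor only a little, while a consistent bias (such as estimates that are always about twice too long) is gradually absorbed.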

|

Bill & Patsy Send message Joined: 6 Apr 01 Posts: 141 Credit: 508,875 RAC: 0

|

There was an excellent discussion of this and all the complexity involved in thread #14987: BIIIIIGGGG!!!!! PENALTY! (Edit: --SNIP--) --Bill

|


SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.