The Server Issues / Outages Thread - Panic Mode On! (119)


| Author | Message |

|---|---|

juan BFP  Send message Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

They are preparing us for the next week. I wonder what will be the exact time when the work distribution stops. They have only said it is on the 31st but will it happen when the 31st starts or when it ends or somewhere in between? Or will they just stop adding files so it will end on some unspecified time when the splitters run out of files to split? If it were me, I would just stop adding files; the rest of the process would be natural. Nothing to change on any running task, etc.

|

David@home Send message Joined: 16 Jan 03 Posts: 755 Credit: 5,040,916 RAC: 28

|

It was a good long run without disruption, all good things come to an end :-( |

|

Ville Saari Send message Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

|

It was a good long run without disruption, all good things come to an end :-( That dry period lasted for only a few scheduler requests for me. My caches have recovered and everything seems to be running fine, but looking at the SSP, things look extremely worrisome. |

Keith T. Send message Joined: 23 Aug 99 Posts: 962 Credit: 537,293 RAC: 9

|

They are preparing us for the next week. I wonder what will be the exact time when the work distribution stops. They have only said it is on the 31st but will it happen when the 31st starts or when it ends or somewhere in between? Or will they just stop adding files so it will end on some unspecified time when the splitters run out of files to split? Pacific time or UTC? :-) |

Richard Haselgrove  Send message Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

|

That 750000 WUs is nearly the whole S@H production during the SSP blackout. So looks like the assimilators have assimilated close to nothing during that time. I've just done some big fetches, and the vast majority were _2 or later resends. I think it was the (in-)validators and the deadline clock that were running, rather than the splitters. |

|

TBar Send message Joined: 22 May 99 Posts: 5204 Credit: 840,779,836 RAC: 2,768

|

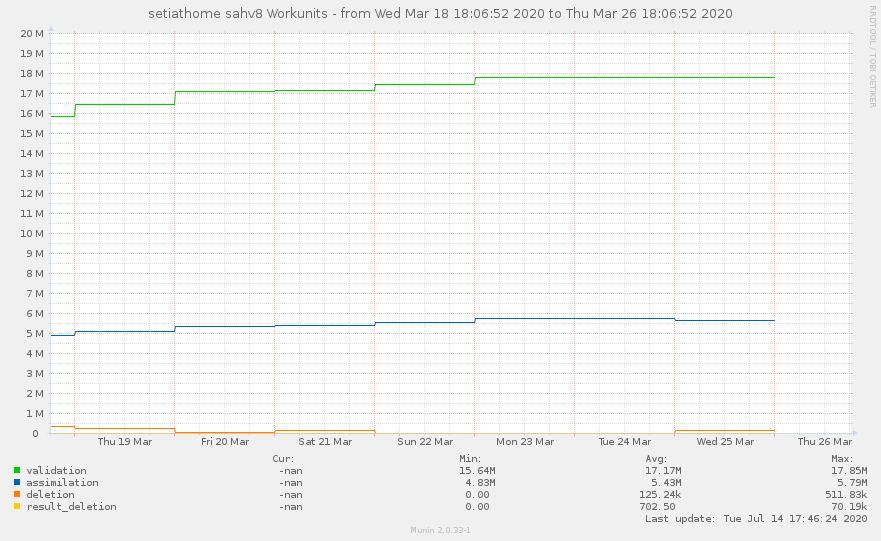

Seems whatever had been locking the Results returned and awaiting validation at 17.8 for the past couple of days broke, and it suddenly jumped by nearly 2 Mil. Quite a leap.  Speaking of Leaps, I have reconfigured My top machine just to see how high it will go. It's approaching 900k and still shows a steep incline on the Statistics Tab of Boincmgr, https://setiathome.berkeley.edu/show_host_detail.php?hostid=6813106 Now if SETI can just keep it fed a few more days. |

|

Ville Saari Send message Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

|

Seems whatever had been locking the Results returned and awaiting validation at 17.8 for the past couple of days broke, and it suddenly jumped by nearly 2 Mil. That leap happened because the assimilators processed nearly nothing during the 14 hours the SSP was non-functional. So almost the whole production of those 14 hours ended up swelling the assimilation queue. Results waiting for 'validation' grew by 1662606 and workunits waiting for assimilation by 720273. The ratio between those two numbers is 2.3, which is very close to the long-term average replication of 2.2, so pretty much the entire jump is explained by the assimilation queue. |

|
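The ratio argument in the post above can be checked with a few lines of arithmetic. This is only a minimal sketch: the three input figures are the ones quoted in the post, while the variable names and the "explained share" calculation (workunits times average replication, divided by the growth in results) are assumptions added here for illustration, not something the poster or the SSP computes.

```python
# Figures quoted in the post above (SSP deltas over the blackout).
validation_growth = 1_662_606    # results "waiting for validation" grew by this much
assimilation_growth = 720_273    # workunits waiting for assimilation grew by this much
long_term_replication = 2.2      # long-term average results per workunit, per the post

# Results per workunit implied by the two jumps.
observed_ratio = validation_growth / assimilation_growth

# Assumption for illustration: if every queued workunit carried the
# long-term average number of results, this many results would be expected.
expected_growth = assimilation_growth * long_term_replication
explained_share = expected_growth / validation_growth

print(f"observed ratio: {observed_ratio:.2f}")
print(f"share of the jump explained by the assimilation queue: {explained_share:.1%}")
```

Under these assumptions the observed ratio comes out close to the 2.3 mentioned in the post, and the assimilation backlog accounts for nearly all of the jump.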

Ville Saari Send message Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

|

(or the replica has caught up, but the SSP doesn't show that) I guess they are in the middle of taking the replica offline, so the web pages come from the master now but the replica is still shown as running on the SSP. |

|

Ville Saari Send message Joined: 30 Nov 00 Posts: 1158 Credit: 49,177,052 RAC: 82,530

|

And now the master is dying. Queries/second has doubled, most variables on SSP don't update any more and I'm now getting only a small trickle of new tasks. |

juan BFP  Send message Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

We will end like the universe, in a dark cool state. The entropy will win.

|

Stephen "Heretic"  Send message Joined: 20 Sep 12 Posts: 5557 Credit: 192,787,363 RAC: 628

|

We will end like the universe, in a dark cool state. The entropy will win. . . Definitely NOT going out with a bang and it seems the whimpering has begun. 5 days to go and only 29 channels left to split .... it will be a dry 5 days. just clearing up whatever resends occur I guess. . . Oh, BTW, the SSP is back ... Stephen :( < sigh > |

Tom M Send message Joined: 28 Nov 02 Posts: 5124 Credit: 276,046,078 RAC: 462 |

My AMD just went dry and switched to E@H like it is supposed to. And AP still has a pretty big stack of channels to run? Tom A proud member of the OFA (Old Farts Association). |

|

TBar Send message Joined: 22 May 99 Posts: 5204 Credit: 840,779,836 RAC: 2,768

|

Still not receiving enough work to keep the machines running. The First machine will be empty in about 20 minutes, the next one about an hour later. Seems the 'Results received in last hour' is one of the few items still updating, let's see where it is in an hour, currently at 121,636. |

Patrick Mollohan Send message Joined: 9 Oct 08 Posts: 9 Credit: 200,862 RAC: 34

|

Still receiving and completing tasks, but credits have pretty much flatlined.  Nothing changed on my setups. |

Keith T. Send message Joined: 23 Aug 99 Posts: 962 Credit: 537,293 RAC: 9

|

https://setiathome.berkeley.edu/workunit.php?wuid=3946507571 Mine is the _2. I have had the task for around 24 hours. It was my only _2, so I checked it when the results list caught up. Validated in around 30 minutes. |

|

AllgoodGuy Send message Joined: 29 May 01 Posts: 293 Credit: 16,348,499 RAC: 266

|

Still not receiving enough work to keep the machines running. The First machine will be empty in about 20 minutes, the next one about an hour later. I think this is pretty much the case for everyone, but it adversely affects the highest-performing machines. Received tasks have definitely slowed down, and most check-ins are 0 tasks, but I get that occasional nice dump. Looking at the differences between our RACs, it's easy to see why. Edit: This machine is close to yours: https://setiathome.berkeley.edu/show_host_detail.php?hostid=8316299
26-Mar-2020 17:02:09 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:07:17 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:14:26 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:19:34 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:25:42 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:30:50 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:35:58 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:41:07 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:52:15 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 17:57:24 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:02:31 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:07:39 [SETI@home] Scheduler request completed: got 70 new tasks
26-Mar-2020 18:12:46 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:17:54 [SETI@home] Scheduler request completed: got 36 new tasks
26-Mar-2020 18:24:18 [SETI@home] Scheduler request completed: got 8 new tasks |

|
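For anyone wanting to tally their own event log the same way, here is a rough sketch that counts empty scheduler replies. It assumes the log lines have exactly the wording shown in the post above; the excerpt is the last five entries from that post, and the names `LOG_EXCERPT`, `PATTERN` and `summarize` are made up for this example and are not part of BOINC.

```python
import re

# Last five entries from the log in the post above.
LOG_EXCERPT = """\
26-Mar-2020 18:02:31 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:07:39 [SETI@home] Scheduler request completed: got 70 new tasks
26-Mar-2020 18:12:46 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:17:54 [SETI@home] Scheduler request completed: got 36 new tasks
26-Mar-2020 18:24:18 [SETI@home] Scheduler request completed: got 8 new tasks
"""

# Assumed message wording, copied from the lines above.
PATTERN = re.compile(r"Scheduler request completed: got (\d+) new tasks")


def summarize(log_text):
    """Count scheduler requests, empty replies, and total tasks received."""
    counts = [int(match.group(1)) for match in PATTERN.finditer(log_text)]
    return {
        "requests": len(counts),
        "empty": sum(1 for count in counts if count == 0),
        "tasks": sum(counts),
    }


summary = summarize(LOG_EXCERPT)
print(summary)
```

Pasting a longer stretch of the log into `LOG_EXCERPT` gives the same three totals for that period.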

TBar Send message Joined: 22 May 99 Posts: 5204 Credit: 840,779,836 RAC: 2,768

|

Obviously someone kicked the Server since I made that post as the numbers that were 3 hours old are now only 8 minutes old. People are still running out of work though since the 'Results received in last hour' is now at 115,283. You can't return what you don't have. Downloads are now moving again, and hopefully the first machine will be running again soon. |

|

AllgoodGuy Send message Joined: 29 May 01 Posts: 293 Credit: 16,348,499 RAC: 266

|

Still too early to say it has been fixed...better, but still a lot of zeros in scheduler logs.
26-Mar-2020 18:17:54 [SETI@home] Scheduler request completed: got 36 new tasks
26-Mar-2020 18:24:18 [SETI@home] Scheduler request completed: got 8 new tasks
26-Mar-2020 18:29:25 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:34:33 [SETI@home] Scheduler request completed: got 0 new tasks
26-Mar-2020 18:39:41 [SETI@home] Scheduler request completed: got 8 new tasks
26-Mar-2020 18:44:48 [SETI@home] Scheduler request completed: got 0 new tasks
Edit: My cache is still at 400 files vice 450ish like normal. |

juan BFP  Send message Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

No more BLC tapes and only 2 Arecibo tapes left to split for MB WUs. The end is a little closer now.

|

|

Ian&Steve C. Send message Joined: 28 Sep 99 Posts: 4267 Credit: 1,282,604,591 RAC: 6,640

|

I've never seen it without any BLC work. wild. ah well. we'll all just have to ride out with our remaining caches. and be all "please, sir. can I have some more" with the Arecibo tapes. Seti@Home classic workunits: 29,492 CPU time: 134,419 hours

|

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.