Cannot kill stuck tasks.


| Author | Message |

|---|---|

Joseph Stateson · Joined: 27 May 99 · Posts: 309 · Credit: 70,759,933 · RAC: 3

|

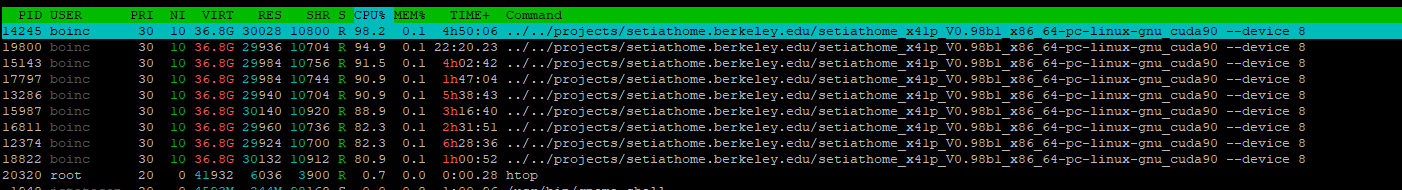

I tried the following:

jstateson@h110btc:/usr/bin$ boinccmd --quit

can't connect to local host

root@h110btc:/var/lib/boinc/projects# sudo killall -v boinc

boinc: no process found

sudo kill -9 12374

However, the tasks are all still running, hours after they timed out. The CPU % changes as they get a time slice, and occasionally the shared memory shows a change. I would hope that if a task is "stuck" and the client cannot kill it, the client would know not to assign subsequent tasks to the same device.

In other news, my cheap p102-100 "mining gtx1080ti" seems to work fine with that special SETI client, unlike the even cheaper p104-90, one of which got stuck. |

Jord · Joined: 9 Jun 99 · Posts: 15184 · Credit: 4,362,181 · RAC: 3

|

Try stopping the actual science applications that are still running. It looks like they were orphaned when the BOINC client process stopped, quit, or crashed. That's why you can't stop BOINC: it's no longer running. Your image only shows the science apps running, not the client. The science apps run under the boinc user account, but the boinc user isn't the same thing as the BOINC client.

Kill the processes by PID: https://www.linux.com/tutorials/how-kill-process-command-line/

E.g. kill SIGNAL 14245, where SIGNAL is the signal to send (such as -15 for SIGTERM or -9 for SIGKILL) and 14245 is the PID.

You can always try to reboot. |
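The advice above can be sketched as a small shell helper. This is an illustration under stated assumptions, not a BOINC tool: the function name `orphaned_tasks` is made up, it assumes orphaned science apps have been re-parented to PID 1 (on some systems a per-user process adopts orphans instead), and it assumes their command line contains `projects/`.

```shell
#!/bin/sh
# orphaned_tasks (hypothetical helper): read "PID PPID ARGS..." lines on
# stdin and print the PIDs whose parent is PID 1 and whose command line
# contains "projects/". Both conditions are assumptions about how orphaned
# BOINC science apps look; adjust them to match your own ps output.
orphaned_tasks() {
    awk '$2 == 1 && index($0, "projects/") { print $1 }'
}

# Typical use (not run here): list candidates, review them, then signal them.
#   ps -e -o pid= -o ppid= -o args= | orphaned_tasks
#   kill -TERM <pid>     # polite request first
#   kill -KILL <pid>     # only if the process ignores SIGTERM
```

Reviewing the list before sending any signal is deliberate: PID 1 is also the parent of many ordinary daemons, so the path filter is the only thing separating science apps from everything else.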

Joseph Stateson · Joined: 27 May 99 · Posts: 309 · Credit: 70,759,933 · RAC: 3

|

Thanks, Jord! Will try that next time. Makes sense. |

Jord · Joined: 9 Jun 99 · Posts: 15184 · Credit: 4,362,181 · RAC: 3

|

So what did you do this time then? I thought you still had the processes running. |

Joseph Stateson · Joined: 27 May 99 · Posts: 309 · Credit: 70,759,933 · RAC: 3

|

I rebooted, which cleared everything. However, that same GPU failed again and I cannot get rid of its stuck tasks. I do not think I need to, though, as I excluded the GPU and it is no longer being assigned tasks. I also aborted the tasks it was executing, as the "gpu_exclude" did not abort them and I did not want to wait for them to time out. Below is a screen text grab:

6238 boinc 30 10 36.8G 30100 10880 R 50.5 0.1 2h32:11 ../../projects/setiathome.berkeley.edu/setiathome_x41p_V0.58bl_x86_64-pc-linux-gnu_cuda90 --device 5

6644 boinc 30 10 36.8G 25560 10728 R 56.0 0.1 2h08:57 ../../projects/setiathome.berkeley.edu/setiathome_x41p_V0.58bl_x86_64-pc-linux-gnu_cuda90 --device 5

7571 boinc 30 10 36.8G 30104 10880 R 85.0 0.1 1h25:15 ../../projects/setiathome.berkeley.edu/setiathome_x41p_V0.58bl_x86_64-pc-linux-gnu_cuda90 --device 5

8622 boinc 30 10 36.8G 25548 10724 R 105. 0.1 30:22.42 ../../projects/setiathome.berkeley.edu/setiathome_x41p_V0.58bl_x86_64-pc-linux-gnu_cuda90 --device 5

I tried various kills on 6238 through 8622 but nothing happened. Is it harmless to leave these alone? I do see the %CPU changing constantly, so I assume they are getting a time slice.

I am going to pull the card. It is a p106-90, is not very efficient, and is probably overheating. As it is, I cannot reset that device because it has tasks associated with it:

jstateson@h110btc:~$ sudo nvidia-smi -i 5 -r

GPU 00000000:08:00.0 is currently in use by another process.

1 device is currently being used by one or more other processes (e.g., Fabric Manager, CUDA application, graphics application such as an X server, or a monitoring application such as another instance of nvidia-smi). Please first kill all processes using this device and all compute applications running in the system. |
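One possible reading of "nothing happened", offered as an assumption rather than a diagnosis of this machine: on Linux a signal, SIGKILL included, is only acted on when the process leaves kernel code, so a task stuck inside a driver call can keep running with the signal still queued. The sketch below checks for that using the `SigPnd` and `ShdPnd` hex masks in `/proc/<pid>/status`, where signal 9 corresponds to mask bit `0x100`. The function name `sigkill_pending` is made up for illustration.

```shell
#!/bin/sh
# sigkill_pending (hypothetical helper): read the text of /proc/<pid>/status
# on stdin and succeed if SIGKILL (signal 9, mask bit 0x100) is set in
# either the per-thread (SigPnd) or process-wide (ShdPnd) pending mask.
sigkill_pending() {
    awk '/^(SigPnd|ShdPnd):/ {
        # The third hex digit from the right holds bits 8-11;
        # an odd digit means bit 8 (SIGKILL) is set.
        d = substr($2, length($2) - 2, 1)
        if (d ~ /[13579bdfBDF]/) found = 1
    }
    END { exit found ? 0 : 1 }'
}

# Typical use (not run here), after a kill -9 that seemed to do nothing:
#   sigkill_pending < /proc/6238/status && echo "SIGKILL is queued but not yet acted on"
```

If the signal is shown as pending, repeating `kill -9` will not help; the process has to return from whatever kernel call it is stuck in, which may only happen after a reboot.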

rob smith · Joined: 7 Mar 03 · Posts: 22190 · Credit: 416,307,556 · RAC: 380

|

If a GPU is failing consistently, the best thing to do is physically remove it from the stack rather than trying to get software to solve a hardware problem. Working around it in software can be done, but the board will still consume power and may even affect other devices on the same bus.

Bob Smith

Member of Seti PIPPS (Pluto is a Planet Protest Society)

Somewhere in the (un)known Universe? |

|

TBar · Joined: 22 May 99 · Posts: 5204 · Credit: 840,779,836 · RAC: 2,768

|

"I rebooted which clear all ... 1 device is currently being used by one or more other processes (e.g., Fabric Manager, CUDA application, graphics application such as an X server, or a monitoring application such as another instance of nvidia-smi) ..."

When I have that problem, it's caused by the driver losing contact with the GPU. You can check it by opening NVIDIA X Server Settings and looking at the PowerMizer tab for that GPU. If the current values are listed as Unknown, then the driver has lost communication with the GPU and there isn't any way to control the GPU. You must reboot to regain communication with the GPU.

I've always been able to fix that problem by simply rearranging the power connections to the GPU. If you are using power adapters, change adapters, or just swap the cable with another GPU. It helps if you know the GPU works in other configurations or machines, and always make sure the GPU is connected to just one power supply. |
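A command-line counterpart to the PowerMizer check, offered as a sketch: the NVIDIA kernel driver reports GPU faults in the kernel log as "Xid" messages prefixed with `NVRM:`, so filtering the log for them can show whether the driver noticed a problem with the card. The function name `xid_lines` is made up for illustration, and which Xid codes appear, if any, depends on the driver and the fault.

```shell
#!/bin/sh
# xid_lines (hypothetical helper): keep only kernel-log lines that carry an
# NVIDIA "Xid" report. Reads log text on stdin so it works with the output
# of dmesg or journalctl -k. Returns success even when nothing matches.
xid_lines() {
    grep 'NVRM: Xid' || true
}

# Typical use (not run here; reading the kernel log may need root):
#   dmesg | xid_lines
```

An empty result does not prove the GPU is healthy; it only means the driver logged no Xid report since the last boot.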

Joseph Stateson · Joined: 27 May 99 · Posts: 309 · Credit: 70,759,933 · RAC: 3

|

I thought there was some hope, as there was an "R" in the status column, but if nvidia-smi says "can't find device, please reboot" then it would appear not much can be done. I put another board in its place, a quality EVGA 1060, and it is working fine with the same riser and cable. I have not had a problem since. |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.