Please raise the limit of 100 GPU WU per host.


| Author | Message |

|---|---|

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

Back to an old topic... With everything working fine (forget CreditNew), why not now raise the limit from 100 GPU WU per host to at least 100 WU per GPU? I'm almost sure that will not crash the DB, because fewer than 10% of users actually have more than 1 GPU per host, and it would surely let our big crunchers build a small cache so they avoid running empty for a few hours each Tuesday. I believe that's not too much to ask for...

|

|

tbret Joined: 28 May 99 Posts: 3380 Credit: 296,162,071 RAC: 40

|

You are a volunteer!! If you don't like something about SETI you can always LEAVE...you nasty person! Ask for something?!? How DARE you?!? It must be a Brazilian thing brought-on by too many Cuban Cigars and too much sunshine. ;-) BTW, how many thousands of dollars per year are you spending on electricity to crunch as mightily as you do???!??? ...you ...you ungrateful person! Back when I was your age, we used to get one, maybe two work units per week. And we were grateful. In fact, we LIKED it! (everybody calm down - just messin' with my teammate) |

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

|

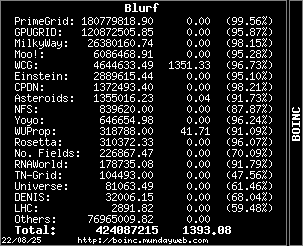

Blurf Joined: 2 Sep 06 Posts: 8962 Credit: 12,678,685 RAC: 0

|

Bad link Juan   |

|

Lionel Joined: 25 Mar 00 Posts: 680 Credit: 563,640,304 RAC: 597

|

"Back to an old topic..." Juan, I don't think that it is too much to ask either, and going towards 100 WU per GPU, in the first instance, would seem quite a sensible approach. |

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

Why? I really don't understand; we are just having a friendly conversation... before we start the best part of the day... @Lionel: That's exactly my idea: ask for something that could keep our crunchers working without any possibility of crashing the DB.

|

Wiggo Joined: 24 Jan 00 Posts: 34744 Credit: 261,360,520 RAC: 489

|

..... I wouldn't mind having that limit changed either, so long as those crappy rigs out there don't make things worse. Cheers. |

Blurf Joined: 2 Sep 06 Posts: 8962 Credit: 12,678,685 RAC: 0

|

No I meant the emoticons you put up didn't come through   |

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

Is there any possibility that someone who knows who is responsible, and has access to him, could "politely" ask about that? I really believe this simple change would help us a lot, and we already have the bandwidth and the split WU to do it now. I'm not asking for a 10-day cache as in the past, just 100 WU per GPU, nothing more. With the newest, fastest cards that can easily crunch 12 WU/hr, even a 100 WU per GPU cache will last for just 8 hrs... @Blurf - The emoticons appear normal on my screen with that link.

|

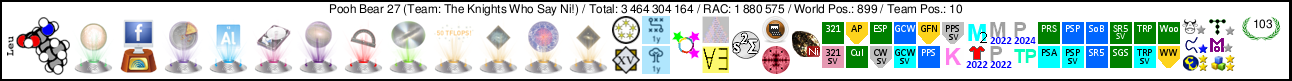

Pooh Bear 27 Joined: 14 Jul 03 Posts: 3224 Credit: 4,603,826 RAC: 0

|

My theory is that the reason they are keeping the status quo is that SETI is busy enough. Their databases get hit hard and are sometimes overloaded with the amount of work already being done. Adding more stress would be problematic. Plus they may not be able to keep up with creating enough work for the extra amount people could grab.  My movie https://vimeo.com/manage/videos/502242 |

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

"Plus they may not be able to keep up with creating enough work for the extra amount people could grab." But the server status page shows exactly the opposite: a lot of WU ready to be downloaded for crunching (314,429 by the last stats for MB alone; AP splitting is off). I imagine (if anyone knows better, please correct me) that probably fewer than 1000 volunteers have more than 1 GPU on their hosts, so that could add about 100K more WU to a DB that currently handles about 3MM WU, so it's a very small increase in its load. Remember, I'm just asking for 100 WU per GPU, not for 10 days of cache... and to keep the 100 WU CPU limit untouched.

|
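The load estimate in the post above can be checked with a quick back-of-the-envelope calculation. The sketch below is only illustrative: the host count (1000) and the 3 million results in the field are the rough figures quoted in the post, not measured values, and the function names and the average number of extra GPUs per host are assumptions made up for this example.

```python
def extra_results(multi_gpu_hosts, extra_gpus_per_host, per_gpu_limit=100):
    """Additional results in the field if every extra GPU gets its own queue."""
    return multi_gpu_hosts * extra_gpus_per_host * per_gpu_limit


def relative_increase(extra, results_in_field):
    """Fractional growth of the results in the field caused by the extra work."""
    return extra / results_in_field


# Rough figures quoted in the thread (guesses, not measurements).
hosts = 1000                  # hosts with more than one GPU
results_in_field = 3_000_000  # results currently out in the field

# Hypothetical average number of GPUs beyond the first on each of those hosts.
for extra_gpus in (1, 2, 3):
    extra = extra_results(hosts, extra_gpus)
    share = relative_increase(extra, results_in_field)
    print(f"{extra_gpus} extra GPU(s) per host: +{extra:,} WU ({share:.1%})")
```

With one extra GPU per host this reproduces the 100K figure, which is a little over 3% of 3 million. The result scales linearly with the number of multi-GPU hosts and GPUs per host, so the estimate is only as good as those two guesses.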

Pooh Bear 27 Joined: 14 Jul 03 Posts: 3224 Credit: 4,603,826 RAC: 0

|

"But the server status page shows exactly the opposite: a lot of WU ready to be downloaded for crunching (314,429 by the last stats for MB alone; AP splitting is off)." That is just 1/10th of what is in the wild already. Over 3M are being crunched currently. Also, a lot of those who have multiple GPUs also crunch multiple units on each GPU, so your numbers are at the very low end. You also ignored the whole first part of my message: that SETI is stressed by the amount of work it already gives out. I have a hard time getting units if I do not keep a small cache of them, and my backup projects start crunching. When I look at the logs at those times, they are more or less telling me SETI was too busy to send me units, and the client backs off.  My movie https://vimeo.com/manage/videos/502242 |

Cliff Harding Joined: 18 Aug 99 Posts: 1432 Credit: 110,967,840 RAC: 67

|

Juan, both of my machines have kept a cache of 10 & 10 for many years, and very seldom do I run out of GPU work, unless it's an extended outage. With this setting the task manager keeps a constant total of 100 tasks each for both CPU & GPU processing. The only real problem that I have is that I've almost completely run out of AP work on my i7/950 machine, which is only coming in dribs and drabs (2 CPU & 4 OpenCL), and I have had to resort to all v7 to fill in the gaps. Whenever they decide to start splitting AP again, that machine will go back to crunching AP only.   I don't buy computers, I build them!! |

HAL9000 Joined: 11 Sep 99 Posts: 6534 Credit: 196,805,888 RAC: 57

|

With the current limits we have been running between 3-4M results out in the field on most days, it looks like. So I expect they chose the limits to allow for future growth until they can solve the back-end issues, with the system meant to stop sending out work once a specific number of results were out in the field. I would guess they also expected the introduction of the v7 apps to give them more time to work on the back-end issues. Looking at the data, the number of results is climbing to nearly the same level as before the v7 apps were introduced. If you really feel the need for your computer to have lots to do constantly, then I would suggest you configure 1 instance of BOINC per GPU. This will give each GPU its own queue of 100 tasks. SETI@home classic workunits: 93,865 CPU time: 863,447 hours  Join the [url=http://tinyurl.com/8y46zvu]BP6/VP6 User Group[/url]

|

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

@Cliff Even if you set a 10+10 day cache, that makes no difference to the cache on SETI (unless, of course, you have a very slow host that can't crunch 100 WU in 20 days... not my case). Looking only at GPU work, the current limit is 100 WU per host... that's the point. A 2x690 host (not one of the top crunchers), for example, crunches 8 WU every 15 minutes (not shorties, of course), so that 100 WU cache gives only about 3 hours of "safe" margin, and each time something happens, like the Tuesday scheduled maintenance, the host empties its cache and runs for hours with nothing to do. Now imagine what happens on a host that crunches 18 WU at a time even faster (12 minutes per WU at most)? In less than 2 hours it's all gone. CPU tasks are exactly the opposite: even a fast host crunches a WU in about 2 hours, so on a top CPU cruncher (very few of them actually running SETI) with, let's say, 24 cores, a 100 WU CPU cache lasts no less than 8 hours. But most of the fastest GPU crunchers here have 12 or fewer cores (just look at the list of the top hosts), so a 100 WU cache holds for about half a day or more. If they really want to reduce the DB hits, the best thing to do is change the limit to 50 WU per CPU host, not limit the multi-GPU hosts to 100... As I always say, somebody forgot to balance things on SETI: the 100 WU CPU limit is very big even on multi-CPU hosts. Did you ever see a CPU-based cruncher complain about that? The same number, 100 WU per GPU host, is too small for the fastest GPUs and even smaller for multi-GPU hosts. Some balance must be achieved. And as the new generation of GPUs (780+) really starts to arrive at SETI, a 100 WU cache will be small even for a single-GPU host once the new optimized apps are available to the general public.
One last thing: the servers currently have the capacity to handle the WU crunched daily by the big GPU crunchers; this is just an adjustment to help us get through the downtime. You could say "go to a secondary project", but even if BOINC can handle that, the real problem is that the configuration differs from one project to another, so switching a big cruncher between projects is not as easy a task as most believe. There are a lot of secondary problems with doing that; we could discuss them in another thread (off topic).

|
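The cache lifetimes quoted in the post above follow from simple arithmetic. Here is a minimal sketch, assuming a steady crunch rate and no new work arriving during an outage; the function name and the scenario labels are invented for illustration, and the per-task times are the rough figures from the post, not measurements.

```python
def cache_hours(cache_size, concurrent_tasks, minutes_per_task):
    """Hours until a full cache runs dry at a steady crunch rate."""
    tasks_per_hour = concurrent_tasks * 60 / minutes_per_task
    return cache_size / tasks_per_hour


# (label, cache size in WU, tasks running at once, minutes per task)
scenarios = [
    ("2x690 host, 8 WU every 15 min", 100, 8, 15),
    ("GPU host, 18 WU at a time, 12 min each", 100, 18, 12),
    ("24-core CPU host, 2 h per WU", 100, 24, 120),
    ("12-core CPU host, 2 h per WU", 100, 12, 120),
]

for label, cache, tasks, minutes in scenarios:
    print(f"{label}: {cache_hours(cache, tasks, minutes):.1f} h")
```

Under these assumptions the four cases come out at roughly 3, 1, 8 and 17 hours, which matches the margins described in the post: a 100 WU limit covers a Tuesday outage on a CPU-only host far more comfortably than on a fast multi-GPU host.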

Cliff Harding Joined: 18 Aug 99 Posts: 1432 Credit: 110,967,840 RAC: 67

|

@Juan, one of the tricks that I found was to add `<report_results_immediately>1</report_results_immediately>` to cc_config.xml. This simple line keeps the queue full the great majority of the time. If I report x number of tasks and the total number of tasks for either the CPU or the GPUs falls below 100, then the queue is filled accordingly.   I don't buy computers, I build them!! |

HAL9000 Joined: 11 Sep 99 Posts: 6534 Credit: 196,805,888 RAC: 57

|

@Cliff My 24-core CPU-only box has actually run out during Tuesday maintenance cycles a few times. It just falls over to a backup project, grabs some work, and then resumes SETI@home once it is able. So I don't really feel the need to complain about anything in that respect. Letting it run up to 12 AP at a time was helping, but the AP tasks have run dry again for a while. While I liked the limits they used the first time, per CPU core & GPU, better, my desires have no effect on the project's needs. SETI@home classic workunits: 93,865 CPU time: 863,447 hours  Join the [url=http://tinyurl.com/8y46zvu]BP6/VP6 User Group[/url]

|

juan BFP Joined: 16 Mar 07 Posts: 9786 Credit: 572,710,851 RAC: 3,799

|

@Cliff If you look at the description, the use of `<report_results_immediately>1</report_results_immediately>` is not recommended, exactly because it increases the hits on the servers. @HAL9000 As I said... very few have 24 cores... you are one of the lucky ones. A 100 WU CPU cache must last for at least 8 hrs on your host, so only in very "rare" situations will your host be out of work. As you don't do GPU work on it, you have no (or very few) inter-project configuration problems. That is totally different from our hosts, which run dry in just 2-3 hours... and need the configuration changed almost completely when they switch to other projects. To avoid that, I simply leave the host running empty for hours... a big waste of computing power, I agree, but it's better than having to deal with overheating and/or melted connectors... To try to bypass the 100 WU limit I already split the GPUs from the 3xGPU hosts into 2xGPU hosts, but even then the 2xGPU hosts continually run dry when no AP work is available. As I start to receive a few new 770s to replace the old 580s, the problem will get worse very soon. My question is... why not give us a sweeter life with a few more WU? I'm not asking for 1000s of WU, just 100 per GPU, not per host... I really believe I'm not asking too much.

|

Blurf Joined: 2 Sep 06 Posts: 8962 Credit: 12,678,685 RAC: 0

|

|

Donald L. Johnson Joined: 5 Aug 02 Posts: 8240 Credit: 14,654,533 RAC: 20

|

Right after the servers were moved to the CoLo facility, Matt mentioned in this message that he and Eric had throttles in place to limit bandwidth usage due to issues with, among other things, WorkUnit Storage I/O speeds. Matt noted that the WU Storage I/O was just keeping up with demand, but they would try to improve that situation as they got time. Since the 100/100 limit for crunchers with GPUs is still in place, and having heard no more from either Matt or Eric on the subject, I must conclude that the I/O issues have not been solved, and so I would expect NO changes in WU limits. But as Blurf noted, the one to ask is Eric. Donald Infernal Optimist / Submariner, retired |
