credits and workunits: a fair system needed for Boinc

Message boards : Number crunching



Jord · Joined: 9 Jun 99 · Posts: 15184 · Credit: 4,362,181 · RAC: 3

> I'm not saying it's perfect to go by WUs completed/hours processed, but it'd be a lot less corrupted than the system is now.

What you're forgetting, and what everyone seems to forget, is that BOINC is more than just SETI@home. The BOINC back-end version that the developers may be able to run on this project is not necessarily the version that other projects will ever run. As such, whatever new credit scheme is introduced and implemented in the BOINC back-end will first have to be used by all BOINC projects before it can be judged a failure or a success. However, there are plenty of projects where the admin thinks he knows better and disables the credit portion of the back-end. So then it comes down to the client, which will have to start treating projects with the same resource share as projects that should earn the same RAC, so that projects that overpay (and the client knows about this) eventually receive less and less CPU/GPU attention. Of course, then you have to convince users to upgrade to that BOINC version. In the end there will be projects with outdated back-end code and users running outdated BOINC versions. Do we care, though? I don't. It's entirely your own choice what you do with BOINC. Just don't force your beliefs onto others.
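The client-side idea above can be made concrete with a small sketch. It assumes the client knows each project's resource share and its average credit per CPU-hour (both hypothetical inputs; BOINC's real client scheduler is not modelled here). Giving each project a time fraction proportional to share ÷ credit rate makes RAC follow the resource shares, so a project that overpays automatically gets less time:

```python
import math


def time_fractions(shares: dict[str, float],
                   credit_per_hour: dict[str, float]) -> dict[str, float]:
    """Split processor time so each project's RAC tracks its resource share.

    Hypothetical model: RAC_p = time_p * credit_per_hour_p. Requiring RAC_p
    to be proportional to shares[p] gives time_p proportional to
    shares[p] / credit_per_hour[p], so an overpaying project gets less time.
    """
    weights = {p: shares[p] / credit_per_hour[p] for p in shares}
    total = math.fsum(weights.values())
    return {p: w / total for p, w in weights.items()}


if __name__ == "__main__":
    rates = {"A": 30.0, "B": 60.0}  # credits per CPU-hour; "B" overpays
    fractions = time_fractions({"A": 100.0, "B": 100.0}, rates)
    for project, frac in fractions.items():
        # Time fraction, then credit earned per hour of total crunching time.
        print(project, round(frac, 3), round(frac * rates[project], 1))
```

With equal shares, project "B" (paying twice as much per hour) gets a third of the time, and both end up with the same RAC.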

ML1 · Joined: 25 Nov 01 · Posts: 20252 · Credit: 7,508,002 · RAC: 20

> ... The individual user can track hours per WU to see if their tweaks and hardware upgrades have the intended results. Changes in WU size and/or complexity (which will continue to happen) will just require you to "wait a bit" till the numbers settle down again.

Except that the variable nature of WU complexity means you may never see anything settle, as the WUs continuously vary in 'size'. Having something whereby you can compare/calibrate against real-world performance would be good, both for measuring how effective whatever tweaks might be, and for seeing some real science in measuring the "BOINC supercomputer" performance.

> ... Whether their motive is more science or more credit is really irrelevant, as long as more science gets done.

That might be the case; however, it is the credit freaks who contribute most to the science!

Happy fast crunchin', Martin

See new freedom: Mageia Linux
Take a look for yourself: Linux Format
The Future is what We all make IT (GPLv3)


-BeNt- · Joined: 17 Oct 99 · Posts: 1234 · Credit: 10,116,112 · RAC: 0

Statistical multipliers are the answer! lol. Take 1 credit per work unit × a multiplier for the predetermined speed of your computer; then take the fastest machine and the wingman, average out that number, multiply it by 1, and that's the credits. Of course this would also require a much better system benchmark, one that is accurate within limits. Every quarter the project takes all the averages and averages those to reach a "milestone" credit average, and you can be granted no less than that per work unit. Once it has been set, it will do nothing but climb along with hardware getting faster. This would also account for the time taken, newer hardware, and how many work units you have processed. It doesn't need to be as complicated as it is, because after all this isn't a competition. Or how about simply splitting CPU, GPU, and AP tasks: AP tasks are 2 credits, CPU tasks are 1 credit, GPU tasks are 0.50-0.75 credits, and be done with it. If you feel cheated because someone with a faster machine is pushing out more units and racking up a higher RAC, you should upgrade. When it comes down to it, it doesn't really matter. And with the new system assigning wildly different credits for everything across the board at times, it's a joke at best.

Traveling through space at ~67,000mph!
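One reading of the two suggestions above, a flat credit per task type plus a quarterly "milestone" floor that only ever climbs, is sketched below. The GPU value (the midpoint of the proposed 0.50-0.75 range) and all function names are assumptions made for this example:

```python
from statistics import mean

# Flat credits per task type as suggested in the post. The GPU value is the
# midpoint of the proposed 0.50-0.75 range, an assumption for illustration.
FLAT_CREDIT = {"AP": 2.0, "CPU": 1.0, "GPU": 0.625}


def flat_credit(task_type: str) -> float:
    """Credit for one task under the flat per-type scheme."""
    return FLAT_CREDIT[task_type]


def next_floor(period_averages: list[float], previous_floor: float = 0.0) -> float:
    """Quarterly "milestone": the average of the period averages.

    The floor is never lowered, so it can only climb as hardware gets faster.
    """
    return max(previous_floor, mean(period_averages))


def credit_with_floor(raw_credit: float, floor: float) -> float:
    """Grant at least the current milestone floor per work unit."""
    return max(raw_credit, floor)


if __name__ == "__main__":
    floor = next_floor([50.0, 62.0, 58.0])                  # first quarter
    floor = next_floor([48.0, 52.0], previous_floor=floor)  # slower quarter: floor holds
    print(round(floor, 2), round(credit_with_floor(41.0, floor), 2), flat_credit("AP"))
```

Keeping the floor monotonic is what makes it "do nothing but climb"; a slower quarter leaves it unchanged instead of pulling it down.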

betreger · Joined: 29 Jun 99 · Posts: 11361 · Credit: 29,581,041 · RAC: 66

I have been doing this a long time and I just do not get this credit system. Two VLARs with the same run time have been seen with a 25% difference in credit. The VLARs average somewhere around 40 credits per hour and the shorties only 30. I use the same amount of electricity per hour. The work I did was the same; I feel the credit should be the same per hour. The divergent scores make no sense to me. The only value credits have is to track the health and performance of my machine, and this is a very crude tool for that at best. I will crunch on even if I do not get a toaster.

SciManStev · Joined: 20 Jun 99 · Posts: 6652 · Credit: 121,090,076 · RAC: 0

> I have been doing this a long time and I just do not get this credit system. Two VLARs with the same run time have been seen with a 25% difference in credit. The VLARs average somewhere around 40 credits per hour and the shorties only 30. I use the same amount of electricity per hour. The work I did was the same; I feel the credit should be the same per hour. The divergent scores make no sense to me.

The only thing I can say is that we are all gauged by the same system. As long as that is true, then it's OK.

Steve

Warning, addicted to SETI crunching!
Crunching as a member of GPU Users Group.
GPUUG Website

W-K 666 · Joined: 18 May 99 · Posts: 19045 · Credit: 40,757,560 · RAC: 67

This is a topic that has been discussed, in public, since day one of BOINC, and without the cooperation of all the project administrators it is never going to be solved. The benchmark × time system is flawed: just try running the benchmarks on the same computer under two different OSes. Linux and Windows will give very different results. As pointed out by others, counting tasks has its problems, as an AP task takes Y times longer than an MB task, so there would at least have to be a table with a heading for each application used. Counting tasks also comes with problems that we have already had: there are people who will run a script and discard all MB tasks that are not VHAR. Hours run also makes no sense; running a top-end GPU for one day is probably equal to running a 233 MHz Pentium MMX for a year. The FLOP-counting method used here prior to this present random number generator was probably the best system, but it has been rejected by most other projects and also by the infamous, unseen, non-communicative Dr. A.
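To see why benchmark × time is OS-sensitive, here is a minimal sketch of such a claim. The shape (CPU time scaled by the average of the Whetstone and Dhrystone benchmarks) follows the general benchmark-based approach; the reference machine and the `credits_per_reference_day` constant are assumptions, not BOINC's exact figures. The same task on the same hardware yields a different claim whenever the OS reports different benchmark numbers:

```python
SECONDS_PER_DAY = 86_400.0
# Assumed reference machine: 1e9 Whetstone FLOPS and 1e9 Dhrystone IOPS.
REFERENCE_OPS_PER_SEC = 1e9


def claimed_credit(cpu_seconds: float, whetstone_flops: float,
                   dhrystone_iops: float,
                   credits_per_reference_day: float = 100.0) -> float:
    """Benchmark x time claim: CPU time scaled by the averaged benchmarks.

    credits_per_reference_day is an assumed constant, exposed as a parameter
    rather than presented as BOINC's official value.
    """
    benchmark = (whetstone_flops + dhrystone_iops) / 2.0
    days = cpu_seconds / SECONDS_PER_DAY
    return days * (benchmark / REFERENCE_OPS_PER_SEC) * credits_per_reference_day


if __name__ == "__main__":
    # Same task, same CPU time, same hardware; only the benchmark figures the
    # two operating systems report differ (numbers invented for illustration).
    os_a = claimed_credit(3 * 3600, whetstone_flops=2.0e9, dhrystone_iops=6.0e9)
    os_b = claimed_credit(3 * 3600, whetstone_flops=2.0e9, dhrystone_iops=4.0e9)
    print(f"OS A claims {os_a:.2f}, OS B claims {os_b:.2f}")
```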

Slavac · Joined: 27 Apr 11 · Posts: 1932 · Credit: 17,952,639 · RAC: 0

> I have been doing this a long time and I just do not get this credit system. Two VLARs with the same run time have been seen with a 25% difference in credit. The VLARs average somewhere around 40 credits per hour and the shorties only 30. I use the same amount of electricity per hour. The work I did was the same; I feel the credit should be the same per hour. The divergent scores make no sense to me.

Valid point, and one that keeps me from whining too much. I would just like a stable benchmark from which credit is awarded.

Executive Director GPU Users Group Inc. - brad@gpuug.org

|

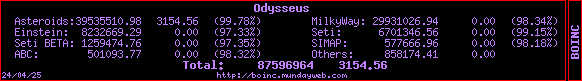

Odysseus · Joined: 26 Jul 99 · Posts: 1808 · Credit: 6,701,347 · RAC: 6

My two cobblestones’ worth: I’d just as soon see each project count WUs or time (possibly in a number of categories, whatever makes sense for the kind of work it does) and leave it to third parties to publish cross-project stats. I think this would make the whole issue much less contentious, eliminating the constant pressure on admins to adjust their credit system where it’s perceived to be either over-generous or miserly. The various teams that run stats sites could use weighting methods or algorithms of their choosing (some do already, e.g. the BOINCstats “World Cup” rankings) or present comparisons in average performance, so I don’t see that users would lose anything if the universal credits were abandoned. Meanwhile projects would be freer to ‘reward’ their volunteers as they see fit.

rob smith · Joined: 7 Mar 03 · Posts: 22184 · Credit: 416,307,556 · RAC: 380

While the idea of counting WUs completed for each project sounds good and fair, it breaks down somewhat for projects that use very large WUs, or have WUs of highly variable size. As far as I'm aware, the concept behind the credit system was to allow comparisons between projects, not just within a project. To achieve this, one hour of processor time has to be worth the same on all projects, without the "loyalty bonuses", or the miserly credits per hour, that come from various projects. Obviously such a system has to take note of the performance of the processor concerned, and the "efficiency" of the software. Now, the processor performance is fairly easy to grasp, and so to calibrate, but the "efficiency" of the software depends on quite a number of things, including the ratio of maths to logic operations in the core algorithm, the amount of data fetched and dispatched from bulk store, the use of swap/cache store, and so on. We've seen this on S@H, where the Lunatics and others have taken the basic algorithm supplied by the lab and "twisted" it to make far better use of the processor, i.e. increased the "efficiency" of the software on a given hardware platform. Thinking about it, each project team must have a fair idea of the balance between the various types of operation, so could simply rank the balance for each of the key operation classes, e.g. FOPS, IOPS, Fetch, Logic. These figures need to be normalised so they add up to 100%. They must add up to 100% because this is a description of the whole software: thus a project might have 80% FOPS, 12% IOPS, 1% Fetch and 1% Logic, while another might have 8% FOPS, 20% IOPS, 22% Fetch and 50% Logic. By looking at these figures you can see that the first project will spend most of its life sitting in the processor, while the second is forever fetching data from bulk store.

There needs to be a way of "timing" the operation classes on an "ideal" hardware platform, so you might give a Logic operation a score of 1, a Fetch a score of 90, an IOP a score of 2, and a FOP a score of 7. Multiply the two figures for each class of operation, then add all the products together, and you have the project weighting: a figure that is used to scale actual runtime to normalised runtime, which is then used to calculate the award per WU.....

Bob Smith
Member of Seti PIPPS (Pluto is a Planet Protest Society)
Somewhere in the (un)known Universe?
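Rob's weighting recipe (normalise the operation mix, multiply each share by a per-class score, sum the products) can be sketched as follows. The scores are the illustrative ones from the post, and how the weighting is then applied to runtime is left open, as in the post. The `normalise` step rescales the raw percentages first, so a mix such as 80/12/1/1, which totals 94, still yields shares that sum to 100%:

```python
import math

# Illustrative "ideal platform" cost per operation class, taken from the post.
OP_SCORES = {"FOP": 7.0, "IOP": 2.0, "Fetch": 90.0, "Logic": 1.0}


def normalise(mix: dict[str, float]) -> dict[str, float]:
    """Scale an operation mix so its shares add up to 1 (i.e. 100%)."""
    total = math.fsum(mix.values())
    if total <= 0:
        raise ValueError("operation mix must have a positive total")
    return {op: share / total for op, share in mix.items()}


def project_weighting(mix: dict[str, float],
                      scores: dict[str, float] = OP_SCORES) -> float:
    """Sum of (normalised share x per-class score) over all operation classes."""
    shares = normalise(mix)
    return math.fsum(shares[op] * scores[op] for op in shares)


if __name__ == "__main__":
    compute_bound = {"FOP": 80, "IOP": 12, "Fetch": 1, "Logic": 1}
    fetch_heavy = {"FOP": 8, "IOP": 20, "Fetch": 22, "Logic": 50}
    print(round(project_weighting(compute_bound), 2))  # 7.18
    print(round(project_weighting(fetch_heavy), 2))    # 21.26
```

The fetch-heavy project ends up with roughly three times the weighting of the compute-bound one, driven almost entirely by the expensive Fetch class.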

kittyman · Joined: 9 Jul 00 · Posts: 51468 · Credit: 1,018,363,574 · RAC: 1,004

Ya know......... This credit debate could go on ad infinitum, and no matter what, not everybody is EVER gonna be happy with the outcome. I'm walking out the door on it. I'm gonna crunch Seti until my wheels come off, we find Mr. Green Jeans, or the project goes under. I would like to see a workable system whereby computer time and power spent on various projects could be equitably awarded token credits so that somebody's efforts for one project could be compared to another person's on a different project. It may not be possible, given the range in the nature of the mathematical/computational work required to do the science on various projects. I guess getting as close as possible is better than not trying at all, and I think DA is trying to do that. And he is gonna gore somebody's ox no matter HOW he tries to do that. The biggest flap recently was a certain project awarding 'double credit days' to make up for downtime on their project. Hell.....Seti's never done that, and they have had a few major outages. It was posted that Seti had made some exceptions and granted credit due to technical difficulties for work that had been turned in late or had validation problems. But that was just granting the standard credit rate for work that was already done. NOT granting double credits for future work to make up for lost time. Sooooo...I guess other projects can grant whatever the heck they want to. Wrong as it may be, their users aren't gonna earn their free toaster any sooner than I am gonna....LOL. Just don't come around the Seti boards crowing about the bazillion credits you earned last week somewhere else. 'Cuz that dog don't hunt 'round here, boys.

"Freedom is just Chaos, with better lighting." Alan Dean Foster

Link · Joined: 18 Sep 03 · Posts: 834 · Credit: 1,807,369 · RAC: 0

> Results received: 93865; Total CPU time: 98.567 years; Average CPU time per work unit: 9 hr 11 min 55.7 sec

Such a system works only if each WU takes (almost) exactly the same amount of time; otherwise it leads to cherry picking and does not tell at all how much one actually did for the project, or at least tells far less than the current credit system. Someone could reach the same numbers with an old machine while crunching only shorties, while someone else who crunches everything that comes would need a much faster machine to get the same numbers, especially with APs in between. Looking at the numbers, you could think both did the same for the project, even if the first has done not even half of what the other did. The current system would be OK so far if it were not a random credit generator; that should be fixed, of course. It should not be too difficult: for example, taking AR into account, one could set boundaries within which the credit is expected, which would eliminate things like 0 or even negative credits, or 10000+ credits for AP WUs, and so on.
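The AR-based boundaries suggested above could look roughly like a lookup plus a clamp. The band edges, expected credits and the 0.5×/2× tolerance below are all invented for illustration; a real project would have to derive them from its own data:

```python
from bisect import bisect_right

# Hypothetical expected credit per angle-range (AR) band. The band edges and
# credit values are invented for illustration, not SETI@home figures.
AR_EDGES = [0.05, 0.5, 1.0]                  # bands: <0.05, <0.5, <1.0, >=1.0
EXPECTED_CREDIT = [120.0, 90.0, 60.0, 30.0]  # one value per band


def expected_credit(angle_range: float) -> float:
    """Look up the expected credit for the band containing angle_range."""
    return EXPECTED_CREDIT[bisect_right(AR_EDGES, angle_range)]


def bounded_credit(claimed: float, angle_range: float,
                   low: float = 0.5, high: float = 2.0) -> float:
    """Clamp a claim into [low * expected, high * expected] for its AR band."""
    expected = expected_credit(angle_range)
    return min(max(claimed, low * expected), high * expected)


if __name__ == "__main__":
    # Negative, zero, sane and absurd claims for a WU with AR 0.44.
    for claim in (-12.0, 0.0, 95.0, 10_500.0):
        print(claim, "->", bounded_credit(claim, angle_range=0.44))
```

Claims inside the band pass through unchanged; only the impossible ones (negative, zero, or 10000+) get pulled back to the edges.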

alan · Joined: 18 Feb 00 · Posts: 131 · Credit: 401,606 · RAC: 0

Number of work units crunched is the only sensible means of comparison within SETI@home. Credit should be based on how much processed data you returned, with separate lists for different types of unit: VLAR, VHAR, MB and AP, say. Across projects, use something different, and I don't care what it is; it will be largely meaningless because of the impossibility of comparing the value of the projects themselves. In any case, the relative worth of the various projects is only meaningful to the individual contributors/crunchers, and cannot and should not be assigned by "the management". Sure, those with multiple GPUs, water-cooled overclocked units and power bills the size of a major town's will rack up more units. That's more data for the project, and a better chance of discovering ETI, which is what we all want, isn't it? The problem with the last couple of credit systems is that we do not get a consistent day's credit for a day's work. Credit per hour spent waiting for the unit to finish varies wildly and arbitrarily, which is unfair, confusing and demotivating. Over the past month I've had units that produced credit ratings from a low of 475 CPU-seconds per credit to a high of 179 CPU-seconds per credit. Both of those were ordinary MB units, not VLARs or AP. With such huge and unscientific variance in results, it is difficult to escape the conclusion that credits are meaningless. To put it another way, why should my credits depend on how much work my wingman did?
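Converting the two figures quoted above into credits per CPU-hour makes the spread concrete: roughly 7.6 versus 20.1 credits per hour, a factor of about 2.65 between two ordinary MB units. The helper name is just for this sketch:

```python
SECONDS_PER_HOUR = 3600.0


def credits_per_cpu_hour(cpu_seconds_per_credit: float) -> float:
    """Convert a 'CPU-seconds per credit' figure into credits per CPU-hour."""
    return SECONDS_PER_HOUR / cpu_seconds_per_credit


if __name__ == "__main__":
    worst = credits_per_cpu_hour(475.0)  # lowest-paying MB unit quoted
    best = credits_per_cpu_hour(179.0)   # highest-paying MB unit quoted
    print(f"{worst:.2f} vs {best:.2f} credits/CPU-hour, spread x{best / worst:.2f}")
```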

W-K 666 · Joined: 18 May 99 · Posts: 19045 · Credit: 40,757,560 · RAC: 67

> Number of work units crunched is the only sensible means of comparison within SETI@home. Credit based on how much processed data you returned. Separate lists for different types of unit - VLAR, VHAR, MB and AP, say.

But then someone has to compare the time taken to do the different types of tasks with the default apps for all projects on the same computer. Then, once he has a comparison, that someone is going to get complaints because they haven't used the latest app for "these tasks".

There will always be variations, especially with floating-point ops, because not all similar tasks take the same time. FPUs are similar to humans: it is easy to multiply 2.2 × 0.5, but 1.414... × 1.732... takes a little longer, and the sqrt of 4 is going to be easier than the sqrt of 5.

That's part of the anti-cheating thing: they don't want you to get the 1000 cr you claimed for a VHAR. It goes: if there are 3 or more tasks, discard the highest and lowest claims and grant the mean of the other(s); if there are two tasks, discard the highest claim and grant the other; if there is only one task, the project verifies it by some other means.
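The granting rule described above is short enough to write out directly. This follows the post's description only; the function name and the `None` return for a single result are choices made for this sketch, not BOINC's actual validator code:

```python
from statistics import mean
from typing import Optional


def granted_credit(claims: list[float]) -> Optional[float]:
    """Old-style quorum credit, following the rule described in the post.

    3+ claims: drop the single highest and lowest, grant the mean of the rest.
    2 claims:  drop the highest, grant the other.
    1 claim:   nothing granted here; the project has to verify it another way.
    """
    if len(claims) >= 3:
        middle = sorted(claims)[1:-1]
        return mean(middle)
    if len(claims) == 2:
        return min(claims)
    return None


if __name__ == "__main__":
    print(granted_credit([1000.0, 52.0, 55.0]))  # inflated VHAR claim discarded
    print(granted_credit([1000.0, 52.0]))        # highest of two discarded
```

Because the highest claim is always dropped, a single inflated claim can never set the granted value on its own.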

Link · Joined: 18 Sep 03 · Posts: 834 · Credit: 1,807,369 · RAC: 0

Yeah, that might have worked like this with the old system, but with the new one it's OK to claim over 10000 for AP, or negative credits: in both cases the credit is granted if the claim comes from the stock app and not from an anonymous platform.

HAL9000 · Joined: 11 Sep 99 · Posts: 6534 · Credit: 196,805,888 · RAC: 57

> Results received: 93865; Total CPU time: 98.567 years; Average CPU time per work unit: 9 hr 11 min 55.7 sec

That is true, and it's why I shared my thoughts on how a weighted system might work in my first message in this thread. This second message was more just explaining how I liked to use the old numbers for stats/benchmarks.

I would not change any of the anti-cherry-picking checks or other things currently in place. It is just about the numbers they show us for the work we have done. I like the Application details that were added, and I think APR could be a good benchmark for system/application performance between machines. However, it seems like it could still use a bit more work. Right now it just seems like an arbitrary number and doesn't mean much to me, other than that it is generally high on faster machines, sometimes.

SETI@home classic workunits: 93,865 CPU time: 863,447 hours
Join the BP6/VP6 User Group (http://tinyurl.com/8y46zvu)

rob smith · Joined: 7 Mar 03 · Posts: 22184 · Credit: 416,307,556 · RAC: 380

The last line of Alan's comment is quite telling: why should what you get depend on what someone else has done? I agree, variations in score just because someone else has produced their result in more, or less, time than you aren't "fair". For a given WU, any pair of crunchers should take the same "normalised time", within a small margin of error. Normalised time is the "real time" corrected for the performance of the processor system in use, thus 1 "normal" second on a '590 GPU will be far shorter in real time than 1 "normal" second on an old P4. If you think about it, this is "quite fair". One of the problems with normalisation is that you need to calibrate the process, and that means defining a set of calibration WUs that need to be run on every cruncher, regularly and with a high degree of anonymity. I'm not sure how this would sit with those who "stack the odds" by transferring data between crunchers for whatever reason.
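A minimal sketch of normalised time, assuming each host's speed is measured by timing one calibration WU against a reference machine. The reference timing and the host timings are hypothetical, and the anonymity and "stacking the odds" concerns raised above are not modelled:

```python
# Hypothetical: seconds a reference machine needs for the calibration WU.
REFERENCE_CALIBRATION_SECONDS = 100.0


def speed_factor(host_calibration_seconds: float) -> float:
    """How many 'normal' seconds this host does per real second."""
    return REFERENCE_CALIBRATION_SECONDS / host_calibration_seconds


def normalised_seconds(real_seconds: float, host_calibration_seconds: float) -> float:
    """Real run time corrected for the host's measured speed."""
    return real_seconds * speed_factor(host_calibration_seconds)


if __name__ == "__main__":
    # Same WU on a fast GPU host and an old CPU host (invented timings).
    gpu = normalised_seconds(360.0, host_calibration_seconds=10.0)
    p4 = normalised_seconds(7_200.0, host_calibration_seconds=200.0)
    print(gpu, p4)  # both hosts report the same normalised time
```

Here one "normal" second lasts 0.1 real seconds on the fast host and 2 real seconds on the slow one, which matches the GPU/P4 comparison in the post.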

Link · Joined: 18 Sep 03 · Posts: 834 · Credit: 1,807,369 · RAC: 0

> That is true & why I shared my thoughts on how a weighted system might work in my first message in this thread.

Yeah, but that's not much different from the credit system BOINC has; only the numbers are different. It really does not matter if you get 100 credits for a 0.44 WU and 30 credits for a VHAR, or you count them as 1.0 and 0.3 WU. The numbers are going to be weird anyway and will not have anything to do with the actual number of completed WUs as the project develops and things like increased chirp_resolution or the stuff that comes in v7 are introduced. Or have I got your thoughts wrong? Anyway, I think the current credit system is not bad; it just needs some fine-tuning and also some logic to recognize completely wrong credit claims.

betreger · Joined: 29 Jun 99 · Posts: 11361 · Credit: 29,581,041 · RAC: 66

E@H seems to have it figured out.

Iona · Joined: 12 Jul 07 · Posts: 790 · Credit: 22,438,118 · RAC: 0

Well said, Mark. For myself, I care little or nothing for the 'credits'... they will continue to be just meaningless numbers when there is no relation to anything much at all. I just do S@H and that's it; it's the place where the fairly large number of 'spare cycles' I have is used. Merely contributing to the 'crunching' is sufficient for me..... when you have PCs such as I have available, one has very little to prove!

"I am a Vulcan, I have no ego to bruise" (Captain Spock, of the USS Enterprise, to Admiral James T Kirk, in Star Trek 2). Don't take life too seriously, as you'll never come out of it alive!

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.