Estimates and Deadlines revisited

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

... The Windows stock 5.27 build was done with GCC 3.4.2; the Linux and Mac builds probably used GCC 4.x.x, which might account for the difference. The current MinGW version has GCC 3.4.5, though there's a Technology Preview with GCC 4.2.1. I don't want to speculate about what may change in the future; getting the estimates adapted to reality now is my focus. Setting the VLAR estimate to about 9900 on your chart would give about ±21% accuracy, acceptable but not great. We'll see what comes out of the numbers when I get the other systems done. Joe

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

The data I've gathered, combined with Richard's, seems enough to finish this off. Here's the list of hosts running stock applications for which I got a wide enough spread of data to be useful:

| Host | CPUs | Processor | OS |
|---|---|---|---|
| 3924828 | 1 | Intel(R) Pentium(R) III Mobile CPU 1066MHz | Win2k |
| 2225734 | 1 | Intel(R) Pentium(R) M processor 2.00GHz | WinXP |
| 2994846 | 1 | Intel(R) Pentium(R) 4 CPU 2.40GHz | Linux |
| 1810096 | 1 | Intel(R) Pentium(R) 4 CPU 2.80GHz | WinXP |
| 2037132 | 1 | Intel(R) Pentium(R) 4 CPU 3.20GHz | WinXP |
| 2122553 | 1 | Intel(R) Pentium(R) 4 CPU 3.20GHz | WinXP |
| 2286447 | 1 | Intel(R) Pentium(R) 4 CPU 3.20GHz | WinXP |
| 2068601 | 1 | AMD Sempron(tm) 2800+ | Win2k |
| 3634645 | 1 | AMD Athlon(tm) 64 Processor 3000+ | WinXP |
| 3137240 | 1 | AMD Athlon(tm) 64 Processor 3200+ | Linux |
| 3764381 | 1 | AMD Athlon(tm) 64 Processor 3400+ | WinXP |
| 3913097 | 1 | AMD Athlon(tm) 64 Processor 3500+ | WinXP |
| 3632734 | 1 | AMD Athlon(tm) 64 Processor 3700+ | Vista |
| 1789875 | 1 | AMD Athlon(tm) 64 Processor 3800+ | WinXP |
| 4018306 | 1 | AMD Athlon(tm) 64 FX-55 Processor | Linux |
| 511476 | 2 | Intel(R) Pentium(R) 4 CPU 2.40GHz | WinXP |
| 3723910 | 2 | Intel(R) Pentium(R) 4 CPU 2.40GHz | WinXP |
| 3786101 | 2 | Intel(R) Pentium(R) 4 CPU 2.60GHz | WinXP |
| 3899870 | 2 | Intel(R) Pentium(R) 4 CPU 2.80GHz | Win2k3 |
| 3951032 | 2 | Intel(R) Pentium(R) 4 CPU 2.80GHz | Linux |
| 1965165 | 2 | Intel(R) Pentium(R) D CPU 2.80GHz | WinXP |
| 2043294 | 2 | Intel(R) Pentium(R) 4 CPU 3.00GHz | WinXP |
| 3375370 | 2 | Intel(R) Pentium(R) 4 CPU 3.00GHz | WinXP |
| 3866519 | 2 | Intel(R) Pentium(R) 4 CPU 3.06GHz | WinXP |
| 2869294 | 2 | Intel(R) Pentium(R) 4 CPU 3.40GHz | WinXP |
| 2964014 | 2 | Intel(R) Xeon(TM) CPU 3.40GHz | Linux |
| 2848363 | 2 | Intel(R) Pentium(R) D CPU 3.40GHz | WinXP |
| 3848006 | 2 | AMD Athlon(tm) 64 X2 Dual Core Processor 6000+ | WinXP |
| 3811020 | 4 | AMD Athlon(tm) 64 FX-74 Processor | Linux |
| 3983844 | 4 | Dual Core AMD Opteron(tm) Processor 280 | Linux |
| 2842066 | 8 | AMD Opteron(tm) Processor 885 | Linux |
| 3842351 | 8 | Dual-Core AMD Opteron(tm) Processor 8220 SE | Win2k3 |

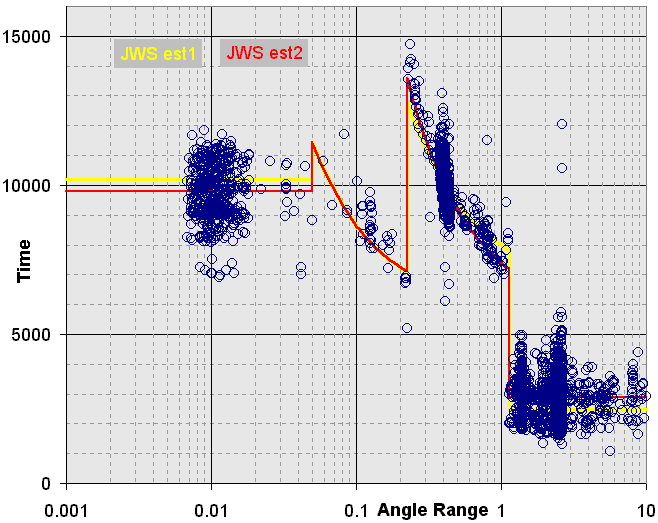

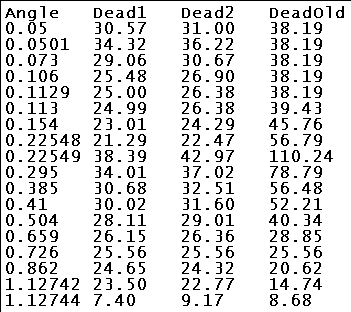

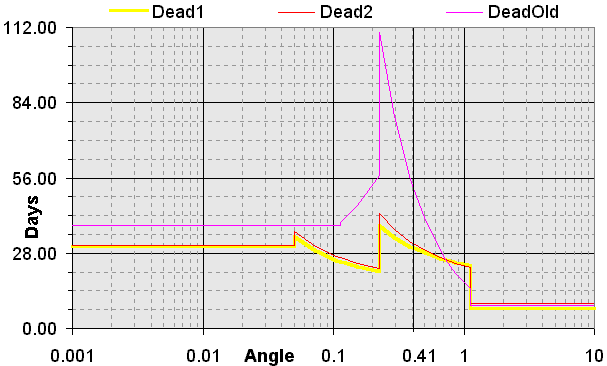

And here's a composite graph of the data points from those hosts. There's also a larger version (1236x849, 26568 bytes). With that many hosts, I decided not to try color-coding each one separately. The yellow line is the estimates from my original post; the red/orange line shows them as I'm revising them to fit the observed data. Here are the revised formulae:

- AR 0 through 0.05: N * 0.98
- AR above 0.05 through 0.22548: N * (0.586 + 0.028/AR)
- AR 0.22549 through 1.1274: N * (0.56 + 0.18/AR)
- AR 1.1275 and up: N * 0.29

As before, N is the approximate time to do an angle range 0.41 WU. Scaling the formulae to give the 25.56-day deadline at angle range 0.726, as validated by the P60 test, gives these numbers for deadlines in days:

And here are those numbers graphed:

Dead1 is my original posting, Dead2 the revision, and DeadOld what the splitter is producing now. Joe
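The revised formulae and the deadline scaling can be sketched in Python as below. This is an illustration, not the splitter's code: `relative_time` and `deadline_days` are hypothetical names, and because the post rounds the top band edge ("thru 1.1274", "1.1275 and up"), the cut is assumed here to sit at 1.12743, between the 1.12742 and 1.12744 rows of the deadline table posted later in the thread. The deadline is assumed to be simply proportional to the time curve, anchored at 25.56 days for AR 0.726.

```python
# Revised estimate curve, in units of N (time for an angle range 0.41 WU).
VHAR_CUT = 1.12743  # assumed cut point; the post rounds it to 1.1274 / 1.1275

def relative_time(ar: float) -> float:
    """Estimated processing time for angle range `ar`, as a multiple of N."""
    if ar <= 0.05:              # VLAR
        return 0.98
    if ar <= 0.22548:
        return 0.586 + 0.028 / ar
    if ar < VHAR_CUT:
        return 0.56 + 0.18 / ar
    return 0.29                 # VHAR ("shorties")

# Anchor from the post: 25.56-day deadline at angle range 0.726.
REF_AR, REF_DAYS = 0.726, 25.56

def deadline_days(ar: float) -> float:
    """Deadline in days, scaled so that deadline_days(REF_AR) == REF_DAYS."""
    return REF_DAYS * relative_time(ar) / relative_time(REF_AR)

if __name__ == "__main__":
    for ar in (0.01, 0.1, 0.41, 0.726, 2.0):
        print(f"AR {ar:<6} time {relative_time(ar):.3f} N  "
              f"deadline {deadline_days(ar):.2f} days")
```

Keeping the curve separate from the scale factor means the same `relative_time` could, in principle, be multiplied by N expressed in FLOPs to get an estimate, or by a day count to get a deadline. A quick sanity check is that `relative_time(0.41)` comes out at about 0.999, i.e. N itself.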

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

Having said I wouldn't bother to post any more charts, here are some more charts! Positively the last, and mainly to show Joe's new curve, but a few more data points [edit: total now 11,127] have inevitably crept in.

Apart from the new curve, there's not much else to say: a few more wild points excluded from Intel_Quad_Windows_XP, and the same averaged normalisation as last time.

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

For the last 3 weeks I've been using a technique which has greatly reduced one of the ways the bad estimates have annoyed me. It's probably only applicable to others who don't run multiple projects, only connect intermittently, and don't run a huge queue, but I'll pass it on anyhow.

I use an older version of BOINC, so only the "connect interval" defines queue length; it's set to 1.125 days (27 hours) for my hosts. I actually want to connect once a day; the extra is so the time of day can vary depending on what else I'm doing. Letting BOINC manage everything itself resulted in queues that lasted anywhere from 14 to 52 hours. Obviously I could have doubled the setting, but I live in a rural location and don't really want to have up to 4 days of work on hand if there's a bad storm and I'm without power for several days.

The technique I've been using is to adjust the <rsc_fpops_est> values after each day's work fetch. The splitter produces a value near 1.353e14 for angle range 0.41. I leave any values for angle ranges 0.3556 to 0.4571 as they are (roughly 1.1e14 to 1.6e14 estimate values); they're within 10% or so of being relatively OK. For VHAR (aka shorties) it produces 22500000000000.000000 exactly, and I change those to 40000000000000.000000 exactly. For VLAR (angle range below 0.05) it produces 99000000000000.000000 exactly, and I change those to 132300000000000.000000 exactly. Those two changes are easily done with a text editor's replace function. For the very occasional WU which falls outside those ranges, I just use a calculator and my estimate formulas with N=1.353e14 after checking the WU header to see what the <true_angle_range> is.

The result of that couple of minutes' work once a day is a Duration Correction Factor which is very stable, and my hosts know fairly accurately how much work to request.
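The two text-editor replacements above can be sketched in Python. This is a minimal sketch under stated assumptions: the post doesn't name the file being edited, so it is assumed here to be the BOINC client's state file (e.g. client_state.xml) already read into a string; `adjust_estimates` and `values_to_check` are hypothetical helper names; and the 1.1e14 to 1.6e14 "leave alone" band is the post's rough figure.

```python
import re

# Exact splitter values from the post, mapped to the replacements used.
REPLACEMENTS = {
    "22500000000000.000000": "40000000000000.000000",   # VHAR ("shorties")
    "99000000000000.000000": "132300000000000.000000",  # VLAR (AR below 0.05)
}
TAG = re.compile(r"<rsc_fpops_est>([^<]*)</rsc_fpops_est>")
# Rough band left untouched (angle ranges about 0.3556 to 0.4571).
KEEP_LOW, KEEP_HIGH = 1.1e14, 1.6e14

def adjust_estimates(xml_text: str) -> str:
    """Swap only the two exact VHAR/VLAR values; other tags are left as-is."""
    def fix(match: re.Match) -> str:
        value = match.group(1).strip()
        if value not in REPLACEMENTS:
            return match.group(0)  # untouched, including any whitespace
        return f"<rsc_fpops_est>{REPLACEMENTS[value]}</rsc_fpops_est>"
    return TAG.sub(fix, xml_text)

def values_to_check(xml_text: str) -> list[str]:
    """Estimates that are neither the two fixed values nor in the rough band."""
    flagged = []
    for raw in TAG.findall(xml_text):
        value = raw.strip()
        if value in REPLACEMENTS:
            continue
        if not KEEP_LOW <= float(value) <= KEEP_HIGH:
            flagged.append(value)
    return flagged
```

Anything `values_to_check` reports would still need the manual step described in the post: look up <true_angle_range> and apply the estimate formulas with N=1.353e14. That step is deliberately left out here.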
The Scheduler is of course still seeing the unadjusted estimates; it will send somewhat more work than is asked for if some are VLAR or VHAR WUs, or slightly less if some are in the badly overestimated 0.2 to 0.35 range of angles. But so far there's never been more than 40 hours nor less than 23 hours of work, a worthwhile improvement IMO.

I'm only using the technique on 2 of my 3 hosts. The P200 takes over a day for even a shorty, so its queue setting basically keeps it from getting more than one WU per request, while still letting it fetch the next one when the previous WU is in its last day. Actually, I usually let it run a WU to completion before asking for another if it is going to complete while I'm awake. I have BoincLogX set to play a distinctive sound when the work is done, and letting it go idle for a few minutes represents such a small fraction of a WU that it doesn't bother me. Joe

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

"Other small news: recent splitter updates include (a) more realistic deadlines, i.e. they have been reduced 25%, and ...." (Matt Lebofsky, message 709438, posted 7 Feb 2008 22:58:44 UTC)

I've recently been sent tasks 743711904 and 743711940. Both workunits were created (=split?) at 8 Feb 2008 11:08 UTC, some 12 hours after Matt's post. Yet they've been given time allowances of 82 days 19:50 hours, with a deadline of 2 May 2008. These two new tasks are at AR=0.283440. By comparison, older work at AR=0.272446 was given an allowance of 87 days, and at AR=0.287628 an allowance of 81.3 days. Not much sign of a 25% reduction there, at an angle range that I would have expected to be a target of any adjustment. So is the deadline adjustment still in testing, or has there been a finger-fumble between intention and implementation?

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

"Other small news: recent splitter updates include (a) more realistic deadlines, i.e. they have been reduced 25%, and ...."

I will not be surprised if it's a week or two before we see work made with the new splitter code; it includes the very critical blanking of the radar signal. I think that's going to be touchy: they have to replace the data affected by the radar with a pseudo-random sequence which matches the noise floor before and after the replaced section, so the replacement doesn't cause false "signal" detections.

To elaborate on what Matt said, Eric's message when he checked in the estimate changes was: "Changed computation of workunit FLOP estimates to better match measured amounts." The second line is the 25% reduction. The first line is the revised estimates developed in this thread with your help and that of everyone else who commented, etc. So the deadline reduction is 25% from the deadlines I posted earlier, and ends up with this number of days:

| Angle range | Deadline (days) |
|---|---|
| 0.001 | 23.25 |
| 0.05 | 23.25 |
| 0.0501 | 27.16 |
| 0.073 | 23.00 |
| 0.106 | 20.17 |
| 0.154 | 18.22 |
| 0.22548 | 16.85 |
| 0.22549 | 32.23 |
| 0.295 | 27.76 |
| 0.385 | 24.38 |
| 0.41 | 23.70 |
| 0.504 | 21.76 |
| 0.659 | 19.77 |
| 0.726 | 19.17 |
| 0.862 | 18.24 |
| 1.12742 | 17.08 |
| 1.12744 | 7.00 |
| 10 | 7.00 |

The 7.00 for the very high angle ranges is actually a minimum Eric added; from the formula it would have been 6.88 days. So we have the formula value for work fetch and the displayed "Time to completion", but the deadline has a little extra, possibly for those who can only access some of their hosts once a week. Joe
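The table can be cross-checked with a short Python sketch. It assumes, rather than quotes from the splitter, that each deadline is 0.75 × 25.56 days × f(AR)/f(0.726), where f is the revised estimate curve from earlier in the thread, floored at the 7-day minimum Eric added. The band edge near 1.1274 is taken at 1.12743, an assumption read off the 1.12742 and 1.12744 rows; all names are hypothetical.

```python
REF_AR, REF_DAYS = 0.726, 25.56   # pre-reduction anchor from the earlier post
REDUCTION = 0.75                  # the 25% cut
MIN_DAYS = 7.0                    # minimum added for very high angle ranges

def relative_time(ar: float) -> float:
    """Revised estimate curve, as a multiple of the time for an AR 0.41 WU."""
    if ar <= 0.05:
        return 0.98
    if ar <= 0.22548:
        return 0.586 + 0.028 / ar
    if ar < 1.12743:              # assumed cut between the 1.12742 and 1.12744 rows
        return 0.56 + 0.18 / ar
    return 0.29

def formula_days(ar: float) -> float:
    """Reduced deadline straight from the formula, before the minimum."""
    return REDUCTION * REF_DAYS * relative_time(ar) / relative_time(REF_AR)

def deadline_days(ar: float) -> float:
    return max(formula_days(ar), MIN_DAYS)

# (angle range, deadline in days) as posted
POSTED = [(0.001, 23.25), (0.05, 23.25), (0.0501, 27.16), (0.073, 23.00),
          (0.106, 20.17), (0.154, 18.22), (0.22548, 16.85), (0.22549, 32.23),
          (0.295, 27.76), (0.385, 24.38), (0.41, 23.70), (0.504, 21.76),
          (0.659, 19.77), (0.726, 19.17), (0.862, 18.24), (1.12742, 17.08),
          (1.12744, 7.00), (10, 7.00)]

if __name__ == "__main__":
    for ar, posted in POSTED:
        print(f"{ar:>8} computed {deadline_days(ar):6.2f}  posted {posted:5.2f}")
```

Under these assumptions every computed value rounds to the posted figure, and `formula_days` for the two highest angle ranges gives about 6.88 days, which is where the 7-day minimum takes over.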

Brian Silvers Joined: 11 Jun 99 Posts: 1681 Credit: 492,052 RAC: 0

Congratulations to you, Richard, and anyone else that contributed to this. Thanks to Eric for listening...

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

Will this task be one of the last of the 108-day deadlines? It's due 28 May 2008.

Congratulations Joe, both for the initiative in deriving and refining the new curve, and also for knowing how to get it implemented effectively with the minimum of fuss.

Josef W. Segur Joined: 30 Oct 99 Posts: 4504 Credit: 1,414,761 RAC: 0

Work I was given today has the new estimates and deadlines. Apparently the new splitter code which Matt noted had more changes than he realized. Because the estimates are mostly lower, Duration Correction Factor will increase for most hosts when they finish the first WU with the adjustments. That will make the queue look longer and inhibit work downloads temporarily. Also, there will be reissues of WUs from previous splits which will have the old estimates and deadlines for a while. Joe
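Why a lower estimate makes the queue look longer can be shown with a toy Python model. Everything here except the 1.353e14 figure quoted earlier in the thread is an assumption: the runtime estimate is modelled as rsc_fpops_est divided by a hypothetical benchmark speed, times DCF, and DCF is simply set to actual/raw estimate after a task finishes. The real BOINC client adjusts DCF more gradually, and the new estimate of 1.0e14 is a made-up value.

```python
HOST_FLOPS = 1.0e9      # hypothetical benchmark speed, FLOP/s

def raw_hours(fpops_est: float) -> float:
    """Runtime implied by an estimate before any DCF correction."""
    return fpops_est / HOST_FLOPS / 3600

def queue_hours(estimates: list[float], dcf: float) -> float:
    """How much work the client believes is on hand (toy model)."""
    return sum(raw_hours(e) for e in estimates) * dcf

OLD_EST = 1.353e14      # old splitter estimate for AR 0.41 (from the thread)
NEW_EST = 1.0e14        # hypothetical lower new estimate for the same work
ACTUAL_HOURS = raw_hours(OLD_EST)   # assume the old estimate was accurate

dcf_before = ACTUAL_HOURS / raw_hours(OLD_EST)  # 1.0
dcf_after = ACTUAL_HOURS / raw_hours(NEW_EST)   # rises once a new-estimate WU finishes

# A queue mixing reissues (old estimates) with newly split work.
queue = [OLD_EST] * 3 + [NEW_EST] * 3
true_hours = len(queue) * ACTUAL_HOURS

if __name__ == "__main__":
    print(f"DCF {dcf_before:.3f} -> {dcf_after:.3f}")
    print(f"queue appears {queue_hours(queue, dcf_before):.1f} h, then "
          f"{queue_hours(queue, dcf_after):.1f} h (true {true_hours:.1f} h)")
```

In this model the jump in DCF inflates the old-estimate reissues past their true length, so the client sees more work on hand than it really has and holds off on downloads until those reissues clear.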

Adrian Taylor Joined: 22 Apr 01 Posts: 95 Credit: 10,933,449 RAC: 0

Wow! That's some serious work Joseph and the others here have put in over the last few months, well done! I'd like to add my thanks and appreciation to the bottom of this thread... keep up the good work, folks :-) Regards, Adrian

Fred J. Verster Joined: 21 Apr 04 Posts: 3252 Credit: 31,903,643 RAC: 0

Wow! Thanks again for your technical explanations, Joe and Matt; you're doing a terrific job keeping us informed about the 'technical stuff'. The graphs, too, are clear and show the actual influence of true angle range (AR). I also noticed that the new deadlines are being used. I got some 216 WUs with deadlines from 23 to 30 March, some new ones (23feb08ab/ad), and some that are 1 year old.

Neil Blaikie Joined: 17 May 99 Posts: 143 Credit: 6,652,341 RAC: 0

Excellent job to all involved. Personally I am enjoying crunching SETI Beta at the moment; I've got a ton of workunits, all with March 13, 2008 deadlines. It's nice to give the main SETI program a break for a bit while it crunches all the Beta units on high priority. Keep up the good work; it makes life so much easier with many people helping the project rather than just those at Berkeley.

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.