Why can't I maintain a cache of x-days worth of WUs?


Christian Seti (user) · Joined: 31 May 99 · Posts: 38 · Credit: 73,899,402 · RAC: 62

There's something wrong with the way BOINC is failing to maintain a cache of workunits on my network. My setup: 250 computers running BOINC (all version 5.10.xx), some Windows, some Macs. This alone suggests that the problem is not specific to a particular version or platform. In the General Settings on the BOINC website, I have indicated that my computers are connected all the time ("connect to the Internet every 0 days" in the General prefs) and set "maintain work for an additional 10 days" (the largest the system will permit). Despite this, over a period such as we have had recently, where workunit production fell significantly behind demand for about a week, my BOINC user has shown a big dip in RAC (as seen as). Why would this be the case when, if the settings were working properly, I should have a ten-day buffer of work to tide me over until workunit production picks up? In theory, even a total workunit drought of ten days should result in no drop-off in credit, so long as each computer's cache of work was full to start with. These outages are sometimes unavoidable, but I am concerned that I am missing something when the provided mechanism fails to maintain enough work at my end when there isn't enough to go around.

--------------------------------- Nathan Zamprogno http://baliset.blogspot.com

Grant (SSSF) · Joined: 19 Aug 99 · Posts: 13736 · Credit: 208,696,464 · RAC: 304

> In theory, even a total workunit drought of ten days should result in no dropoff in credit so long as each computer's cache of work was full to start with.

It won't: other people will be affected too, and it will take time for their results to be returned and credit granted. Also, there have been changes to the credit multiplier for the new science application, combined with a change to only 2 copies of a Work Unit initially being released, combined with deadlines as long as 8 weeks. End result: your average credit is going to be up & down like a yoyo for some time to come, and your large cache will have a big effect on others' RAC as well.

I run a 4 day cache & have only run out of work about 3 times in the last 5 or 6 years.

Grant Darwin NT

Christian Seti (user) · Joined: 31 May 99 · Posts: 38 · Credit: 73,899,402 · RAC: 62

> I run a 4 day cache & have only run out of work about 3 times in the last 5 or 6 years.

How is this possible when outages far longer than this have occurred over that time?

I can see how the fact that workunits get processed by 2 or 3 users might hold up the allocation of credit, but it doesn't explain why, even when there is plenty of work to go around, the Tasks tab of any of my clients usually shows only a handful of workunits "in the pipe": the one or two being processed and between 0 and 4 "extras" waiting. This is despite the fact that the computer would finish those off within only a day or two.

Thus my question stands. If (a) there is plenty of work waiting to be sent (and again, I know this isn't always the case, but I confine my observation to when I know there is) and (b) I've asked my computers to maintain a buffer of ten days, why is there rarely that much work buffered at my end?

Alinator · Joined: 19 Apr 05 · Posts: 4178 · Credit: 4,647,982 · RAC: 0

Well, there are several facets to the answer to that.

Historically, with the early versions of the CC, the estimating algorithms were very crude, and the benchmarks regularly produced readings all over the place, so hosts normally carried far less than what you set. Later, as the CC became more sophisticated, things improved, but a change was made from trying to fill the cache on a per-project basis to filling it for the host overall. This could result in diverging from what you expect in some cases. Variable deadlines also play a role in pinching off what you think would be an appropriate cache load.

Currently, and looking with an eye to SAH only, we have to deal with running LF and MB data at the same time. As a hedge against issues, the project-side estimates for MB WU runtime were increased far in excess of the actual runtime increase, so the Scheduler currently sends far fewer WUs for a given number of seconds of work than it would for LF WUs. As the CC adjusts the DCF this should improve somewhat, but since in all likelihood we're going to be a 'mixed bag' operation from now on (don't forget about AP in the bullpen), I'd expect the team to leave the project-side estimates on the really conservative side.

Alinator
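
To make the effect of an inflated estimate concrete, here is a rough Python sketch. It assumes a simplified model in which the client asks for a number of seconds of work and the scheduler keeps sending tasks until the sum of their estimated runtimes (scaled by the host's DCF) covers the request. The function name `tasks_sent` and all runtimes are hypothetical, not taken from the SETI@home or BOINC code.

```python
import math

def tasks_sent(requested_seconds: float, est_runtime_s: float, dcf: float = 1.0) -> int:
    """Hypothetical model: number of tasks whose DCF-scaled estimated
    runtime adds up to (at least) the requested seconds of work."""
    per_task = est_runtime_s * dcf
    return math.ceil(requested_seconds / per_task)

request = 10 * 86400  # ten days of work for one CPU (illustrative)

# Suppose a task really takes 2 hours, but the project-side estimate says 8.
print(tasks_sent(request, est_runtime_s=2 * 3600))             # 120
print(tasks_sent(request, est_runtime_s=8 * 3600))             # 30
# Once the client's DCF has drifted down to 0.25, the effective estimate is realistic again.
print(tasks_sent(request, est_runtime_s=8 * 3600, dcf=0.25))   # 120
```

Under this model, a fourfold overestimate means a quarter as many tasks for the same "10 days" request, until the DCF catches up.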

1mp0£173 · Joined: 3 Apr 99 · Posts: 8423 · Credit: 356,897 · RAC: 0

Please tell us your "connect every 'x' days" setting, and your "extra days" setting.

Christian Seti (user) · Joined: 31 May 99 · Posts: 38 · Credit: 73,899,402 · RAC: 62

> Please tell us your "connect every 'x' days" setting, and your "extra days" setting.

I did, in my original question: "Connect every '0' days" (which the explanatory text on the website suggests means 'being connected to the internet all the time', which they are) and "maintain work for an extra '10' days".

To the replier "Alinator": thanks for taking the time to reply. Despite being a sysadmin and a SETI@Homer since the very week it all started, I got a bit lost in your explanation. You use the following abbreviations and I do not know what they mean: CC, LF, MB, AP.

If I'm hearing you correctly, factors other than whether there are plenty of workunits to send are having an effect (and I'm only doing SAH, not any other BOINC project, FWIW). OK.

Would there be any benefit from increasing the "connect to the Internet" interval from 0 to 10 days? Am I correct in assuming this would allow the computers to keep a buffer of (up to) 20 days instead of 10? Or am I reading this wrong?

Thanks again.

KWSN - MajorKong · Joined: 5 Jan 00 · Posts: 2892 · Credit: 1,499,890 · RAC: 0

> Please tell us your "connect every 'x' days" setting, and your "extra days" setting.

- CC = the BOINC client. 'The BOINC Core Client' was an old term for it.
- LF = the old antenna feed at Arecibo, the 'line feed'.
- MB = the new multibeam antenna feed at Arecibo.
- AP = AstroPulse, another science app being developed by the project to re-crunch the old (and eventually new) data from the tapes using different routines and looking for a different type of signal. It's currently in beta testing over on the Beta project, and last I saw was on v4.18.

----------------

IMO, there would not be much benefit to increasing the 'connect every' interval. To the best of my knowledge, you are still going to be capped at 10 days.

There used to be an issue that sometimes happened with the connect interval set to 0, so some people were recommending 0.01 (but I have been running with 0 for quite some time, and it never happened to me). What happened was that the client reported the result immediately after upload, before the servers at Berkeley could catch up enough to 'know about' the upload, so the results errored out. But I do believe that it has been fixed.

Right now, I run a 0 interval and 1 day 'additional' on mine. I usually have 1 SETI result in process and 4 or 5 waiting on this AMD64X2. It's also running PrimeGrid on the other core (with currently 191 of the short, 6 or 7 minute each TPS results waiting).

You can really get by with a much smaller cache level, especially since you declare a connect interval of 0. An extra of 3 days should be more than enough. Huge caches are really counter-productive to the project, bloating the databases. It wouldn't surprise me at all (though I have no direct proof of this) to learn that the project admins had the scheduler in some sort of 'stingy' mode to help spread the workload around.

You might want to try reducing the extra days figure down from 10 slowly to see if you get more by asking for less. But then, I had it on 3 days additional during and right after the outage, and only recently dropped it back down to 1 day additional, and I didn't see much of a change in how much I had cached. YMMV, VWP, SSFD.

https://youtu.be/iY57ErBkFFE #Texit Don't blame me, I voted for Johnson(L) in 2016. Truth is dangerous... especially when it challenges those in power.
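
As a rough illustration of how the two settings could interact, here is a Python sketch of a low-water/high-water buffer. It assumes, as a simplification, that the client asks for work only when its queue is at or below the 'connect every' interval and then asks for enough to reach 'connect every' plus 'additional' days. The function `seconds_to_request` and its parameters are hypothetical; the real BOINC work-fetch logic also weighs deadlines, resource shares and debts.

```python
def seconds_to_request(queued_days: float, connect_every_days: float,
                       additional_days: float, ncpus: int = 1) -> float:
    """Hypothetical low/high-water model of a work buffer.

    Low-water mark:  connect_every_days
    High-water mark: connect_every_days + additional_days
    """
    low = connect_every_days
    high = connect_every_days + additional_days
    if queued_days > low:
        return 0.0  # still above the low-water mark: ask for nothing
    # At or below the low-water mark: ask for enough to refill to the high-water mark.
    return (high - queued_days) * 86400 * ncpus

print(seconds_to_request(0.5, 0, 10))  # 0.0, queue not empty yet
print(seconds_to_request(0, 0, 10))    # 864000, ten days' worth
print(seconds_to_request(0.5, 1, 3))   # 302400.0, tops up to four days
```

Under this toy model, a "0 + 10" setting only asks for work once the queue is empty, while something like "1 + 3" keeps topping up a small queue; even a full ten-day request can still be cut back by the deadline checks discussed in this thread.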

Grant (SSSF) · Joined: 19 Aug 99 · Posts: 13736 · Credit: 208,696,464 · RAC: 304

> Would there be any benefit from increasing the "connect to the Internet" delay from 0 to 10 days? Am I correct in assuming this would allow the computers to keep a buffer of (up to) 20 days instead of 10?

I would expect that to reduce the amount of work you carry even further, as the Scheduler would be even more concerned about missing deadlines.

Grant (SSSF) · Joined: 19 Aug 99 · Posts: 13736 · Credit: 208,696,464 · RAC: 304

> I run a 4 day cache & have only run out of work about 3 times in the last 5 or 6 years.

There have been long outages, but outages of more than 3 days are rare. And other than 2 or 3 of them, it has been possible to pick up some work sporadically during the others.

Keck_Komputers · Joined: 4 Jul 99 · Posts: 1575 · Credit: 4,152,111 · RAC: 1

The connect every setting should not be set higher than 1.8 days; anything higher may result in less work due to short deadlines on some tasks. Although I have not tested it or seen a reliable report, I would expect that the total of the connect and additional settings should not exceed 4 days, to prevent deadline problems. In other words, 4 days is about the maximum queue you can reliably achieve with any combination of settings at this project; anything higher and work fetch will periodically be suspended due to deadline pressure. When this happens, the client may be in a situation where it must get all but one task finished before it can get more work.

Since SETI has been using BOINC, the only time my CPUs have been idle was when my internet connection was down for a week or more due to Hurricane Katrina. It wouldn't have happened then, except that my CPDN model crashed about 4 or 5 days into the outage.

Connect every 0.1 to 0.5, additional less than 1.0. Backup projects are the way to go to prevent idle CPUs.

BOINC WIKI   BOINCing since 2002/12/8
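
The rule of thumb above can be written as a quick sanity check. This Python sketch uses Keck_Komputers' suggested limits (1.8 days for 'connect every', roughly 4 days in total) and assumes a shortest deadline of about 4.5 days, the figure quoted elsewhere in this thread. The function `check_cache_settings` and its exact thresholds are illustrative assumptions, not values read from the BOINC client.

```python
def check_cache_settings(connect_every_days: float, additional_days: float,
                         shortest_deadline_days: float = 4.5) -> list[str]:
    """Return warnings for settings likely to trigger deadline pressure.

    Thresholds follow the forum rule of thumb (hypothetical, not BOINC code).
    """
    warnings = []
    if connect_every_days > 1.8:
        warnings.append("connect interval above 1.8 days")
    total = connect_every_days + additional_days
    if total > 4.0:
        warnings.append(f"total queue of {total:g} days exceeds ~4 days")
    if total >= shortest_deadline_days:
        warnings.append("queue is at least as long as the shortest deadline")
    return warnings

print(check_cache_settings(0, 10))
# ['total queue of 10 days exceeds ~4 days', 'queue is at least as long as the shortest deadline']
print(check_cache_settings(0.1, 1))
# []
```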

n7rfa · Joined: 13 Apr 04 · Posts: 370 · Credit: 9,058,599 · RAC: 0

> Please tell us your "connect every 'x' days" setting, and your "extra days" setting.

Some information that might have an impact:

What are the last 4 numbers when you look at the details of your system under "My Account" -> "Computers on this account"?

Do you have a "global_prefs_override.xml" file in your BOINC directory? If so, what are the contents?
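
With 250 hosts, checking for an override file by hand is tedious, so here is a small Python sketch that reports whether `global_prefs_override.xml` exists and which cache values it sets. The tag names `work_buf_min_days` and `work_buf_additional_days` are an assumption about how the 'connect every' and 'additional' settings are stored; compare them with a real file before relying on the script. The sample XML is made up.

```python
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Optional

# Assumed tag names for "connect every X days" and "additional Y days".
CACHE_TAGS = ("work_buf_min_days", "work_buf_additional_days")

def read_cache_overrides(xml_text: str) -> dict[str, float]:
    """Return the cache-related values found in an override file's text."""
    root = ET.fromstring(xml_text)
    found = {}
    for tag in CACHE_TAGS:
        elem = root.find(tag)  # direct child of the root element
        if elem is not None and elem.text:
            found[tag] = float(elem.text)
    return found

def check_boinc_dir(boinc_dir: str) -> Optional[dict[str, float]]:
    """None if there is no override file, else the cache values it sets."""
    path = Path(boinc_dir) / "global_prefs_override.xml"
    if not path.exists():
        return None
    return read_cache_overrides(path.read_text())

sample = """<global_preferences>
   <work_buf_min_days>0.1</work_buf_min_days>
   <work_buf_additional_days>0.5</work_buf_additional_days>
</global_preferences>"""
print(read_cache_overrides(sample))
# {'work_buf_min_days': 0.1, 'work_buf_additional_days': 0.5}
```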

Alinator · Joined: 19 Apr 05 · Posts: 4178 · Credit: 4,647,982 · RAC: 0

> To the replier "Alinator"- thanks for taking the time to reply. Despite being a sysadmin and SETI@Homer since the very week it all started, I got a bit lost in your explanation. You use the following abbreviations and I do not know what they mean:

Sorry. I see Kong defined most of the acronyms except for DCF.

(R)DCF = (Result) Duration Correction Factor. It is displayed as the last line on your Computer Summary page, just above the Location drop-down box.

Alinator

1mp0£173 · Joined: 3 Apr 99 · Posts: 8423 · Credit: 356,897 · RAC: 0

> Please tell us your "connect every 'x' days" setting, and your "extra days" setting.

In my opinion, Connect every '0' is not handled all that well. A small non-zero number is a better idea, maybe 0.08.

Two reasons I can think of for caching problems:

1) an inaccurate duration correction factor.
2) errors, possibly caused by excessive overclocking, or a short connect interval.

If #1, this will sort itself out as BOINC learns the difference between predicted time and actual time. I can't look at #2 because your computers are hidden, but if you are "throwing errors" constantly, BOINC will intentionally starve your computer.
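
How BOINC "learns" the correction factor can be sketched as an asymmetric update: raise the DCF at once when tasks run noticeably longer than predicted, and lower it slowly when they finish early, since underestimating is worse than overestimating. This Python version is a simplified sketch loosely modeled on that idea; the 1.1 threshold and the 0.9/0.1 blending weights are assumptions for illustration, not values confirmed in this thread.

```python
def update_dcf(dcf: float, actual_s: float, uncorrected_estimate_s: float) -> float:
    """Asymmetric duration-correction-factor update (illustrative weights).

    raw_ratio: actual runtime relative to the project's uncorrected estimate.
    adj_ratio: actual runtime relative to the DCF-corrected estimate.
    """
    raw_ratio = actual_s / uncorrected_estimate_s
    adj_ratio = actual_s / (uncorrected_estimate_s * dcf)
    if adj_ratio > 1.1:
        # Ran noticeably longer than predicted: jump straight to the observed ratio.
        return raw_ratio
    # Otherwise drift slowly toward the observed ratio.
    return 0.9 * dcf + 0.1 * raw_ratio

dcf = 1.0
for _ in range(10):
    # Estimate says 9 hours, tasks actually take 3 hours.
    dcf = update_dcf(dcf, actual_s=3 * 3600, uncorrected_estimate_s=9 * 3600)
print(round(dcf, 3))  # 0.566
```

In this sketch, ten tasks after the estimate is tripled, the DCF has only come down to about 0.57 rather than the "true" 0.33, which is why an inflated estimate takes a while to sort itself out.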

doublechaz · Joined: 17 Nov 00 · Posts: 90 · Credit: 76,455,865 · RAC: 735

I have a problem where computers that I've added to the project recently can't get any SETI work, or no more than one or two WUs, but computers that I have had in the project for years have a cache of 25 to 100 WUs. They all run with three projects, with these resource shares:

- seti = 10000
- abc = 5
- spin = 5

Much of the time they are busy working on the two alternate projects, but can't get seti, and don't show the others as overworked. None of them are throwing errors. Any guesses?

Richard Haselgrove · Joined: 4 Jul 99 · Posts: 14650 · Credit: 200,643,578 · RAC: 874

Two reasons I can think of for caching problems: Adding: 3) changing settings for wrong venue - changing 'default' setting when computers are on 'home', or v.v. 4) playing with local preferences in BOINC manager, and not clearing them - local preferences override webset preferences. |

|

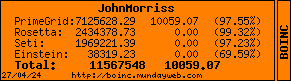
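
Points 3 and 4 come down to which set of preferences actually wins on a host. Here is a minimal Python sketch of that precedence, assuming web preferences are stored per venue ("default", "home", "work", "school") and that a local override, if present, replaces the web values setting by setting. The function `effective_prefs` and its data structures are hypothetical; they only illustrate why editing the wrong venue, or forgetting a local override, changes nothing on the host.

```python
from typing import Optional

def effective_prefs(web_prefs_by_venue: dict[str, dict[str, float]],
                    host_venue: str,
                    local_override: Optional[dict[str, float]] = None) -> dict[str, float]:
    """Hypothetical precedence: the host's venue (or default), then any local override."""
    # A host with no venue set (or an unknown one) falls back to the default prefs.
    prefs = dict(web_prefs_by_venue.get(host_venue) or web_prefs_by_venue["default"])
    if local_override:
        prefs.update(local_override)  # local values win over web values
    return prefs

web = {
    "default": {"connect_every_days": 0.0, "additional_days": 10.0},
    "home": {"connect_every_days": 0.0, "additional_days": 1.0},
}
# Editing 'default' has no effect on a host assigned to 'home'.
print(effective_prefs(web, "home"))
# A leftover local override beats both.
print(effective_prefs(web, "home", {"additional_days": 0.25}))
```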

john_morriss · Joined: 5 Nov 99 · Posts: 72 · Credit: 1,969,221 · RAC: 48

> Two reasons I can think of for caching problems:

Adding:

5) A low or zero value for `<active_frac>`, found in the `<time_stats>` section of client_state.xml. I did this to myself by playing with the system clock while BOINC was running in the background.

John McLeod VII · Joined: 15 Jul 99 · Posts: 24806 · Credit: 790,712 · RAC: 0

> Two reasons I can think of for caching problems:

Not only `<active_frac>` but also `<on_frac>` and `<cpu_efficiency>`.

Note that a project that is under time pressure will not fetch work. A host that has sufficient work and has a task under time pressure will not get work from anywhere.

With abc and spin having such low resource shares, any time they have work on the host they will be under time pressure, and work fetch will be suspended while they get through their work. Eventually (fairly rapidly in this case) the Long Term Debt (LTD) of these two projects will drop enough that they will not be asked for work unless S@H cannot supply work (or is under time pressure and the queue is not full).

Since some deadlines in S@H are as short as 4.5 days, any setting for "connect every X" that is much above 1 is going to cause time pressure when one of these short-deadline tasks is on the host. Any combination of "connect every X" and "extra work" that sums to much more than 4 days will have the same effect.

It is also possible that you have some long-running tasks with deadlines a month away. These exist in S@H as well, and a small number of these will fill your queue.

BOINC WIKI
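
Those `<time_stats>` values matter because the client converts queued CPU time into wall-clock time when judging how full its queue is and whether deadlines are at risk. This Python sketch assumes, as a simplification, that the queue only advances while BOINC is running (`on_frac`), allowed to compute (`active_frac`) and using the CPU at `cpu_efficiency`, and simply divides by their product; the function name and numbers are hypothetical.

```python
def wall_clock_days(cpu_seconds_queued: float, on_frac: float,
                    active_frac: float, cpu_efficiency: float,
                    ncpus: int = 1) -> float:
    """Approximate wall-clock days needed to finish the queued work (simplified model)."""
    usable = on_frac * active_frac * cpu_efficiency
    if usable <= 0:
        return float("inf")  # a zero fraction makes any queue look endless
    return cpu_seconds_queued / (usable * ncpus) / 86400

queued = 2 * 86400  # two days of CPU time, one CPU
print(wall_clock_days(queued, 1.0, 1.0, 1.0))   # 2.0
print(wall_clock_days(queued, 1.0, 0.25, 1.0))  # 8.0
```

Under this model, a host that wrongly believes it is active only a quarter of the time thinks two days of work will take eight, which is past a 4.5-day deadline, so it would stop fetching long before the cache you asked for is reached.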

doublechaz · Joined: 17 Nov 00 · Posts: 90 · Credit: 76,455,865 · RAC: 735

The machines with problems are all dual 1.2 GHz. They have 100% uptime and are marked as such in the venue. They just refuse to cache seti, although they will cache several abc or spin units. My PII 350 always has more WUs cached (2-5) than the new dual PIII 1200, but the old PIII 1000 has a couple dozen.

1mp0£173 · Joined: 3 Apr 99 · Posts: 8423 · Credit: 356,897 · RAC: 0

> The machines with problems are all dual 1.2 GHz. They are 100% uptime and marked as such in the venue. They just refuse to cache seti. They will cache several abc or spin units. My PII 350 always has more WUs cached (2-5) than the new dual PIII 1200, but the old PIII 1000 has a couple dozen.

Have you looked at the long-term and short-term debt? If BOINC has crunched more SETI than your resource share says it should have, then it will not download more SETI until ABC and SPIN are "caught up." If you have work that is near its deadline (as JM7 mentioned), then BOINC will not add work until the deadline pressure has passed. These are both good things: they're features, not problems.
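
Long-term debt is easier to picture with numbers. This Python sketch assumes a simplified model in which each project is owed its resource-share fraction of all the CPU time the host has delivered, and its debt is what it was owed minus what it actually received. The shares are doublechaz's (seti 10000, abc 5, spin 5); the CPU hours and the function name are invented, and the real client also normalizes and clamps its debts.

```python
def long_term_debts(shares: dict[str, float],
                    cpu_hours_used: dict[str, float]) -> dict[str, float]:
    """Simplified debt: entitled share of total CPU time minus time received.

    Positive debt = the project is owed CPU time (first in line for work);
    negative debt = the project has been over-served.
    """
    total_share = sum(shares.values())
    total_used = sum(cpu_hours_used.values())
    return {
        name: round(total_used * share / total_share - cpu_hours_used[name], 2)
        for name, share in shares.items()
    }

shares = {"seti": 10000, "abc": 5, "spin": 5}
# Hypothetical stretch in which the two small projects got 20 CPU hours each.
used = {"seti": 60.0, "abc": 20.0, "spin": 20.0}
print(long_term_debts(shares, used))
# {'seti': 39.9, 'abc': -19.95, 'spin': -19.95}
```

With shares this lopsided, SETI is entitled to about 99.9% of the CPU, so in this model any time spent on abc or spin drives their debt well below zero, which matches John McLeod VII's description of those projects quickly dropping out of work fetch.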

doublechaz · Joined: 17 Nov 00 · Posts: 90 · Credit: 76,455,865 · RAC: 735

I figured this out. The 5.8.16 CC ignores my cache settings; 5.10.0 honors them. At one point 5.10.0 was available for download, but now (and when I installed the newer machines) 5.8.16 is what you get. I've copied 5.10.0 from my older machines to the newer ones, and now they are all working correctly. That should keep them out of weird EDF (earliest deadline first) mode with overworked projects while seti can't or won't get work.

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.