From FX to Ryzen


| Author | Message |

|---|---|

Mike Joined: 17 Feb 01 Posts: 34258 Credit: 79,922,639 RAC: 80

|

Keith, your times look great. How many instances are you running on your Ryzen? With each crime and every kindness we birth our future. |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

I'm sticking to my FX methodology of only crunching CPU tasks on physical cores, even though the virtual cores get access to the FPU registers on Ryzen. So I have set the maximum concurrent tasks to 12: eight CPU and four GPU tasks running on the system. Occasionally, though, the CPU tasks rise to 9 because an MW or Einstein task moves onto one of the GPUs, displacing a SETI task, so the count rises accordingly. I don't know whether a task running on a virtual core gets impacted, though, because it won't have run in its entirety on one of the virtual cores; even the BLC VLARs take longer to run than one of the Einstein or MW tasks on a GPU. I don't think that shorties from overruns have been impacted. I just haven't had the patience to track an individual CPU task through to completion in the database, compare it against tasks of equivalent AR, and see if it actually ran longer on a virtual core. I decided to take baby steps with regard to this new architecture. The CPU is only running at 75% utilization. I might move to all 16 threads in use a bit later, since I think the new cooling and the new graphics cards have brought the system into its final configuration. The only bit of uncertainty is what the May AGESA updates will bring to the memory situation. I'm perfectly happy to run at 3200 for now, but it will be interesting to see whether the memory will run at its factory 3600 spec come May. Seti@Home classic workunits:20,676 CPU time:74,226 hours   A proud member of the OFA (Old Farts Association) |
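As a rough illustration of the arithmetic in the post above, here is a minimal Python sketch. The function names are hypothetical, and it assumes the cap of 12 concurrent tasks applies to SETI tasks only, so a SETI GPU task displaced by another project frees a slot that a SETI CPU task then fills.

```python
TOTAL_THREADS = 16      # 8 physical cores x 2 threads on the 1700X
MAX_CONCURRENT = 12     # the concurrency cap described in the post


def cpu_utilization(busy_threads: int, total_threads: int) -> float:
    """Percentage of hardware threads kept busy."""
    return 100.0 * busy_threads / total_threads


def seti_cpu_tasks(max_concurrent: int, seti_gpu_tasks: int) -> int:
    """CPU tasks that run once the GPU tasks have taken their slots
    (assumption: the cap counts CPU and GPU tasks of one project together)."""
    return max_concurrent - seti_gpu_tasks


print(cpu_utilization(MAX_CONCURRENT, TOTAL_THREADS))  # 75.0
print(seti_cpu_tasks(MAX_CONCURRENT, 4))               # 8
print(seti_cpu_tasks(MAX_CONCURRENT, 3))               # 9, one SETI GPU task displaced
```

Under those assumptions, 12 busy threads out of 16 matches the 75% utilization reported, and a single displaced SETI GPU task explains the occasional ninth CPU task.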

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

Just a quick note about the efficiency of the 1700X. I'm not sure of the reason, but I am crunching Einstein tasks about 50-100 seconds faster on the Ryzen system. That is with the supposed handicap of running the dual 1070s in PCIe x8 mode instead of PCIe x16 mode like the other 1070s in my FX systems, and also at a 600-800 MHz slower CPU clock rate. I assume that the Ryzen system is more efficient at shoveling work to and from the GPUs than the FX systems. The 1070s are all the same, being Founders Edition versions. |

Stephen "Heretic" Joined: 20 Sep 12 Posts: 5557 Credit: 192,787,363 RAC: 628

|

> Just a quick note about the efficiency of the 1700X. I'm not sure of the reason, but I am crunching Einstein tasks about 50-100 seconds faster on the Ryzen system. That is with the supposed handicap of running the dual 1070s in PCIe x8 mode instead of PCIe x16 mode like the other 1070s in my FX systems, and also at a 600-800 MHz slower CPU clock rate. I assume that the Ryzen system is more efficient at shoveling work to and from the GPUs than the FX systems. The 1070s are all the same, being Founders Edition versions.

As Wiggo likes to remind me, GPUs do better on more powerful platforms. His 1060-3GB cards are kicking my 1060-6GB cards' rear ends, because his are on an i5 and mine are on a Pentium D :( But we work with what we have. I am looking forward to wasting, err... spending a whole lot more cash on a Ryzen when all the dust has settled and the final versions are on the shelves. Then the 1060-6GBs should be able to really strut their stuff, and have a whole lot of backup crunching on the CPUs. Stephen |

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> As Wiggo likes to remind me, GPUs do better on more powerful platforms. His 1060-3GB cards are kicking my 1060-6GB cards' rear ends, because his are on an i5 and mine are on a Pentium D :(

I'll see if I can find the link, but I recently came across an article on gaming that compared a Core 2 Quad system with current CPUs. Even the current low-end Pentium G CPUs beat out the C2Q. And yes, the more powerful the video card, the greater the impediment to its performance from the older CPU. Considering that the base-level Core 2 series CPUs left the high-end Pentium Ds behind, even a basic i5 or Ryzen 5 system will produce a big boost to its crunching output. Ah, here we go. Q6600 v current hardware (2017). Grant Darwin NT |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

I was just commenting because, based on prior comments from Einstein forum members, the Einstein apps respond really well to higher GPU memory clocks and to higher PCIe bandwidth to the GPU. The Ryzen system should have a deficit compared to the FX systems. Not seeing that in my case though. YMMV. |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

I'm trying an experiment. Now that I have more physical CPU cores than I did previously on my FX platform, I am going to run 3 concurrent tasks per GPU. That is a change from running 2 tasks per card. I'm still allotting 1 CPU core per task since I am running the OpenCL SoG app. We shall see, I guess. I am still showing only about 75-82% CPU utilization in SIV. It will be interesting to find out if my RAC per day goes up, goes down or stays the same. Anybody reading the thread want to comment on their experiences running a GTX 1070 at 2 or 3 concurrent tasks? Each card has 8GB of memory and even at my -sbs size of 2048MB, I am still keeping within the memory footprint of the cards. It's too early to tell, and the mix of tasks lately has been Arecibo shorty VHAR tasks, so that is clouding the runtime question, but I don't see much of a negative impact so far. |

Stephen "Heretic" Joined: 20 Sep 12 Posts: 5557 Credit: 192,787,363 RAC: 628

|

> I'm trying an experiment. Now that I have more physical CPU cores than I did previously on my FX platform, I am going to run 3 concurrent tasks per GPU. That is a change from running 2 tasks per card. I'm still allotting 1 CPU core per task since I am running the OpenCL SoG app. We shall see, I guess. I am still showing only about 75-82% CPU utilization in SIV. It will be interesting to find out if my RAC per day goes up, goes down or stays the same. Anybody reading the thread want to comment on their experiences running a GTX 1070 at 2 or 3 concurrent tasks? Each card has 8GB of memory and even at my -sbs size of 2048MB, I am still keeping within the memory footprint of the cards. It's too early to tell, and the mix of tasks lately has been Arecibo shorty VHAR tasks, so that is clouding the runtime question, but I don't see much of a negative impact so far.

From my experimentation I have observed that whatever you set as the -sbs maximum (and this is only the buffer size), the running task will consume approx. 50% more RAM. So with a buffer size of 1GB, 1.5GB will be used. I would think that with an 8GB card running three tasks your buffer should be at -sbs 1024 or -sbs 1536. But see how you go :) Stephen |
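Stephen's rule of thumb can be checked with a few lines of Python. This is only a sketch of his estimate (buffer size plus roughly 50%, per task), not a measurement; the function name and the 1.5 factor as a default argument are illustrative assumptions, and actual usage on a given card may well differ.

```python
CARD_MB = 8 * 1024  # 8GB GTX 1070, treating 1GB as 1024MB


def estimated_vram_mb(sbs_mb: int, tasks_per_gpu: int,
                      overhead_factor: float = 1.5) -> float:
    """Estimated GPU memory for N tasks, using the 'sbs plus ~50%' rule of thumb."""
    return sbs_mb * overhead_factor * tasks_per_gpu


for sbs in (1024, 1536, 2048):
    need = estimated_vram_mb(sbs, tasks_per_gpu=3)
    verdict = "fits" if need <= CARD_MB else "exceeds"
    print(f"-sbs {sbs}: about {need:.0f}MB for 3 tasks, {verdict} {CARD_MB}MB")
```

By this estimate, three tasks at -sbs 1024 or -sbs 1536 stay under 8GB (4608MB and 6912MB), while three tasks at -sbs 2048 would need about 9216MB, which is why the smaller buffer sizes are suggested.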

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> It will be interesting to find out if my RAC per day goes up, goes down or stays the same.

My i7 has two GTX 1070s and I run with rather aggressive settings, and I've found running 1 WU at a time best. Running 2 produces about the same amount of work per hour; 3 reduces it significantly. However, the system itself is RAM limited (only 4GB), so there are always lots of hard page faults and the system hits the swap file a lot (being on an SSD, the performance penalty isn't nearly as severe as it would be for an HDD). With more RAM, those faults would drop to pretty much nothing, and multiple WUs on the GPU may give better results. Generally, running WUs of the same type isn't an issue (ref above); however, running an Arecibo WU on the same card as a GBT WU resulted in the Arecibo WU taking 3 times longer than usual to crunch.

-tt 1500 -hp -period_iterations_num 1 -high_perf -high_prec_timer -sbs 2048 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64 -cpu_lock

Reducing the SBS, period iterations and tune values may result in better multi-WU performance, particularly when running 1 Arecibo and 1 GBT WU on the same card. Grant |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

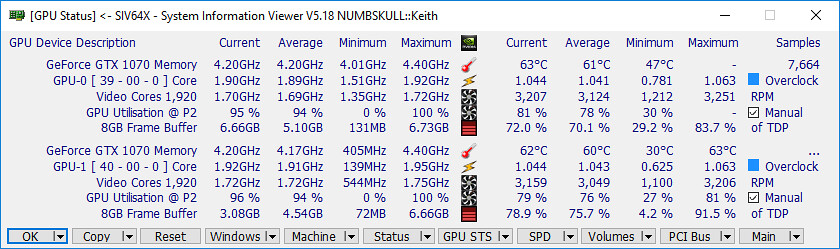

Not seeing that effect here. Only about a 500MB increase over 3 × 2048MB = 6144MB usage. Here is the SIV GPU Status window. Maximum graphics card usage is 6.73GB. Well within the 8GB memory capacity of each 1070. |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

> It will be interesting to find out if my RAC per day goes up, goes down or stays the same.

Thanks for the feedback. I have 16GB of system RAM and am only using about 2.28GB of it currently. My tuning is equally aggressive.

<cmdline>-sbs 2048 -period_iterations_num 1 -tt 1500 -high_perf -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64 -high_prec_timer</cmdline>

It's going to take some mindful monitoring of the completion times to see any effect, I guess. I have already had mixed task types process on the same card. I have also had Einstein and MilkyWay tasks move in and out of a card while a SETI task was running. I DIDN'T increase the GPU count for those projects though; they are still at 0.5 GPU per task. I know I could get away with 0.33 for MW, but no way for Einstein. Einstein OpenCL tasks work the GPU the hardest. So when either of those projects runs on a card, that drops the SETI usage down to 0.5 too. I've not seen any performance impact so far on my FX systems when running two up on a card and running Arecibo and BLC tasks at the same time. But I have NOT ever tested at 1 task per card, so I don't know if I ever had the same performance impairment as you observe. As I stated, it's an experiment. If I see a significant increase in crunching times for all tasks and my RAC drops, I will for sure roll back to 2 concurrent tasks per card. |
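To see exactly how the two "aggressive" command lines posted in this thread differ, they can be compared mechanically. The Python sketch below uses a hypothetical helper, `parse_flags`, that treats any token starting with `-` followed by a non-digit as a flag and the tokens after it as that flag's values. It says nothing about what the flags do, only which ones each poster uses.

```python
from typing import Dict, Tuple

GRANT = ("-tt 1500 -hp -period_iterations_num 1 -high_perf -high_prec_timer "
         "-sbs 2048 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 "
         "-oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 "
         "-oclfft_tune_bn 64 -oclfft_tune_cw 64 -cpu_lock")
KEITH = ("-sbs 2048 -period_iterations_num 1 -tt 1500 -high_perf "
         "-spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 "
         "-oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 "
         "-oclfft_tune_bn 64 -oclfft_tune_cw 64 -high_prec_timer")


def parse_flags(cmdline: str) -> Dict[str, Tuple[str, ...]]:
    """Map each flag to the tuple of values that follow it."""
    flags: Dict[str, Tuple[str, ...]] = {}
    current = None
    for token in cmdline.split():
        if token.startswith("-") and not token[1:2].isdigit():
            current = token
            flags[current] = ()
        elif current is not None:
            flags[current] += (token,)
    return flags


grant, keith = parse_flags(GRANT), parse_flags(KEITH)
only_grant = sorted(set(grant) - set(keith))
only_keith = sorted(set(keith) - set(grant))
differing = sorted(f for f in set(grant) & set(keith) if grant[f] != keith[f])

print("Only in Grant's:", only_grant)
print("Only in Keith's:", only_keith)
print("Same flag, different value:", differing)
```

Run against the two strings above, the only differences are that Grant's line additionally carries `-hp` and `-cpu_lock`; every shared flag has the same values.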

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> Thanks for the feedback. I have 16GB of system RAM and am only using about 2.28GB of it currently.

Good to know. My in-use figure is usually around 2GB, with the rest used for caching etc. The hard faults generally occur when I start running more than the usual BOINC, IE, mail etc. So it looks like even 8GB would be plenty for my general usage needs. I've been looking at increasing the RAM, but I'm reluctant to spend money on a system that is getting on and has a few issues. I'm saving for a whole new system. Grant |

Zalster Joined: 27 May 99 Posts: 5517 Credit: 528,817,460 RAC: 242

|

For SETI, 2 is the best for the 1070; at Einstein it is 3.

|

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

Grant, on your 1070s at 1 task per card ... what is your GPU utilization percentage? Is one task per card enough to keep it fully busy? |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

> For SETI, 2 is the best for the 1070; at Einstein it is 3.

Zalster, is that when running only Einstein tasks on the card? I find the systems sluggish when a SETI task and an Einstein task are both running on the same card. |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

OK, I have moved back to a GPU usage of 0.5 per task (two tasks per card). I know realistically my experiment didn't run long enough, but it looks like my RAC trajectory had plateaued. I also found that over time I ended up at only two SETI tasks per card anyway because of Einstein and MW tasks moving in and out. My concurrent tasks setting in app_config just added more CPU tasks to balance the setting, and overrode my desire to only run CPU tasks on a physical core and not on an HT core. It would have worked fine if I was only doing SETI, or if I had changed both Einstein and MW to 0.33 GPU per task as well. |
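Why mixing projects undid the three-per-card setting can be seen with a simple packing model. This is a deliberately simplified Python sketch, not BOINC's actual scheduler: it assumes each task reserves its configured GPU usage fraction and that the fractions on one card may not sum to more than 1.0. The function name is hypothetical.

```python
def tasks_that_fit(gpu_usage: float, free_share: float = 1.0) -> int:
    """Whole tasks of a given GPU usage fraction that fit in the free share of one card."""
    # Small epsilon guards against float rounding at exact multiples.
    return int((free_share + 1e-9) // gpu_usage)


# SETI alone on a card
print(tasks_that_fit(0.5))    # 2 tasks per card
print(tasks_that_fit(0.33))   # 3 tasks per card

# One Einstein (or MW) task at 0.5 already on the card, SETI set to 0.33
seti_beside_einstein = tasks_that_fit(0.33, free_share=1.0 - 0.5)
print(1 + seti_beside_einstein)   # 2 tasks on the card in total

# If Einstein and MW were also set to 0.33
seti_beside_third = tasks_that_fit(0.33, free_share=1.0 - 0.33)
print(1 + seti_beside_third)      # 3 tasks on the card in total
```

In this model, a card hosting an Einstein or MW task at 0.5 only has room for one SETI task at 0.33, so the card drops back to two tasks; the slot freed under the concurrency cap would then be taken by a CPU task, as described in the post.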

|

Grant (SSSF) Joined: 19 Aug 99 Posts: 13736 Credit: 208,696,464 RAC: 304

|

> Grant, on your 1070s at 1 task per card ... what is your GPU utilization percentage? Is one task per card enough to keep it fully busy?

Generally high 80s to mid 90s, depending on the WU. Memory controller load is usually low to high 80s, power load around 60%.

Running more than 1 WU bumps up the GPU load, and slightly bumps up the power load, but didn't result in any more WUs per hour being done. Since it also results in much longer crunch times for Arecibo WUs crunched on the same card as a GBT WU, I just didn't see any benefit to running more than 1 WU. Earlier versions of SoG gave more WUs per hour with more than 1 WU at a time, but the later releases, while not in the same league as Petri's Linux CUDA application, made better use of the hardware, so I just went back to 1 WU at a time and reserved a CPU core for each GPU WU.

EDIT: These days I have to admit I don't pay that much attention to the GPU load (or the memory controller load); I'm more concerned with how many WUs per hour it can churn out. If 50% GPU load gives more WUs per hour than 75% or even 99%, then 50% is what I'll go with. There are times when the GPU load is as high as 96%, but the actual power load drops. The biggest complication, particularly with my GTX 750 Tis, is that what gives great throughput for one type of WU might really bog down on another, and that's just Arecibo shorties v normal range WUs v the longer-running normal range WUs. Add in GBT, and it's an even bigger compromise. Grant |
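The "WUs per hour" yardstick is easy to compute from per-task run times. The Python sketch below uses made-up run times (300, 600 and 1200 seconds are purely illustrative, not figures from this thread), chosen to mirror the pattern Grant describes: two at a time gives about the same throughput as one, and three at a time gives less.

```python
def wus_per_hour(runtime_s: float, concurrent: int = 1) -> float:
    """Throughput of one card when `concurrent` tasks each take `runtime_s` seconds."""
    return concurrent * 3600.0 / runtime_s


# Hypothetical run times per task at each concurrency level
print(wus_per_hour(300, concurrent=1))    # 12.0
print(wus_per_hour(600, concurrent=2))    # 12.0, same throughput as one at a time
print(wus_per_hour(1200, concurrent=3))   # 9.0, worse despite higher GPU load
```

The point of the comparison is that per-task run time alone is misleading: doubling concurrency only helps if each task takes less than twice as long.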

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

OK, I'm intrigued. I've never run 1 task per card before. I just carried over my settings and assumptions from when I ran the CUDA apps. With them you had the SETI Performance app, which could actually profile which task configuration was most efficient. That doesn't work with the OpenCL SoG app. I guess I need to run a single-task experiment to see for myself how the SoG app behaves. |

Brent Norman Joined: 1 Dec 99 Posts: 2786 Credit: 685,657,289 RAC: 835

|

I would say 2 tasks would be best for you since you are not using the threads anyway (from what I understand). At the very least it will fill in the time between loading tasks, and probably add 5-10% more load. EDIT: If you have unused threads, try -hp and see if it kicks up a notch. |

Keith Myers Joined: 29 Apr 01 Posts: 13164 Credit: 1,160,866,277 RAC: 1,873

|

That's an idea too. I saw too much system lag when I attempted -hp in the past. I have now reduced all projects to a single GPU task per card for the experiment. My observation is that the tasks are taking about half the time to finish, which is what I expected, or maybe 5% faster than that. Of course, the fact that I am crunching through tasks faster means I will run out even earlier come the weekly outage. I guess that is normal for people who are running Petri's app too. |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.