GPU Lockups

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

In the last month or so I've seen an uptick in the number of system lockups: I'll find my cruncher on a black screen and unresponsive (Caps Lock doesn't toggle the light, the machine doesn't respond to ping, and I've needed a hard reset to recover). This has happened at least 4 times on my dual 980 Ti and 3 times on my triple 1070. My other triple and dual 1070s don't seem to have been hit yet. I updated my drivers yesterday on the 980 Ti machine, but I found it locked up again this morning. All my machines use the same command line, and only the dual 980 Ti machine does CPU work. Only recently have I been dialing down `-tt` in my 980 Ti command line, but it hasn't helped:

`-sbs 1024 -high_perf -instances_per_device 2 -tt 100 -period_iterations_num 10 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64`

My cluster is a mix of Windows 10 and Windows 7; currently one of each is locking up and one of each is fine. I also want to believe that this problem is relatively new. I don't have solid evidence, but it seems coincident with v8.22, because I hadn't updated my video drivers since last year and I don't recall having problems in December.

Event Viewer has generally been unhelpful, but when I checked today I saw:

`\Device\Video11 Graphics Exception: ESR 0x52de48=0x5 0x52de50=0x0 0x52de44=0x1918 0x52de4c=0x3e`

So something isn't happy. Since it's hitting multiple machines in a well-cooled environment, I'm skeptical that it's a heat problem. About the only unusual thing I can think of is that I've turned off TDR checks, because dedicated crunching with aggressive command lines was tripping them before. Maybe having them on would save it from a lockup, but I don't know if that's actually the real problem. I'm loath to stop running 2 tasks per GPU, because in my tests this easily gets 20% more work done, but at this point I'm not sure what else to try.

BetelgeuseFive · Joined: 6 Jul 99 · Posts: 158 · Credit: 17,117,787 · RAC: 19

I had a similar problem a while ago on my previous system. After I bought a new graphics card, the system would freeze randomly. I tried different drivers, but the problem remained. It turned out to be the motherboard: it was relatively old and didn't handle the new generation of graphics card very well. After upgrading the BIOS and changing some BIOS settings (something to do with PCI lane assignments; I don't remember exactly), the problems were gone. It may be worth checking whether there are BIOS updates available for your boards.

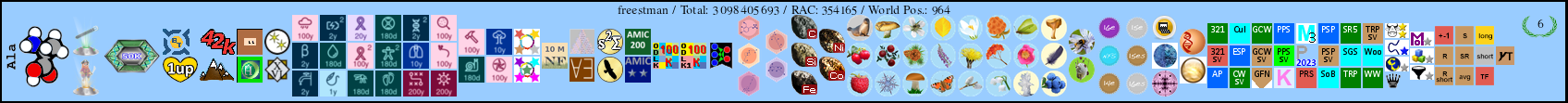

freestman · Joined: 14 Oct 01 · Posts: 21 · Credit: 19,736,133 · RAC: 177

Try driver version 372.90.

rob smith · Joined: 7 Mar 03 · Posts: 22189 · Credit: 416,307,556 · RAC: 380

A few things to look at before dropping to one task per GPU or updating the driver:

- Seating of the cards
- PSU connections
- PSU voltage stability (not so easy to check)
- Dust bunnies everywhere

Bob Smith · Member of Seti PIPPS (Pluto is a Planet Protest Society) · Somewhere in the (un)known Universe?

Greg Tippitt · Joined: 19 Apr 04 · Posts: 24 · Credit: 20,455,837 · RAC: 39

I've worked on computer hardware since the TRS-80 days and have run SETI@Home since the original pre-BOINC version. The component I've most often found to cause otherwise unexplained random lockups and crashes is the power supply. I'm fairly good at diagnosing hardware and software problems, but for some reason my brain too often thinks of power supplies in all-or-nothing terms: if it powers on and starts running, the power supply is fine. Wrong.

Please excuse me if I go into too much detail below. I'm not good at being brief, and I never know the expertise of someone I'm chatting with in these forums.

Especially with GPUs that pull lots of power (in many cases more than the rest of the system combined), we often run power supplies near their maximum wattage, which can dramatically shorten the lifespan of some components inside them. If you are running a PSU within 10% of its maximum when your system is 100% busy, you can often get temporary dips in voltage, EMI, etc. As power supplies age, I've found they are much less reliable when running near their maximum output rating. For example, I have one power supply over 10 years old that used to output 1000 watts fine, but now it doesn't work well above 500 watts; with an 800-watt load, it will only run for a day or so before the system crashes.

Dirty power can make problems a nightmare to locate. A system may run fine for hours, but something as simple as the room temperature rising a few degrees can cause an "iffy" component within a PSU to output less than acceptable power. The easiest way I've found to isolate a random PSU problem is to cut its load in half and see whether the crashes persist or go away.

The easiest way to do this is with entries in your cc_config.xml file that lower the number of CPU cores used on the machine (`<ncpus>#</ncpus>`) and have BOINC ignore one or more of your GPUs (`<ignore_nvidia_dev>#</ignore_nvidia_dev>`). I don't have the link handy, but if you search for "BOINC client cc_config.xml" you can easily find the page with a full explanation of these options. This is much faster, cheaper, and easier than swapping the PSU first when drivers or other software turn out to be the problem.

Using cc_config.xml to control the number of cores is discouraged by some of the BOINC documentation, but I find it really helpful when testing. First, it overrides settings elsewhere that tell BOINC to use more cores than you specify in cc_config.xml. When you finish testing, you can simply delete these lines and all your other settings take over as before, which makes it the easiest place to change things and put them back. The `<ignore_nvidia_dev>` lines are super helpful for testing graphics cards with dual GPUs: you can have BOINC use one GPU on a card and ignore the other, which cuts the power used by the card in half while you test its GPUs one at a time.

Once you change a setting in cc_config.xml, you don't have to reboot or restart BOINC; simply select "Options" then "Read Config File" in the BOINC Manager. Best of all, this works the same on Windows and Linux.

If cutting the number of GPUs and CPU cores in half stops the system from crashing, the cause of your problem is likely power and/or heat.

Besides the excellent suggestions from the others and my flaky-PSU suggestion, I've found one other circumstance that causes symptoms like yours. Are you perhaps using the PCI slot extenders that use a USB3 cable to connect graphics cards that are not plugged directly into the motherboard?

Bitcoin miners were the first people I heard of using these, and they've generally worked well for me in BOINC systems until recently. They are really handy when I'm testing a setup before putting it all together in a case. If you are not using these PCI extenders, ignore the rest of this message.

I was testing a setup with the motherboard running naked before I put everything into a case. I had 2 NVIDIA dual-GPU graphics cards attached through PCI slot extenders, with USB3 cables connecting the cards remotely to the motherboard. These same extenders have worked fine with single-GPU graphics cards, but with the dual-GPU cards the system would run for an hour or 2 and then crash. When I ignored 1 GPU on each card, the machine crunched fine for 2 days without a crash, but with all GPUs running it would crash. I ruled out the PSUs by using 5 PSUs, one dedicated to each power connector on each card. I don't know why, but since I plugged the cards directly into the motherboard, they've worked fine with both GPUs in use.

Even though they ran fine with only 1 GPU on each card in use, as soon as I used both GPUs on either card, the machine would only run for 1 or 2 hours on the PCI extenders. The crazy thing that had me pulling my hair out was that they would start up fine and run for at least an hour, but after I walked away they'd crash. Only once did the machine run for just over 3 hours before crashing. I'm using the same extenders now with much faster single-GPU cards, and they are working fine.

I never figured out why these extenders work fine for me with single-GPU cards but not with dual-GPU cards using both GPUs. It might be something like what others mentioned about PCI slot timing: graphics cards with dual GPUs, both running flat out, may not tolerate some PCI bus conditions that work fine with a single GPU. That's my best guess.

Good luck,
Greg
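
For illustration, here is a minimal Python sketch that produces the kind of cc_config.xml Greg describes for a load-halving test. It assumes the standard BOINC layout, with both options inside an `<options>` element under `<cc_config>`; the helper name `build_cc_config` and the example values (8 CPUs, ignoring NVIDIA device 1) are hypothetical, so check the BOINC client configuration page before relying on it.

```python
import xml.etree.ElementTree as ET


def build_cc_config(ncpus: int, ignored_nvidia_devs: list[int]) -> str:
    """Return cc_config.xml text that caps the CPU count and hides NVIDIA GPUs from BOINC.

    Hypothetical helper for a load-halving test; BOINC numbers GPUs from 0.
    """
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    # Tell the client to act as if the machine had only `ncpus` CPUs.
    ET.SubElement(options, "ncpus").text = str(ncpus)
    # One <ignore_nvidia_dev> element per GPU that BOINC should leave alone.
    for dev in ignored_nvidia_devs:
        ET.SubElement(options, "ignore_nvidia_dev").text = str(dev)
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Example: halve an assumed 16-thread CPU and ignore the second of two GPUs.
    print(build_cc_config(ncpus=8, ignored_nvidia_devs=[1]))
```

If you already have a cc_config.xml, merge these lines into it rather than overwriting it, then use "Read Config File" in the BOINC Manager as Greg describes; removing the lines afterward restores the previous behavior.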

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

For posterity: Good news: updated drivers solved the triple-1070 cruncher's problems, and it hasn't locked up in months. Bad news: during the dearth of GBP data, the high number of quick Arecibo tasks seemed to increase the frequency of lockups on the dual-980 Ti rig. I went back to one task per GPU and haven't had a lockup since (about two weeks steady now). -g

Zalster · Joined: 27 May 99 · Posts: 5517 · Credit: 528,817,460 · RAC: 242

What brand of 980 Tis are they?

Edit: are you still using this command line?

`-sbs 1024 -high_perf -instances_per_device 2 -tt 100 -period_iterations_num 10 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64`

I believe `-instances_per_device 2` isn't required unless you are using `-cpu_lock`.

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

I figured 2 weeks was a safe bet; I was wrong. It locked up at 3 AM last night. My current command line:

`-sbs 1024 -high_perf -tt 100 -period_iterations_num 10 -spike_fft_thresh 4096 -tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 -oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64`

They're MSI GeForce GTX 980 Ti Gaming 6G cards; I'd swap in some 1070s, but it was a nightmare. I'm reluctant to believe it's the PSU, given how far from the red line they run (the PSU and UPS are rated for 850 W, and the readout on the UPS rarely shows me as high as 600 W). Since this is my main workstation, I'm pretty unhappy with the instability. I'm tempted to downgrade to a pair of RX 480s as an experiment; if they're stable, I can cram up to 4 into this rig given the PSU and motherboard. The other thing I'm starting to wonder is whether this board is just bad somehow. The other option is to build a new workstation and put this pig out to pasture for CPU-only crunching. It's a 5960X, so it's not bad for CPU work.
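
Shaggie's figures can be checked against Greg's rule of thumb with a few lines of Python. This is a minimal sketch: the 10% margin comes from Greg's post, while the function names and the `efficiency` parameter are assumptions. A UPS shows wall-side (AC) draw, whereas a PSU's rating refers to its DC output, so treating the wall reading as the DC load (`efficiency=1.0`) overstates the PSU's load and is the conservative case.

```python
def psu_load_fraction(wall_watts: float, rated_watts: float, efficiency: float = 1.0) -> float:
    """Estimate the fraction of a PSU's rated DC output in use.

    wall_watts: AC draw, e.g. from a UPS readout.
    efficiency: assumed PSU efficiency; 1.0 treats all wall draw as DC load (worst case).
    """
    return wall_watts * efficiency / rated_watts


def within_margin_of_max(load_fraction: float, margin: float = 0.10) -> bool:
    """Greg's rule of thumb: running within `margin` of the rating invites trouble."""
    return load_fraction >= 1.0 - margin


if __name__ == "__main__":
    load = psu_load_fraction(600, 850)  # Shaggie's UPS readout vs. the 850 W rating
    print(f"load {load:.1%}, near max: {within_margin_of_max(load)}")
```

On these numbers the worst case is roughly 71% of the rating, well outside Greg's 10% band. That supports Shaggie's reading, though it cannot rule out the aging or faulty unit Greg warns about, which may fail well below its label.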

Zalster · Joined: 27 May 99 · Posts: 5517 · Credit: 528,817,460 · RAC: 242

I remember having a problem with a computer that would randomly shut down. There was a memory dump log somewhere that we managed to export, and then we had to get a program to decode it. We finally figured out it was a bad stick of RAM. I ended up removing 2 sticks at a time until we figured out which set it was. I even ran a stress test with only 2 sticks installed, but they all passed. Finally I just kept those 2 sticks out, and it never locked up or crashed again. Some would want to know which of the 2 it was, but it didn't make much sense to find out, since I ordered a new pair of sticks to replace them. Something to think about.

Keith Myers · Joined: 29 Apr 01 · Posts: 13164 · Credit: 1,160,866,277 · RAC: 1,873

I am just wondering. I haven't been following the whole thread, but I wonder about the tuning parameters. Shouldn't you be able to be more aggressive with 980 Tis? I know it sounds counterintuitive since you are dealing with instability, but I would think that dropping `-period_iterations_num` down to at least 2 and increasing `-tt` to 1500 would have the cards do much less context switching of work in and out of the CPU and bus. Maybe they are not getting enough time to just crunch.

Seti@Home classic workunits: 20,676 · CPU time: 74,226 hours · A proud member of the OFA (Old Farts Association)
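
To see what Keith's suggestion would look like applied to Shaggie's current command line, the hypothetical helper below swaps the value that follows a single-valued flag. It is plain string manipulation: it assumes `-tt` and `-period_iterations_num` each take exactly one value (as they do in the thread's command lines) and knows nothing about which options the SETI@home OpenCL app actually accepts. Multi-value flags such as `-tune` are left untouched.

```python
import shlex


def set_flag_value(cmdline: str, flag: str, value: str) -> str:
    """Return cmdline with the single value after `flag` replaced, appending the flag if absent.

    Hypothetical helper; only valid for flags that take exactly one value.
    """
    args = shlex.split(cmdline)
    if flag in args:
        i = args.index(flag)
        if i + 1 >= len(args):
            raise ValueError(f"{flag} has no value to replace")
        args[i + 1] = value
    else:
        args += [flag, value]  # flag absent: append it at the end
    return " ".join(args)


current = ("-sbs 1024 -high_perf -tt 100 -period_iterations_num 10 -spike_fft_thresh 4096 "
           "-tune 1 64 1 4 -oclfft_tune_gr 256 -oclfft_tune_lr 16 -oclfft_tune_wg 256 "
           "-oclfft_tune_ls 512 -oclfft_tune_bn 64 -oclfft_tune_cw 64")

# Keith's proposal: longer target kernel time, fewer period iterations.
proposed = set_flag_value(current, "-tt", "1500")
proposed = set_flag_value(proposed, "-period_iterations_num", "2")
print(proposed)
```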

Keith Myers · Joined: 29 Apr 01 · Posts: 13164 · Credit: 1,160,866,277 · RAC: 1,873

If he has minidumps set up, his computer should produce debug output files for examination. You can at least look in Event Viewer for a simple explanation of what happened. I like the BlueScreenView (x64) utility for examining minidumps; it decodes them into much better and more descriptive debug output. For example, if a memory problem caused the crash, it will most assuredly point at it. You can then run any of the memory test utilities to determine which stick is bad.
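
Before opening a viewer such as BlueScreenView, it helps to confirm that any dumps exist at all. A minimal Python sketch, assuming Windows' default small-memory-dump folder (`%SystemRoot%\Minidump`); the function name is hypothetical, and the folder will be empty or missing if dumps are disabled or configured to go elsewhere:

```python
import os
from pathlib import Path
from typing import List, Optional


def newest_minidumps(folder: Optional[Path] = None, count: int = 5) -> List[Path]:
    """Return up to `count` .dmp files from `folder`, newest first.

    Defaults to %SystemRoot%\\Minidump (assumed default location for small memory dumps).
    """
    if folder is None:
        folder = Path(os.environ.get("SystemRoot", r"C:\Windows")) / "Minidump"
    if not folder.is_dir():
        return []  # no dump folder: dumps disabled, never written, or stored elsewhere
    dumps = sorted(folder.glob("*.dmp"), key=lambda p: p.stat().st_mtime, reverse=True)
    return dumps[:count]


if __name__ == "__main__":
    for dump in newest_minidumps():
        print(dump)
```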

Zalster · Joined: 27 May 99 · Posts: 5517 · Credit: 528,817,460 · RAC: 242

Regarding Keith Myers's tuning suggestion above (drop `-period_iterations_num` to 2 and raise `-tt` to 1500 to cut down on context switching): Shaggie can be more aggressive with his tuning, but I don't think it's related to the crashes. That is probably a subject for once he gets the system stable. I still think it's a memory issue, but he needs to find that memory dump file and examine it.

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

I started with a more aggressive command line and backed a few things off in the hope of stability. Event Viewer is usually silent on the subject, but the few times I've seen it catch anything, it was like the one in the original post:

`\Device\Video11 Graphics Exception: ESR 0x52de48=0x5 0x52de50=0x0 0x52de44=0x1918 0x52de4c=0x3e`

I could re-enable TDR checking, I guess. It might be less bad than full lockups, but I recall it causing more harm than good before, when it would bite on some work units. I suspect it won't tell me much more than "graphics driver died in some opaque way."

petri33 · Joined: 6 Jun 02 · Posts: 1668 · Credit: 623,086,772 · RAC: 156

Hi, I remember having system lockups, and after some inspection I found that I had set PCIe Gen3 in the BIOS. The GPUs were Gen3, but the CPU only supported Gen2. Setting PCIe Gen2 in the BIOS solved the problem.

Petri

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

Interesting, but the i7-5960X should support PCIe 3.0. Thanks for the idea, though.

Cruncher-American · Joined: 25 Mar 02 · Posts: 1513 · Credit: 370,893,186 · RAC: 340

I have a system I've been playing around with and testing some parameters on. It was getting the old STOP 0x116 VIDEO_TDR_ERROR fairly often, like 2 or 3 times a day. But you know what? The system crashed, produced a crash dump (which I could look at with WhoCrashed), and then rebooted. Rather than go crazy trying to figure out why it crashed, it is easier (since it restarts itself) to just ignore the problem, for now anyway. It is offline for only about 5 minutes, so why bother? I could even live with that in production if I had to.

Keith Myers · Joined: 29 Apr 01 · Posts: 13164 · Credit: 1,160,866,277 · RAC: 1,873

I see that the 980 Ti system you are having issues with is on Windows 7. I think that somewhere along the way, with all the updates and maybe even video driver updates, the default settings for TDR were changed. Is that system still on the old "bad" settings for TDR management? I would have to research the setting again, but I seem to remember that it was relaxed to 8 or something. I still get the driver timeout dropout on my Windows 7 computers if I have an Einstein task running in the mix along with the SETI tasks and I use the rescheduler. It doesn't happen if I have just SETI, or a mix of MilkyWay and SETI tasks, on the system; it's almost guaranteed if there is an Einstein task. Interestingly, I have not seen a single TDR driver timeout dropout on the Windows 10 system.
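
To check which TDR settings a machine is actually using, the values can be read from the registry. A minimal sketch, assuming Microsoft's documented TDR keys under `HKLM\SYSTEM\CurrentControlSet\Control\GraphicsDrivers`: `TdrLevel` (0 disables detection; 3, the default, recovers on timeout) and `TdrDelay` (default 2 seconds). The function names are hypothetical, the registry read only works on Windows, and a missing value means the Windows default applies.

```python
import sys
from typing import Dict, Optional

# TdrLevel values as documented by Microsoft ("TDR registry keys").
TDR_LEVELS = {
    0: "TdrLevelOff: timeout detection disabled",
    1: "TdrLevelBugcheck: bug check on a detected timeout",
    2: "TdrLevelRecoverVGA: recover to VGA (not implemented)",
    3: "TdrLevelRecover: recover on timeout (the default)",
}
TDR_KEY = r"SYSTEM\CurrentControlSet\Control\GraphicsDrivers"


def describe_tdr_level(level: Optional[int]) -> str:
    """Human-readable meaning of a TdrLevel value; None means the value is not set."""
    if level is None:
        return "TdrLevel not set: Windows default (recover on timeout)"
    return TDR_LEVELS.get(level, f"unrecognized TdrLevel {level}")


def read_tdr_settings() -> Dict[str, Optional[int]]:
    """Read TdrLevel and TdrDelay from the registry; returns {} on non-Windows systems."""
    if sys.platform != "win32":
        return {}
    import winreg  # Windows-only standard-library module

    settings: Dict[str, Optional[int]] = {}
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, TDR_KEY) as key:
        for name in ("TdrLevel", "TdrDelay"):
            try:
                settings[name] = winreg.QueryValueEx(key, name)[0]
            except FileNotFoundError:
                settings[name] = None  # value not set: the Windows default applies
    return settings


if __name__ == "__main__":
    current = read_tdr_settings()
    if not current:
        print("Not running on Windows; nothing to read.")
    else:
        print(describe_tdr_level(current["TdrLevel"]))
        print("TdrDelay (seconds):", current["TdrDelay"])
```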

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

I'm pretty sure I had TDRs disabled correctly. I've re-enabled them and will let it run for a while and report back. I'm really torn: this system is good for work but bad for SETI. The lockups mean my bots go down and my builds aren't done in the mornings, which is really frustrating. It just seems such a waste of a quad-SLI-capable board not to run multiple GPUs for SETI; maybe the answer is a single 1080 Ti and giving up the dream.

Ninos Y · Joined: 26 Aug 99 · Posts: 15 · Credit: 55,831,116 · RAC: 0

Hi Shaggie, this may be a shot in the dark, but try turning off Hyper-Threading and dedicating 1 CPU per GPU task if you haven't done so already. I've also experimented with 2 CPUs per task on my workstations that have high core counts. Good luck!

Shaggie76 · Joined: 9 Oct 09 · Posts: 282 · Credit: 271,858,118 · RAC: 196

I've replaced the pair of 980 Tis with a single 1080 Ti; single-tasked, it's doing about 1900 CPH (credits per hour) with the same command line below. It's only been cooking for a few days, so I'll have to give it time to see if it stays stable.

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.