Trying To Increase The Clock Speed Of An Nvidia 750 ti ??


| Author | Message |

|---|---|

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

I am trying to increase the clock speed of my MSI 750 Ti video card. First I set the GPU to persistence mode.

# nvidia-smi -pm 1

Enabled persistence mode for GPU 0000:01:00.0.

All done.

Then I determined the default settings with the card idling.

GPU 0000:01:00.0

Clocks

Graphics : 135 MHz

SM : 135 MHz

Memory : 405 MHz

Video : 405 MHz

Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1333 MHz

SM : 1333 MHz

Memory : 2700 MHz

Video : 1199 MHz

SM Clock Samples

Duration : 13.69 sec

Number of Samples : 5

Max : 1058 MHz

Min : 135 MHz

Avg : 207 MHz

Memory Clock Samples

Duration : 13.69 sec

Number of Samples : 5

Max : 2700 MHz

Min : 405 MHz

Avg : 405 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

Based on that output, I assume the application clock speed is 1058 MHz, the actual clock speed is currently 135 MHz, and the maximum the card can be set to is 1333 MHz. I also confirmed the maximum speed of 1333 MHz using nvidia-smi -q -d SUPPORTED_CLOCKS. Next I started boinc, which assigned two MB V8 tasks to the GPU, and then looked at the clock figures again, as shown below.

nvidia-smi -q -d CLOCK

==============NVSMI LOG==============

Timestamp : Tue May 10 12:49:30 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Clocks

Graphics : 1202 MHz

SM : 1202 MHz

Memory : 2700 MHz

Video : 1081 MHz

Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1333 MHz

SM : 1333 MHz

Memory : 2700 MHz

Video : 1199 MHz

SM Clock Samples

Duration : 673.16 sec

Number of Samples : 8

Max : 1202 MHz

Min : 135 MHz

Avg : 1040 MHz

Memory Clock Samples

Duration : 673.16 sec

Number of Samples : 8

Max : 2700 MHz

Min : 405 MHz

Avg : 2653 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

It appears the application clock speed and maximum clock speed have not changed, while the actual clock speed is now 1202 MHz. I then killed boinc and set the application clock to the maximum of 1333 MHz.

# nvidia-smi -ac 2700,1333

Applications clocks set to "(MEM 2700, SM 1333)" for GPU 0000:01:00.0

All done.

# nvidia-smi -r -i 0000:01:00.0

GPU 0000:01:00.0 was successfully reset.

All done.

I next obtained the following without boinc running.

# nvidia-smi -q -d CLOCK

==============NVSMI LOG==============

Timestamp : Tue May 10 13:04:42 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Clocks

Graphics : 135 MHz

SM : 135 MHz

Memory : 405 MHz

Video : 405 MHz

Applications Clocks

Graphics : 1333 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1333 MHz

SM : 1333 MHz

Memory : 2700 MHz

Video : 1199 MHz

SM Clock Samples

Duration : 386.23 sec

Number of Samples : 13

Max : 1202 MHz

Min : 135 MHz

Avg : 1088 MHz

Memory Clock Samples

Duration : 386.23 sec

Number of Samples : 13

Max : 2700 MHz

Min : 405 MHz

Avg : 2533 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

It appears changing the application clock speed to 1333 MHz was successful. I then started boinc and let things stabilize with the following results.

nvidia-smi -q -d CLOCK

==============NVSMI LOG==============

Timestamp : Tue May 10 13:11:46 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Clocks

Graphics : 1202 MHz

SM : 1202 MHz

Memory : 2700 MHz

Video : 1081 MHz

Applications Clocks

Graphics : 1333 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1058 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1333 MHz

SM : 1333 MHz

Memory : 2700 MHz

Video : 1199 MHz

SM Clock Samples

Duration : 726.55 sec

Number of Samples : 14

Max : 1202 MHz

Min : 135 MHz

Avg : 1141 MHz

Memory Clock Samples

Duration : 726.55 sec

Number of Samples : 14

Max : 2700 MHz

Min : 405 MHz

Avg : 2611 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A
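For anyone who would rather not eyeball these dumps, here is a minimal Python sketch that parses text shaped like the `nvidia-smi -q -d CLOCK` output above and reports the gap between the requested application clock and the clock the card is actually running. The `parse_clock_sections` helper and the `SAMPLE` string are illustrative assumptions (the sample is copied from the loaded-card readings above); they are not part of any NVIDIA tool.

```python
import re

# Sample copied from the loaded-card readings above (flattened forum layout).
SAMPLE = """\
Clocks
Graphics : 1202 MHz
SM : 1202 MHz
Memory : 2700 MHz
Video : 1081 MHz
Applications Clocks
Graphics : 1333 MHz
Memory : 2700 MHz
Default Applications Clocks
Graphics : 1058 MHz
Memory : 2700 MHz
Max Clocks
Graphics : 1333 MHz
SM : 1333 MHz
Memory : 2700 MHz
Video : 1199 MHz
"""


def parse_clock_sections(text):
    """Return {section: {clock name: MHz}} for CLOCK-style text.

    Hypothetical helper: a line without a colon starts a new section,
    and a "name : N MHz" line is stored under the current section.
    """
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = re.match(r"^(.+?)\s*:\s*(\d+)\s*MHz$", line)
        if match and current is not None:
            sections[current][match.group(1)] = int(match.group(2))
        elif ":" not in line:
            current = line
            sections[current] = {}
    return sections


clocks = parse_clock_sections(SAMPLE)
actual = clocks["Clocks"]["Graphics"]
requested = clocks["Applications Clocks"]["Graphics"]
shortfall = requested - actual
print(f"requested {requested} MHz, running {actual} MHz, shortfall {shortfall} MHz")
```

To use it on a live system, the text could be captured from the real command (for example with `subprocess.run`) and passed to the same function, since leading indentation is stripped before matching.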

The application clock speed is still reported as 1333 MHz, but the actual clock speed while processing two WUs remained at 1202 MHz. I then looked at the temperature, power, and performance using nvidia-smi.

# nvidia-smi -q -d TEMPERATURE

==============NVSMI LOG==============

Timestamp : Tue May 10 13:20:44 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Temperature

GPU Current Temp : 49 C

GPU Shutdown Temp : 101 C

GPU Slowdown Temp : 96 C

# nvidia-smi -q -d POWER

==============NVSMI LOG==============

Timestamp : Tue May 10 13:21:27 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Power Readings

Power Management : Supported

Power Draw : 27.59 W

Power Limit : 38.50 W

Default Power Limit : 38.50 W

Enforced Power Limit : 38.50 W

Min Power Limit : 30.00 W

Max Power Limit : 38.50 W

Power Samples

Duration : 10.13 sec

Number of Samples : 119

Max : 32.99 W

Min : 19.46 W

Avg : 29.90 W

GPU 0000:01:00.0

Temperature

GPU Current Temp : 48 C

# nvidia-smi -q -d PERFORMANCE

==============NVSMI LOG==============

Timestamp : Tue May 10 13:22:03 2016

Driver Version : 361.42

Attached GPUs : 1

GPU 0000:01:00.0

Performance State : P0

Clocks Throttle Reasons

Idle : Not Active

Applications Clocks Setting : Not Active

SW Power Cap : Not Active

HW Slowdown : Active

Sync Boost : Not Active

Unknown : Not Active

GPU Shutdown Temp : 101 C

GPU Slowdown Temp : 96 C

The temperature and power readings are similar to ones I obtained (not shown here) while processing two WUs before trying to increase the clock speed, which seems to confirm that the clock speed (1202 MHz) is the same before and after. Looking at the PERFORMANCE output I see "HW Slowdown : Active", but that is the same regardless of the clock setting or whether it is processing WUs or not. It ALWAYS reports "HW Slowdown : Active", even at a reported clock speed of 135 MHz.

Now that I have probably bored you to tears with TMI (too much information), here are the questions.

1. Does "HW Slowdown : Active" mean that it is currently throttling the GPU, or does it only mean that it is actively looking for conditions that would require a slowdown?

2. Why does the card not run at 1333 MHz? It has been set to run at 1333 MHz, the temperatures are way below the reported 96 C slowdown temperature, and there is at least another 5 watts of headroom before it reaches the max of 38.5 W. |
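To keep an eye on which throttle reasons are flagged while changing settings, a small Python sketch like the following can pull the active entries out of text shaped like the PERFORMANCE output above. The `active_throttle_reasons` helper and the `SAMPLE` string are assumptions made for illustration (the sample is copied from the readings in this post); the sketch only reads the flags and says nothing about what the driver means by them.

```python
# Sample copied from the PERFORMANCE readings in this post.
SAMPLE = """\
Performance State : P0
Clocks Throttle Reasons
Idle : Not Active
Applications Clocks Setting : Not Active
SW Power Cap : Not Active
HW Slowdown : Active
Sync Boost : Not Active
Unknown : Not Active
"""


def active_throttle_reasons(text):
    """Return the names listed as "Active" under "Clocks Throttle Reasons".

    Hypothetical helper: it scans "name : state" lines after the section
    header and stops at the next line that has no colon.
    """
    reasons = []
    in_section = False
    for raw in text.splitlines():
        line = raw.strip()
        if not in_section:
            in_section = line == "Clocks Throttle Reasons"
            continue
        if ":" not in line:
            if line:
                break  # a new section header ends the block
            continue
        name, _, state = line.partition(":")
        if state.strip() == "Active":
            reasons.append(name.strip())
    return reasons


reasons = active_throttle_reasons(SAMPLE)
print(reasons)
```

Running the same function on readings taken idle and under load makes it easy to confirm whether a flag such as HW Slowdown really never changes.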

Al Joined: 3 Apr 99 Posts: 1682 Credit: 477,343,364 RAC: 482

|

Sorry that I have no idea as to your answer, but just wanted to say I appreciated your question. Excellent detail!

|

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

"HW Slowdown: Active" implies the following:

HW Slowdown

HW Slowdown (reducing the core clocks by a factor of 2 or more) is engaged.

Given that your Max Power Limit is shown as 38.5 W and you read 32.99 W: if power scaled linearly with clock frequency, then at 1333 MHz (111% of 1200 MHz) power would supposedly come in at 36.6 W. However, I strongly suspect that, all other things being equal, the power would increase supralinearly with clock, since the higher frequency may require a small core voltage bump (managed internally, ramping the power more). Not sure whether the fan or other component power draws are included in the shown figures, which would also ramp up, but it looks as though the reading may be just the core, so they probably keep a safety margin in the boost logic.

[Edit:] the memory related failures you're getting there also appear to indicate insufficient voltage for the speeds. Various safeties appear to be kicking in attempting to salvage the situation, but erroring within CUDA runtime and driver calls.

"Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |
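The arithmetic behind the 36.6 W figure can be written out as a short Python sketch. The measured values come from the posts above; the second estimate is purely an assumption for illustration: it supposes dynamic power scales roughly with frequency times voltage squared and picks a hypothetical 3% voltage bump, which is not a measured value for this card.

```python
# Figures taken from the posts above.
measured_power_w = 32.99    # peak power sample under load
current_clock_mhz = 1202    # clock actually observed under load
target_clock_mhz = 1333     # requested application clock
power_limit_w = 38.50       # maximum power limit reported for this card

# Linear model: power scales in proportion to clock frequency.
clock_ratio = target_clock_mhz / current_clock_mhz
linear_estimate_w = measured_power_w * clock_ratio

# Supralinear model (ASSUMPTION): power ~ f * V^2, with a hypothetical
# 3% core voltage increase needed to hold the higher clock.
assumed_voltage_ratio = 1.03
supralinear_estimate_w = linear_estimate_w * assumed_voltage_ratio ** 2

print(f"clock ratio:          {clock_ratio:.3f}")
print(f"linear estimate:      {linear_estimate_w:.1f} W")
print(f"supralinear estimate: {supralinear_estimate_w:.1f} W")
print(f"exceeds limit:        {supralinear_estimate_w > power_limit_w}")
```

Under the linear model the estimate stays below the 38.5 W cap, but even a small assumed voltage increase is enough to push it over, which is one way to read the suggestion that the boost logic keeps a safety margin.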

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> HW Slowdown: Active, implies the following

The fan reading seems to be frozen at 32%, so if it is a factor, it is a constant.

I did try using "nvidia-smi -i 0 -pl 50" but that results in the message "not a valid power limit which should be between 30.00 W and 38.50 W". I guess that means that if power limits are throttling the clock frequency, I am pretty much dead in the water. Too bad, since the temperature at 33 W is so low and from what I have read the 750 Ti can easily handle a few more watts.

As far as the two "Invalids" and the string of "Error while computing" results that I think you are referencing, they may not be related to the clock frequency lowering the voltage. They all occurred when I was experimenting with other things, such as running three WUs at a time and playing with MPS, which admittedly is a little out of my league. The bottom line is that I was not experimenting with clock frequency when those errors occurred. In addition, nothing I did or tried to do later in the day changed my clock frequency. If I only loaded the driver it ran at 1202 MHz, and after everything I tried with "nvidia-smi" it still ran at 1202 MHz when processing WUs. I have run at 1202 MHz for days and I have never seen any errors or invalids that I can remember until I started experimenting and using newer driver versions.

The jury is probably still out on some pending WUs that I did while experimenting with the clock frequency, but if there are errors I would also consider the fact that I terminated boinc ungracefully many times with the "kill" command, in addition to using a variety of different driver versions while looking for ones that would recognize all the nvidia-smi commands. I am sure some WUs were worked on by several different driver versions before completion. |

|

spitfire_mk_2 Joined: 14 Apr 00 Posts: 563 Credit: 27,306,885 RAC: 0

|

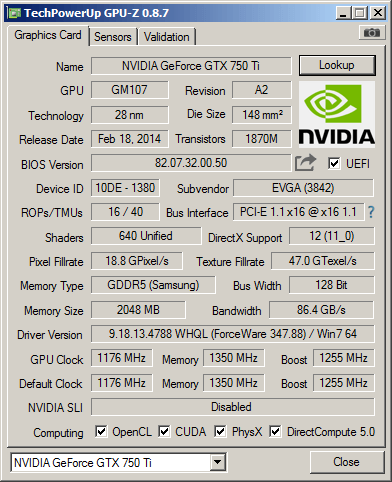

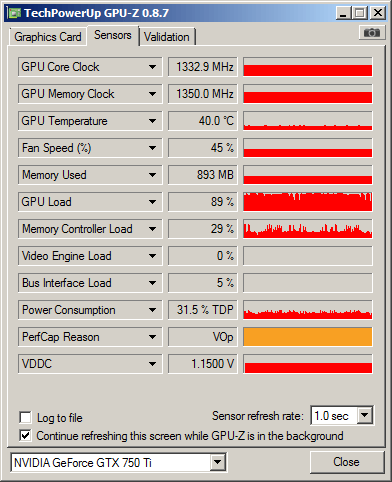

I think 1333 MHz is the boosted speed. I have a GTX 750 Ti; in GPU-Z the card information tab says GPU Clock 1176 MHz, while the sensor for GPU Core Clock says 1332.9 MHz. This is while crunching two GPU WUs, so the card is fully loaded. Also, judging by the command line stuff, you are on Linux? It might be a spotty Nvidia support/driver thing.

|

|

fractal Joined: 5 Mar 16 Posts: 5 Credit: 1,000,547 RAC: 0 |

> I did try using "nvidia-smi -i 0 -pl 50" but that results in the message "not a valid power limit which should be between 30.00 W and 38.50 W". I guess that means that if power limits are throttling the clock frequency, I am pretty much dead in the water. Too bad since the temperatures at 33W is so low and from what I have read the 750 ti can easily handle a few more watts.

I too have read that the 750ti can easily go faster if you can give it more power. Most 750ti cards are held back by the 75 watt limit of the PCIe bus. There are 750ti cards with a PCIe power plug that you can push harder. But I too have had little luck getting a standard 750ti with no additional power plug to go any faster. They appear power limited rather than clock limited.

I am not sure I would buy an overclockable 750ti with a power plug, considering the other options at a similar price point that also require a PCIe power plug. It is a nice card but it does have its limitations. |

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> I think 1333 MHz is the boosted speed.

I would be curious to know what your GPU and ambient temperatures are, to see how the temperature delta compares with mine running two WUs. |

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> I did try using "nvidia-smi -i 0 -pl 50" but that results in the message "not a valid power limit which should be between 30.00 W and 38.50 W". I guess that means that if power limits are throttling the clock frequency, I am pretty much dead in the water. Too bad since the temperatures at 33W is so low and from what I have read the 750 ti can easily handle a few more watts.

Nvidia's specs say 60 W (http://www.geforce.com/hardware/desktop-gpus/geforce-gtx-750-ti/specifications), but I would be happy just to be able to push it to 50 watts. At 30 to 35 watts and 50 C it is just loafing. It seems the maximum of 38.5 watts is way too much of a safety factor. |

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

Yeah, tough to determine whether you're pushing some fundamental limit of the GPU, or other problems are creeping in (or likely a combination). Some cracks are definitely appearing on Linux that most people would have associated with Windows in the past. These relate to boincapi (built in every application) and how it treats threads. I'm unclear why Linux is suddenly moving to modern threading models, but can guess they needed to amp up scalability for GPU virtualisation/unified-memory-models. Actually one thing stands out in recent Linux kernel changes, that Block-Layer devices (especially SSDs) needed to be multithreaded to cope with the NVMe bandwidths & latencies coming about. All that means is that in terms of boinc enabled applications, on recent Linux Kernels you can expect all the weirdness and need for workarounds/fixes that I've been arguing with Boinc Developers since about 2007 (because Windows was ahead in this). i.e. Must treat threads as first class citizens, rather than exterminate them, because there are now layers. [Edit:] if you come across unexpected time exceeded or finished file present too long aborts, you may find setting the no_priority_change cc_config.xml option might help. Won't help potential GPU or driver issues of course, but at least should reduce the Boinc client going berserk when something it set as low priority doesn't get a chance to exit/cleanup. "Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |
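For reference, the `no_priority_change` option mentioned above lives in the `<options>` section of the BOINC client's `cc_config.xml`. The Python sketch below only builds a minimal fragment containing that one option; the `build_cc_config` helper is hypothetical, and an existing `cc_config.xml` would need this option merged in by hand rather than overwritten. Where the file lives depends on the installation (it sits in the BOINC data directory), and the client has to re-read its config files or be restarted before the change takes effect.

```python
import xml.etree.ElementTree as ET


def build_cc_config(no_priority_change=True):
    """Build a minimal cc_config.xml document as a string.

    Hypothetical helper for illustration: it emits only the
    no_priority_change option and nothing else.
    """
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    flag = ET.SubElement(options, "no_priority_change")
    flag.text = "1" if no_priority_change else "0"
    return ET.tostring(root, encoding="unicode")


config_xml = build_cc_config()
print(config_xml)
```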

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> Yeah, tough to determine whether you're pushing some fundamental limit of the GPU, or other problems are creeping in (or likely a combination).

I will keep your last comment in mind if I see that. Thanks. |

HAL9000 Joined: 11 Sep 99 Posts: 6534 Credit: 196,805,888 RAC: 57

|

I tried several of the same commands on my 750ti. Mine was already running 1345.5MHz when running SETI@home, but I figured I would see if I could push it any further using the nvidia-smi commands.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -pm 1

Setting persistence mode is not supported for GPU 0000:08:00.0 on this platform.

Treating as warning and moving on.

All done.

Looks like Windows doesn't get that function.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d CLOCK

==============NVSMI LOG==============

Timestamp : Wed May 11 11:50:38 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Clocks

Graphics : 135 MHz

SM : 135 MHz

Memory : 405 MHz

Video : 405 MHz

Applications Clocks

Graphics : 1189 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1189 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1463 MHz

SM : 1463 MHz

Memory : 2700 MHz

Video : 1317 MHz

SM Clock Samples

Duration : 109.42 sec

Number of Samples : 13

Max : 1189 MHz

Min : 135 MHz

Avg : 1027 MHz

Memory Clock Samples

Duration : 109.42 sec

Number of Samples : 13

Max : 2700 MHz

Min : 405 MHz

Avg : 2296 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

So it says 1463 MHz. Let's go for it!

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -ac 2700,1333

Applications clocks set to "(MEM 2700, SM 1333)" for GPU 0000:08:00.0

All done.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -ac 2700,1345

Applications clocks set to "(MEM 2700, SM 1345)" for GPU 0000:08:00.0

All done.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -ac 2700,1346

Applications clocks set to "(MEM 2700, SM 1346)" for GPU 0000:08:00.0

All done.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -ac 2700,1347

Applications clocks set to "(MEM 2700, SM 1347)" for GPU 0000:08:00.0

All done.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -ac 2700,1348

Specified clock combination "(MEM 2700, SM 1348)" is not supported for GPU 0000:08:00.0. Run 'nvidia-smi -q -d SUPPORTED_CLOCKS' to see list of supported clock combinations

Treating as warning and moving on.

All done.

Looks like the max I am allowed to set is 1347 MHz. All values over that gave me the same warning message. The command nvidia-smi -q -d SUPPORTED_CLOCKS only displayed memory clock values, starting with 2700 and descending.

After setting nvidia-smi -ac 2700,1347:

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d CLOCK

==============NVSMI LOG==============

Timestamp : Wed May 11 11:58:57 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Clocks

Graphics : 135 MHz

SM : 135 MHz

Memory : 405 MHz

Video : 405 MHz

Applications Clocks

Graphics : 1346 MHz

Memory : 2700 MHz

Default Applications Clocks

Graphics : 1189 MHz

Memory : 2700 MHz

Max Clocks

Graphics : 1463 MHz

SM : 1463 MHz

Memory : 2700 MHz

Video : 1317 MHz

SM Clock Samples

Duration : 589.67 sec

Number of Samples : 26

Max : 1346 MHz

Min : 135 MHz

Avg : 522 MHz

Memory Clock Samples

Duration : 589.67 sec

Number of Samples : 26

Max : 2700 MHz

Min : 405 MHz

Avg : 1257 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d POWER

==============NVSMI LOG==============

Timestamp : Wed May 11 11:58:24 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Power Readings

Power Management : Supported

Power Draw : 1.00 W

Power Limit : 52.00 W

Default Power Limit : 52.00 W

Enforced Power Limit : 52.00 W

Min Power Limit : 30.00 W

Max Power Limit : 52.00 W

Power Samples

Duration : 54.60 sec

Number of Samples : 119

Max : 3.38 W

Min : 0.77 W

Avg : 0.97 W

I was unable to set the power limit beyond 52 W and received a message similar to the one you got for the command.

Next, with a task running. I was running just one, as I have run down the queue on the system; I'm planning to try the 750 in my HTPC to see how it interacts with my TV.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d POWER

==============NVSMI LOG==============

Timestamp : Wed May 11 12:09:03 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Power Readings

Power Management : Supported

Power Draw : 19.78 W

Power Limit : 52.00 W

Default Power Limit : 52.00 W

Enforced Power Limit : 52.00 W

Min Power Limit : 30.00 W

Max Power Limit : 52.00 W

Power Samples

Duration : 10.13 sec

Number of Samples : 119

Max : 24.46 W

Min : 18.46 W

Avg : 20.33 W

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d PERFORMANCE

==============NVSMI LOG==============

Timestamp : Wed May 11 12:09:44 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Performance State : P0

Clocks Throttle Reasons

Idle : Not Active

Applications Clocks Setting : Active

SW Power Cap : Not Active

HW Slowdown : Not Active

Sync Boost : Not Active

Unknown : Not Active

Despite setting nvidia-smi -ac 2700,1347 I was still seeing 1345.5 MHz in GPU-Z. GPU-Z also indicates that my GPU performance is being limited by voltage, which it was doing before I had used any nvidia-smi commands.

SETI@home classic workunits: 93,865 CPU time: 863,447 hours

Join the BP6/VP6 User Group (http://tinyurl.com/8y46zvu)

|

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> I tried several of the same commands on my 750ti. Mine was already running 1345.5MHz when running SETI@home, but I figured I would see if I could push it any further using the nvidia-smi commands.

Interesting. Just for grins I might have tried 1463 MHz first rather than working my way up. When I ran "nvidia-smi -q -d SUPPORTED_CLOCKS" it gave a short list of discrete values. Perhaps you cannot set it to any of the in-between values not listed. It would only be an academic exercise, though, if you are limited by low voltage.

I wonder why your max power is 52 W while mine is 38.5 W. I guess it must be a hard limit the card manufacturer puts in their firmware, and the cure is the same as for the undervoltage condition: flashing a modded BIOS. After my recent MB fiasco, I do not think I am going there :). |

petri33 Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

I have not yet tried this: http://www.phoronix.com/scan.php?page=news_item&px=MTg0MDI

Tell us if it works.

EDIT: just did. Do something like this:

root@Linux1:~/sah_v7_opt/Xbranch/client# nvidia-settings -a "[GPU:0]/GPUOverVoltageOffset=16000"

Attribute 'GPUOverVoltageOffset' (Linux1:0[gpu:0]) assigned value 16000.

root@Linux1:~/sah_v7_opt/Xbranch/client# nvidia-settings -a "[GPU:1]/GPUOverVoltageOffset=16000"

Attribute 'GPUOverVoltageOffset' (Linux1:0[gpu:1]) assigned value 16000.

root@Linux1:~/sah_v7_opt/Xbranch/client# nvidia-settings -a "[GPU:2]/GPUOverVoltageOffset=16000"

Attribute 'GPUOverVoltageOffset' (Linux1:0[gpu:2]) assigned value 16000.

root@Linux1:~/sah_v7_opt/Xbranch/client# nvidia-settings -a "[GPU:3]/GPUOverVoltageOffset=16000"

Attribute 'GPUOverVoltageOffset' (Linux1:0[gpu:3]) assigned value 16000.

root@Linux1:~/sah_v7_opt/Xbranch/client#

EDIT2: After setting higher voltages the cards automagically raised their clocks. I'm expecting some errors, but this is definitely something I have to try. :)

EDIT3: nvidia-smi reports a couple more watts consumed by the GPUs.

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones |

jason_gee Joined: 24 Nov 06 Posts: 7489 Credit: 91,093,184 RAC: 0

|

... EDIT2: Hmmm, will have to see what (if any) of the control features my 680 on Ubuntu will allow (once updated a few things). It always did go slightly better with a voltage bump, and likewise boost higher once it had it. "Living by the wisdom of computer science doesn't sound so bad after all. And unlike most advice, it's backed up by proofs." -- Algorithms to live by: The computer science of human decisions. |

HAL9000 Joined: 11 Sep 99 Posts: 6534 Credit: 196,805,888 RAC: 57

|

> I tried several of the same commands on my 750ti. Mine was already running 1345.5MHz when running SETI@home, but I figured I would see if I could push it any further using the nvidia-smi commands.

Looks like I was misinterpreting the values being displayed. I saw Memory & 2700 and figured it was a list of memory clock speeds.

Attached GPUs : 1

GPU 0000:08:00.0

Supported Clocks

Memory : 2700 MHz

Graphics : 1463 MHz

Graphics : 1450 MHz

Graphics : 1437 MHz

Graphics : 1424 MHz

Graphics : 1411 MHz

Graphics : 1398 MHz

Graphics : 1385 MHz

Graphics : 1372 MHz

Graphics : 1359 MHz

Graphics : 1346 MHz

Graphics : 1333 MHz

I'm surprised that 1345 & 1347 MHz were accepted previously. Perhaps +/- 1MHz is allowed?
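The +/- 1 MHz guess can be checked against the results reported in this thread with a short Python sketch. The supported values are the ones listed above (the list may continue below 1333 MHz; only what was posted is used here), and the accept/reject outcomes are the ones from the earlier `-ac` attempts. The helper names and the 1 MHz tolerance are assumptions for illustration and do not describe how the driver actually decides.

```python
# Graphics clocks listed by SUPPORTED_CLOCKS in this post (MHz).
SUPPORTED_GRAPHICS_MHZ = [
    1463, 1450, 1437, 1424, 1411, 1398, 1385, 1372, 1359, 1346, 1333,
]

# Outcomes reported earlier in the thread for "nvidia-smi -ac 2700,<clock>".
OBSERVED = {1333: True, 1345: True, 1346: True, 1347: True, 1348: False}


def nearest_supported(requested_mhz, supported=SUPPORTED_GRAPHICS_MHZ):
    """Return the listed clock closest to the requested one."""
    return min(supported, key=lambda mhz: abs(mhz - requested_mhz))


def would_be_accepted(requested_mhz, tolerance_mhz=1):
    """Hypothesis: a request passes if it is within the tolerance of a listed clock."""
    return abs(nearest_supported(requested_mhz) - requested_mhz) <= tolerance_mhz


for requested, accepted in OBSERVED.items():
    predicted = would_be_accepted(requested)
    print(requested, nearest_supported(requested), predicted, predicted == accepted)
```

All five reported outcomes agree with the hypothesis, and snapping 1347 to the nearest listed value gives 1346 MHz, the figure the Applications Clocks section displayed after that request. Five data points do not prove the rule, of course.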

After setting nvidia-smi -ac 2700,1463 my GPU still only runs at 1345.5 MHz, but the throttle reason displayed changes from Applications Clocks Setting to Unknown.

C:\Program Files\NVIDIA Corporation\NVSMI>nvidia-smi -q -d PERFORMANCE

==============NVSMI LOG==============

Timestamp : Wed May 11 15:46:07 2016

Driver Version : 364.51

Attached GPUs : 1

GPU 0000:08:00.0

Performance State : P0

Clocks Throttle Reasons

Idle : Not Active

Applications Clocks Setting : Not Active

SW Power Cap : Not Active

HW Slowdown : Not Active

Sync Boost : Not Active

Unknown : Active

I imagine if I used a tool like Nvidia Inspector I could overclock the card further, but I don't really feel the need to. The fact that it runs at 1345 MHz instead of the specified 1189 MHz or 1268 MHz already seems like a win to me.

SETI@home classic workunits: 93,865 CPU time: 863,447 hours

Join the BP6/VP6 User Group (http://tinyurl.com/8y46zvu)

|

|

spitfire_mk_2 Joined: 14 Apr 00 Posts: 563 Credit: 27,306,885 RAC: 0

|

> I think 1333 MHz is the boosted speed.

I am not sure how useful it would be. I've got a cheap mid-tower case:

- GTX 750 Ti has a fan
- CPU fan
- I think the PSU might have a fan on the inside of the case
- one case fan in the back

Plus I run fan control utilities; for the GPU I've got MSI Afterburner and I set it to maintain 40-42°C for the card.

Also, I think I owe an apology. I keep forgetting that my card is not just a Ti, it is a Ti SC (superclocked). It is slightly faster, with only one fan and no external power (no 6-pin socket); all power comes from the PCIe slot: http://www.techpowerup.com/gpudb/b2784/evga-gtx-750-ti-superclocked

Some screen shots.

|

|

OTS Joined: 6 Jan 08 Posts: 369 Credit: 20,533,537 RAC: 0

|

> I think 1333 MHz is the boosted speed.

It looks like there are enough variables to make it a comparison of apples to oranges. Thanks for replying. |

petri33 Joined: 6 Jun 02 Posts: 1668 Credit: 623,086,772 RAC: 156

|

> ... EDIT2:

And please do not forget to google "NVIDIA coolbits".

EDIT: And I know you knew about this.

To overcome Heisenbergs: "You can't always get what you want / but if you try sometimes you just might find / you get what you need." -- Rolling Stones |

Brent Norman Joined: 1 Dec 99 Posts: 2786 Credit: 685,657,289 RAC: 835

|

Some things I notice compared to my ASUS 750Ti OC:

I show Bus Interface: PCI-E 2.0x16@x16 2.0 ... yours shows v1.1

Your clock speeds are higher than mine: 1072 1350 1150 versus 1175 1350 1255

But SETI always reports me running at 1228 |

ace_quaker Joined: 12 Oct 01 Posts: 17 Credit: 33,678,474 RAC: 1

|

Make sure any requested GPU clock offsets are set in 7 MHz increments; as far as I know this is an architectural limitation (memory clock offsets are unaffected by this). EVGA PrecisionX on Windows does this automatically, but if you want to sit in your command line bunker, be sure to take this into account. |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.