Identical Processors, Different Speeds...What The Hey?


| Author | Message |

|---|---|

|

Jimmy Gondek Joined: 1 Oct 06 Posts: 20 Credit: 715,874 RAC: 0 |

Hi All, After a several-year hiatus, I've returned to BOINC crunching and, well, the world has clearly changed from how it was back in my single-processor days! Case in point, I've been reviewing some of my results and have been noticing some amazingly disparate crunching times on some of the WUs. Now, I've been getting up to speed on the new GPU-crunching abilities that some folks are getting to enjoy, but I've been wondering whether that is all there is to this phenomenon. To use one of my WUs as an example...fellow cruncher BWX and I are both using the same CPU, Intel(R) Core(TM) i7-2600 CPU @ 3.40GHz [x86 Family 6 Model 42 Stepping 7], and we're both using a 64-bit OS: my OS X 10.6.8 (Darwin 10.8.0) vs. their Microsoft Windows 7 Professional x64 Edition, Service Pack 1, (06.01.7601.00). For GPUs, BWX is using an NVIDIA GeForce GTX 560 (993MB) driver: 28026, which I'm guessing they were able to use to help them along, vs. my unused (and unrecognized) AMD Radeon HD 6970M (2GB). Workunit 831482235: http://setiathome.berkeley.edu/workunit.php?wuid=831482235 Besides the disparate CPU times, their 104.49 seconds vs. my 15,861.08 seconds, what I noticed was that while BWX's Measured floating point speed of 3304.93 million ops/sec was similar to my 3768.57 million ops/sec, there was nearly a 2x difference between their Measured integer speed of 12329.97 million ops/sec and my 6538.69 million ops/sec. So, here are my questions...how much of the difference between our results is accounted for by the GPU usage? How much of the difference is accounted for by the OS? And how much of the difference is due to the BOINC and/or SETI software versions? Any insight here will be greatly appreciated! Best to all, Jimmy G |

arkayn Joined: 14 May 99 Posts: 4438 Credit: 55,006,323 RAC: 0

|

Hi All, The difference is all in the GPU, as the GTX 560 did all of the computing on that particular work unit. He is also using optimized applications that help speed up the work being done. They are still working on ATI detection in the Mac client; CUDA has already been finished and is working with a couple of other projects. For a slight boost, you can install the Optimized apps for Mac. You would have to run down your work first, as I have not updated it to the 6.05 app version since I am waiting for v7 to be released.

|

|

Jimmy Gondek Joined: 1 Oct 06 Posts: 20 Credit: 715,874 RAC: 0 |

Hi arkayn, Thanks for the quick reply and the insights here...it is appreciated! I had been suspecting that the GPU usage and custom WUs for those cards were the main culprits here, but I figured that there must have been some other contributory factors for this processing speed jump. So now my confusion still remains... Since we're basically talking about different types of processors doing, well, uh, math...how is it that the NVIDIA GeForce GTX 560 with only 1GB VRAM running at only 1002MHz can outperform the i7-2600 CPU @ 3.40GHz with either 8 or 16GB of DDR3 RAM? To put my question about CPU vs. GPU in more specific terms... We've all been led to believe over the years (as consumers) that we "need" the latest CPUs running at faster speeds with plenty of RAM to eke the best performance out of our computers. But, based on what you're telling me here, and these amazing results disparities, it seems to me that all this >CPU + >RAM = >performance, er, advice is just a bunch of bunk. So, 1GHz GPU + 1GB VRAM > 3.4GHz CPU + 16GB RAM? If so, the next time I upgrade machines I'll be sure to buy the middle model and invest in a killer graphics card, instead! Again, any insights here would be appreciated! :) Thanks, again, JG |

skildude Joined: 4 Oct 00 Posts: 9541 Credit: 50,759,529 RAC: 60

|

Parallel processing units, or CUDA cores. The work is broken down and split for use on the GPU, which can process the smaller chunks much faster than a CPU. Some nVidia GPUs like the GeForce GTX 590 have 1024 parallel processing units, which make for very fast processing. Of note, the nVidia GPUs do very well on the short and average workunits but have trouble with VLAR WUs. The OpenCL Lunatics app for newer nVidia cards is supposed to be a cure for that problem.  In a rich man's house there is no place to spit but his face. Diogenes Of Sinope |

|

Jimmy Gondek Joined: 1 Oct 06 Posts: 20 Credit: 715,874 RAC: 0 |

Hi skildude, Thanks for the insights on parallel processing...that certainly helps explain for me (at least in simpler terms) what might be going on here, but I'm still thinking there is a bit more to it on the processor and software side of this equation. Case in point, SETI runs in 32-bit mode and has been giving me a consistent speed of 12.26 GFLOPS/sec per WU while running 8 WUs simultaneously. Now, comparing, er, apples to oranges, MilkyWay runs in 64-bit mode for me and delivers (surprisingly to me) only 3.66 GFLOPS/sec per WU when processing 8 simultaneous "0.82" WUs but delivers an impressive 9451.69 GFLOPS/sec per WU when doing a single 8 CPU "N-Body Simulation 0.60 (mt)" WU! What that translates to in real life is this...my i7 2600K @ 3.4GHz can spit out a 2,321,721 GFLOP 8-core 64-bit MilkyWay WU in about 4 minutes. So, if a typical ~160-170,000 GFLOP SETI WU is taking me ~16,000 seconds and a CUDA GPU is taking only 104 seconds on the same WU, then that would work out to the CUDA ≈ 1634 GFLOPS/sec?...that is, if I'm doing the math right... :) Now, I don't know exactly how many GFLOPS/sec BWX's GPU is delivering, but it seems to me that if these numbers are correct (e.g. 9451.69 vs. 1634 GFLOPS) then there is an awful lot of unused processing potential to be found with the i7 2600K if 64-bit software were being employed for SETI. Or am I seeing this wrong? :) JG |
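A quick sketch of the arithmetic in the post above, for anyone who wants to check it. It only reproduces the division using the figures quoted in the post (the upper end of the ~160-170,000 GFLOP estimate, ~16,000 seconds on the CPU, 104 seconds reported for the CUDA host); the variable and function names are made up for illustration, and whether 104 seconds reflects the actual processing time is a separate question.

```python
# Figures quoted in the post; all are approximate estimates, not measurements.
wu_gflop_low = 160_000          # lower estimate of work in one SETI WU (GFLOP)
wu_gflop_high = 170_000         # upper estimate of work in one SETI WU (GFLOP)
cpu_seconds = 16_000            # approximate time on the i7-2600
gpu_reported_seconds = 104      # time reported for the CUDA host


def implied_rate(work_gflop, seconds):
    """Average rate in GFLOP per second for a given amount of work."""
    return work_gflop / seconds


cpu_rate = implied_rate(wu_gflop_high, cpu_seconds)
gpu_rate_low = implied_rate(wu_gflop_low, gpu_reported_seconds)
gpu_rate_high = implied_rate(wu_gflop_high, gpu_reported_seconds)

print(f"CPU: about {cpu_rate:.1f} GFLOP/s")
print(f"GPU (if 104 s were the full time): {gpu_rate_low:.0f} to {gpu_rate_high:.0f} GFLOP/s")
```

The ≈1634 figure in the post corresponds to the 170,000 GFLOP end of the range; the implied rate is only as good as the time it is divided by.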

skildude Joined: 4 Oct 00 Posts: 9541 Credit: 50,759,529 RAC: 60

|

The difference is in the math being processed. To explain simply, MilkyWay is to 1+1 as SETI is to Advanced Calculus. It's difficult to compare them in any other way. Yes, the throughput is different, but so are the numbers being crunched. We really are comparing apples and oranges when we compare SETI to other projects.  In a rich man's house there is no place to spit but his face. Diogenes Of Sinope |

|

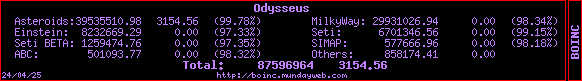

Odysseus Joined: 26 Jul 99 Posts: 1808 Credit: 6,701,347 RAC: 6

|

"[…] a CUDA GPU is taking only 104 seconds on the same WU […]" This is not the actual processing time, which GPUs don’t report AFAICT; rather, it’s the time the CPU spent ‘babysitting’ the GPU, handling memory I/O and whatnot. |

skildude Joined: 4 Oct 00 Posts: 9541 Credit: 50,759,529 RAC: 60

|

You will see RUN TIME and CPU TIME on each WU. The run time for GPU WUs is the actual GPU time plus the load time from the CPU.  In a rich man's house there is no place to spit but his face. Diogenes Of Sinope |
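The gap between run time and CPU time can be illustrated with a small, hedged sketch. This is only an analogy, not how BOINC or the SETI apps measure anything: `time.sleep` stands in for the CPU waiting while some other device does the work, and the helper names are made up for illustration.

```python
import time


def timed(fn):
    """Return (run_time, cpu_time) in seconds for one call to fn."""
    wall_start = time.perf_counter()   # elapsed wall-clock time
    cpu_start = time.process_time()    # CPU time used by this process
    fn()
    run_time = time.perf_counter() - wall_start
    cpu_time = time.process_time() - cpu_start
    return run_time, cpu_time


def mostly_waiting():
    # Hypothetical stand-in for a task where the CPU mainly waits on another device.
    time.sleep(0.2)


run_time, cpu_time = timed(mostly_waiting)
print(f"run time: {run_time:.3f} s, CPU time: {cpu_time:.3f} s")
```

A task that mostly waits shows a run time well above its CPU time, which is why the CPU time on a GPU result says little about how long the WU actually took.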

|

Jimmy Gondek Joined: 1 Oct 06 Posts: 20 Credit: 715,874 RAC: 0 |

"the difference is in the math being processed. To explain simply, Milkyway is to 1+1 as seti is to Advanced Calculus." Understood. "It's difficult to compare them in any other way. Yes the throughput is different but so are the numbers being crunched. We really are comparing apples and oranges when we compare seti to other projects." I acknowledge that I have been taking a rather simplistic approach to my unstated line of reasoning here, but I've been trying to get a mental handle on some of the building-block aspects of some much broader issues. (Well, at least as I see them.) Thanks so much for the insights! :) JG |

|

Jimmy Gondek Joined: 1 Oct 06 Posts: 20 Credit: 715,874 RAC: 0 |

"you will see RUN TIME and CPU TIME on each WU. the run time for GPU WU's is the actual GPU Time plus the load time from the CPU." Good stuff to know...thanks! :) |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.