Panic Mode On (54) Server problems?


| Author | Message |

|---|---|

|

Cosmic_Ocean Joined: 23 Dec 00 Posts: 3027 Credit: 13,516,867 RAC: 13

|

Cricket shows uploads are on the rise. They may be hit and miss though as the server gets hammered with requests. A few hours ago, I had six across my two boxes to upload, and I just hammered the retry button and they finally went through after about an hour. One of the MBs on the Sempron machine had an elapsed upload time of almost 90 minutes (it was trying on its own most of the day and then I hammered it). Nothing downloaded/assigned since. That may be a good thing with the "in progress" limit though. The super-crunchers with their 5000+ caches may gripe about it, but it should put a limit on runaway requests. Back when DCF went server-side, my Sempron machine downloaded close to 8 weeks' worth of APs before I set NNT. Main cruncher hit the limit of 1 GB for WUs, which ended up being about 20 days, otherwise it would have kept going as well. That's an example of runaway requests. Linux laptop: record uptime: 1511d 20h 19m (ended due to the power brick giving up) |

Sunny129 Joined: 7 Nov 00 Posts: 190 Credit: 3,163,755 RAC: 0

|

Just another confirmation that uploads are working now, even though you have to babysit BOINC to make it happen. I was just able to upload 33 AP tasks over the span of ~10 minutes... of course I had to click the update button quite a few times. Still not getting new AP tasks, just like most everyone else...

|

Sutaru Tsureku Joined: 6 Apr 07 Posts: 7105 Credit: 147,663,825 RAC: 5

|

9/19/2011 10:58:22 PM SETI@home Sending scheduler request: To fetch work.

I saw this already for 72 days (Message 1126289). It looks like 2,559 WUs/GPU. I see it every now and then. With almost nothing but shorties, it is reached very quickly. - Best regards! - Sutaru Tsureku, team seti.international founder. - Optimize your PC for higher RAC. - SETI@home needs your help. -

|

Eric Martin Joined: 11 Jun 99 Posts: 4 Credit: 3,374,061 RAC: 0

|

We all know about the Tuesday 3 day outages. But what's with this being down on the weekends and other "up times"? I've been getting "Server is temporarily down" on both my crunching boxes all day. Tuesday is coming real soon and I won't be able to get or upload anything for 3 days. This seems like it happens almost EVERY week. What gives? It seems like I only get a good 3 days of crunching in per week with all the connectivity issues and WU shortages. I'd say at least 50% of the time that the servers are "supposed" to be up, I get that they are down...MOST INFURIATING...especially since I just upgraded to a new 1090T and GTX550 for the cause! C'mon! |

Sutaru Tsureku Joined: 6 Apr 07 Posts: 7105 Credit: 147,663,825 RAC: 5

|

The message on the first page is no longer up to date. For months now, S@h has again had the ~4-hour maintenance every Tuesday (~16:00 - 20:00 UTC). - Best regards! - Sutaru Tsureku, team seti.international founder. - Optimize your PC for higher RAC. - SETI@home needs your help. -

|

|

Wembley Joined: 16 Sep 09 Posts: 429 Credit: 1,844,293 RAC: 0

|

Now it's the scheduling server that is down. Can't connect to server to report or request new work.

2011-09-20 08:01:31 AM | SETI@home | [sched_op] Starting scheduler request
2011-09-20 08:01:31 AM | SETI@home | [work_fetch] request: CPU (0.00 sec, 0.00 inst) NVIDIA GPU (102211.78 sec, 0.00 inst)
2011-09-20 08:01:31 AM | SETI@home | Sending scheduler request: Requested by user.
2011-09-20 08:01:31 AM | SETI@home | Reporting 1 completed tasks, requesting new tasks for NVIDIA GPU
2011-09-20 08:01:31 AM | SETI@home | [sched_op] CPU work request: 0.00 seconds; 0.00 CPUs
2011-09-20 08:01:31 AM | SETI@home | [sched_op] NVIDIA GPU work request: 102211.78 seconds; 0.00 GPUs
2011-09-20 08:01:53 AM | SETI@home | Scheduler request failed: Couldn't connect to server
2011-09-20 08:01:53 AM | SETI@home | [sched_op] Deferring communication for 31 min 7 sec
2011-09-20 08:01:53 AM | SETI@home | [sched_op] Reason: Scheduler request failed
2011-09-20 08:01:53 AM | | [work_fetch] Request work fetch: RPC complete
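For anyone who wants to track how long the client backs off after a failed request, the deferral can be pulled out of log lines like the one in this post. This is only a rough Python sketch: the function name `deferral_seconds` and the regular expression are illustrative and not part of BOINC, and the optional hours field is an assumption, since the sample only shows minutes and seconds.

```python
import re

# Matches text such as "Deferring communication for 31 min 7 sec".
# The optional "hr" part is an assumption; the sample log only shows min/sec.
_DEFER = re.compile(
    r"Deferring communication for\s+"
    r"(?:(?P<hr>\d+)\s*hr\s+)?"
    r"(?:(?P<min>\d+)\s*min\s+)?"
    r"(?P<sec>\d+)\s*sec"
)

def deferral_seconds(line):
    """Return the deferral length in seconds, or None if the line has none."""
    m = _DEFER.search(line)
    if m is None:
        return None
    hours = int(m.group("hr") or 0)
    minutes = int(m.group("min") or 0)
    seconds = int(m.group("sec"))
    return hours * 3600 + minutes * 60 + seconds

sample = ("2011-09-20 08:01:53 AM | SETI@home | "
          "[sched_op] Deferring communication for 31 min 7 sec")
print(deferral_seconds(sample))  # 1867
```

Lines without a deferral message simply return `None`, so the function can be run over a whole log.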

|

|

MikeN Joined: 24 Jan 11 Posts: 319 Credit: 64,719,409 RAC: 85

|

Keep trying. The server is working: I have just reported tasks successfully, but you may have to try a few times before you get through. It is probably just swamped with people reporting thousands of shorties after the recent upload problems. |

|

S@NL - John van Gorsel Joined: 5 Jul 99 Posts: 193 Credit: 139,673,078 RAC: 0

|

[quote]Now it's the scheduling server that is down. Can't connect to server to report or request new work.[/quote]

The scheduling server is hard to reach but it is not down. Two of my Linux PCs were able to report tasks in the last 30 minutes. Seti@Netherlands website |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

|

[quote]Now it's the scheduling server that is down. Can't connect to server to report or request new work.[/quote]

It was down for a while, but it's back up now - round about 30 minutes ago, judging by the spike in the cricket graph. New work issued, and - despite the congestion - swimming up the pipe to reach its target. |

James Sotherden Joined: 16 May 99 Posts: 10436 Credit: 110,373,059 RAC: 54

|

My i7 is out of work; it says it can't connect to the server. The other two machines have work. Edit: Just got some work. Yeehaw! Old James |

W-K 666 Joined: 18 May 99 Posts: 19060 Credit: 40,757,560 RAC: 67

|

This "This computer has reached a limit on tasks in progress" message is not very helpful, even if it is there to protect the computer from d/loading too many tasks when the fix has been put in place. After I received it I checked the actual crunching time I have left, and on the GPU it is less than 7 hrs. It's now 14:00 UTC, so it should run out right in the middle of maintenance. Will it go away on the next request? |

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

|

[quote]This "This computer has reached a limit on tasks in progress" is not very helpful, even if it is to protect the computer from d/loading too many tasks when the fix has been put in place.[/quote]

I'm trying to find where the limits are. Just got another 16 tasks, to bring my test probe machine up to 128 tasks in progress (for a single GPU, no CPU on this project), and estimated cache 8 hours 50 minutes. When they were regularly using cache management this time last year, it was expressed as 'number per compute resource'. So, it should go away when you've completed and returned some of the ones you've got already. |

W-K 666 Joined: 18 May 99 Posts: 19060 Credit: 40,757,560 RAC: 67

|

[quote]This "This computer has reached a limit on tasks in progress" is not very helpful, even if it is to protect the computer from d/loading too many tasks when the fix has been put in place.[/quote]

Yup, just noticed a request has gone through and I got three GPU tasks. All shorties, but on this low-end GPU that's about 20 mins, not the 8 hrs given in the completion time column. |

|

MikeN Joined: 24 Jan 11 Posts: 319 Credit: 64,719,409 RAC: 85

|

My dual-core, CPU-only laptop just got a 'This computer has reached a limit on tasks in progress' message from the server and it has only 50 tasks in its stash. It has been getting WUs 1-2 at a time all day, roughly at the same rate it has processed them, so it looks like 25 WUs per CPU is the limit. I am not complaining: some of the 50 WUs it does have are APs and will take 24 hours each to crunch, so I am not exactly in danger of running out of work. |
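The inference in this post is simple arithmetic, and can be written out as a small Python sketch. It assumes the cap is a fixed count per compute resource, as Richard Haselgrove describes for last year's cache management. The figure of 25 per CPU is MikeN's estimate from his own laptop, not a confirmed server setting, and the function names are hypothetical.

```python
def in_progress_limit(per_resource_limit, n_resources):
    """Total tasks a host may hold if the cap is a fixed count per compute resource."""
    return per_resource_limit * n_resources

def at_limit(tasks_in_progress, per_resource_limit, n_resources):
    """True when the host already holds as many tasks as the assumed cap allows."""
    return tasks_in_progress >= in_progress_limit(per_resource_limit, n_resources)

# MikeN's laptop: 2 CPU cores, 50 tasks on hand when the limit message appeared.
ASSUMED_CPU_LIMIT = 25  # inferred in the post, not a confirmed server setting

print(in_progress_limit(ASSUMED_CPU_LIMIT, 2))  # 50
print(at_limit(50, ASSUMED_CPU_LIMIT, 2))       # True
print(at_limit(49, ASSUMED_CPU_LIMIT, 2))       # False
```

Under that assumption, the message should clear as soon as one task is reported, which matches the laptop receiving one or two WUs at a time.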

Richard Haselgrove Joined: 4 Jul 99 Posts: 14650 Credit: 200,643,578 RAC: 874

|

[quote]This "This computer has reached a limit on tasks in progress" is not very helpful, even if it is to protect the computer from d/loading too many tasks when the fix has been put in place.[/quote]

Your computer must be quota limited on CPU tasks then - do the figures nmn17 has just posted seem about right? In theory, we could ask the project to relax the quota on CPU - it's only GPU hosts (most specifically, GPU-only hosts) which need protecting from excessive work fetch. But I'm reluctant to do that - some people will merely see it as an enticement to use rescheduling, without thinking about what sort of a mess they're digging themselves into. |

|

MikeN Joined: 24 Jan 11 Posts: 319 Credit: 64,719,409 RAC: 85

|

[quote]In theory, we could ask the project to relax the quota on CPU - it's only GPU hosts (most specifically, GPU-only hosts) which need protecting from excessive work fetch.[/quote]

The problems are not related just to GPU-only hosts. I run a CPU-only host and a CPU+GPU host, both of which have been badly affected by downloading shorties on Saturday with ridiculously long time estimates. Those immediately ran in HP mode, and BOINC then reduced its time estimates for all my other WUs, so I got an avalanche of shorties for both CPU and GPU. They will take me until Friday to clear, all running in HP mode, and will leave the time estimates for APs and VLARs on my CPUs horribly messed up. |

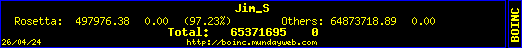

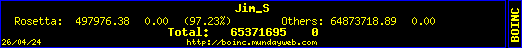

Jim_S Joined: 23 Feb 00 Posts: 4705 Credit: 64,560,357 RAC: 31

|

[quote]Ok, clock is ticking. If I don't get any AP work in 10-15 years, I'll quit this project.[/quote]

Me Too! I Desire Peace and Justice, Jim Scott (Mod-Ret.) |

zoom3+1=4 Joined: 30 Nov 03 Posts: 65745 Credit: 55,293,173 RAC: 49

|

[quote]Ok, clock is ticking. If I don't get any AP work in 10-15 years, I'll quit this project.[/quote]

Motion seconded, I'm out too, actually I was out around 4am this morning, still can't download a thing...

5093 SETI@home 9/20/2011 2:44:17 PM update requested by user
5094 SETI@home 9/20/2011 2:44:20 PM Sending scheduler request: Requested by user.
5095 SETI@home 9/20/2011 2:44:20 PM Requesting new tasks for GPU
5096 9/20/2011 2:44:42 PM Project communication failed: attempting access to reference site
5097 SETI@home 9/20/2011 2:44:42 PM Scheduler request failed: Couldn't connect to server
5098 9/20/2011 2:44:44 PM Internet access OK - project servers may be temporarily down.

The T1 Trust, PRR T1 Class 4-4-4-4 #5550, 1 of America's First HST's

|

|

.clair. Joined: 4 Nov 04 Posts: 1300 Credit: 55,390,408 RAC: 69

|

[quote]Ok, clock is ticking. If I don't get any AP work in 10-15 years, I'll quit this project.[/quote]

[dream] Ho hum, not to worry, cos by then I will have my quantum nano particle powered GPU online; it can do an AP in ten seconds and fifty of them at a time [/dream] Now that thing will sure stiff the servers . . . |

Jim_S Joined: 23 Feb 00 Posts: 4705 Credit: 64,560,357 RAC: 31

|

S@H needs a few of these:

Sun, IBM push multicore boundaries
Rick Merritt, 8/26/2009 10:56 AM EDT

More kudos for Power7

PALO ALTO, Calif. - Sun Microsystems claimed a new watermark for server CPUs, unveiling Rainbow Falls, a 16-core, 128-thread processor at the Hot Chips conference Tuesday (August 25). But analysts gave the IBM Power7 kudos as the more compelling achievement in the latest round of high-end server processors.

Power7 packs as many as 32 cores supporting 128 threads on a four-chip module with links to handle up to 32 sockets in a system. "It is scaling well beyond anything we've ever really seen before," said Peter Glaskowsky, a technology analyst for Envisioneering Group (Seaford, NY).

Glaskowsky was quick to point out the two chips target very different markets. Sun aims at Web servers that cost tens of thousands of dollars while IBM targets "monstrously large databases where you need a single memory image," he said.

Indeed, IBM revealed it will use Power7 as a building block for the high-end supercomputer it is building under contract to the Defense Advanced Research Projects Agency. Cray is competing with IBM in a final phase of that project.

Both the IBM and Sun designs max out by supporting up to 128 threads in a single socket. However, IBM's Power7 has built in significantly more memory and bandwidth than Sun's Rainbow Falls to support IBM's big database customers. Power7 includes 32 Mbytes in embedded DRAM in L3 cache alone. The chip also sports 590 Gbytes/second total chip bandwidth including two four-channel memory controllers per die. IBM's Power7 packs eight cores and 32 Mbytes eDRAM on a die.

By contrast, Sun's design, focused on raw thread throughput for Web servers, does not even use a level three cache. However, Sun is expected to adopt L3 cache in future chips. IBM can configure its L3 cache in chunks that include private, local caches, shared caches and duplicated caches placed close to cores expected to need them.

In terms of on-chip interconnects, Power7 uses a hybrid ring and crossbar approach, although IBM did not detail the interconnect. Rainbow Falls uses a hierarchy of two crossbars with two cores sharing a link to the first-level crossbar. "They are really force feeding their cores through those crossbars," said James Kahle, who led the IBM Cell processor design before heading up the Power7 effort.

IBM will make versions of Power7 with four, six and eight cores. They will fit into a similar power consumption envelope as the Power6, believed to range from 100W to 190W.

http://www.eetimes.com/electronics-news/4084449/Sun-IBM-push-multicore-boundaries

I Desire Peace and Justice, Jim Scott (Mod-Ret.) |

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.