Some news from Berkeley

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

For those of you who do not venture out of the Cafe, I posted this in Number Crunching:

I just got some rather interesting news:

1. Matt and Jeff are indeed fully aware of the issue out here. While they have been diligently trying to eliminate the connection errors, some of us have been keeping them updated.
2. The technical news is accurate. The download servers are downloading, even if some of you are still having issues. Once Berkeley clears up the backlog, those of us who like to help can accurately assist you. Right now there are way too many other issues that need to be sorted out first.
3. The upload servers are uploading. Look at the number of WUs you have and multiply that by 300-400K, and you will see why it appears they aren't. Check Your Computer and look at when your WUs were sent (received by SETI): you will see that some have today's date on them. It will take some time to clear this backlog.
4. The HTTP 500 errors are NOT being caused by the SETI Apache servers after all. It seems that BOINC itself is erroneously reporting a 500 error when a connection fails. More accurate error codes are being looked into and should be available in a future release. For those of you who need to be hit with a brick (<grin>), this explains why 500 errors are also occurring with Einstein and Rosetta.

I now return y'all to your regularly scheduled bitching. :)
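Point 3 is plain multiplication. A minimal sketch of it, using the 300-400K figure from the post as the number of hosts; the 4 WUs per host and the 100 uploads per second are purely hypothetical example values, not measured Berkeley numbers:

```python
def pending_uploads(wus_per_host: int, hosts: int) -> int:
    """Results waiting to upload if every host holds the same number of WUs."""
    return wus_per_host * hosts


def hours_to_drain(backlog: int, uploads_per_second: float) -> float:
    """Hours to clear a backlog at a steady rate, ignoring new results arriving."""
    return backlog / uploads_per_second / 3600.0


if __name__ == "__main__":
    for hosts in (300_000, 400_000):
        backlog = pending_uploads(4, hosts)
        # 4 WUs per host and 100 uploads/s are hypothetical example values.
        print(f"{hosts:,} hosts x 4 WUs = {backlog:,} results, "
              f"about {hours_to_drain(backlog, 100):.1f} h to drain at 100/s")
```

Even a handful of WUs per host turns into over a million uploads across the whole project, which is why individual results can sit in the queue for hours.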

AC · Joined: 22 Jan 05 · Posts: 3413 · Credit: 119,579 · RAC: 0

Usually when there's upload/download issues like this I just shut BOINC down and wait for the all clear news on the main page.

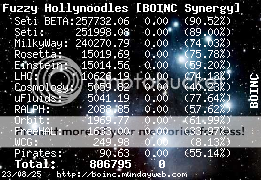

Fuzzy Hollynoodles · Joined: 3 Apr 99 · Posts: 9659 · Credit: 251,998 · RAC: 0

> Usually when there's upload/download issues like this I just shut BOINC down and wait for the all clear news on the main page.

Or you could set SETI to No new work and crunch some other projects in the meantime.

EDIT: BTW, hi Alex! :-)

"I'm trying to maintain a shred of dignity in this world." - Me

AC · Joined: 22 Jan 05 · Posts: 3413 · Credit: 119,579 · RAC: 0

> Usually when there's upload/download issues like this I just shut BOINC down and wait for the all clear news on the main page.

Hi Fuzzy. Yeah, I'll have to check whether my EINSTEIN account is still active. I registered there but quit when I saw how much time their WUs took to process, and how SETI WUs took longer because of the resource share. 8-)

Prognatus · Joined: 6 Jul 99 · Posts: 1600 · Credit: 391,546 · RAC: 0

The problem with the dropped connections, as I see it, is that the server tries to serve everybody at the same time with equal priority. It's like waiting on a telephone line for someone to pick up, and when they finally pick up the receiver they only say "Hi, but excuse me, I'll get back to you in a minute"... and you never hear from them again because they're too busy saying the same thing to everyone else. In the meantime you give up and hang up.

IMO, we need a better system of client/server communication here. The server should serve each client until it's finished, using a strict queue-ticket system, like when you take a queue ticket at the pharmacy or bank and then wait in line. The client, OTOH, should then wait and not nag anymore until its turn comes. In the meantime, the client should display its number in the queue.

Just my 2 cents.
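The "take a number" idea can be sketched as an application-level queue sitting above TCP. This is a hypothetical illustration only: the class and method names are made up, and it is not how the BOINC upload handler works.

```python
from collections import deque
from itertools import count
from typing import Deque, Optional, Tuple


class TicketQueue:
    """Hypothetical FIFO 'take a number' queue for upload requests."""

    def __init__(self) -> None:
        self._numbers = count(1)                       # ticket numbers 1, 2, 3, ...
        self._waiting: Deque[Tuple[int, str]] = deque()  # (ticket, client_id), oldest first

    def take_ticket(self, client_id: str) -> int:
        """Give a client the next ticket number and put it at the back of the line."""
        ticket = next(self._numbers)
        self._waiting.append((ticket, client_id))
        return ticket

    def position(self, ticket: int) -> Optional[int]:
        """1-based place in line, or None if the ticket is no longer waiting."""
        for place, (t, _) in enumerate(self._waiting, start=1):
            if t == ticket:
                return place
        return None

    def serve_next(self) -> Optional[str]:
        """Pop the oldest ticket; the server would then take all of that client's WUs."""
        if not self._waiting:
            return None
        _, client_id = self._waiting.popleft()
        return client_id
```

A client could poll `position()` to display its number in the queue, as suggested above; whether the extra polling traffic costs more than it saves is exactly what the rest of the thread argues about.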

Fuzzy Hollynoodles · Joined: 3 Apr 99 · Posts: 9659 · Credit: 251,998 · RAC: 0

I've joined Rosetta, which is a really cool project! Maybe you should check it out? http://boinc.bakerlab.org/rosetta/ And the people over there are really nice.

"I'm trying to maintain a shred of dignity in this world." - Me

AC · Joined: 22 Jan 05 · Posts: 3413 · Credit: 119,579 · RAC: 0

Thanks for telling me about them. I'm sure that their work is important over there, but my heart is more towards the stars.

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

OK, when you rewrite the TCP/IP standard, let us know :) The standard says first in, first served. However, there are only so many TCP sockets available to be serviced. That number is < 64,000. If you multiply the number of WUs you have waiting to upload by the number of crunchers, you will see that number gets exhausted rather quickly. Furthermore, the listen queue is a number far less than 64,000. Somebody will have to time out. That can be extended by load balancing, but that requires extra hardware. Remember, SETI@HOME is a NON-PROFIT. Hardware costs money.
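The "somebody will have to time out" point can be illustrated with a toy simulation: connection attempts land in a fixed-size listen queue, the server accepts a fixed number per tick, and anything arriving while the queue is full is refused. The backlog size and accept rate are made-up parameters; real operating systems implement the listen backlog in their own ways and cap what a server may request (see `SOMAXCONN`).

```python
from typing import Iterable, Tuple


def simulate_listen_queue(arrivals: Iterable[int], backlog: int,
                          accepts_per_tick: int) -> Tuple[int, int, int]:
    """Toy model of a listen queue (not a model of any particular kernel).

    arrivals: number of new connection attempts in each tick.
    Returns (served, refused, still_waiting).
    """
    waiting = served = refused = 0
    for new in arrivals:
        # New attempts are queued only while there is room in the backlog.
        admitted = min(new, backlog - waiting)
        waiting += admitted
        refused += new - admitted  # these clients see a failed or timed-out connect
        # The server accepts a limited number of queued connections per tick.
        accepted = min(accepts_per_tick, waiting)
        waiting -= accepted
        served += accepted
    return served, refused, waiting
```

For example, `simulate_listen_queue([10, 0, 0], backlog=4, accepts_per_tick=2)` serves 4 and refuses 6: the server would have had time for all 10 over three ticks, but 6 arrived while the queue was full and had to go away and retry.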

Prognatus · Joined: 6 Jul 99 · Posts: 1600 · Credit: 391,546 · RAC: 0

> OK when you re-write the TCP/IP standard let us know :) The standard says first in first served.. However, there are just so many TCP sockets that are available to be serviced. That number is < 64,000.

I understand that, but surely it must be possible to program a client/server system to rise above the limitations of TCP? For instance, let's say for the sake of argument that you issue one queue ticket to each client; you'll then have up to 64,000 clients in the queue at the same time. That's not half bad for each server. Add to this the benefit of knowing that when you're served, you are sure to empty your stack of WUs. Plus, if you also know how you're doing in the waiting line, you'll probably have more patience than if you're left out in the dark with no status information. So an additional effect would be less frustration and fewer questions like "I can't upload! What's wrong??!!???? Help!!!" Information is everything. :)

Sure, some will time out and will then need to try again, but then they'll know that it was because there was a huge queue of about 63,999 people ahead of them in line...

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

> > OK when you re-write the TCP/IP standard let us know :) The standard says first in first served.. However, there are just so many TCP sockets that are available to be serviced. That number is < 64,000.
>
> I understand that, but surely it must be possible to program a C/S system to rise above the limitations of the TCP? For instance, let's say for the sake of the argument that you issue one queue ticket to each client, you'll then have up to 64,000 clients in the queue at the same time. That's not half bad for each server. Add to this the benefit of knowing that when you're served, you are sure to empty your stack of WUs, plus if you also know how you're doing in the waiting line, you'll probably have more patience than if you don't know anything.

What will happen when you run out of tickets to issue?? What happens to the ticket holder who has gone offline? How long do you wait before you serve the next ticket holder? What happens if you jump to the next ticket holder and the older ticket comes back? Ohhhh wait, that is how TCP/IP works now, never mind!!!

Prognatus, no matter what you do, you will still get 6,000 people starting new threads to tell you they can't upload!!!
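The questions above (offline holders, how long to wait, a stale ticket coming back) are decisions any ticket queue has to make. A hypothetical sketch of one possible answer: call each ticket in order, give the holder a grace period, and drop it if it does not respond; a holder that returns later must take a new ticket at the back of the line. The function name, the `responds_within` callback and the grace period are all assumptions for illustration.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def call_tickets(waiting: Deque[Tuple[int, str]],
                 responds_within: Callable[[str, float], bool],
                 grace_seconds: float) -> Tuple[List[str], List[str]]:
    """Serve tickets oldest first; skip holders that don't answer within the grace period.

    `responds_within(client, seconds)` is a hypothetical check standing in for
    "did this client come back when its number was called?".
    Returns (served_clients, dropped_clients). Dropped clients must re-queue.
    """
    served: List[str] = []
    dropped: List[str] = []
    while waiting:
        _ticket, client = waiting.popleft()
        if responds_within(client, grace_seconds):
            served.append(client)
        else:
            dropped.append(client)  # it will have to take a new ticket later
    return served, dropped
```

Dropping the silent holder after a timeout and making it retry later is, as CW says, essentially what TCP timeouts plus client retries already do.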

Prognatus · Joined: 6 Jul 99 · Posts: 1600 · Credit: 391,546 · RAC: 0

Oh sure, there are bound to be problems even in the future, no matter how we change things, but the main object is to make the system best fitted to its purpose, right? And all of us are genuinely interested in working to achieve that objective, right? At least we should support the idea of adjusting things that don't work very well. I've said twice already in this thread that I believe serving clients PER WU is the wrong approach. Logic says that a PER CLIENT approach would reduce the load.

And please stop your patronizing tone, CW. You've always used that towards me, and it doesn't work. I react to reason, not know-it-all sentences with three exclamation marks.

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

> Oh sure, there are bound to be problems even in the future - no matter how we change things - but the main object is to make the system best fitted to its purpose, right? And all of us are genuinely interested in working to achieve that objective, right? At least we should support the idea of adjusting things that don't work very well.

Present issues aside, it is serving per client now. When a WU is finished, it gets uploaded by the client. TCP/IP makes connections that are client based. It will leave the connection open until the CLIENT closes it or the keep_alive time and close_wait_interval have expired. All the server does is LISTEN for the client to request a connection. Regardless, if there are 60,000 clients waiting to upload and only 50,000 connections available, 10,000 will have to be told to go away and try again. This is why the CLIENT says retry in X minutes.

The only possible change I can see is that, when a number of WUs are waiting to upload, the single connection be left open until all of them are uploaded. Even then someone will get turned away and be told to wait. The way it is now, they at least might not have to wait days to get a single WU to upload.

BTW, that was not patronizing, that was sarcastic. Subtle difference I know, but a difference nonetheless.
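The capacity argument and the "retry in X minutes" behaviour can be sketched together. The first function is just the thread's arithmetic: with the 60,000 clients and 50,000 sockets used in this thread, 10,000 are turned away. The second is a generic randomized exponential backoff, assumed here for illustration; the base delay and cap are hypothetical values, not BOINC's actual settings.

```python
import random
from typing import Optional


def turned_away(waiting_clients: int, free_slots: int) -> int:
    """Clients that cannot get a connection this round and must retry later."""
    return max(0, waiting_clients - free_slots)


def retry_delay(attempt: int, base: float = 60.0, cap: float = 4 * 3600.0,
                rng: Optional[random.Random] = None) -> float:
    """Seconds to wait before retry number `attempt` (1-based).

    The window doubles with each failed attempt, up to `cap`. A random point in
    the upper half of the window is picked so that thousands of refused clients
    do not all come back at the same moment.
    """
    r = rng if rng is not None else random
    exponent = min(attempt - 1, 30)  # keep 2**exponent small enough for float math
    window = min(cap, base * 2 ** exponent)
    return r.uniform(window / 2, window)
```

The randomness matters as much as the doubling: if every refused client retried after exactly the same delay, the 10,000 would simply collide again on the next attempt.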

Prognatus · Joined: 6 Jul 99 · Posts: 1600 · Credit: 391,546 · RAC: 0

> The only possible change I can see that could be made is if there are a number of WUs waiting to upload is that the single connection be left open till all the WUs are uploaded.

There have been many other ideas. If you search the board with the search string "zip wu's", you'll find some threads discussing what I think is a very good idea!

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

> The only possible change I can see that could be made is if there are a number of WUs waiting to upload is that the single connection be left open till all the WUs are uploaded.

I have read them all. Given that the results will compress by 70-80%, that may allow more transfers per minute, but at the same time they will have to be unzipped at the other end. So your upload will be faster, but the backend will be busy unzipping files. It still will not change the fact that 60,000 connections have to be made to 50,000 sockets. There will still be retrans, and there will still be people complaining about it. It will not matter if the retrans succeed at a greater rate. It is, however, a good suggestion.
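A minimal sketch of the "zip the results" idea using Python's standard `zlib` module. The function names are hypothetical, and the sample payload is synthetic, repetitive text, so the ratio it prints says nothing about real SETI@home result files (the 70-80% above is CW's estimate). What it does show is the trade-off being discussed: fewer bytes on the wire, plus a decompression step somewhere on the server side.

```python
import zlib


def pack_result(raw: bytes, level: int = 6) -> bytes:
    """Client side: compress a result file before upload."""
    return zlib.compress(raw, level)


def unpack_result(packed: bytes) -> bytes:
    """Server side (for example a later validation stage): restore the original bytes."""
    return zlib.decompress(packed)


def space_saved(raw: bytes, packed: bytes) -> float:
    """Fraction of bytes saved by compression (0.75 means 75% smaller)."""
    return 1.0 - len(packed) / len(raw)


if __name__ == "__main__":
    # Synthetic stand-in for a text result file; not real SETI@home output.
    sample = b"".join(b"sample,%d\n" % (i % 50) for i in range(2000))
    packed = pack_result(sample)
    assert unpack_result(packed) == sample  # lossless round trip
    print(f"{len(sample)} -> {len(packed)} bytes "
          f"({space_saved(sample, packed):.0%} saved)")
```

Where `unpack_result` runs is the design question raised in the following posts: doing it in the upload handler adds CPU work to the busiest component, while deferring it to a later stage keeps the upload path a plain copy of bytes.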

tekwyzrd · Joined: 21 Nov 01 · Posts: 767 · Credit: 30,009 · RAC: 0

Would the congestion be reduced if multiple operations were performed in one session? Connect once to upload, report, and download if work is needed, instead of connecting to upload, connecting again to report work units, and yet again to download a unit.

Nothing travels faster than the speed of light with the possible exception of bad news, which obeys its own special laws. Douglas Adams (1952 - 2001)
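The question can be put in numbers. This sketch follows the question's own framing: one connection per uploaded WU, one to report, and one to download, versus a single combined session. These per-operation counts are an assumption for illustration, not a description of the actual BOINC protocol.

```python
from typing import Iterable, Tuple


def connections_separate(wus_to_upload: int, needs_work: bool) -> int:
    """One connection per uploaded WU, one to report them, one to fetch new work."""
    report = 1 if wus_to_upload > 0 else 0
    download = 1 if needs_work else 0
    return wus_to_upload + report + download


def connections_combined(wus_to_upload: int, needs_work: bool) -> int:
    """Upload, report and download all done in a single session."""
    return 1 if (wus_to_upload > 0 or needs_work) else 0


def fleet_total(hosts: Iterable[Tuple[int, bool]], combined: bool) -> int:
    """Sum connection counts over (wus_to_upload, needs_work) pairs, one per host."""
    per_host = connections_combined if combined else connections_separate
    return sum(per_host(w, n) for w, n in hosts)
```

For a host with 5 results and an empty cache that is 7 connections versus 1. Each combined session holds its connection longer, though, so the saving is in connection setups and listen-queue slots rather than in total transfer time.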

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

> Would the congestion be reduced if multiple operations were performed in one session? Connect once to upload, report, and download if work needed instead of connecting to upload, connecting again to report work units, and yet again to download a unit.

Depends. The optimized clients might actually slow down the non-optimized clients. But after thinking about this more, Prognatus is right: zipping up the results before sending will free up resources faster. The validator could unzip them.

mikey · Joined: 17 Dec 99 · Posts: 4215 · Credit: 3,474,603 · RAC: 0

> It still will not change the fact that 60,000 connections have to be made to 50,000 sockets. There will still be retrans and there will still be people complaining about it. It will not matter if the retrans succeed at a greater rate..

Is this a per-server number? If so, would more machines help in this area? For instance, if we could get 10 more servers to Berkeley, would that open 500,000 more sockets? Or are the two not related? If they are not related, how do we get you more sockets?

And on the zipping idea, you could SEND units out zipped but RECEIVE them unzipped in the beginning, to see if that would help. At least one direction of the connection would be faster. This would also make our machines do the unzipping work instead of yours. Then, if this isn't helpful enough, you could add returning units in zip format at a later time.

Celtic Wolf · Joined: 3 Apr 99 · Posts: 3278 · Credit: 595,676 · RAC: 0

> It still will not change the fact that 60,000 connections have to be made to 50,000 sockets. There will still be retrans and there will still be people complaining about it. It will not matter if the retrans succeed at a greater rate..

Actually, there are 65,536 TCP and 65,536 UDP ports per IP address. The first 1024 are reserved, and quite a few above 1024 are assigned to specific products, including BOINC. I suggested load balancing across several servers and even offered to give them two of my E-220Rs on permanent loan. I am still waiting for an answer. It's still a matter of spending the money to get the hardware or having it donated to them. Besides the servers, it will require a load balancer too.

It would not help if the upload routines had to unzip the files when they were received. That process needs to strictly read in a packet and write out the data.
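At its simplest, a load balancer hands each incoming connection to one of several back-end servers; it can be a dedicated appliance or software running on an ordinary machine, and real ones also track server health and current load. A minimal round-robin sketch, where the server names and the per-server slot count are hypothetical (50,000 is simply the figure used in this thread):

```python
from itertools import cycle
from typing import Dict, Iterable, Optional


class RoundRobinBalancer:
    """Hand each new connection to the next upload server that has a free slot."""

    def __init__(self, servers: Iterable[str], slots_per_server: int) -> None:
        self._free: Dict[str, int] = {s: slots_per_server for s in servers}
        self._order = cycle(list(self._free))  # endless a, b, c, a, b, c, ...

    def free_slots(self) -> int:
        """Total connections the whole pool can still accept."""
        return sum(self._free.values())

    def assign(self) -> Optional[str]:
        """Pick a server for the next connection, or None if every server is full."""
        for _ in range(len(self._free)):
            server = next(self._order)
            if self._free[server] > 0:
                self._free[server] -= 1
                return server
        return None  # whole pool is full: the client has to retry later

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self._free[server] += 1
```

With ten hypothetical servers at 50,000 slots each, `free_slots()` starts at 500,000, which is the arithmetic behind the "10 more servers" question; when every server is full, `assign()` still has to turn clients away.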

Prognatus · Joined: 6 Jul 99 · Posts: 1600 · Credit: 391,546 · RAC: 0

Just in from the front page:

> We will be extending the deadline for returning results so that the troubles with the result upload handler will not result in lost credit.

mikey · Joined: 17 Dec 99 · Posts: 4215 · Credit: 3,474,603 · RAC: 0

So you need more IP addresses? What is a "load balancer"? Is it a machine that does it through programming, or a special piece of equipment?

©2024 University of California

SETI@home and Astropulse are funded by grants from the National Science Foundation, NASA, and donations from SETI@home volunteers. AstroPulse is funded in part by the NSF through grant AST-0307956.